The Third Generation of Production ML Architectures

Where are production ML architectures going?

Production machine learning architectures are ML systems that are deployed in production environments. These systems are often trained on billions of examples and serve millions of inferences per second. Because of this mammoth scale, they almost always require distributed training and inference, and they need careful design to support the scale they run at. As an industry, we have therefore tried to create reusable systems that meet these sometimes difficult requirements.

As technology has advanced, production ML architectures have evolved. One way to see this is in terms of generations: the first generation involved “fixed function” pipelines, while the second generation added programmability to specific existing stages within the pipeline.

What will the third generation of production ML architectures be like? This post tries to answer that question using history as a guide, by looking at the evolution of GPU programming architectures. We’ll then talk about a system called Ray that seems to follow in the same footsteps.

GPU Programming History

Let’s quickly look at the history of GPU programming, the technology that the deep learning revolution is built on. It might give us insight into what the future holds for production machine learning architectures.

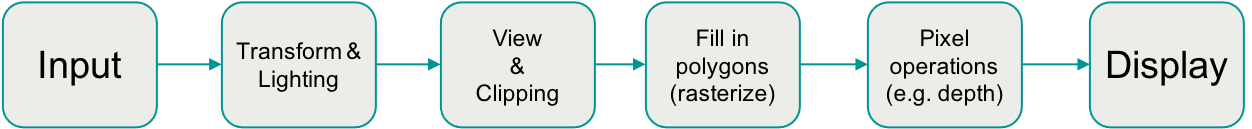

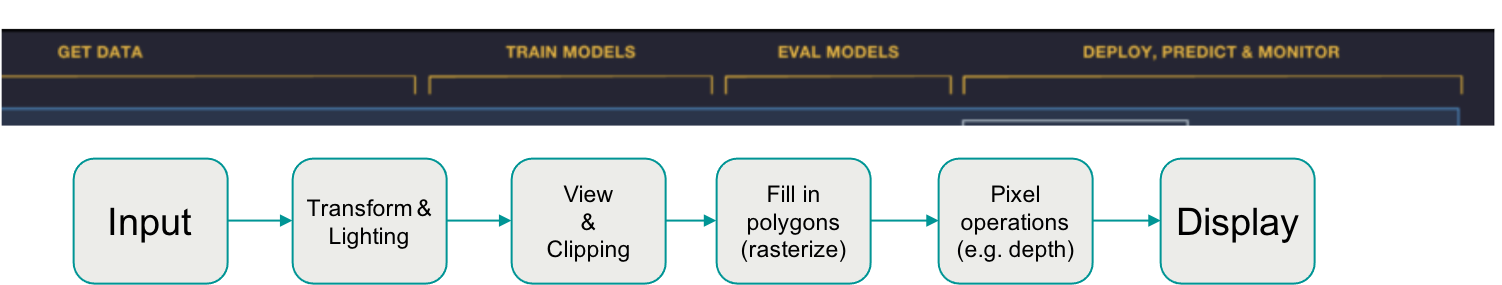

1st Generation of GPU programming architectures (OpenGL 1.0, Direct3D)

In the mid-to-late 1990s, we saw the first generation of GPU programming architectures. The input would be things like textures, polygons, and meshes; it would go through the pipeline, and the output would be an image shown to the user. The revolutionary aspect of this fixed function pipeline was that it was all hardware accelerated. For the first time, consumers had hardware-accelerated graphics, and this enabled the 3D gaming revolution. While this brought many new opportunities, it was also limited in many ways.

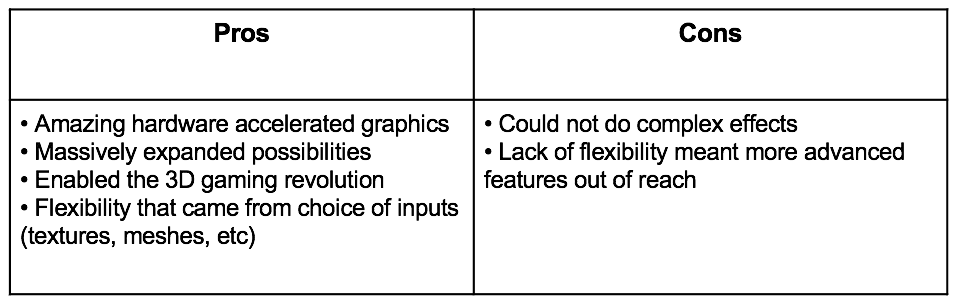

Pros and cons of the 1st GPU programming architecture

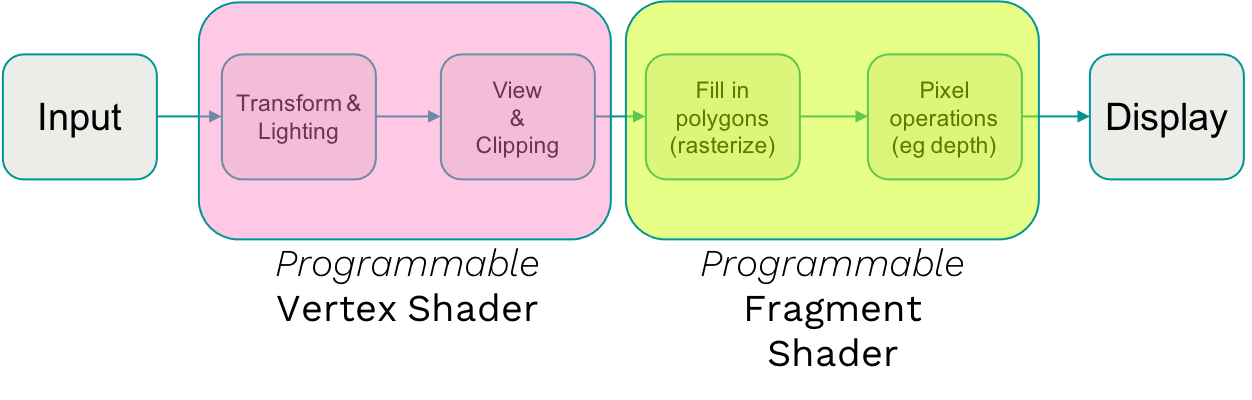

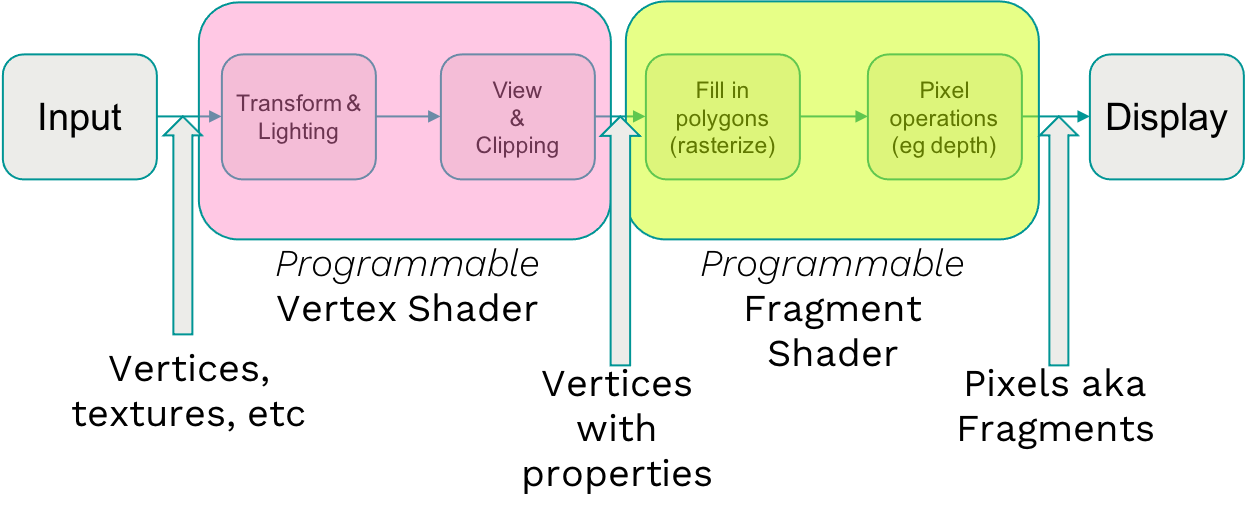

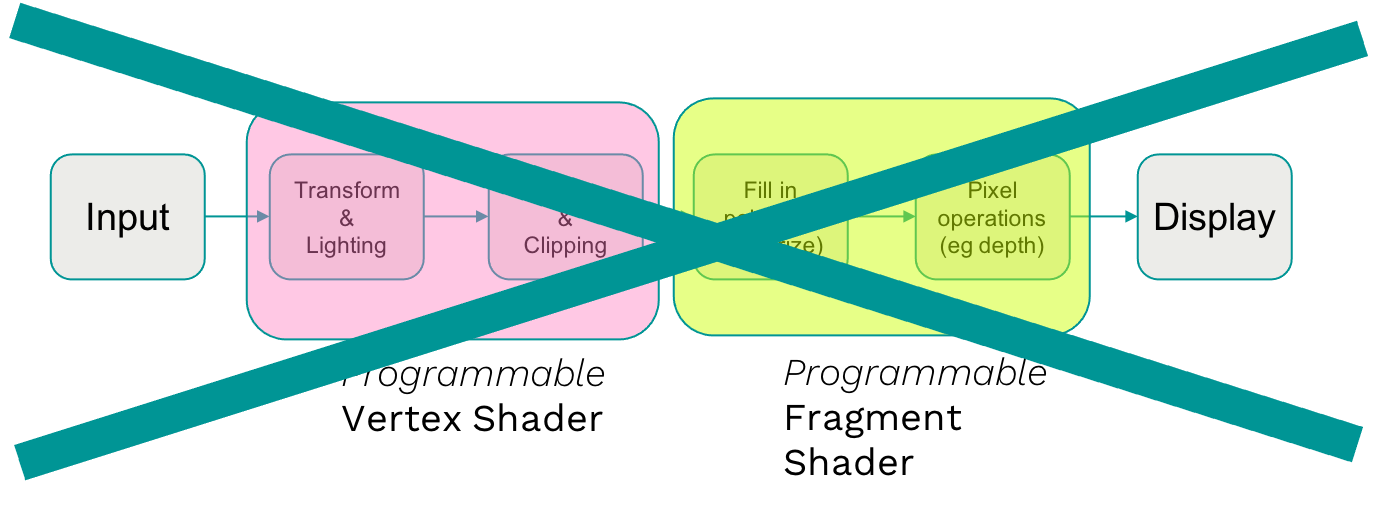

The 2nd generation kept the same pipeline but broke it up into programmable sections. This allowed for more flexibility: the vertex and fragment shaders are small programs that run on the GPU hardware.

2nd Generation of GPU programming architectures (OpenGL 2.0, Direct3D 10)

This had some programmability, but it still required conforming to the existing pipeline.

The required formats for the different sections were pretty rigid.

This gave developers a great deal more flexibility and allowed for amazing effects, but there were still limits. In particular, the inputs and outputs of each stage were well defined and could not easily be changed.

The second generation was Turing complete, so it was possible to create anything; however, possible and easy are not the same thing. For example, someone working on a physics problem (or a machine learning problem) had to work hard to recast it as a combination of vertex and fragment shaders, and needed a deep understanding of how the underlying hardware was designed.

The 3rd generation of GPU programming architectures (CUDA, OpenCL) is a lot more flexible because there is less of a focus on pipelines and more of a focus on full programmability.

The third generation brought complete programmability, combined with performance, to GPU programming architectures. It could do everything the previous generations could do, and it is all about full programmability with a focus on libraries. Indeed, the first and second generations effectively became nothing more than libraries that can be reused at will (or ignored completely if you want to).

Originally, games were written directly against Direct3D or OpenGL (first and second generation systems). With the arrival of the third generation, however, people started to use engines like Unity or Unreal Engine 4 for their programmability and flexibility, and the GPU became more of an implementation detail. This allowed people with no knowledge of pipelines and shaders to use the GPU like a normal code library. It opened up the power of GPUs to a huge number of users and led to an explosion of applications. Modern deep learning infrastructure, for example, is built on this: CUDA enabled libraries such as cuDNN, which in turn underpin frameworks like Caffe, Torch, and PyTorch.

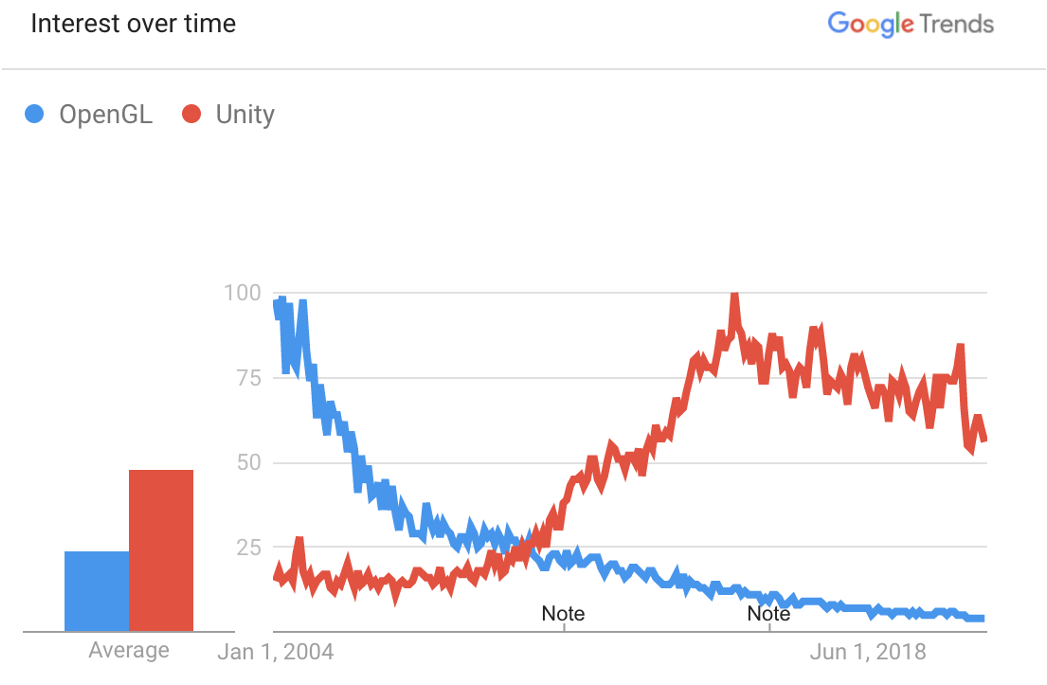

Unity (3rd gen) has become a lot more popular than OpenGL (2nd gen). With Unity, people are worrying less about the architecture and focusing more on the characteristics and the libraries.

Comparing GPU and production ML architectures

What does any of this have to do with production ML architectures? There may be a similar evolutionary pattern at play.

To understand the similarities between GPU and ML architectures, let’s start by looking at the evolution of Uber’s production ML architecture, Michelangelo. This example should also apply quite generally to other production ML architectures.

Fixed pipeline production ML architecture (image from 2017 Uber blog post)

The diagram above shows a 1st generation fixed pipeline production ML architecture from a 2017 Uber blog post. This pipeline has data preparation, data in feature stores (get data), batch training jobs (train models), a repository for the models (eval models), and both online and offline inference (deploy, predict, and monitor). If you compare the 1st generations of GPU and ML architectures, they may look surprisingly similar.

The 1st generation of ML and GPU architectures both seem to have fixed function pipelines.

A 2019 blog post from Uber discussed the problems with the 1st generation architecture.

“Michelangelo was initially launched with a monolithic architecture that managed tightly-coupled workflows … made adding support for new Spark transformers difficult and precluded serving of models trained outside of Michelangelo.”

Essentially, the monolithic architecture limited what they could do and made it difficult to serve models trained outside of Michelangelo. This motivated the second generation, which standardized interfaces and split the system into separate components.

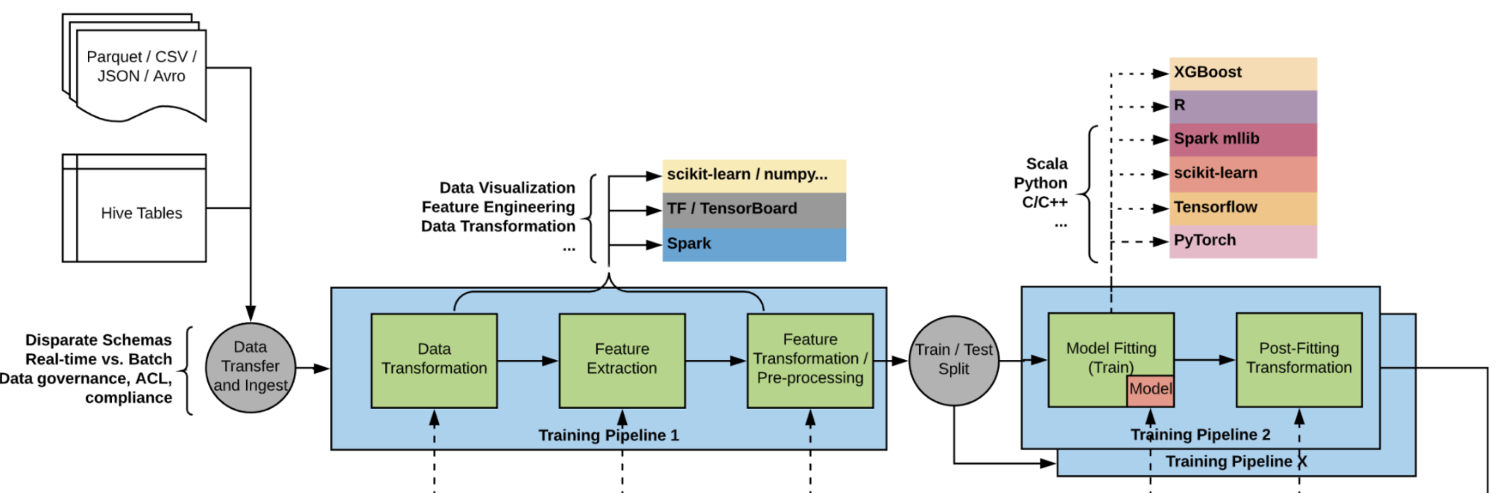

2nd generation Uber Michelangelo architecture (image from 2019 Uber blog post)

The 2nd generation Uber Michelangelo architecture made it easier to swap between the standard tools we like to code in (scikit-learn, TensorFlow, Spark). This is similar to the 2nd generation of GPU architectures, which have programmable sections within the pipeline.

Ray as a 3rd Generation Production ML Architecture

If we are mostly at the second generation right now, what would the third generation look like? Using the history of GPU programming architectures as a guide, we suggest that it would need the following qualities:

It needs to be able to do everything the 1st and 2nd generations can do (they’re just libraries)

The interface for programming the system is just a normal programming language, which does not force developers to think about the problem in terms of the underlying architecture

The focus shifts to libraries: just as people started talking about Unity and stopped talking about OpenGL itself, people would stop talking about particular ML architectures and simply use libraries.

With a focus on libraries, the compute engine could just be a detail. This would open the power of ML to a huge number of users and potentially lead to an explosion of applications. That brings us to Ray.

What is Ray?

Ray can be considered one of these third generation systems. Ray is:

A simple and flexible framework for distributed computation

A cloud-provider independent compute launcher/autoscaler

An ecosystem of distributed computation libraries built on top of the core framework

An easy way to “glue” together distributed libraries in code that looks like normal Python while still being performant.

What makes Ray simple is that you add lightweight annotations (e.g., the @ray.remote decorator in Python) to make functions and classes distributable. What makes Ray flexible is that it is not tied to a batch model. Ray offers stateless tasks and stateful actors (following the actor model), and both can create new tasks and actors cheaply, so functionality can be created dynamically at runtime.

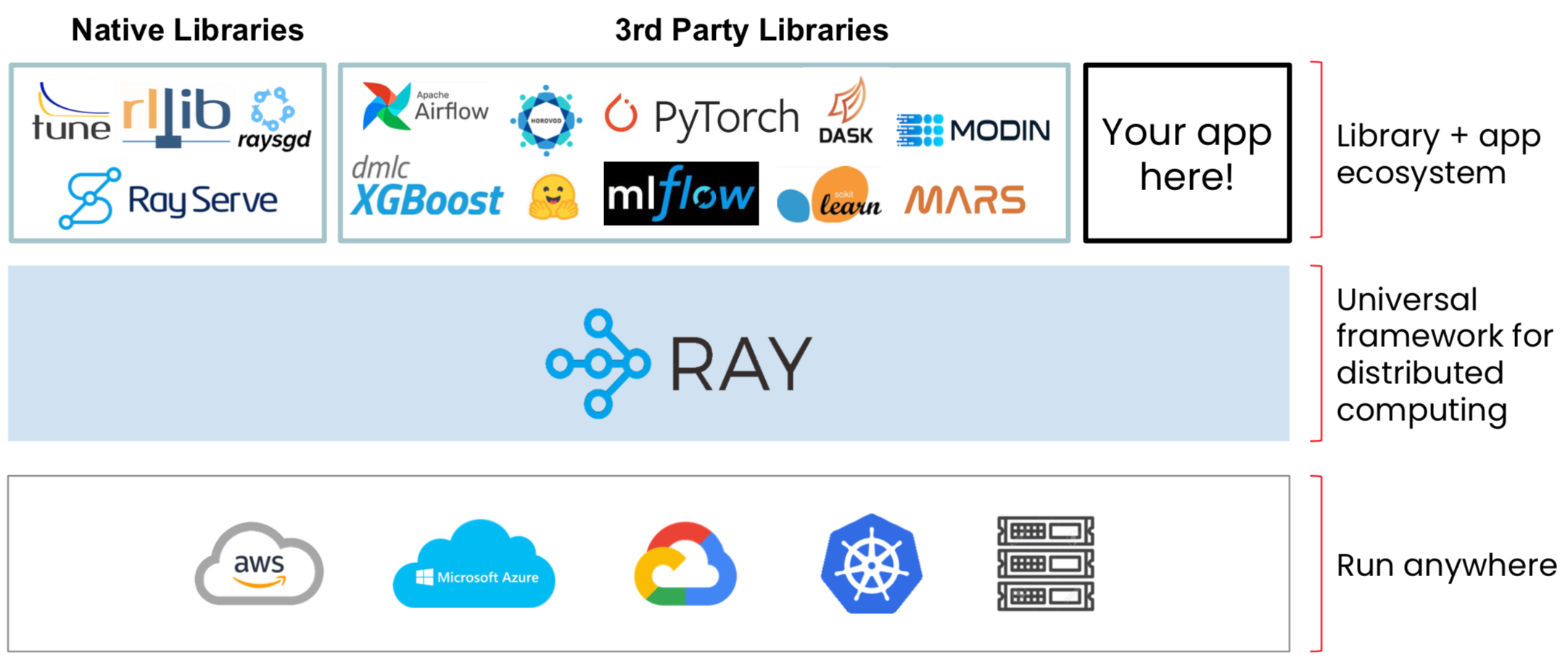

The image above shows the Ray ecosystem.

Ray ecosystem.

At the bottom layer is your favorite compute service provider (AWS, Azure, GCP, K8s, private cluster). In the middle layer is Ray, which acts as an interface that lets machine learning libraries run on your compute service provider. The top layer contains scalable libraries for machine learning, model serving, data processing, and more. Some are native Ray libraries, designed from the beginning to run on top of Ray. There is also a healthy ecosystem of 3rd party libraries like PyTorch, scikit-learn, XGBoost, LightGBM, and more, with varying levels of Ray integration.

Finally, you can glue these distributed libraries together in a way that feels like a single, normal Python script, while underneath Ray handles all the distributed computation.

How does Ray “fit” with the historical pattern?

Based on the history of GPU architectures, we believe a key goal of 3rd generation production ML architectures is that they are more programmable. Additionally, by moving the focus to libraries, you don’t have to worry about the details of how the distributed computation is happening. You can instead focus on the algorithm. This is similar to the 3rd generation of GPU architectures in which people started to use engines like Unity or Unreal Engine 4 due to their programmability and flexibility with the GPU becoming more of a detail.

How are customers using Ray?

Let’s now go over how customers are using Ray, to see whether it embodies the characteristics of a 3rd generation production ML architecture. Some ways Ray is being used include:

A simpler way to build 1st/2nd gen pipelines

A tool to parallelize high performance ML systems

A way to build ML applications that make ML accessible to non-specialists

A way to undertake ML projects that don’t fit the standard ML pipeline

A simpler way to build 1st/2nd gen pipelines

Ray provides a simpler way to build 1st/2nd gen pipelines because it:

Lets you implement existing systems more efficiently (pipelines are defined in a programming language)

Makes it easier to share components (e.g., the same feature transformation during training and in real-time serving)

Provides “out of the box” support for distributed ML

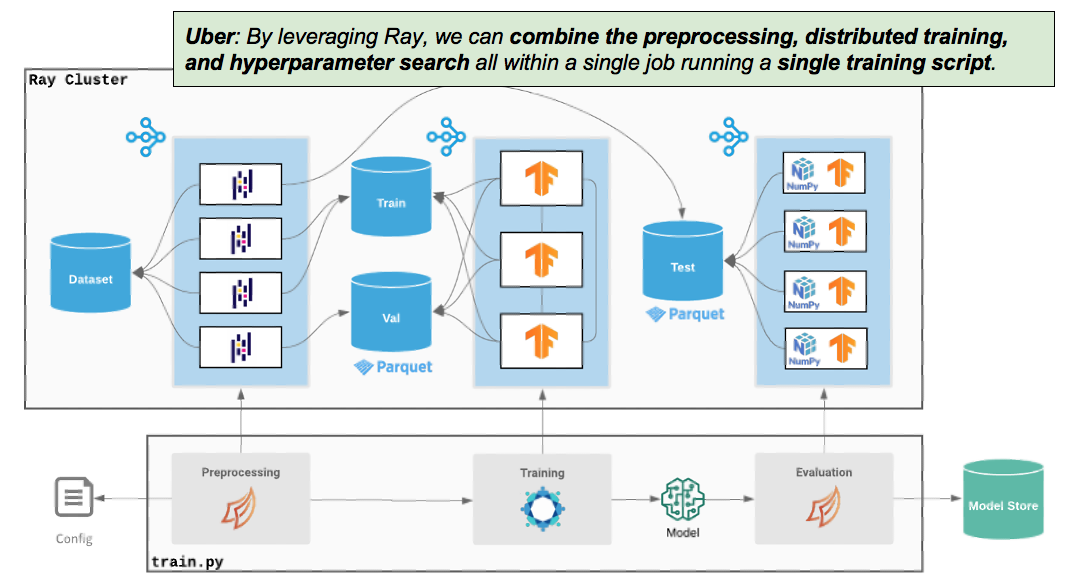

In a recent blog post, Uber described how it used Ray to sketch a 3rd generation Michelangelo architecture, which it implemented in Ludwig 0.4.

Building 3rd generation Uber Michelangelo architecture (image from 2021 Uber blog post), implemented in Ludwig

They said that leveraging Ray allowed them to treat the entire training pipeline as a single script, which made it far easier and more intuitive to work with. Even more interesting, they stressed the importance of the machine learning ecosystem of libraries. They also stressed the value of a standardized way for people to write machine learning libraries, both within Uber and across the industry.

A Tool for High Performance ML Systems

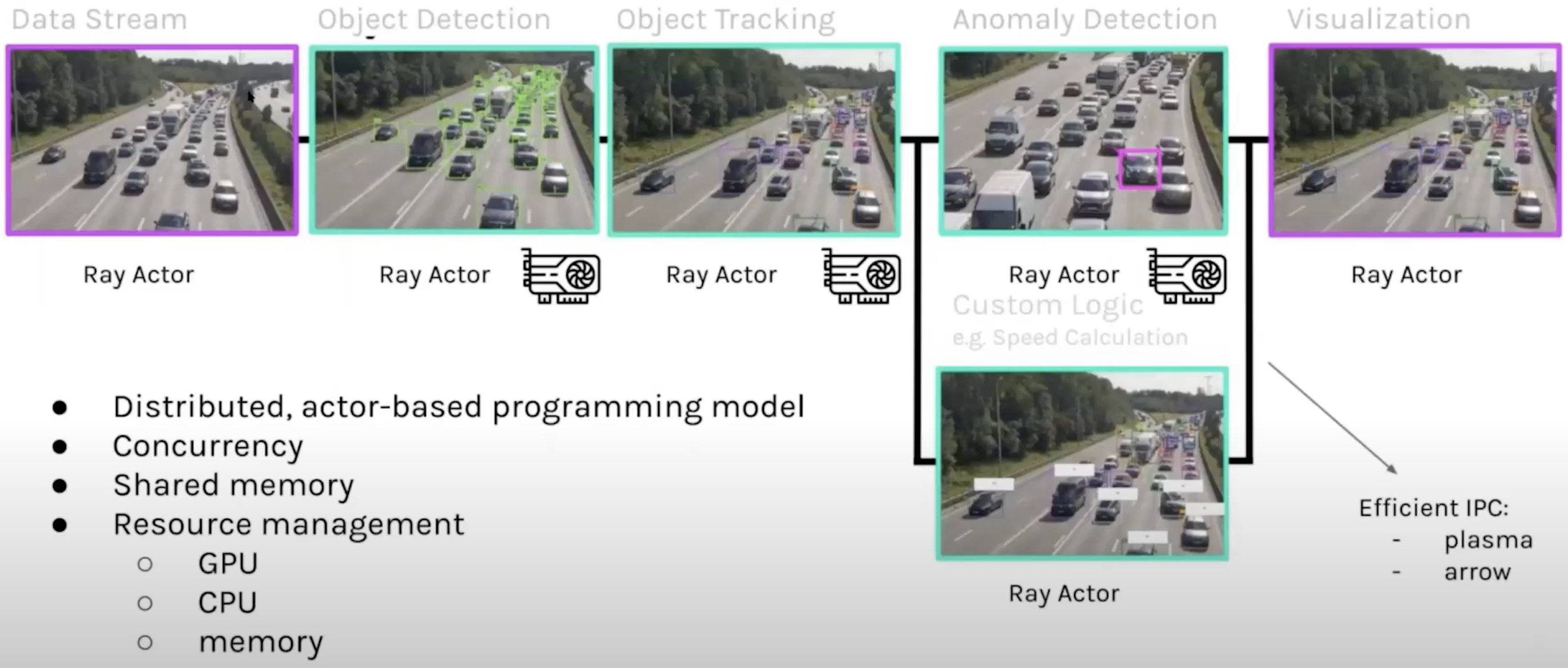

The second use case is high performance ML systems. Robovision, a company using Ray, wanted to build vehicle detection from 5 stacked ML models. They could make their models fit on one GPU or machine, but only by cutting them down. A vanilla Python implementation ran at 5 frames per second. With Ray, they got about a 3x performance improvement on the same hardware (16 frames per second). This is certainly not the first time anyone has worked with 5 stacked ML models. You can do this without Ray, for example by converting each ML model into a microservice, but that approach adds considerable complexity. The image above shows the basic pipeline of what Robovision was trying to do.

Robovision’s pipeline processes images and outputs classifications of what the vehicles were and what they were doing. With Ray, they wrapped each stage in a Ray actor. This gave them much more flexibility and an accessible way to use the GPU. Code-wise, implementing a stacked model like this is surprisingly easy:

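```python
import ray

ray.init()  # local by default; pass address="auto" to join an existing cluster

def process(inp):
    # Placeholder: each stage would run its own model here.
    return inp

@ray.remote
class Model:
    def __init__(self, next_actor=None):
        # Handle to the downstream actor; None for the last stage.
        self.next = next_actor

    def runmodel(self, inp):
        out = process(inp)  # different for each stage
        if self.next is not None:
            # Fire-and-forget: forward the result to the next stage.
            self.next.runmodel.remote(out)

# input_stream -> object_detector ->
# object_tracker -> speed_calculator -> result_collector

result_collector = Model.remote()
speed_calculator = Model.remote(next_actor=result_collector)
object_tracker = Model.remote(next_actor=speed_calculator)
object_detector = Model.remote(next_actor=object_tracker)

input_stream = range(10)  # placeholder for a stream of video frames

for inp in input_stream:
    object_detector.runmodel.remote(inp)
```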

At the top of the code, the @ray.remote annotation turns the class Model into a Ray actor class. You then create one actor per stage (e.g., speed_calculator, object_tracker), each with its own computation. For each actor, you specify which actor comes next in the chain. You then feed the input to the first one, and the results land at the actor that actually collects the data (result_collector). Written this way, a relatively complex stacked model runs on your local machine. By changing the cluster it runs on, it can easily be run in a distributed manner. Instead of trying to run 5 stacked models on one machine, Ray lets you run 5 stacked models on 5 machines, each with its own GPU. Ray takes care of all the coordination and of setting up those 5 machines.

Build apps that make ML accessible to non-specialists

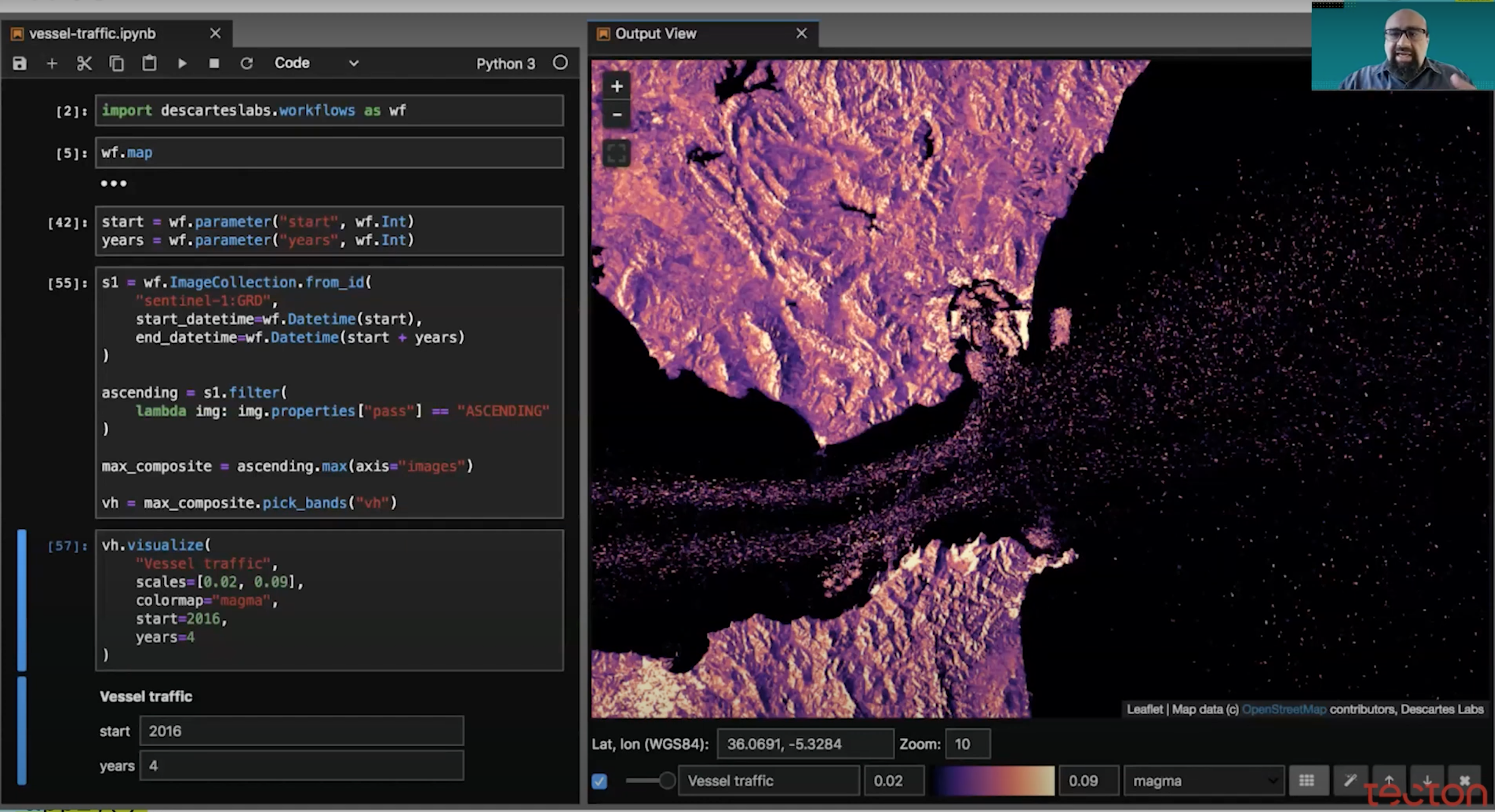

A third use case is making machine learning accessible. Say you are looking at images from all over the world and want to find out where piles of garbage are entering the ocean. Descartes Labs makes this possible with an easy-to-use geospatial data analysis platform that lets users analyze petabytes of data with just a little bit of code. Ray enables this by taking care of the distributed computing details.

If you want to learn more about how Descartes Labs uses Ray, there is an excellent talk on the subject here (image courtesy Descartes Labs).

To undertake ML projects that don’t fit the ML pipeline

The final use case covers the many important and practical ML projects that don’t fit the standard ML pipeline. Some examples of these types of projects include:

Reinforcement Learning: Reinforcement learning deliberately mixes the training and testing stages. We are unaware of any second generation ML system that supports reinforcement learning.

Online Learning: You may want to update your model’s parameters and behavior online. This means you have a feedback loop in which the model is updated frequently, for example every minute.

Active/semi-supervised learning.

For example, QuantumBlack, part of McKinsey, used Ray to help build the algorithms that helped a team win the America’s Cup, a premier sailing competition. To learn more about this achievement and how Ray’s RLlib library helped enable it, check out the rest of the Tecton talk here. To learn how other companies are using Ray’s RLlib library for reinforcement learning, check out this blog post highlighting some impressive use cases.

Conclusion

This post showed how the history of GPU programming architectures may give us hints about where production ML architectures are going. We traced the evolution of production ML architectures through:

First generation: “fixed function” pipelines

Second generation: programmability within the pipeline

Third generation: full programmability

Ray can be considered an example of a third generation programmable, flexible production ML architecture. It has already led to new and interesting applications like:

Simplifying existing ML architectures

Parallelizing high performance ML systems

Making ML accessible to non-specialists

Highly scalable algorithms for deep reinforcement learning

If you’re interested in learning more about Ray, you can check out the documentation, join us on Discourse, and check out the whitepaper! If you're interested in working with us to make it easier to leverage Ray, we're hiring!