Introducing KubeRay v1.4

Thank you to everyone who contributed to writing this blog, including: Anyscale (Kai-Hsun Chen, Chi-Sheng Liu, Jui-An Huang, Seiji Eicher), Mooncake Labs (Hao Jiang), Google Cloud (Andrew Sy Kim, Ryan O'Leary), Spotify (David Xia), Roblox (Steve Han, Yiqing Wang), Alibaba Cloud (Yi Chen, Kun Wu), ByteDance (Hongpeng Guo), Commotion (Dev Patel), opensource4you (Yi Chiu, Jun-Hao Wan, You-Cheng Lin, Che-Yu Wu, Nai-Jui Yeh, Fu-Sheng Chen)

We’re excited to announce the release of KubeRay v1.4, building on the strong momentum of Ray on Kubernetes. KubeRay v1.4 adds several upgrades for running Ray on Kubernetes with over 400 commits contributed by around 30 contributors.

Key highlights include the KubeRay API server V2 and the Ray Autoscaler V2, both of which deliver significant improvements in reliability and observability over their predecessors. KubeRay v1.4 also introduces Service Level Indicator (SLI) metrics, providing users with actionable insights into the health and performance of their Ray clusters.

Let's explore these updates in more detail!

Gaps Between POC and Production Environments

There are significant differences between POC and production environments. This section highlights those differences using an example of POC-level Ray infrastructure. Next, we’ll explain how KubeRay v1.4 addresses these issues to accelerate the path from POC to production.

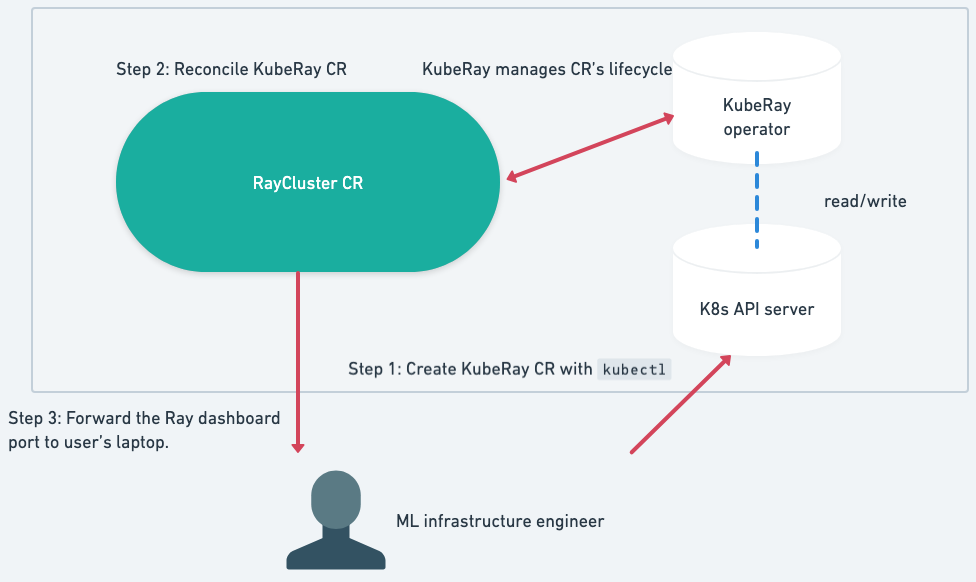

Fig. 1 illustrates a sample workflow for running Ray POCs on KubeRay. Typically, an ML infrastructure engineer creates a KubeRay custom resource (CR) using kubectl. KubeRay then reconciles the custom resource, after which the engineer uses kubectl exec and kubectl port-forward to execute commands in the head Pod and access the Ray dashboard.

Fig. 1 A Sample Workflow for Running Ray POCs on KubeRay

In real-world production environments, the infrastructure becomes much more complex. For example, the infrastructure and the workloads are often owned by different teams: the Ray infrastructure is managed by the ML infrastructure team, while the workloads are owned by the data science team. Several requirements arise when productionizing Ray on Kubernetes, and they slow the path from POC to production.

#1: Streamlined Interface for Data Scientists

Data scientists may not have permissions to use kubectl to interact with the Kubernetes API server directly. They may also be unfamiliar with Kubernetes. To solve these issues, platform engineers need to build user interfaces that don’t require permissions to access the Kubernetes API server and hide the details of Kubernetes to streamline the UX for data scientists.

#2: Operational Simplicity and SLI Readiness

Being "easy to operate" is also an important indicator of whether an ML platform is production-ready. In addition, platform engineers often spend a lot of time answering questions about SLA readiness from data science teams. For example: “Can your infrastructure support my use case, which requires startup within X seconds?”

#3: Determining Cluster Shape for Dynamic Workloads

For dynamic workloads like batch inference, users spend a non-trivial amount of time working out the required cluster shape in advance, for example how many CPUs and GPUs are needed at different stages.

Key Enhancements

KubeRay v1.4 includes the following improvements to address the challenges of managing Ray on Kubernetes:

KubeRay API Server V2 enables users to build user interfaces for data scientists who lack direct Kubernetes API server access.

KubeRay Dashboard visualizes KubeRay resources in a web UI.

KubeRay SLI metrics empower users with actionable insights into their KubeRay workloads for operation and SLI readiness.

Ray Autoscaler V2 allows users to focus on their Ray applications without worrying about the cluster shape. It also provides better observability than V1, which makes operations easier.

KubeRay API Server V2 - Alpha

The KubeRay API server is an extension API server that provides a simple CRUD API to manage KubeRay custom resources. Some organizations build APIs on top of the KubeRay API server to support user interfaces for managing KubeRay resources.

KubeRay API Server V1 defines the KubeRay CR CRUD API interfaces using Protobuf instead of reusing the KubeRay CRD OpenAPI spec. This means that each new field added to the KubeRay CRD requires a PR to update the Protobuf definition accordingly.

In v1.4, we are rearchitecting the KubeRay API server and introducing V2. The new version reuses the KubeRay CRD OpenAPI spec and provides an HTTP proxy to the Kubernetes API server, so users can manage KubeRay CRs without a separate Protobuf definition that has to be kept in sync with the CRD. This eliminates the previous inconsistency issue. It also includes the following highlighted features:

Compatibility with existing Kubernetes clients and API interfaces, allowing users to use existing Kubernetes clients to interact with the proxy provided by the API server.

Availability of the API server as a Go library, so users can build their own proxies with custom HTTP middleware functions.

See the README for more details.
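Because V2 proxies to the Kubernetes API server, a client should be able to address RayCluster resources with ordinary Kubernetes REST paths. The following is a minimal sketch under stated assumptions, not the project's reference client: the proxy address `APISERVER_URL` and the helper names are hypothetical, the manifest is trimmed (head and worker group specs are omitted), and the sketch assumes the proxy exposes the standard custom-resource path for the `ray.io/v1` group. It only builds the request and does not send it.

```python
import json
import urllib.request
from typing import Optional

# Assumption: the address where the KubeRay API server V2 proxy is reachable.
APISERVER_URL = "http://localhost:31888"  # hypothetical


def raycluster_path(namespace: str, name: Optional[str] = None) -> str:
    """Return the Kubernetes-style REST path for RayCluster custom resources."""
    base = f"/apis/ray.io/v1/namespaces/{namespace}/rayclusters"
    return f"{base}/{name}" if name else base


def build_create_request(namespace: str, manifest: dict) -> urllib.request.Request:
    """Build (but do not send) a POST request that would create a RayCluster."""
    return urllib.request.Request(
        url=APISERVER_URL + raycluster_path(namespace),
        data=json.dumps(manifest).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Trimmed placeholder manifest: a real RayCluster also needs head/worker group specs.
manifest = {
    "apiVersion": "ray.io/v1",
    "kind": "RayCluster",
    "metadata": {"name": "raycluster-sample"},  # hypothetical name
    "spec": {"rayVersion": "2.46.0"},
}

request = build_create_request("default", manifest)
print(request.get_method(), request.full_url)
```

Passing the request to `urllib.request.urlopen` would send it. In practice an existing Kubernetes client pointed at the proxy is likely the more convenient choice, which is the compatibility the first feature above describes.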

Ray Autoscaler V2 - Beta

The Ray Autoscaler V2 Alpha integration was introduced in KubeRay v1.3.0. Since then, we have added more e2e tests for the integration to our CI workflow. We are now confident in moving the Ray Autoscaler V2 integration to beta for the upcoming Ray 2.48 release. Also, thanks to davidxia, users can now enable the integration more easily with the new spec.autoscalerOptions.version field in the RayCluster CR.

    apiVersion: ray.io/v1
    kind: RayCluster
    metadata:
      name: raycluster-autoscaler
    spec:
      rayVersion: '2.46.0'
      enableInTreeAutoscaling: true
      autoscalerOptions:
        version: v2
      …

We continue to encourage users to try Ray Autoscaler V2 and provide feedback to us! See Autoscaler V2 with KubeRay to get started! If you're interested in the progress, you can check kuberay#2600 for more details.
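For teams that generate RayCluster manifests programmatically, the same two settings can be applied to a manifest held as a Python dictionary. This is a minimal sketch: the helper name `enable_autoscaler_v2` is hypothetical, and it only sets the fields named in this post (`spec.enableInTreeAutoscaling` and `spec.autoscalerOptions.version`).

```python
import copy


def enable_autoscaler_v2(manifest: dict) -> dict:
    """Return a copy of a RayCluster manifest with Ray Autoscaler V2 enabled.

    Only the two fields mentioned in the post are touched; everything else
    in the manifest is preserved as-is.
    """
    updated = copy.deepcopy(manifest)  # leave the caller's manifest unmodified
    spec = updated.setdefault("spec", {})
    spec["enableInTreeAutoscaling"] = True
    spec.setdefault("autoscalerOptions", {})["version"] = "v2"
    return updated


base = {
    "apiVersion": "ray.io/v1",
    "kind": "RayCluster",
    "metadata": {"name": "raycluster-autoscaler"},
    "spec": {"rayVersion": "2.46.0"},
}

with_v2 = enable_autoscaler_v2(base)
print(with_v2["spec"]["autoscalerOptions"])
```

The deep copy keeps the input untouched, so the same base manifest can be reused for clusters that should stay on V1.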

SLI Metrics - Alpha

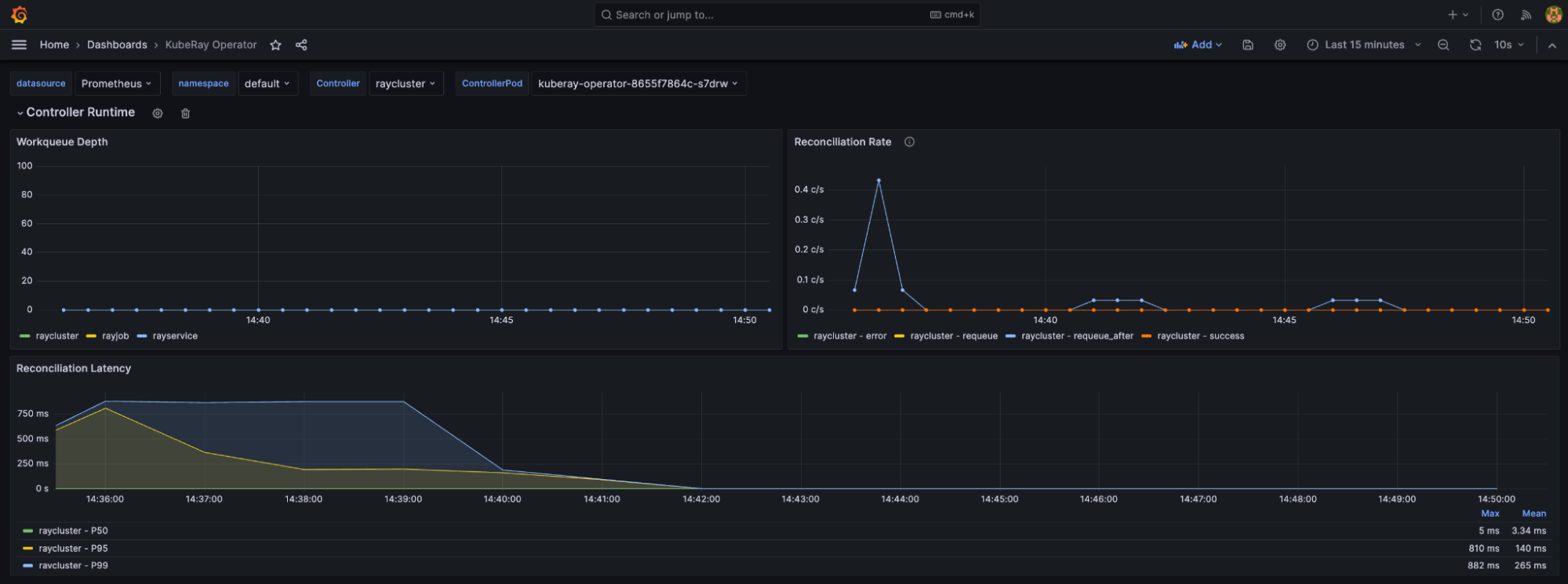

In KubeRay 1.4.0, we're excited to introduce Service Level Indicator (SLI) metrics to supercharge observability and empower users with actionable insights into their KubeRay workloads.

With these new metrics, it's easy to monitor the state and performance of KubeRay Custom Resources (CRs). For example:

kuberay_cluster_info exposes key metadata about each RayCluster, giving you a high-level overview of your RayClusters.

kuberay_job_execution_duration_seconds provides visibility into RayJob execution time, helping you track performance and identify bottlenecks.

These metrics can be easily aggregated to unlock a broader view of your cluster’s health. For instance, by combining kuberay_cluster_info and kuberay_cluster_condition_provisioned, you can track how many RayClusters are fully provisioned.
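To make the aggregation concrete, here is a rough sketch that counts provisioned RayClusters from metrics in the Prometheus text exposition format. The label names (`name`, `namespace`, `condition`, `owner_kind`) and the sample values are assumptions made for illustration and should be checked against the KubeRay Metrics Reference. In a real setup this kind of aggregation would more likely be a query in Prometheus or Grafana.

```python
import re

# One sample per line: metric_name{label="value",...} value [timestamp]
SAMPLE_LINE = re.compile(
    r'^(?P<name>[a-zA-Z_:][a-zA-Z0-9_:]*)(?:\{(?P<labels>.*)\})?\s+(?P<value>\S+)(?:\s+\d+)?$'
)
LABEL = re.compile(r'(\w+)="((?:[^"\\]|\\.)*)"')


def parse_samples(text):
    """Parse Prometheus text-format samples into (name, labels, value) tuples."""
    samples = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):  # skip blanks and HELP/TYPE comments
            continue
        match = SAMPLE_LINE.match(line)
        if match is None:
            continue
        labels = dict(LABEL.findall(match.group("labels") or ""))
        samples.append((match.group("name"), labels, float(match.group("value"))))
    return samples


def count_provisioned(text):
    """Return (provisioned RayClusters, all RayClusters) keyed by namespace/name."""
    all_clusters, provisioned = set(), set()
    for name, labels, value in parse_samples(text):
        key = (labels.get("namespace"), labels.get("name"))
        if name == "kuberay_cluster_info" and value == 1:
            all_clusters.add(key)
        elif (
            name == "kuberay_cluster_condition_provisioned"
            and labels.get("condition") == "true"  # assumed label name and value
            and value == 1
        ):
            provisioned.add(key)
    return len(provisioned & all_clusters), len(all_clusters)


# Hand-written, illustrative input; not real output from a KubeRay operator.
EXAMPLE = """
kuberay_cluster_info{name="train",namespace="default",owner_kind="None"} 1
kuberay_cluster_info{name="serve",namespace="default",owner_kind="RayService"} 1
kuberay_cluster_condition_provisioned{condition="true",name="train",namespace="default"} 1
kuberay_cluster_condition_provisioned{condition="false",name="serve",namespace="default"} 1
"""

ready, total = count_provisioned(EXAMPLE)
print(f"{ready} of {total} RayClusters provisioned")
```

Joining on the namespace and name pair mirrors how the two metrics would be combined in a dashboard query: kuberay_cluster_info supplies the set of clusters and kuberay_cluster_condition_provisioned supplies their state.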

And it gets even better—with Grafana dashboards, you can visualize everything from CR metadata to job durations, all at a glance.

Explore the full list of metrics in our KubeRay Metrics Reference. Get started with dashboards using Prometheus and Grafana.

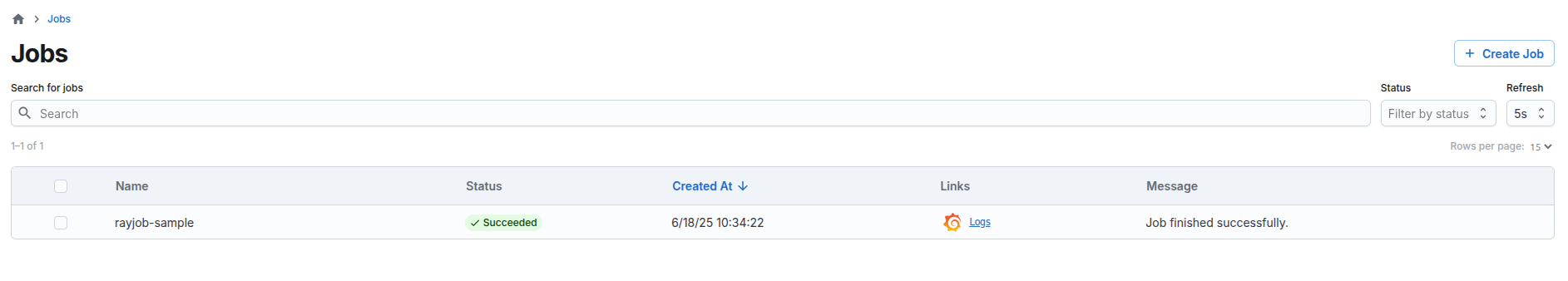

KubeRay Dashboard - Experimental

Thank you to the Roblox team for generously donating the KubeRay dashboard to KubeRay!

The KubeRay Dashboard is a new component that visualizes KubeRay resources in a web UI. It is built with React, TypeScript, and Next.js. The team at Roblox has been using the UI to view the list of Ray jobs, check their statuses, access links to logs and metrics, and delete jobs and clusters. Although it is quite bare-bones right now, we hope to work on it with the community to make it a useful piece of infrastructure in the KubeRay ecosystem.

See the README for more details.

Run LLM workloads with KubeRay

Serve an LLM using Ray Serve LLM

Scaling large language models (LLMs) on Kubernetes requires managing distributed GPU resources and inter-node communication. KubeRay and Ray Serve address these challenges by allowing users to easily create a multi-node, multi-GPU Ray cluster from an existing Kubernetes environment.

This means users can deploy LLMs with an autoscaling, OpenAI-compatible API by specifying the cluster hardware and service configuration in a single place. The KubeRay operator manages the cluster’s lifecycle and scaling based on this definition, eliminating the need for manual infrastructure orchestration.

We've introduced a guide, Ray Serve LLM API on KubeRay, that demonstrates how to use the new Ray Serve LLM API on KubeRay. Try out the example and let us know what you think!
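Once such a service is running, a client can talk to it like any other OpenAI-compatible endpoint. The sketch below builds a chat completion request with the Python standard library. The service address `SERVE_URL` and the model ID `MODEL_ID` are hypothetical placeholders and depend on how the service is exposed and configured. The `/v1/chat/completions` path and the payload shape follow the OpenAI chat completions convention.

```python
import json
import urllib.request

# Assumptions: where the Serve application is reachable (for example after a
# port-forward) and which model ID the deployment was configured with.
SERVE_URL = "http://localhost:8000"  # hypothetical
MODEL_ID = "my-model"  # hypothetical


def build_chat_request(prompt, model=MODEL_ID, base_url=SERVE_URL):
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request, timeout=30.0):
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


chat_request = build_chat_request("What is KubeRay?")
print(chat_request.get_method(), chat_request.full_url)
```

Calling `send(chat_request)` against a live service would return a JSON object in which, under the OpenAI convention, the reply text sits at `choices[0].message.content`.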

Post-training with verl on KubeRay

verl is a flexible, efficient, and production-ready RL training library for large language models. It uses Ray to coordinate the interactions between the inference engine and the training system. The guide, Reinforcement Learning with Human Feedback (RLHF) for LLMs with verl on KubeRay, shows how to train an LLM to solve basic math problems with verl and KubeRay.

Others

Support Gang Scheduling with Scheduler Plugins

The kubernetes-sigs/scheduler-plugins repository provides out-of-tree scheduler plugins based on the scheduler framework. Starting with KubeRay v1.4, KubeRay integrates with the PodGroup API provided by scheduler plugins to support gang scheduling for RayCluster custom resources. See KubeRay integration with scheduler plugins for more details.

Streamline uv Integration on KubeRay

uv is a modern Python package manager written in Rust, and Ray began supporting it in version 2.43. KubeRay v1.4 streamlines uv integration, allowing users to manage dependencies effortlessly. See Using uv for Python package management in KubeRay for more details.

Helm Chart Unit Testing

KubeRay v1.4 introduces unit tests for Helm charts, enhancing the reliability and maintainability of the deployment process. Each Helm chart now comes with its own set of unit tests to ensure that configurations render correctly and behave as expected.

Join the Ray Community

KubeRay v1.4 delivers enhanced extensibility and more robust building blocks for creating scalable ML platforms with Ray on Kubernetes. As we look ahead to v1.5, we are collaborating with the Ray community to shape the roadmap. Help us build the future of KubeRay by sharing your feedback on kuberay#2999.

You can also ask questions in the #kuberay-questions channel of the Ray Slack workspace. In addition, follow the Ray/KubeRay community Google calendar to join the bi-weekly KubeRay community meeting and meet KubeRay contributors.

Follow the instructions below to get started, and check Getting Started with KubeRay for more details.

    helm repo add kuberay https://ray-project.github.io/kuberay-helm/
    helm repo update
    # Install both CRDs and KubeRay operator v1.4.2.
    helm install kuberay-operator kuberay/kuberay-operator --version 1.4.2