Deploy DeepSeek-R1 with vLLM and Ray Serve on Kubernetes

Thanks to everyone who contributed to this blog post: Anyscale (Seiji Eicher, Ricardo Decal, Kai-Hsun Chen) and Google GKE (Yiwen Xiang, Andrew Sy Kim).

Open models continue to improve significantly in reasoning and coding. Deploying these models in your own environment can offer a much lower cost per token than proprietary APIs serving models of similar quality. However, the most powerful open source models can be challenging to operate and scale in production. For example, a single replica of DeepSeek-R1 requires at least 16 H100 GPUs spread across multiple machines.

The new Ray Serve LLM APIs are designed to simplify and streamline LLM deployments natively within Ray. When deployed on a Kubernetes platform like Google Kubernetes Engine (GKE) via KubeRay, these APIs provide a powerful combination of a developer-friendly experience and production-ready reliability.

Step-by-Step Guides:

Ray on Anyscale: For BYOC (bring your own cloud) deployments with maximum fault tolerance, reliability, and advanced observability, this entire stack can be managed with the Anyscale operator on Kubernetes. Try deploying DeepSeek on Anyscale with this template.

Self-Managed Ray: Use the open-source KubeRay Operator on GKE by following this guide.

Why deploy DeepSeek-R1 on Kubernetes?

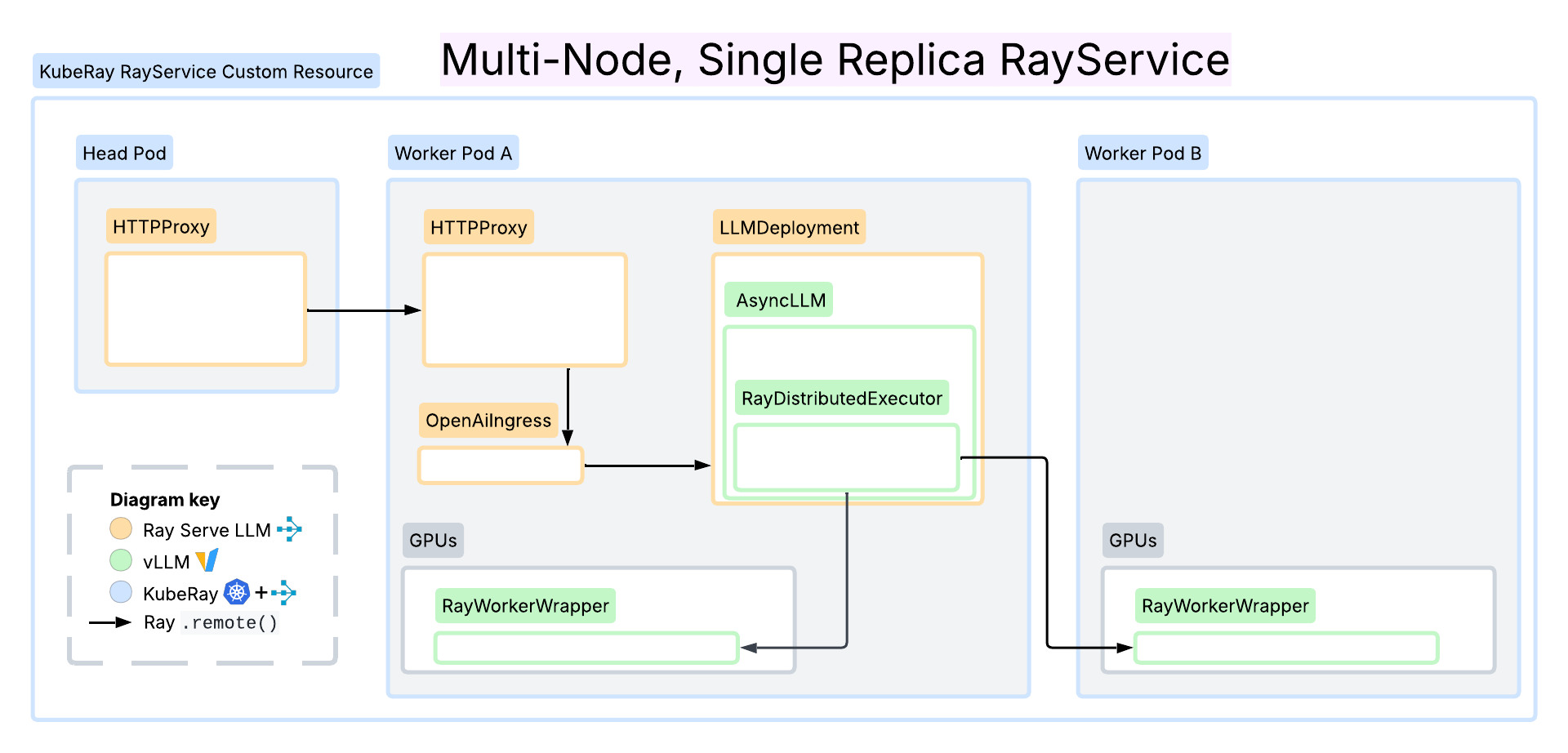

Figure 1. KubeRay RayService + Ray Serve LLM API Architecture Diagram

To run DeepSeek-R1, you need at least 16 H100 GPUs. With KubeRay on GKE, you can deploy Ray clusters on A3 High or A3 Mega machine types. Each of these nodes provides 8 H100 GPUs connected by high-speed NVLink, plus high-bandwidth networking between nodes.
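Where does the 16-GPU minimum come from? Here is a back-of-the-envelope sketch. It assumes DeepSeek-R1's published size of about 671B total parameters, stored as FP8 (1 byte per parameter), and 80 GB of HBM per H100. Under those assumptions the weights alone exceed one 8-GPU node. The `headroom` parameter is a hypothetical knob for memory reserved for KV cache, activations, and runtime overhead.

```python
import math


def min_nodes(num_params: float, bytes_per_param: float, gpu_mem_gb: float,
              gpus_per_node: int, headroom: float = 0.0) -> int:
    """Smallest number of nodes whose combined GPU memory holds the model weights.

    `headroom` is the fraction of each GPU's memory reserved for KV cache,
    activations, and runtime overhead (an illustrative assumption).
    """
    weights_gb = num_params * bytes_per_param / 1e9
    usable_gb_per_node = gpu_mem_gb * gpus_per_node * (1.0 - headroom)
    return math.ceil(weights_gb / usable_gb_per_node)


# Assumed figures: ~671B parameters in FP8, 80 GB H100s, 8 GPUs per A3 node.
nodes = min_nodes(671e9, bytes_per_param=1, gpu_mem_gb=80, gpus_per_node=8,
                  headroom=0.1)
print(f"{nodes} nodes -> {nodes * 8} H100 GPUs")
```

Even with zero headroom, roughly 671 GB of weights exceeds one node's 640 GB of HBM, so a single replica has to span two nodes.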

Running large models like DeepSeek-R1 on Kubernetes with KubeRay provides significant production benefits, such as highly available Ray Serve applications, zero-downtime cluster upgrades, and GPU observability.

Why vLLM with Ray Serve LLM?

The Ray Serve LLM APIs provide features for LLM applications that complement the capabilities of the underlying inference engine:

Autoscaling, including scale-to-zero

Load balancing and custom request routing APIs

Multi-model deployments and multi-model pipelines

Batteries-included observability

Easy multi-node model deployments

Inference engine abstraction to keep up with advancements in the field

Autoscaling

Ray Serve LLM's autoscaler bases its scaling decisions on request queue depth and replica utilization. It supports three main behaviors:

- Scale-to-zero, which cuts costs during idle periods.
- Traffic-aware scaling based on the number of queued and in-flight requests.
- Configurable delays that prevent scaling oscillations.
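The logic above can be illustrated with a toy queue-depth autoscaler. This is a simplified sketch, not Ray Serve's actual algorithm, which also smooths metrics over time windows. The field names echo the `min_replicas`, `max_replicas`, `target_ongoing_requests`, and `downscale_delay_s` options of Ray Serve's `autoscaling_config` in recent releases. The class and its behavior here are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class AutoscalePolicy:
    min_replicas: int = 0                  # 0 allows scale-to-zero when idle
    max_replicas: int = 4
    target_ongoing_requests: float = 8.0   # desired queued + running requests per replica
    downscale_delay_s: float = 300.0       # how long demand must stay low before shrinking


class QueueDepthAutoscaler:
    """Toy queue-depth autoscaler (hypothetical; not Ray Serve's implementation)."""

    def __init__(self, policy: AutoscalePolicy):
        self.policy = policy
        self._low_since: Optional[float] = None  # when demand first fell below capacity

    def decide(self, current: int, ongoing_requests: int, now: float) -> int:
        p = self.policy
        desired = math.ceil(ongoing_requests / p.target_ongoing_requests)
        desired = max(p.min_replicas, min(p.max_replicas, desired))
        if desired >= current:
            # Scale up (or hold) immediately; this sketch has no upscale delay.
            self._low_since = None
            return desired
        # Demand dropped: only scale down once it has stayed low for downscale_delay_s.
        if self._low_since is None:
            self._low_since = now
        if now - self._low_since >= p.downscale_delay_s:
            self._low_since = None
            return desired
        return current


scaler = QueueDepthAutoscaler(AutoscalePolicy())
after_burst = scaler.decide(current=0, ongoing_requests=20, now=0)
after_lull = scaler.decide(after_burst, ongoing_requests=0, now=60)
after_idle = scaler.decide(after_lull, ongoing_requests=0, now=360)
print(after_burst, after_lull, after_idle)
```

Scale-to-zero trades idle GPU cost against cold-start latency. For a 16-GPU DeepSeek-R1 replica, a cold start includes loading hundreds of gigabytes of weights, so a non-zero `min_replicas` may suit latency-sensitive services.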

Custom Request Routing APIs

Ray Serve LLM's request routing system supports load-balancing strategies beyond the default power-of-two-choices balancing. For example, Ray Serve LLM ships with a prefix-cache-aware router, PrefixCacheAffinityRouter. It tends to send requests that share a prompt prefix to the same replica, which raises the KV cache hit rate. This can improve throughput by more than 2.5x for workloads with long, shared prefixes, such as summarization or chat applications with multiple system prompts. The routing APIs are public, so you can implement the routing policy that maximizes performance on your own workload.
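To make the contrast concrete, here is a toy comparison, not the PrefixCacheAffinityRouter implementation.

- Power-of-two choices samples two replicas and picks the less loaded one.
- The hypothetical `prefix_aware` function prefers a replica that has already served the same prompt prefix, unless that replica is much busier than the least-loaded one.

Matching on the first 32 characters and the `max_imbalance` threshold are simplifying assumptions; a real router would match on token prefixes.

```python
import random
from typing import List


class Replica:
    def __init__(self, name: str):
        self.name = name
        self.queue_len = 0      # requests currently assigned
        self.prefixes = set()   # prefix keys likely held in this replica's KV cache


def power_of_two(replicas: List[Replica], rng: random.Random) -> Replica:
    """Sample two distinct replicas and return the less loaded one."""
    a, b = rng.sample(replicas, 2)
    return a if a.queue_len <= b.queue_len else b


def prefix_aware(replicas: List[Replica], prompt: str, rng: random.Random,
                 prefix_chars: int = 32, max_imbalance: int = 4) -> Replica:
    """Prefer a replica that has seen this prompt prefix unless it is overloaded."""
    key = prompt[:prefix_chars]
    min_load = min(r.queue_len for r in replicas)
    hits = [r for r in replicas
            if key in r.prefixes and r.queue_len - min_load <= max_imbalance]
    # Fall back to power-of-two choices when no acceptable cache hit exists.
    chosen = min(hits, key=lambda r: r.queue_len) if hits else power_of_two(replicas, rng)
    chosen.prefixes.add(key)    # its KV cache now (probably) holds this prefix
    chosen.queue_len += 1
    return chosen


rng = random.Random(0)
replicas = [Replica(f"replica-{i}") for i in range(4)]
system = "You are a careful assistant that summarizes long legal documents. "
first = prefix_aware(replicas, system + "Document A ...", rng)
second = prefix_aware(replicas, system + "Document B ...", rng)
print(first.name, second.name)
```

The imbalance guard is the key design choice. Pure prefix affinity would pile every request with a popular system prompt onto one replica, so the sketch gives up the cache hit once that replica falls too far behind.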

Observability

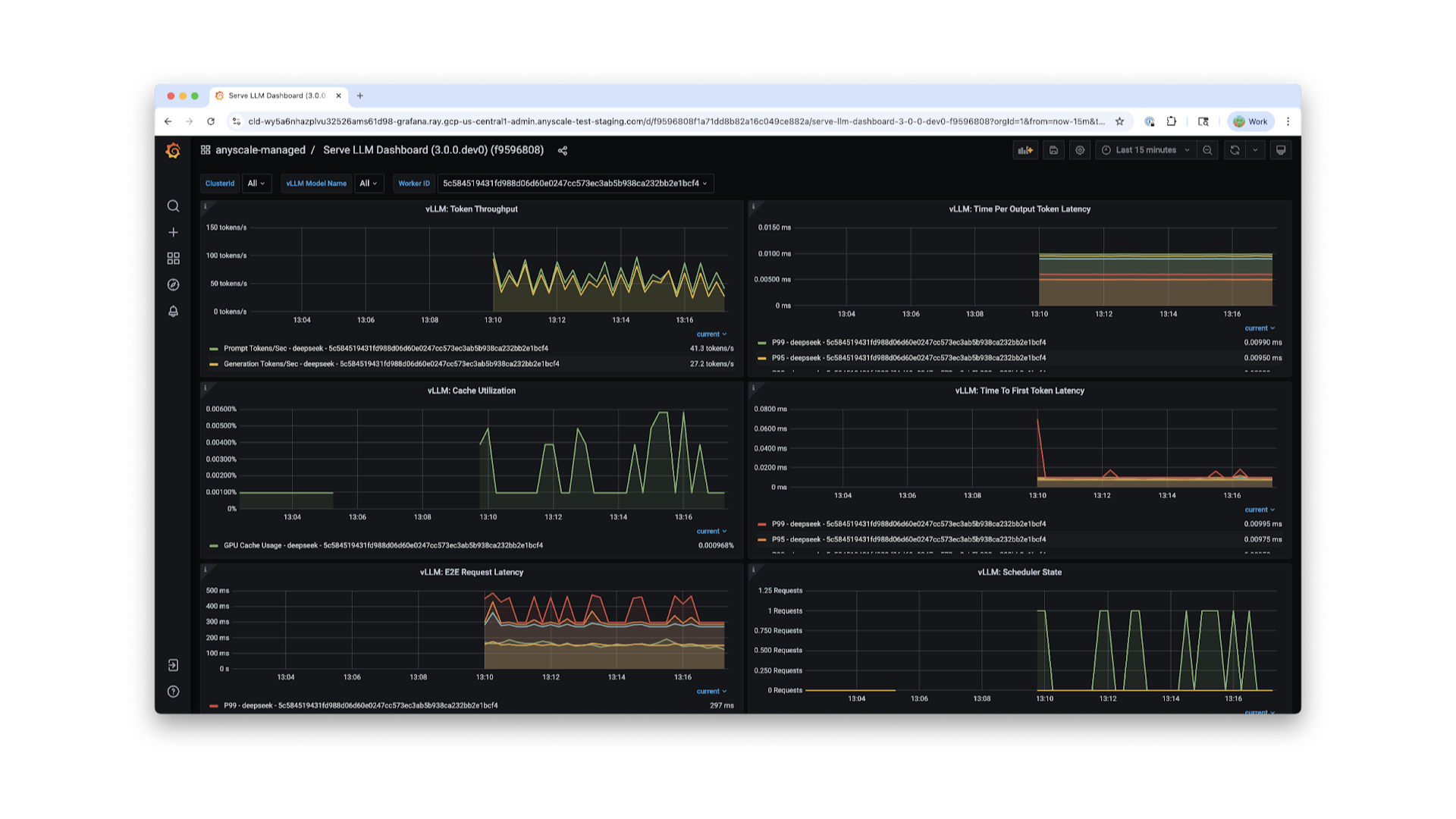

Ray provides an observability suite with logging and dashboards that show memory utilization, task execution, resource allocation, and autoscaling behavior. Ray Serve LLM also captures vLLM engine metrics in three groups:

- Request-level data, such as tokens per request and input-to-output token ratios.
- Model performance: time to first token (TTFT) and time per output token (TPOT).
- Other metrics, such as GPU memory usage and prefix cache hit rate.

Ray exposes these engine-level metrics alongside Ray cluster metrics through a shared Prometheus endpoint.

Figure 2. Ray Serve LLM dashboard
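Because the engine metrics are exported in Prometheus format, you can derive summary statistics directly from them. The sketch below parses a small sample of Prometheus text-format output and computes mean TTFT and TPOT as each histogram's `_sum` divided by its `_count`. The sample values are made up for illustration. The metric names and the `model_name` label are modeled on vLLM's naming but are assumptions; check the names your Ray and vLLM versions actually expose. The parser only handles simple lines without timestamps or escaped label values.

```python
from typing import Dict, Tuple

# Made-up sample output for illustration only.
SAMPLE = """\
# HELP vllm:time_to_first_token_seconds Histogram of time to first token in seconds.
# TYPE vllm:time_to_first_token_seconds histogram
vllm:time_to_first_token_seconds_sum{model_name="deepseek"} 12.5
vllm:time_to_first_token_seconds_count{model_name="deepseek"} 50
vllm:time_per_output_token_seconds_sum{model_name="deepseek"} 90.0
vllm:time_per_output_token_seconds_count{model_name="deepseek"} 3000
"""


def parse_prometheus(text: str) -> Dict[Tuple[str, str], float]:
    """Map (metric name, raw label string) -> value for simple exposition lines."""
    samples = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and HELP/TYPE comments
        series, value = line.rsplit(" ", 1)  # assumes no trailing timestamp
        if "{" in series:
            name, labels = series.split("{", 1)
            labels = labels.rstrip("}")
        else:
            name, labels = series, ""
        samples[(name, labels)] = float(value)
    return samples


def histogram_mean(samples: Dict[Tuple[str, str], float], base: str,
                   labels: str = "") -> float:
    """Mean observation of a Prometheus histogram: _sum / _count."""
    total = samples[(base + "_sum", labels)]
    count = samples[(base + "_count", labels)]
    return total / count if count else 0.0


metrics = parse_prometheus(SAMPLE)
label = 'model_name="deepseek"'
ttft = histogram_mean(metrics, "vllm:time_to_first_token_seconds", label)
tpot = histogram_mean(metrics, "vllm:time_per_output_token_seconds", label)
print(f"mean TTFT {ttft:.3f}s, mean TPOT {tpot * 1000:.1f}ms")
```

On a live cluster, you would usually compute the same ratio in PromQL over a time window, for example `rate(<metric>_sum[5m]) / rate(<metric>_count[5m])`, rather than from cumulative totals.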

Multi-Node Model Deployment

Ray Serve LLM supports deploying large models across multiple GPUs or nodes. For models that exceed a single GPU's memory, it supports two strategies:

- Tensor parallelism splits each layer's weights across GPUs.
- Pipeline parallelism assigns groups of consecutive layers to different nodes.
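To see how the two strategies combine for DeepSeek-R1 on two 8-GPU A3 nodes, the hypothetical `placement` function below assigns each (pipeline stage, tensor-parallel rank) pair to a (node, GPU) slot. It assumes tensor-parallel groups never straddle nodes. That mirrors the usual layout choice:

- Tensor parallelism needs all-reduce collectives in every layer, so it benefits from NVLink inside a node.
- Pipeline parallelism only passes activations between stages, so it tolerates the slower inter-node network.

In vLLM, these degrees correspond to the `tensor_parallel_size` and `pipeline_parallel_size` engine arguments. Ray and vLLM perform the actual GPU assignment; this code only illustrates the layout.

```python
from typing import Dict, Tuple


def placement(tp_size: int, pp_size: int,
              gpus_per_node: int) -> Dict[Tuple[int, int], Tuple[int, int]]:
    """Map (pipeline stage, tensor-parallel rank) -> (node index, local GPU index)."""
    if tp_size > gpus_per_node or gpus_per_node % tp_size != 0:
        raise ValueError("this sketch keeps every tensor-parallel group inside one node")
    layout = {}
    for stage in range(pp_size):
        for tp_rank in range(tp_size):
            global_rank = stage * tp_size + tp_rank
            layout[(stage, tp_rank)] = divmod(global_rank, gpus_per_node)
    return layout


# DeepSeek-R1 on two A3 nodes: TP=8 inside each node, PP=2 across nodes.
layout = placement(tp_size=8, pp_size=2, gpus_per_node=8)
nodes_used = sorted({node for node, _ in layout.values()})
print(len(layout), "GPUs on nodes", nodes_used)
for stage in range(2):
    print("stage", stage, "->", [layout[(stage, r)] for r in range(8)])
```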

This capability is essential for models like DeepSeek-R1 and Kimi K2, which are too large to fit on a single node. Multi-node deployment also helps with cost, because organizations can use cheaper or more readily available GPUs such as L40S instead of harder-to-acquire H100s.

Ray cluster orchestration handles node placement and coordination automatically, so it’s easy to deploy models that require more than a single node’s resources.

OpenAI API Compatibility

Ray Serve LLM deployments are OpenAI API compatible out of the box. Existing OpenAI client code works unchanged once you point it at the Ray Serve endpoint, and a single deployment can serve multiple models behind the same API.
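Because the endpoint speaks the OpenAI Chat Completions protocol, any HTTP client works. The sketch below uses only the Python standard library and makes three assumptions that must match your deployment:

- The base URL is `http://localhost:8000/v1`, Ray Serve's default HTTP port, reached for example through `kubectl port-forward`.
- The model ID is `deepseek`.
- The bearer token `fake-key` is a placeholder.

With the official `openai` package, you would pass the same URL as `base_url` when constructing the client.

```python
import json
import urllib.request
from typing import Dict, List


def build_chat_request(base_url: str, model: str, messages: List[Dict[str, str]],
                       api_key: str = "fake-key",
                       max_tokens: int = 512) -> urllib.request.Request:
    """Build an OpenAI-style /chat/completions POST request."""
    body = {"model": model, "messages": messages, "max_tokens": max_tokens}
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json",
                 "Authorization": f"Bearer {api_key}"},
        method="POST",
    )


def send(request: urllib.request.Request, timeout: float = 600.0) -> str:
    """Send the request and return the first choice's message content."""
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["message"]["content"]


req = build_chat_request(
    "http://localhost:8000/v1",
    model="deepseek",
    messages=[{"role": "user", "content": "What is 17 * 23?"}],
)
print(req.get_method(), req.full_url)
# print(send(req))  # uncomment when a Ray Serve LLM endpoint is reachable
```

The long default timeout is deliberate: reasoning models such as DeepSeek-R1 can generate many tokens before they produce a final answer.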

Join the Community

We believe that every developer should be able to succeed with AI (including running the latest models) without worrying about building out and managing their infrastructure.

If you want to be part of a community exploring the cutting edge of AI infrastructure, join the Ray Slack, or file an issue or open a PR on GitHub!