Simplify your ML Development Cycle with Anyscale and Weights & Biases

In this blog post, we go over the challenges of deploying ML models in production and show how using Anyscale with Weights & Biases simplifies MLOps through a built-in integration that supports scalability, reproducibility, and consistency. Together, Anyscale and Weights & Biases minimize refactoring and reduce friction throughout the ML development lifecycle.

As organizations continue to expand their use of machine learning (ML) and AI workloads to improve their operations, MLOps (machine learning operations) has become a key initiative for ensuring the smooth and efficient deployment and scaling of those workloads in production. Many organizations are still adapting, however, and their teams, tools, processes, and practices present challenges to efficient MLOps due to several factors:

Data management: ML models require vast amounts of data for training, and this data can be spread across multiple sources. Managing this data, maintaining its quality, and ensuring that the right data is being used for the right models can be a significant challenge.

Model performance: Machine learning models are only as good as their performance, and monitoring the performance of models in production can be difficult. This requires constant monitoring, testing, and tuning to ensure that the models deliver the desired results.

Reproducibility: Ensuring that the same results are obtained every time a machine learning model executes poses challenges, particularly in a production environment with multiple dependencies.

Scalability: Machine learning models can be computationally intensive, and scaling them to meet the demands of a growing user base can result in bottlenecks, cost overruns, or outages.

Deployment: Deploying machine learning models in production requires a solid infrastructure, and this can be difficult and expensive to set up and maintain, particularly for teams with limited resources.

Security: Machine learning models and the data they use can be sensitive, and securing this data and the models themselves can be a significant challenge.

Simplifying MLOps requires balancing four key factors across the ML lifecycle: consistency, reproducibility, scalability, and flexibility. The Anyscale and Weights & Biases integration provides a best-of-breed, unified ML platform for managing and deploying your ML models at scale.

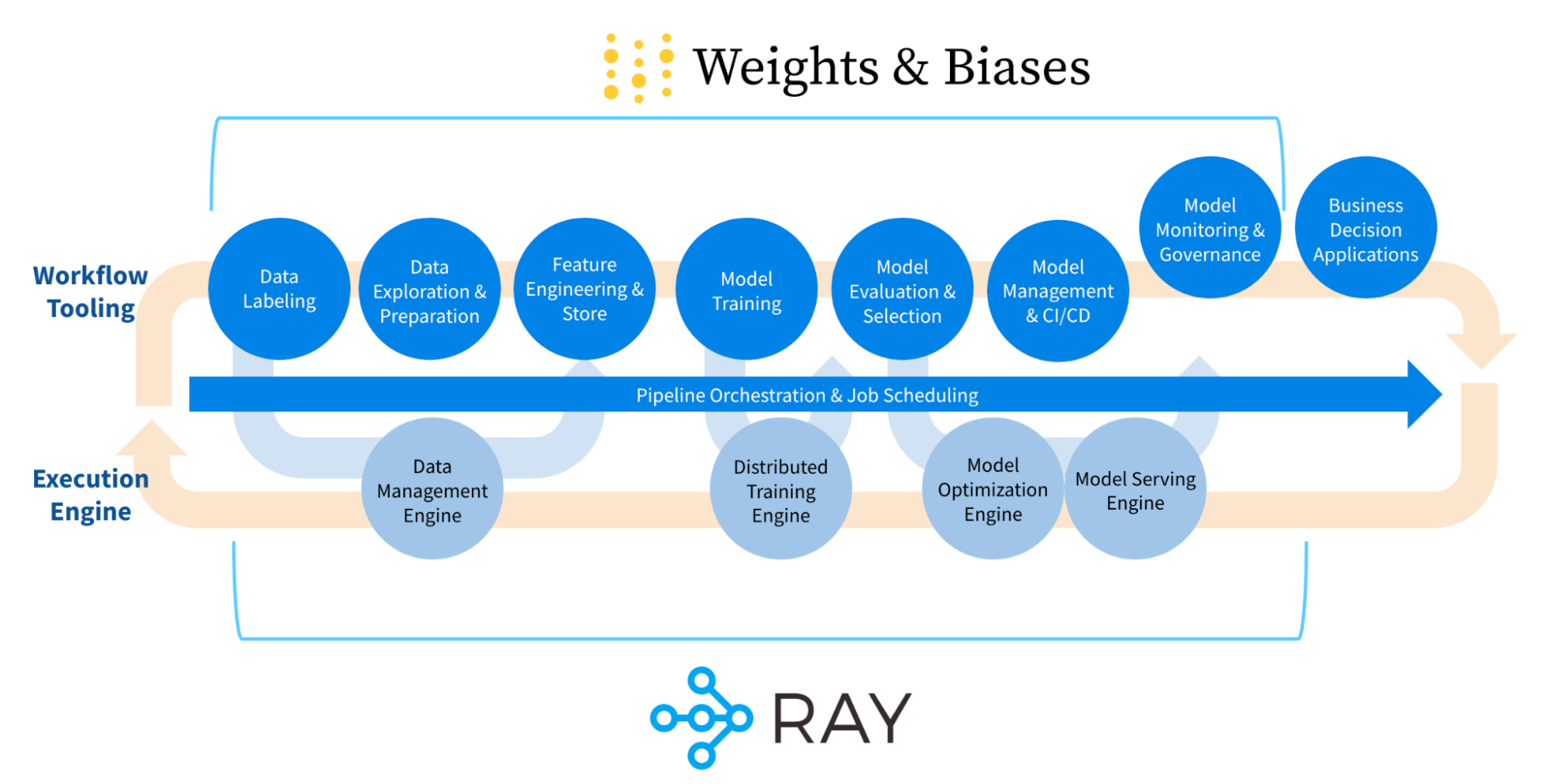

Figure 1. ML practitioner development lifecycle

With Anyscale, teams can scale and deploy models across different environments while keeping model performance consistent. Weights & Biases, on the other hand, provides a suite of tools for monitoring, logging, and visualizing training runs, enabling teams to track the progress of their models. Together, these tools help teams manage the complexities of MLOps and simplify managing, deploying, and monitoring machine learning models, while maintaining consistency, reproducibility, scalability, and flexibility.

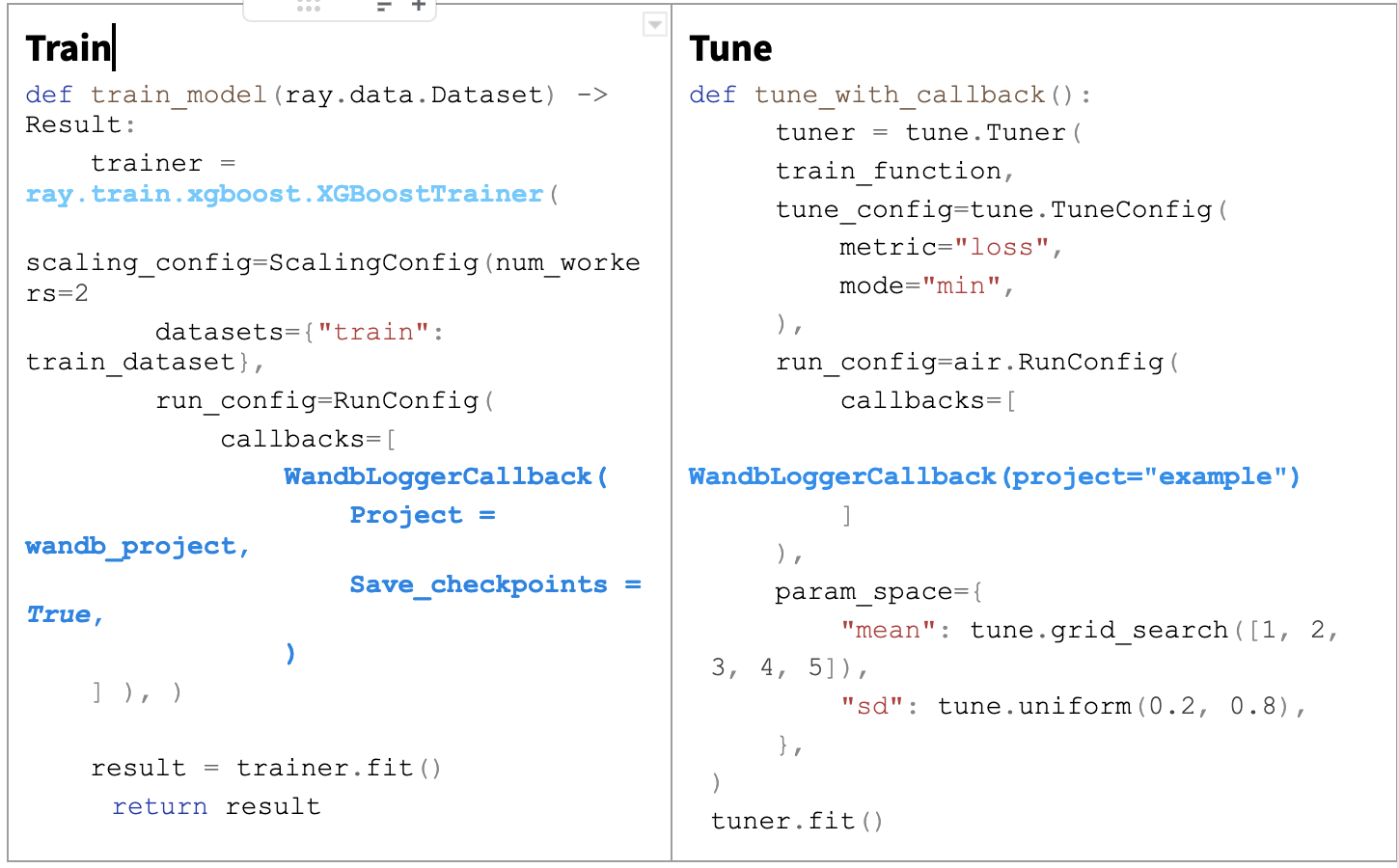

Figure 2. From Ray, you can add the WandbLoggerCallback with a few lines of code to track your experiments with Weights & Biases.
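As a rough illustration of what those few lines can look like, here is a minimal sketch that attaches `WandbLoggerCallback` to a Ray Tune run. The `objective` function, the `run_sweep` helper, and the project and group names are hypothetical placeholders, not taken from the integration itself. The import paths assume a Ray 2.x installation and may differ in other versions, and the run assumes Weights & Biases credentials are already configured (for example via `wandb login`).

```python
def objective(config):
    """Toy trainable (hypothetical): returns its final metrics as a dict."""
    x = config["x"]
    return {"loss": (x - 3.0) ** 2}


def run_sweep(project="anyscale-wandb-demo", group="tune-sweep"):
    """Run a small grid search and log every trial to Weights & Biases.

    The default project and group names are placeholders for illustration.
    """
    # Imported lazily so this module can be loaded without Ray installed.
    from ray import tune
    from ray.air.integrations.wandb import WandbLoggerCallback

    # The location of RunConfig depends on the Ray version (assumption:
    # newer releases expose it under ray.tune, older ones under ray.air).
    try:
        from ray.tune import RunConfig
    except ImportError:
        from ray.air import RunConfig

    tuner = tune.Tuner(
        objective,
        param_space={"x": tune.grid_search([1.0, 2.0, 3.0, 4.0])},
        run_config=RunConfig(
            # The callback forwards each trial's reported metrics to W&B.
            callbacks=[WandbLoggerCallback(project=project, group=group)],
        ),
    )
    return tuner.fit()
```

Calling `run_sweep()` on a machine with Ray and `wandb` installed should start four trials, each of which would appear as a separate run under the chosen project and group.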

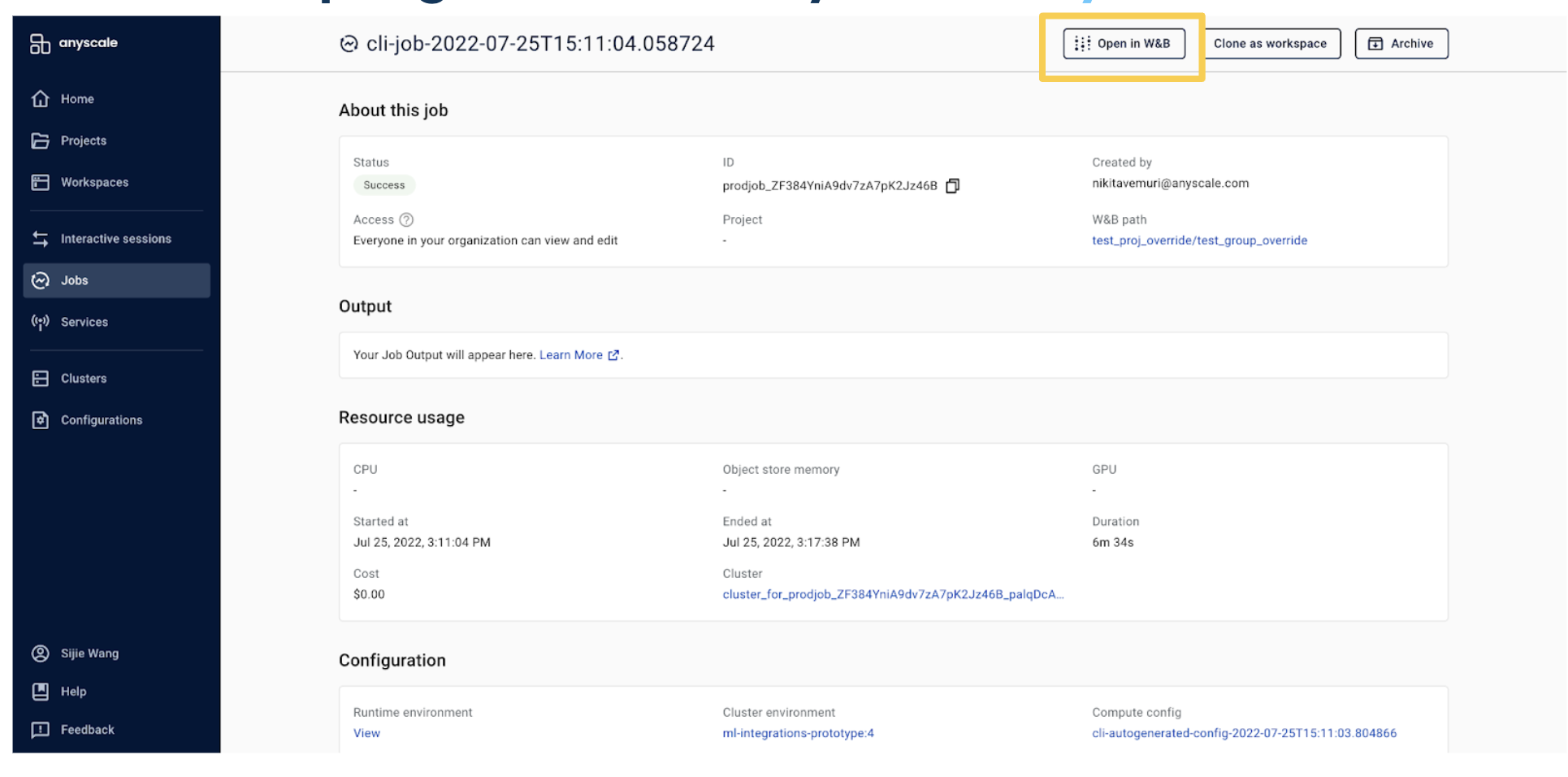

When using both Weights & Biases and Anyscale, the built-in integration provides a seamless developer experience for navigating across the two different tools:

The integration provides default values for the Weights & Biases project and group, populated based on the type of Anyscale execution that is used, with the option to override them. This allows for an easy conceptual mapping and organization of model runs in the context of the execution in Anyscale.

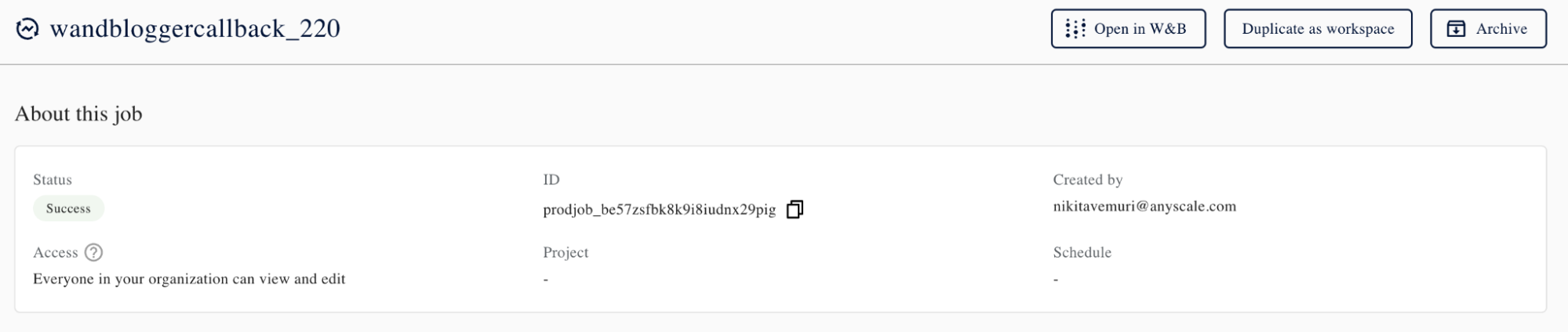

You can also navigate from the Anyscale web console page directly to the corresponding Weights & Biases resource.

Figure 3. Anyscale and Weights & Biases integration provides out-of-the-box default mappings between Weights & Biases runs and Anyscale concepts

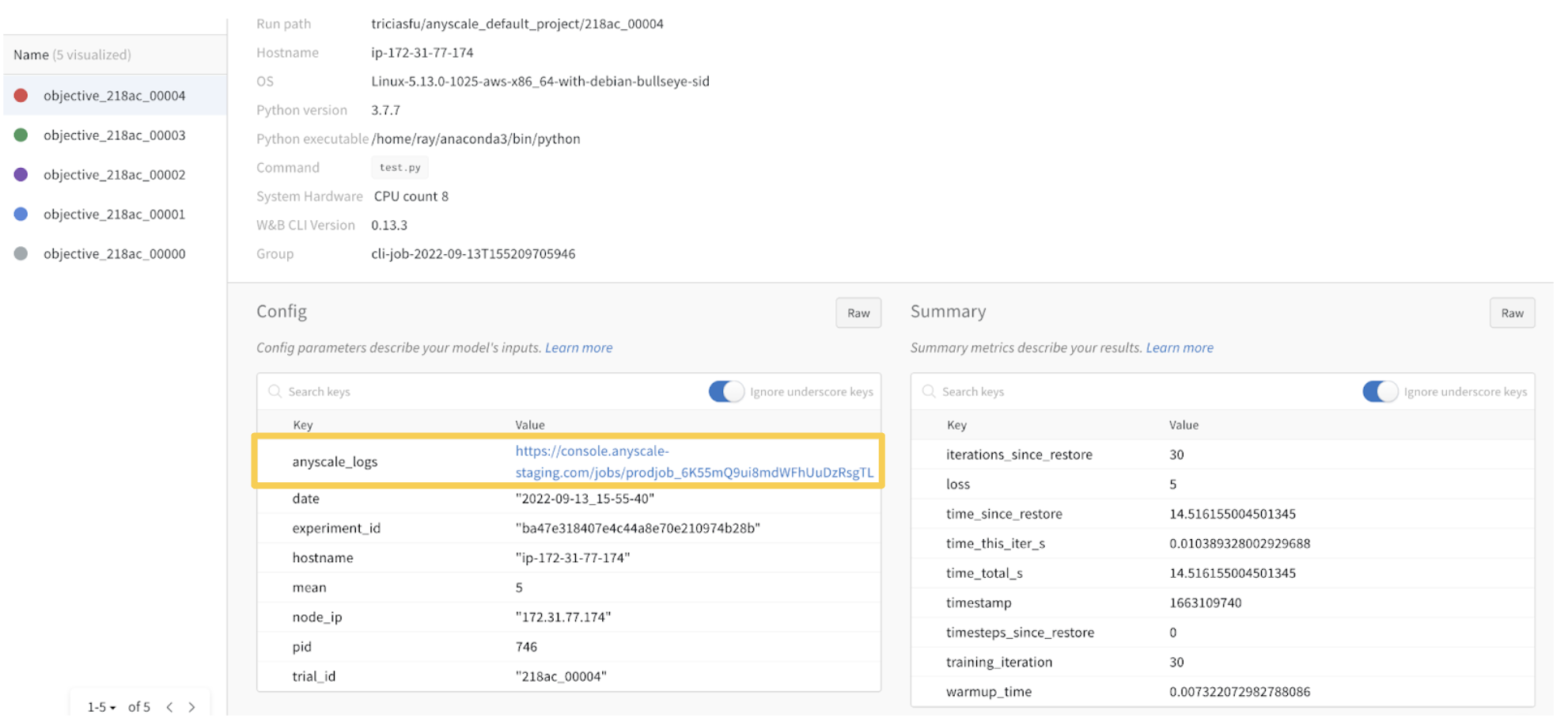

At the same time, you can click from the Weights & Biases project and trial run back to the Anyscale console.

Figure 4. Easy access to logs for each trial across tasks in Anyscale for an intuitive debugging workflow.

The integration provides bi-directional contextual linking between the two tools. This allows the ML practitioner to track all data and metadata in Weights & Biases via a simple API for experiment tracking and reproducibility, with the ability to easily toggle back to the Anyscale ML compute runtime. The practitioner can also “clone” or duplicate their ML runtime with all the necessary dependencies, which keeps the environment consistent when debugging a model in production.

Figure 5. Easy integration with a simple API for experiment tracking, reproducibility, and the ability to toggle back to Anyscale

Watch the on-demand webinar to learn more about the Anyscale and Weights & Biases integration and see a demo.