Reinforcement Learning with RLlib in the Unity Game Engine

One or two decades ago, the term “Game AI” was reserved for hard-scripted behaviors, like those of PacMan’s opponents, or for some more or less sophisticated seek, attack, and hide algorithms used by enemies and other non-player characters.

More powerful methods for creating the illusion of intelligent agents inside games - behavior trees and utility functions - came next, but all of these still required a skilled AI programmer to write a plethora of relatively static, inflexible behavior code.

Deep reinforcement learning (deep RL) is an upcoming, not-so-academic-anymore technology, which allows any character in a game (or in any non-gaming, virtual world) to autonomously adopt useful behaviors, simply via a learning-by-doing approach.

In this blog post, we will train different agents inside the Unity3D game engine, thereby observing that their initial clumsy behaviors become more and more sophisticated and clever over time. We will use Ray RLlib, a popular open-source reinforcement learning library, in connection with Unity’s ML-Agents Toolkit, to cover the heavy-lifting parts for us.

What exactly is Reinforcement Learning?

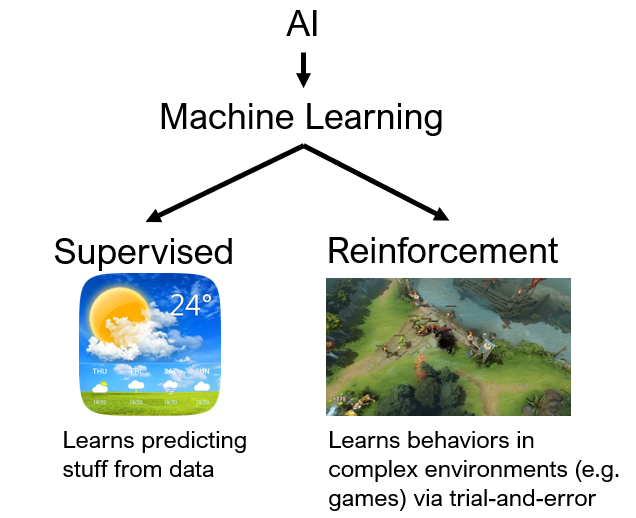

Machine learning (ML), a sub-field of AI, uses so-called “deep neural networks” (or other types of mathematical models) to flexibly learn how to interpret complex patterns. Two areas of ML that have recently become very popular due to their high level of maturity are supervised learning (SL), in which neural networks learn to make predictions based on large amounts of data, and reinforcement learning (RL), where the networks learn to make good action decisions in a trial-and-error fashion, using a simulator.

RL is the tech behind mind-boggling successes such as DeepMind’s AlphaGo Zero and StarCraft II AI (AlphaStar), or OpenAI Five (DOTA 2). RL is so powerful and promising for games because it is capable of learning continuously - sometimes even in ever-changing environments - starting with no knowledge of how to play whatsoever (random behavior).

Setup for the Experiments ahead

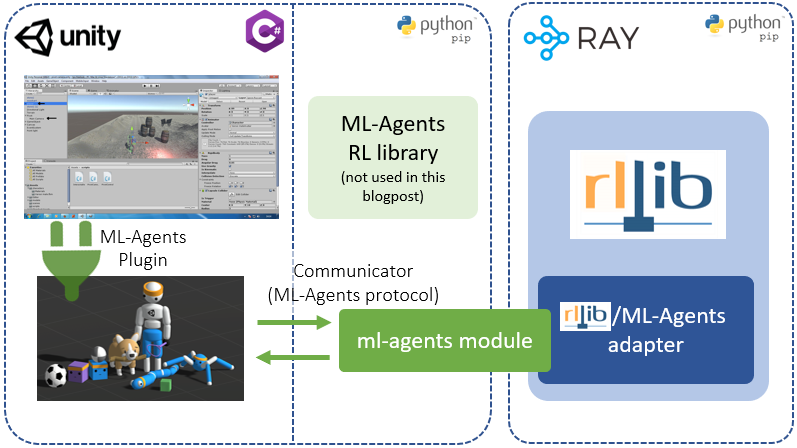

We will use the Unity3D editor as our simulation engine, running the game environment. Furthermore, we will activate the ML-Agents plugin inside the editor to allow our game characters to send observations and receive actions using ML-Agents’ TCP-based communication protocol.

On the reinforcement learning end, we will run RLlib, importing ML-Agents’ Python module (to be able to communicate over TCP), and using an adapter that translates between ML-Agents’ and RLlib’s APIs.

An alternative setup would be to replace Ray RLlib with ML-Agents’ own RL library (see figure). There are many differences between the two RL backends, and a detailed analysis would be beyond the scope of this post. But in short, RLlib caters more to reinforcement learning researchers and heavy-duty industry users due to its advanced features and its capabilities for parallelization and algorithmic customization. ML-Agents’ RL library, on the other hand, addresses the needs of game developers, who prefer a simpler API that is easier to set up and usable by non-experts, without losing the capability to solve complex RL problems.

Getting started - Installing all prerequisites

We need three things to get started: The Unity3D Editor, some python pip installs, and the ML-Agents example environments.

Installing Unity3D

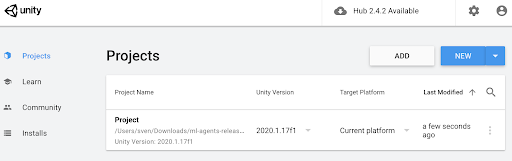

Head over to Unity’s download site and follow the instructions on how to install the engine on your computer. I found it useful to handle my Unity projects via the UnityHub that comes with the installation. From there you can manage your engine versions (e.g. add build modules) and game projects. We recommend starting with the newest engine version (2020.1.17f1 at the time of this writing), which worked without problems with the other dependencies described below.

Installing Ray’s RLlib and Unity’s ML-Agents Toolkit

Install the three required packages with a single pip command from your command line:

$ pip install ray[rllib] tensorflow mlagents==0.20.0

Quickly try things out to make sure everything is ok by typing the following on the command line:

$ python

Python 3.8.5 (default, Dec 15, 2020, 09:44:06 ...

Type "help", "copyright", "credits" or ...

>>> import ray

>>> from ray.rllib.agents import ppo

>>> import mlagents

>>>

The ML-Agents example environments

Download this zip file and unzip it into a local directory of your choice. In the UnityHub, go to Projects and add the project located within the unzipped folder (click “ADD” in the Unity Hub Projects tab, then navigate to [unzipped folder]/Project and click “Open”).

Then open the added project by clicking on it in the Hub. You may have to upgrade the Project’s Unity version to the one you installed (e.g. 2020.1.17f1) and confirm this before it starts up.

If the import commands from the previous section ran without errors and the project opens in the editor, we are all set to load and modify our first game and do a quick AI training run on it.

Our first example game - Balancing balls (a lot)

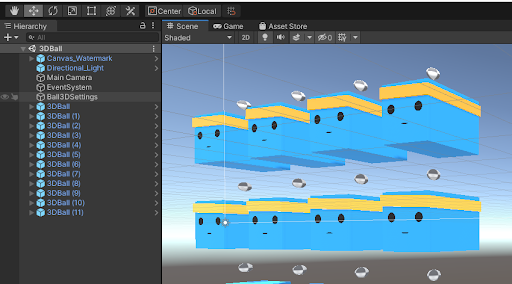

Let’s load our first scene (“3DBall”) into the opened Project and make sure we apply some necessary settings inside the game in order to make it “learnable” by RLlib. Click on “File->Open Scene” in your Unity editor and navigate to the downloaded (zip from above) ml-agents folder, inside of which you should be able to select: “Project->Assets->ML-Agents->Examples->3DBall->Scenes->3DBall.unity”

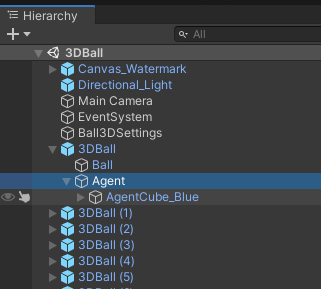

You should now see a scene similar to the one below.

Our (still untrained) agents are the blue cubes, and their task is to balance the grey balls such that these don’t fall down. If a ball does fall, the respective agent will be “reset” and a new ball will appear on top of it. Now, clicking on the “3DBall (x)” links inside the Hierarchy panel on the left should open the hierarchy for each agent.

Clicking on “Agent” within each “3DBall” should open an “Inspector” panel on the right side of your screen. In that panel, make sure that for every agent, the property “Model” is set to “None (NN Model)”, which means that we will train the neural network of this agent ourselves (and not use an already built-in, trained one).

Now let’s press the play button in the top center of the screen and watch our agents act. You can see that the agents don’t do anything and the balls keep falling down. Well, let’s fix that and make our cubes smart: RLlib to the rescue! Press Play again to stop the running game. In order to get the code that we’ll be working with in this blog, you can either git clone the entire Ray RLlib repository (which will have the script we’ll be using in the ray/rllib/examples/ folder), or just download the example python script here.

After you downloaded the script (or cloned the repo), run it via:

$ cd ray/rllib/examples

$ python unity3d_env_local.py --env 3DBall

All the script needs to train any arbitrary game is the game’s “observation” and “action” spaces. Spaces are the data types plus shapes (e.g. small RGB images would belong to a [100 x 100 x 3]-uint8 space) of the information that the RL algorithm gets to see at each game tick (time step) as well as the space of the action decisions that the algorithm is required to output (e.g. a Pacman agent has to choose at each timestep from 4 discrete actions: up, down, left, and right).
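To make the idea of a “space” more concrete, here is a minimal, standard-library-only sketch. The classes `BoxSpace` and `DiscreteSpace` are hypothetical stand-ins written for this illustration; they only mimic the idea behind the space objects RLlib works with and are not RLlib’s or ML-Agents’ own types.

```python
from dataclasses import dataclass
from math import prod
from typing import Tuple


@dataclass(frozen=True)
class BoxSpace:
    """Hypothetical stand-in for a tensor-valued space: a dtype plus a shape."""
    shape: Tuple[int, ...]
    dtype: str

    def num_values(self) -> int:
        # Total count of numbers contained in one sample of this space.
        return prod(self.shape)


@dataclass(frozen=True)
class DiscreteSpace:
    """Hypothetical stand-in for a space of n mutually exclusive choices."""
    n: int

    def contains(self, action: int) -> bool:
        # Valid actions are the integer indices 0 .. n-1.
        return 0 <= action < self.n


# Observation space from the text: a small RGB image.
image_obs_space = BoxSpace(shape=(100, 100, 3), dtype="uint8")

# Action space from the text: up, down, left, right.
PACMAN_ACTIONS = ("up", "down", "left", "right")
pacman_action_space = DiscreteSpace(n=len(PACMAN_ACTIONS))

print(image_obs_space.num_values())     # numbers the algorithm sees per tick
print(pacman_action_space.contains(3))  # index 3 ("right") lies in the space
print(pacman_action_space.contains(4))  # index 4 lies outside the space
```

The point of the sketch is that the learning algorithm never needs to know what a “ball” or a “ghost” is; the shapes and data types of its inputs and outputs are enough to build a matching neural network.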

After a few seconds, the script will ask you to press the “play” button inside your running Unity3D editor. Do so and watch what happens next: your agents will start acting - wobbly and randomly at first - as well as learning how to improve over time and become better and better. Keep in mind, though, that getting from random movements to actually smart behavior will take some time. After all, we are only running a single environment (no parallelization in the cloud), with some built-in vectorization (12 agents practicing at the same time).

After about 30 minutes (this may vary depending on the compute power of your machine), you should see the agents having learnt a perfect policy and not dropping any balls any longer:

The script makes sure it saves progress every 10th training iteration in a so-called “checkpoint”. You should be able to find the checkpoints under ~/ray_results/PPO/PPO_unity3d_[]…. If you stop the game now and take a look at this folder, you should see some checkpoints in there. You can - at any point - pick up training from an existing checkpoint, by running our script with the following command line options:

$ python unity3d_env_local.py --env 3DBall --from-checkpoint /my/chckptdir/checkpoint-280
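Since the checkpoint folders are nested fairly deeply, a small helper can pick out the newest one for you. The following is only a sketch: the function name `find_latest_checkpoint` is made up for this example, and it assumes that checkpoint files are named `checkpoint-<iteration>`, as in the command above. Adjust the pattern if your Ray version lays things out differently.

```python
import re
from pathlib import Path
from typing import Optional

# Assumption: checkpoint files are named "checkpoint-<iteration>".
CHECKPOINT_PATTERN = re.compile(r"^checkpoint-(\d+)$")


def find_latest_checkpoint(results_dir: Path) -> Optional[Path]:
    """Return the checkpoint file with the highest iteration number, if any."""
    if not results_dir.is_dir():
        return None
    best_iteration, best_path = -1, None
    # Search all sub-folders, because each training run gets its own directory.
    for path in results_dir.rglob("checkpoint-*"):
        match = CHECKPOINT_PATTERN.match(path.name)
        if match and path.is_file() and int(match.group(1)) > best_iteration:
            best_iteration, best_path = int(match.group(1)), path
    return best_path


if __name__ == "__main__":
    latest = find_latest_checkpoint(Path.home() / "ray_results" / "PPO")
    print(latest if latest else "no checkpoint found")
```

The printed path can then be passed to the script via `--from-checkpoint`.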

Even though we won’t go into any details regarding RLlib (or RL itself) in this blogpost, feel free to browse around in the unity3d_env_local.py script we have been using so far. You’ll see that we can change some parameters which control the learning behavior. For example, the learning rate (“lr”), an important parameter in machine learning, can be increased in order to learn faster (albeit making the process more unstable and learning success less likely) or decreased. You can also change the neural network architecture by changing the value under the “fcnet_hiddens” key. It determines how many nodes each of the neural network layers should have and how many layers you want altogether.
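As a rough illustration of these two settings, the sketch below shows where they sit in an RLlib-style config dictionary and how strongly the layer sizes affect the size of the network. The concrete values, the helper `count_dense_weights`, and the input/output sizes (8 and 2) are assumptions chosen for this example, not the defaults used in unity3d_env_local.py.

```python
# Illustrative values only; check the script for the settings it really uses.
config = {
    "lr": 0.0003,  # learning rate: larger means faster but less stable learning
    "model": {
        # Two hidden layers with 512 nodes each.
        "fcnet_hiddens": [512, 512],
    },
}


def count_dense_weights(num_inputs, hidden_sizes, num_outputs):
    """Count weights and biases of a plain fully connected network."""
    sizes = [num_inputs, *hidden_sizes, num_outputs]
    # Each layer has (inputs x outputs) weights plus one bias per output.
    return sum(n_in * n_out + n_out for n_in, n_out in zip(sizes, sizes[1:]))


# Hypothetical network with 8 observation values and 2 action outputs.
large = count_dense_weights(8, config["model"]["fcnet_hiddens"], 2)
small = count_dense_weights(8, [64, 64], 2)
print(f"[512, 512] -> {large} parameters, [64, 64] -> {small} parameters")
```

A larger network can represent more complex behaviors, but it also takes longer to train, so it is usually worth starting small and scaling up only when learning stalls.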

Upping the difficulty - Solving a multiplayer game

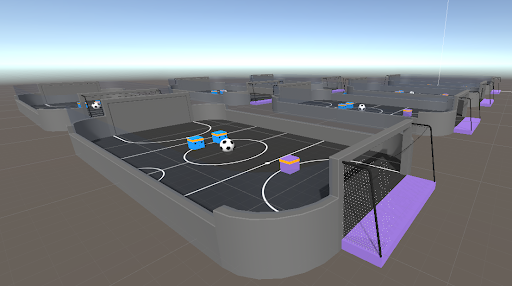

Now that we know how to set up a simple scene in Unity3D and hook it up to RLlib for autonomous learning, let’s up our game and move on to a slightly more difficult arena, in which two different types of agents have to learn how to play against each other. We’ll once more use one of the already existing Unity examples: Soccer - Strikers vs. Goalie. Well, the name is quite self-explanatory: two strikers, both of them on “team blue”, have to pass around a soccer ball and try to shoot it into the purple goal (see screenshot below). The goal on the other side of the arena has been closed by a wall; how convenient ... for the strikers. A single goalkeeper (team purple) must try to defend this goal:

The “Soccer - StrikersVsGoalie” example environment of ML-Agents: We will use RLlib to train two different policies: The two blue strikers will try to score by passing the ball across the goal line (purple field on the right side). The purple goalie will try to defend the goal.

The RLlib setup we’ll be using for this task is a little bit more complicated, due to the multi-agent configuration (take a look at the exact config being assembled in the script for the “SoccerStrikersVsGoalie” case). Again, feel free to either clone the entire Ray RLlib repo (with the script in it) or only download the single script from here. To start the training run, do:

$ python unity3d_env_local.py --env SoccerStrikersVsGoalie
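The core of a multi-agent configuration like this one is a function that decides which policy (neural network) controls which agent. Below is a minimal sketch of that idea. The agent IDs are hypothetical and only assume that each ID contains the agent type’s name; the real config assembled in the script also specifies the observation and action spaces of each policy, which are omitted here.

```python
def policy_mapping_fn(agent_id: str) -> str:
    """Route an agent to the "Striker" or the "Goalie" policy by its ID."""
    return "Striker" if "Striker" in agent_id else "Goalie"


# Hypothetical agent IDs; the IDs produced by the real environment may differ.
agent_ids = ["Striker_0", "Striker_1", "Goalie_0"]

assignments = {agent_id: policy_mapping_fn(agent_id) for agent_id in agent_ids}
for agent_id, policy in assignments.items():
    print(f"{agent_id} is controlled by policy '{policy}'")

# Both strikers share one policy, so only two networks have to be trained.
print(sorted(set(assignments.values())))
```

Sharing one policy between the two strikers means that every experience either of them collects improves the behavior of both.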

After clicking play again in the Unity editor, training will start and you should see both teams randomly acting in the arena. You can tell that this is already a much more complex task and if we kept this running in only one editor instance it would take many hours for the two teams to play in a way that somewhat resembles actual soccer. Stop the running script.

So how do we speed things up a bit? We could move stuff to the cloud, but we don’t want to do this for this blog post (we’ll show you how to do this in a follow up one, when we aim at some really tough task). Instead, let’s simply parallelize on our local machine.

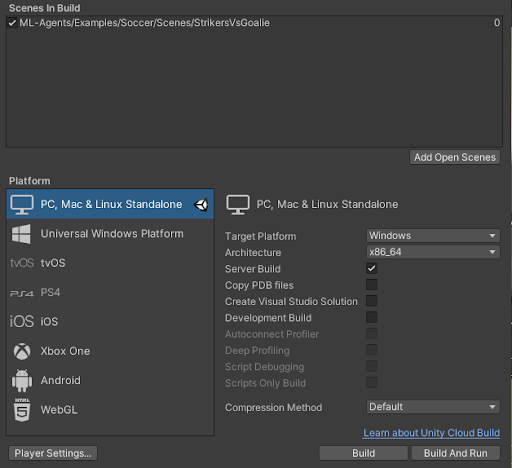

First, we’ll compile our game into a “headless” (no graphics) executable. Click on File -> Build Settings, then make sure your configuration roughly looks like this (tick Server Build for a headless version and select your own operating system):

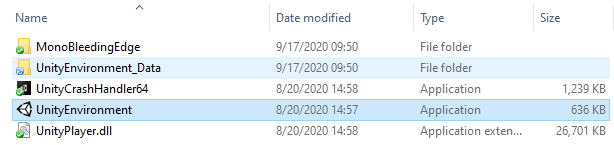

Click “Build” and choose an existing(!) target folder for your executable. Make sure the folder is empty, as I otherwise often ran into compile errors and DLLs not being copied correctly. If the build goes well, you should see your executables like this (depending on your OS, your files may differ):

Restart the script with the same command line as before, except this time specify the compiled executable and the number of “workers” we would like to use:

$ python unity3d_env_local.py --env SoccerStrikersVsGoalie --num-workers 2 --file-name c:\path\to\UnityEnvironment.exe

In RLlib, a “worker” is a parallelized Ray process that runs in the background, collecting data from its own copy of the environment (the compiled game) and sending this data to a centralized “driver” process (basically our script). Each worker has its own copy of the neural network, which it uses to compute actions for each game frame. From time to time, the workers’ neural networks get updated from the driver’s constantly learning one. Doing things in this distributed way, we should now see progress (even if not perfect behavior) after about 8-16 hours:
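The driver/worker interplay described above can be mimicked with a toy example. This is deliberately not RLlib code: `ToyWorker` and `train` are invented for this sketch, a single number stands in for the neural network, a random number generator stands in for the game, and threads stand in for Ray processes. The “task” is simply to learn that the output should be twice the input.

```python
import random
from concurrent.futures import ThreadPoolExecutor


class ToyWorker:
    """Stand-in for a worker: own environment copy, own weight copy."""

    def __init__(self, seed: int):
        self.rng = random.Random(seed)  # plays the role of the game copy
        self.weight = 0.0               # plays the role of the network copy

    def set_weight(self, weight: float) -> None:
        self.weight = weight

    def sample(self, num_steps: int):
        # Act with the local weight copy and record (input, output) pairs.
        batch = []
        for _ in range(num_steps):
            x = self.rng.random()
            batch.append((x, self.weight * x))
        return batch


def train(num_workers=2, iterations=30, steps_per_worker=10, lr=0.5):
    workers = [ToyWorker(seed=i) for i in range(num_workers)]
    driver_weight = 0.0
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        for _ in range(iterations):
            # 1) Broadcast the driver's current weight to every worker.
            for worker in workers:
                worker.set_weight(driver_weight)
            # 2) Workers collect data in parallel from their own environment.
            batches = pool.map(lambda w: w.sample(steps_per_worker), workers)
            samples = [pair for batch in batches for pair in batch]
            # 3) Driver learns: one gradient step on the squared error to 2 * x.
            grad = sum(2 * (y - 2 * x) * x for x, y in samples) / len(samples)
            driver_weight -= lr * grad
    return driver_weight


if __name__ == "__main__":
    print(f"learned weight: {train():.4f} (target: 2.0)")
```

Adding workers in this toy does not change what is learned, only how much data arrives per iteration, which is the same reason more RLlib workers shorten the wall-clock time of a training run.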

What’s next?

In a future blog post, we will be exploring more difficult gaming tasks, the solving of which will require more sophisticated RL technologies (such as “Curiosity” and other cool tricks). We’ll cover the Unity Pyramids environment and possible solutions as well as give you many more insights into configuring and customizing your RLlib + Unity3D workflow.

Also consider joining the Ray discussion forum. It’s a great place to ask questions, get help from the community, and - of course - help others as well.