Ray Summit Series - Scaling Parallel Python Jobs

This is the third installment of our Ray Summit stories. The first installment covered how users train LLMs with Ray, and the second covered how users build their own ML platforms on Ray. In this blog, we go over how users run many Python jobs in parallel at massive scale with Ray. This kind of scaling takes many forms: you can use Ray to run one million jobs in record time, scale out many simulations, or scale the number of experiments in a hyperparameter tuning job.

Scaling Instacart fulfillment ML on Ray

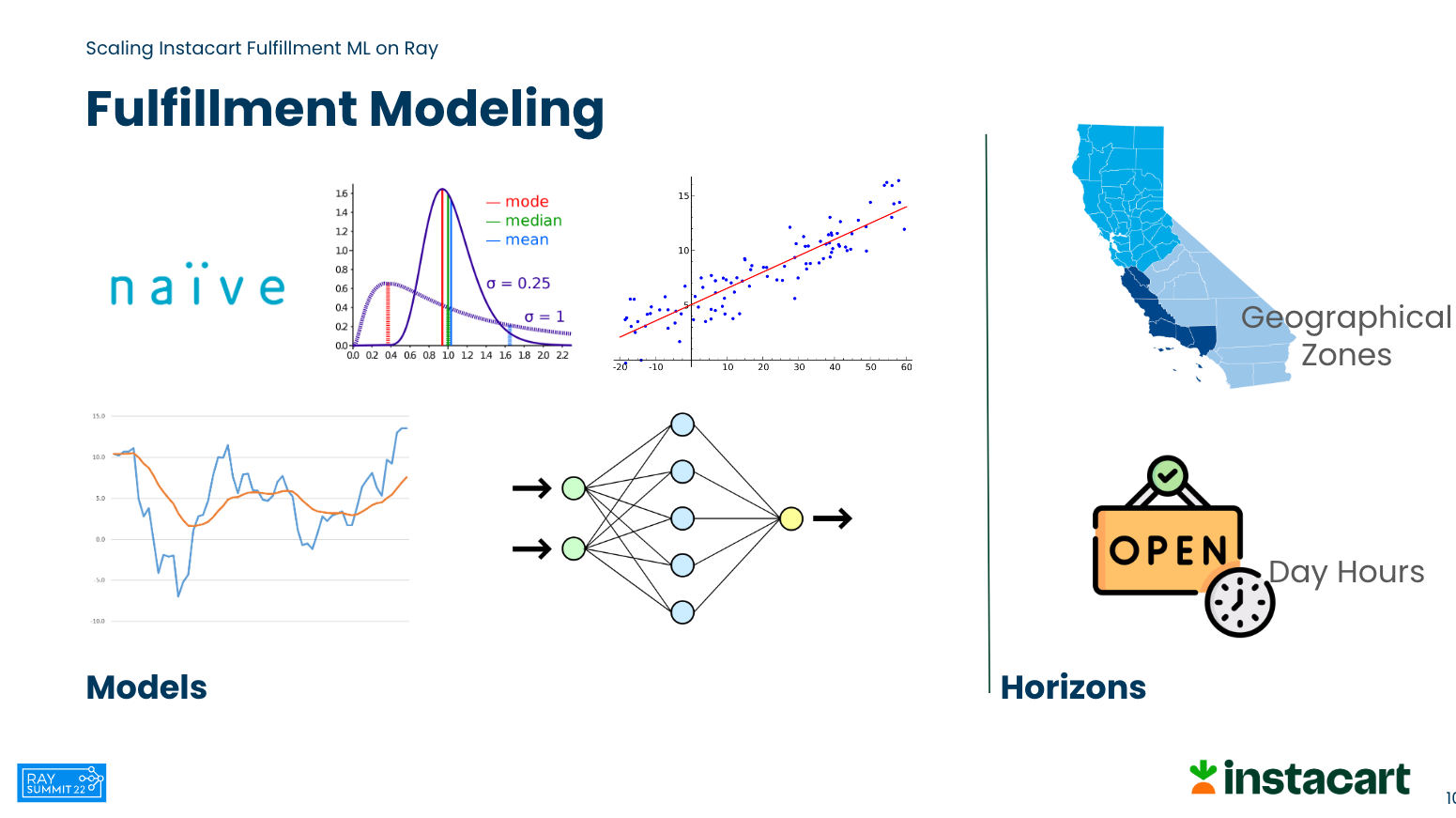

The Instacart fulfillment marketplace, which operates in the US and Canada, plays an integral part in the life cycle of every order, from optimizing delivery routes to predicting supply and demand. As part of their fulfillment workload, Instacart needs to create thousands of models based on different attributes such as geographical zone, time of day, number of shoppers (supply), and number of orders (demand). These models are stateless (trained from scratch every time) and are based on a window of data. This is akin to a dynamic pricing use case or the models powering the ETA in your mobile app.

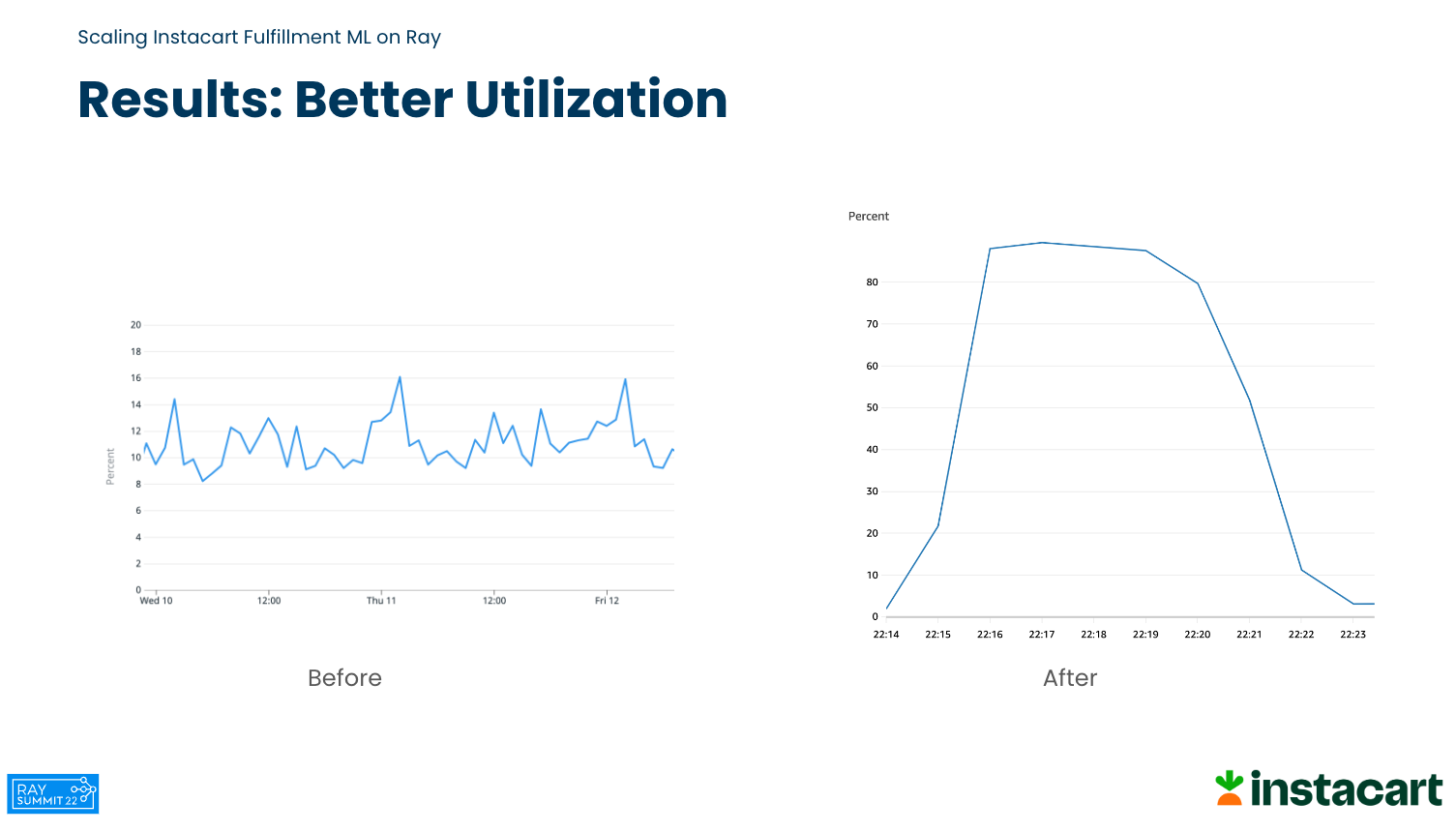

By migrating from their AWS ECS, Celery, and Redis stack to Ray, Instacart simplified their deployment and achieved ~10x better cost performance.

Example: Realtime Supply-Demand Gap Forecasting Model

- 750 zones, each with 2 models (supply + demand) = 1,500 unique training jobs
- Computation resources: 10 m6a.4xlarge instances
  - Before: 10 concurrent Celery workers
  - Now: 70 Ray workers
- Training completion time
  - Before: ~4 hours on Celery
  - Now: 20 minutes on Ray clusters
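To make the fan-out pattern in this example concrete, here is a minimal sketch of how one training job per zone and model type could be submitted as Ray tasks. It is not Instacart's code: `build_jobs`, `train_one`, and `train_all` are hypothetical names, and `train_one` is a placeholder for the real model fitting. It only assumes the standard `ray.init`, `ray.remote`, and `ray.get` calls.

```python
from itertools import product

MODEL_KINDS = ("supply", "demand")  # two models per zone, as in the example above


def build_jobs(zone_ids):
    """Return one (zone_id, kind) pair for every model that needs training."""
    return list(product(zone_ids, MODEL_KINDS))


def train_one(zone_id, kind):
    """Hypothetical placeholder for fitting one stateless model on a data window."""
    return {"zone": zone_id, "kind": kind, "status": "trained"}


def train_all(zone_ids):
    """Submit every job as an independent Ray task and gather the results."""
    import ray  # imported lazily so the helpers above can be used without Ray

    ray.init(ignore_reinit_error=True)
    remote_train = ray.remote(train_one)
    futures = [remote_train.remote(zone, kind) for zone, kind in build_jobs(zone_ids)]
    return ray.get(futures)  # blocks until all tasks have finished
```

Calling `train_all(range(750))` would submit 1,500 tasks. Because each model is stateless and trained from scratch, the tasks share nothing and Ray can schedule them across however many workers the cluster provides.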

Check out the full talk:

Ray for distributed mixed integer optimization at Dow Chemicals

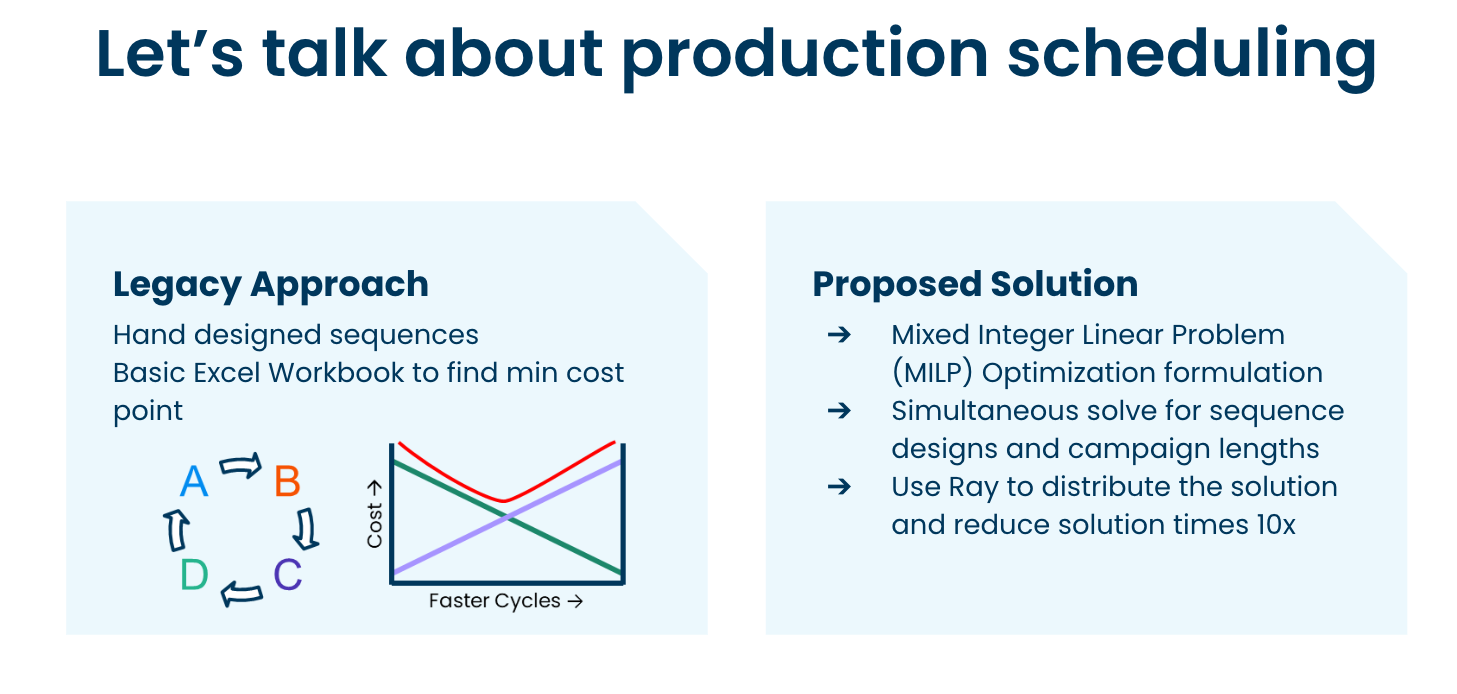

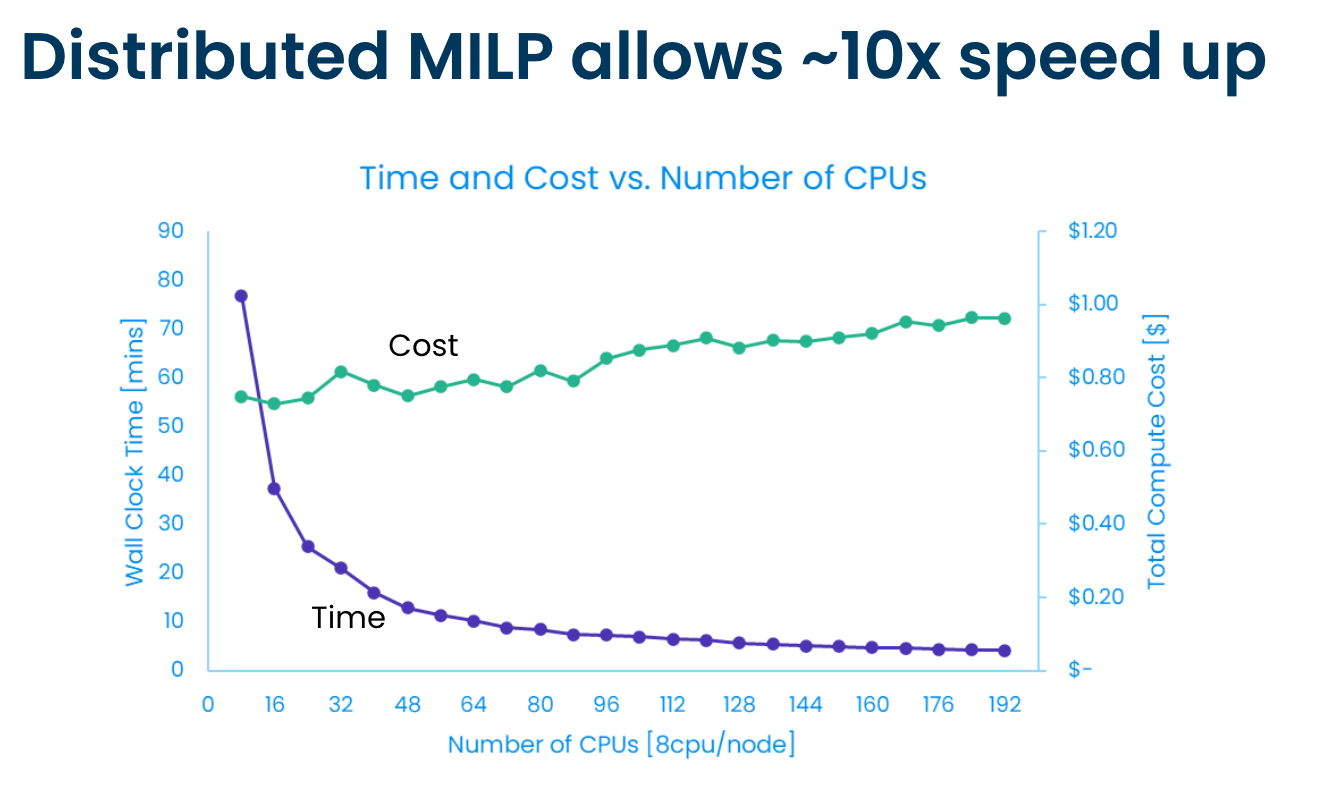

Production schedule design at Dow is a complex process that involves designing production cycles for multiple production units subject to manufacturing and scheduling constraints. Formulating this problem as a single large mixed integer linear program (MILP) is computationally expensive. To solve it in a reasonable time, Dow introduced a multi-agent decomposition approach that splits the problem between two hierarchical agents.

A MILP doesn’t scale well as the number of products being optimized grows, and solve times become too long. To address this, Dow introduced a hybrid sampling and simulation approach that uses Ray to distribute independent samples. Each sample randomly generates a campaign sequence, solves a sub-problem of the original mixed integer linear program, and collects the result. This repeats until a target number of samples is reached.
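The sampling loop described above can be sketched roughly as follows. This is an illustration under stated assumptions, not Dow's implementation: the "sub-problem" here is a toy changeover-cost sum standing in for a real MILP solve, and `sample_sequence`, `solve_subproblem`, `run_sample`, and `best_of_samples` are all hypothetical names. Only the `ray.init`, `ray.remote`, and `ray.get` calls come from Ray itself.

```python
import random


def sample_sequence(products, rng):
    """Randomly generate a campaign sequence (a permutation of the products)."""
    sequence = list(products)
    rng.shuffle(sequence)
    return sequence


def solve_subproblem(sequence, changeover):
    """Toy stand-in for the MILP sub-problem: total changeover cost of a sequence."""
    return sum(changeover[a][b] for a, b in zip(sequence, sequence[1:]))


def run_sample(products, changeover, seed):
    """One independent sample: draw a sequence, solve it, return (cost, sequence)."""
    rng = random.Random(seed)  # per-sample seed keeps samples reproducible
    sequence = sample_sequence(products, rng)
    return solve_subproblem(sequence, changeover), sequence


def best_of_samples(products, changeover, n_samples):
    """Distribute n_samples independent samples with Ray and keep the cheapest."""
    import ray  # imported lazily so the helpers above can be used without Ray

    ray.init(ignore_reinit_error=True)
    remote_sample = ray.remote(run_sample)
    futures = [remote_sample.remote(products, changeover, seed) for seed in range(n_samples)]
    return min(ray.get(futures), key=lambda result: result[0])
```

Because every sample depends only on its own seed, the samples are independent and can run on any worker in any order, which is what makes this approach straightforward to parallelize.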

By breaking down the problem and using Ray to parallelize the optimization, Dow obtained 10x better performance.

Check out the full talk:


Reducing cost, latency, and manual efforts in hyperparameter tuning at Ridecell

Ridecell uses Ray Tune to efficiently search the hyperparameters of the CV models that power their Lane Detection and Object Detection algorithms, which identify 3D positions, models, and interactions. Their products are leading the way in the digital transformation of fleet businesses and operations. Ridecell’s Fleet Automation and Mobility solutions modernize and monetize fleets by combining data insights with digital vehicle control to turn today’s manual processes into automated workflows. The result is unmatched levels of efficiency and control for shared services, motor pools, rental, and logistic fleets.

This talk highlights some of the challenges of training many deep learning models, which can take thousands of GPU hours and cost thousands or even millions of dollars. Ray Tune lets them optimize their trials and stop unpromising ones early. The talk also covers logging and parameter search libraries that streamline the grid search process and prevent costly mistakes.
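As a rough illustration of early stopping with Ray Tune, the sketch below searches a single learning rate with the ASHA scheduler, which halts trials that lag behind their peers. It is not Ridecell's setup: `toy_loss`, `ToyTrainable`, and `run_search` are hypothetical, the loss curve is made up, and the sketch assumes a Ray 2.x release where `tune.Tuner`, `tune.TuneConfig`, `tune.Trainable`, and `ASHAScheduler` are available.

```python
def toy_loss(lr, step):
    """Hypothetical loss curve: lowest near lr = 0.01 and shrinking with more steps."""
    return (lr - 0.01) ** 2 * 1e4 + 1.0 / (step + 1)


def run_search(num_samples=20, max_steps=50):
    """Run a small Ray Tune search where ASHA stops weak trials early."""
    # Imported lazily so toy_loss can be used without Ray installed.
    from ray import tune
    from ray.tune.schedulers import ASHAScheduler

    class ToyTrainable(tune.Trainable):
        def setup(self, config):
            self.lr = config["lr"]
            self.steps = 0

        def step(self):
            # Each call is one training iteration; the returned metrics are
            # what the scheduler uses to decide whether to stop the trial.
            self.steps += 1
            return {"loss": toy_loss(self.lr, self.steps)}

    tuner = tune.Tuner(
        ToyTrainable,
        param_space={"lr": tune.loguniform(1e-4, 1e-1)},
        tune_config=tune.TuneConfig(
            metric="loss",
            mode="min",
            num_samples=num_samples,
            # Trials run at least 5 iterations and at most max_steps.
            scheduler=ASHAScheduler(max_t=max_steps, grace_period=5),
        ),
    )
    return tuner.fit().get_best_result().config
```

With expensive GPU trials, the savings come from the scheduler cutting off poor configurations after only a few iterations instead of running each one to completion.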

Watch the full talk: