Ray Serve: Tackling the cost and complexity of serving AI in production

By Akshay Malik, Edward Oakes and Phi Nguyen | September 25, 2023

Announcing Ray Serve and Anyscale Services general availability

Three years ago we published a blog post about how serving machine learning models is broken. Since then, we have been hard at work building a better approach that is flexible, performant, and scalable.

Over this time, we’ve seen many companies and individuals go to production with Ray Serve and Anyscale Services for a wide variety of AI applications, including large language models, multi-model video processing applications, high-scale e-commerce applications, and many others. Since January 2023, usage of Ray Serve and Anyscale Services has grown by over 600%.

Today, we are excited to announce that Ray Serve (our open-source model serving library built on top of Ray) and Anyscale Services (managed production deployments of Ray Serve in a customer’s cloud) are generally available.

In this blog post, we outline the common challenges we’ve identified by working with Ray Serve users, and then cover how Ray Serve and Anyscale Services solve them by improving time to market, reducing cost, and ensuring production reliability.

Modern challenges of putting AI in production

With the boom in deep learning and generative AI over the last two years, AI applications have become more prevalent, complex, and expensive. As more individuals and companies get serious about deploying innovative AI applications in production, the challenges of doing so are top of mind.

In our discussions with AI practitioners from small shops to large enterprises, we identified three common problems in the industry:

Improving time to market: the AI landscape moves fast, and AI practitioners need to keep pace. Reducing the time from development to production and adopting the latest ML techniques means delivering more value to end users. Existing patterns like microservices add complexity through increased development and operational overhead.

Reducing cost: AI applications need dramatically more hardware than traditional web services, so they require flexibility and dynamic scaling. GPU cost and scarcity are only making this worse. Deploying each model as its own microservice, as most solutions on the market do, can lead to poor hardware utilization and high operational overhead.

Ensuring production reliability: AI applications increasingly power serious, high-value use cases. AI developers need to trust that they can run a complex application 24/7 and respond to incidents. This challenge is compounded by the need to optimize for cost and developer productivity at the same time.

Ray Serve and Anyscale Services are purpose-built to solve these challenges.

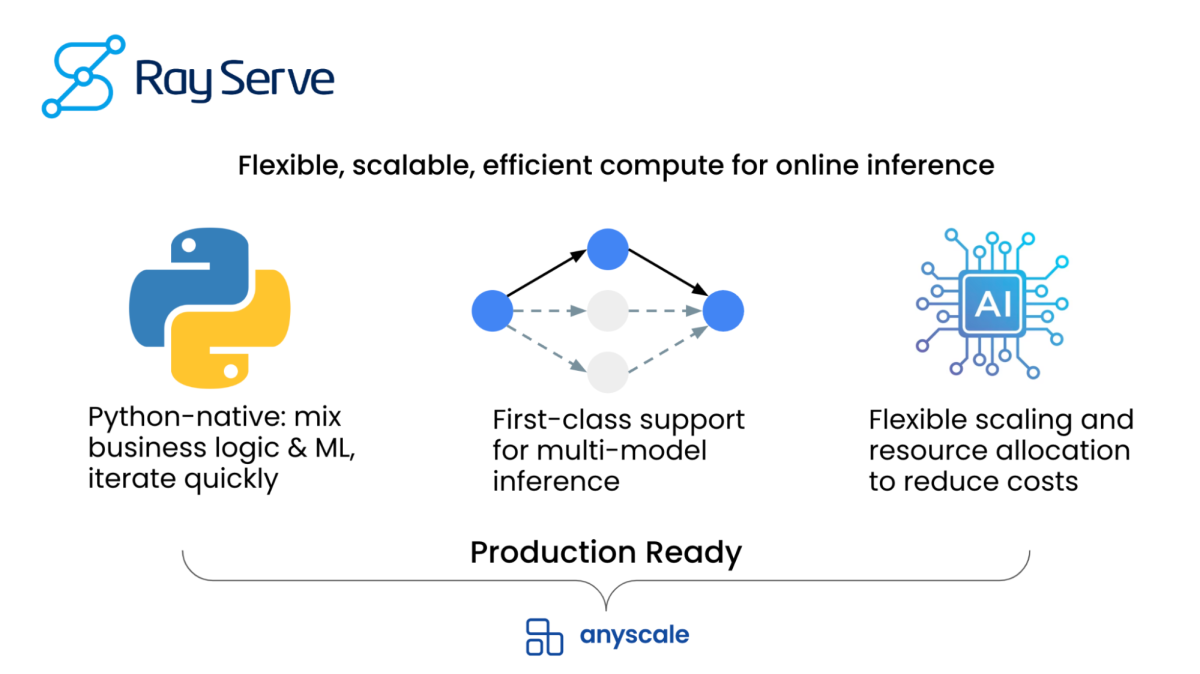

Ray Serve offers simplicity, flexibility, and scaling to give you the best of both worlds: helping you reduce costs and get the most out of your hardware while offering a simple Python-centric development experience for local development and testing.

Anyscale Services manages deployment infrastructure and integrations, and is heavily production-tested. This ensures that you can run in production reliably and build innovative AI applications without worrying about maintaining infrastructure. Anyscale Services also adds further hardware optimizations to reduce cost and maximize GPU availability.

The combination of Ray Serve on Anyscale Services helps you optimize the full serving stack: the model, application, and hardware layers.

Improving time to market

Reducing the time from ideation to production is critical. Whether you are proving out value in the POC stage or improving applications over time, making researchers and engineers productive is a top priority, and infrastructure should reflect it.

Microservices are a popular architecture for AI applications because of the benefits they brought to web applications, such as independent scaling and deployment across teams. However, this architecture can make local development and testing painful and adds high DevOps overhead for deploying many models and pieces of business logic separately.

AI practitioners need to be able to test their applications easily and not worry about infrastructure setup. A model serving solution should require few changes from research code to production, improving iteration speed.

Developer-centric Python API for writing AI applications

Ray Serve provides a simple Python interface for serving ML models and building AI applications. This is one of the primary reasons developers love Ray Serve.

You can build and deploy complex ML applications with only a few code annotations, leveraging the simplicity and expressiveness of Python. Ray Serve also integrates with FastAPI, enabling you to build end-to-end applications, not just the ML inference component. Further, you can run the same code on your local machine during development as in production. A recent a16z blog post outlined reference architectures for LLM applications; these types of applications can be built seamlessly using Ray Serve. You do not need to learn any new concepts about Kubernetes or other serving infrastructure to build a production-grade AI application.

Sample Ray Serve code that creates a production-grade application chaining a summarization model and a translation model:

```python
from starlette.requests import Request

from ray import serve
from ray.serve.handle import DeploymentHandle

from transformers import pipeline


@serve.deployment
class Translator:
    def __init__(self):
        # Load an English-to-French translation model once per replica.
        self.model = pipeline("translation_en_to_fr", model="t5-small")

    def translate(self, text: str) -> str:
        model_output = self.model(text)
        return model_output[0]["translation_text"]


@serve.deployment
class Summarizer:
    # Assumes a recent Ray release in which bound deployments are passed in
    # as DeploymentHandles.
    def __init__(self, translator: DeploymentHandle):
        # Load the summarization model and keep a handle to the Translator.
        self.model = pipeline("summarization", model="t5-small")
        self.translator = translator

    def summarize(self, text: str) -> str:
        model_output = self.model(text, min_length=5, max_length=15)
        return model_output[0]["summary_text"]

    async def __call__(self, http_request: Request) -> str:
        # The request body is expected to be a JSON-encoded string of English text.
        english_text: str = await http_request.json()
        summary = self.summarize(english_text)
        # Call the Translator deployment; awaiting the response yields its result.
        return await self.translator.translate.remote(summary)


# Compose the two deployments into one application with Summarizer as the ingress.
app = Summarizer.bind(Translator.bind())
```

Managed deployment infrastructure

Anyscale Services further accelerates AI development by managing the infrastructure for fault tolerance and deployment, allowing you to focus on your application code instead of the infrastructure. We support zero-downtime upgrades and canary rollouts for model or application code updates. Anyscale also features Workspaces, a fully managed development environment for building and testing Ray applications at scale. Workspaces come with a rich set of model serving templates to use as a guide for building your own application, enabling new customers to deploy their first Anyscale Service in as little as an hour.
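Anyscale manages rollouts for you, but the idea behind a canary rollout is easy to sketch. The minimal example below assumes hash-based bucketing on a request or user ID (our own illustrative choice, not a description of how Anyscale routes traffic) and sends a fixed percentage of traffic to the new version while keeping routing stable for each caller:

```python
import hashlib
from typing import Iterable


def route_request(request_id: str, canary_percent: int) -> str:
    """Hypothetical router: send a stable slice of traffic to the canary version.

    Hashing the ID (instead of sampling randomly) keeps routing deterministic,
    so the same caller always lands on the same version during the rollout.
    """
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    digest = hashlib.sha256(request_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100  # bucket in [0, 99]
    return "canary" if bucket < canary_percent else "stable"


def rollout_share(request_ids: Iterable[str], canary_percent: int) -> float:
    """Fraction of the given requests that would be routed to the canary."""
    routed = [route_request(r, canary_percent) for r in request_ids]
    return routed.count("canary") / len(routed)
```

Ramping `canary_percent` in steps (for example 5, then 50, then 100) while watching error rates is the usual pattern; because routing is hash-based, a given caller does not flip between versions as long as the percentage stays fixed.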

"Our initial GPU real-time inference pipeline solution using Redis and AWS ECS was effective but had limitations. It was complex to develop, troubleshoot and deploy and didn't scale for complex inference graph, lacked seamless research-to-production transition, and suffered from resource underutilization. With Anyscale Services, we were able to increase the productivity of our researchers as well as reducing the time to market while increasing efficiency, uptime and reliability on our production applications."

- Drew Barclay, ML platform lead, Entrupy

Reducing cost

AI applications require dramatically more compute resources than previous-generation web services. Typical web services are IO-bound, while modern AI applications require a lot of compute for each request. Preprocessing and ML inference can occupy a CPU or GPU for up to several seconds per request, so handling user traffic often requires many CPU and GPU instances. This is especially true for large generative AI models like LLMs.
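A back-of-the-envelope estimate shows why compute-bound requests translate into so many instances. The sketch below applies Little's law (requests in flight = arrival rate times time per request); the traffic and latency figures in the usage note are hypothetical, chosen only to contrast a compute-bound model with an IO-bound service:

```python
import math


def replicas_needed(qps: float, seconds_per_request: float,
                    concurrency_per_replica: int = 1) -> int:
    """Estimate replica count with Little's law.

    in_flight = arrival rate * time each request is held, and each replica
    can hold `concurrency_per_replica` requests at once.
    """
    in_flight = qps * seconds_per_request
    return math.ceil(in_flight / concurrency_per_replica)
```

With 100 requests per second that each hold a GPU for 2 seconds, one request per GPU at a time, `replicas_needed(100, 2.0)` returns 200. An IO-bound web service handling the same 100 QPS at 50 ms per request, with 100 concurrent requests per instance, gets `replicas_needed(100, 0.05, 100)`, a single instance.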

These hardware requirements make AI applications both important and difficult to optimize. This is only made worse by the fact that GPU pricing has skyrocketed recently due to increased demand and limited supply.

An ML serving framework needs to be built with flexibility and dynamic scaling in mind. It must be easy to define and update a scaling pattern and to integrate with state-of-the-art techniques to optimize your models’ hardware utilization. However, most model serving frameworks are built like microservices, with one model behind each endpoint. This leads to high operational overhead, poor hardware utilization, and a painful experience when trying to evolve an architecture.

Flexibility and scaling

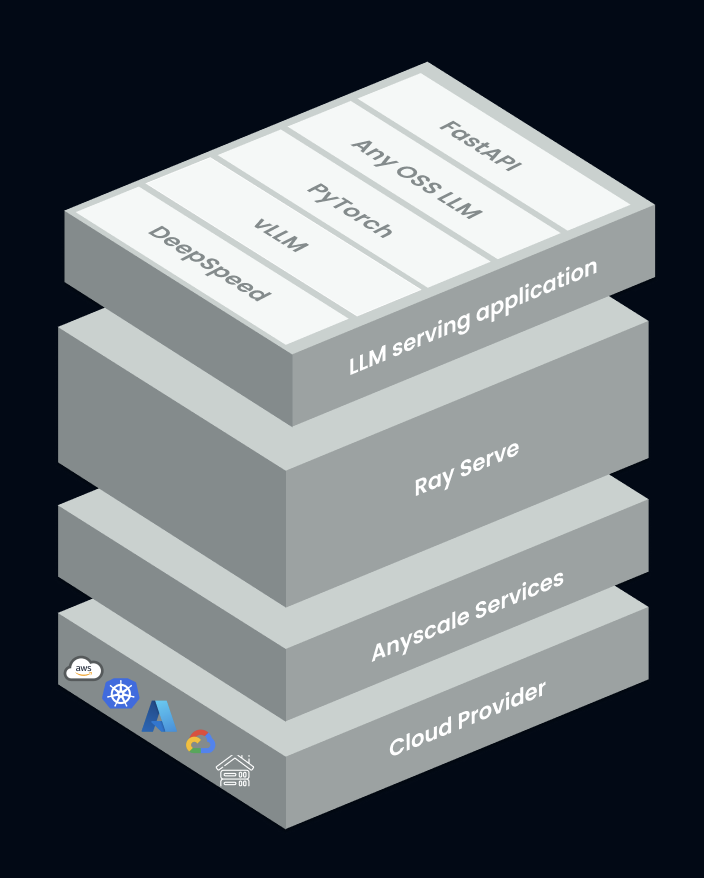

Recently we launched Anyscale Endpoints with the lowest price for the Llama-2-70b model at $1 per million tokens, around 50% lower than OpenAI’s GPT-3.5. These cost savings are enabled by the flexibility of Ray Serve and proprietary optimizations in Anyscale. Ray Serve is framework-agnostic, allowing you to run any model framework, such as PyTorch or TensorFlow, and model optimizers like TensorRT and DeepSpeed, among others. As an example, we were able to integrate continuous batching and vLLM into our open-source LLM serving library in less than a week, unlocking 23x higher QPS.
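Continuous batching is one reason LLM throughput can improve so sharply. The toy model below is our own simplification (it counts decode steps only and ignores prefill, KV-cache memory limits, and scheduling overhead; it is not vLLM's or Ray Serve's scheduler). It compares static batching, where a batch runs until its longest sequence finishes, with continuous batching, where a finished sequence's slot is refilled immediately:

```python
from collections import deque
from typing import List


def static_batching_steps(output_lengths: List[int], batch_size: int) -> int:
    """Each batch runs until its longest sequence finishes, then the next starts."""
    steps = 0
    for start in range(0, len(output_lengths), batch_size):
        steps += max(output_lengths[start:start + batch_size])
    return steps


def continuous_batching_steps(output_lengths: List[int], batch_size: int) -> int:
    """Finished sequences leave after every decode step; free slots refill at once."""
    queue = deque(output_lengths)
    active: List[int] = []  # tokens still to generate for each in-flight sequence
    steps = 0
    while queue or active:
        # Fill any free slots from the waiting queue before the next step.
        while queue and len(active) < batch_size:
            active.append(queue.popleft())
        # One decode step emits one token for every active sequence.
        active = [remaining - 1 for remaining in active]
        active = [remaining for remaining in active if remaining > 0]
        steps += 1
    return steps
```

For output lengths `[8, 1, 8, 1]` and a batch size of 2, static batching takes 16 decode steps while continuous batching takes 9, because the short requests no longer leave slots idle behind the long ones.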

Anyscale will also showcase integrations with TensorRT-LLM and Triton in a future release, as part of our new collaboration with NVIDIA. Whereas other solutions often lock you into one optimization over another, Ray Serve lets you use them all, making it a truly future-proof serving solution.

Ray Serve also has built-in auto-scaling, support for fractional GPUs/resources, and dynamic request batching. Ray Serve can support heterogeneous clusters that include different hardware types. These features allow you to tailor your scaling strategy to your application.
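Dynamic request batching trades a small amount of queueing delay for much better accelerator utilization. In Ray Serve this is exposed through the `@serve.batch` decorator, whose `max_batch_size` and `batch_wait_timeout_s` parameters bound the batch size and the wait. The standalone asyncio sketch below illustrates the same idea without Ray; the `DynamicBatcher` class is hypothetical and is not how `@serve.batch` is implemented:

```python
import asyncio
from typing import Awaitable, Callable, List, Optional, Tuple


class DynamicBatcher:
    """Hypothetical helper: groups concurrent requests into one batched model call."""

    def __init__(
        self,
        batch_fn: Callable[[List[str]], Awaitable[List[str]]],
        max_batch_size: int = 4,
        batch_wait_timeout_s: float = 0.01,
    ):
        self.batch_fn = batch_fn
        self.max_batch_size = max_batch_size
        self.batch_wait_timeout_s = batch_wait_timeout_s
        # Construct this inside a running event loop (older Python versions
        # bind the queue to the current loop at creation time).
        self.queue: "asyncio.Queue[Tuple[str, asyncio.Future]]" = asyncio.Queue()
        self.worker: Optional[asyncio.Task] = None

    async def submit(self, item: str) -> str:
        """Enqueue one request and wait for its result from a batched call."""
        if self.worker is None:
            self.worker = asyncio.create_task(self._run())
        future = asyncio.get_running_loop().create_future()
        await self.queue.put((item, future))
        return await future

    async def _run(self) -> None:
        loop = asyncio.get_running_loop()
        while True:
            # Block until at least one request is waiting.
            batch = [await self.queue.get()]
            deadline = loop.time() + self.batch_wait_timeout_s
            # Keep collecting until the batch is full or the wait budget runs out.
            while len(batch) < self.max_batch_size:
                remaining = deadline - loop.time()
                if remaining <= 0:
                    break
                try:
                    batch.append(await asyncio.wait_for(self.queue.get(), remaining))
                except asyncio.TimeoutError:
                    break
            # One model call for the whole batch; results map back by position.
            results = await self.batch_fn([item for item, _ in batch])
            for (_, future), result in zip(batch, results):
                future.set_result(result)
```

Five concurrent `submit` calls with `max_batch_size=4` are typically served by two calls to the model function rather than five.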

"Ant Group has deployed Ray Serve on 240,000 cores for model serving, which has increased by about 3.5 times compared to last year. The peak throughput during Double 11, the largest online shopping day in the world, was 1.37 million transactions per second. Ray allowed us to scale elastically to handle this load and to deploy ensembles of models in a fault tolerant manner."

- Tengwei Cai, Staff Engineer, Ant Group

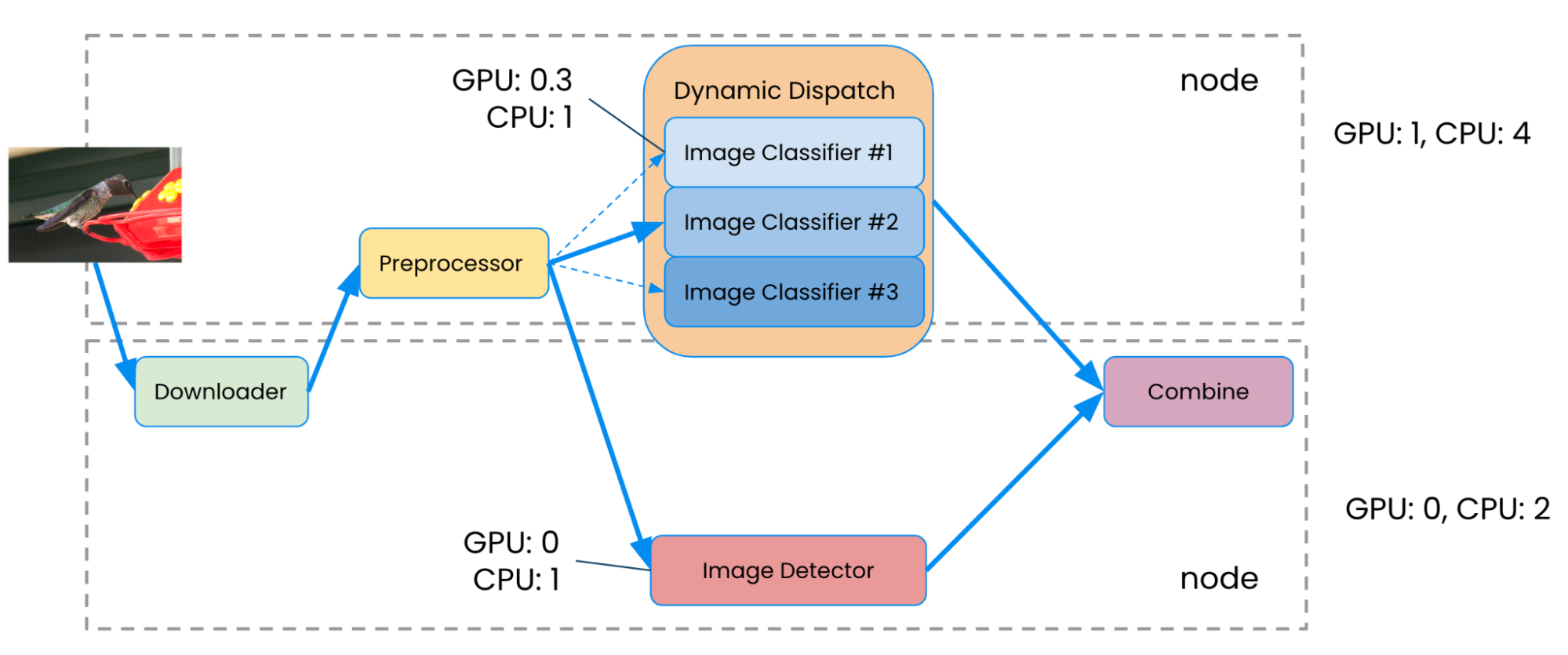

Hardware efficiency for serving many models

While Ray Serve can deploy single models efficiently, its scaling flexibility and APIs are even more powerful for building complex ML applications with many models and business logic. Model composition is one of our most popular features: it allows you to chain together multiple models, each with its own hardware requirements, and let them scale independently using Ray Serve autoscaling.
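Independent scaling in a composed application means each deployment computes its own replica count from its own load. The simplified policy below is loosely modeled on the knobs in Ray Serve's autoscaling config (minimum and maximum replicas and a target number of ongoing requests per replica), but the class and formula are our own illustration; Ray Serve's actual autoscaler has additional settings, such as upscale and downscale delays.

```python
import math
from dataclasses import dataclass


@dataclass
class AutoscalingPolicy:
    """Hypothetical per-deployment policy (illustrative, not Ray Serve's code)."""

    min_replicas: int
    max_replicas: int
    target_ongoing_requests: float  # desired in-flight requests per replica

    def desired_replicas(self, total_ongoing_requests: float) -> int:
        # Enough replicas that each carries roughly the target load...
        raw = math.ceil(total_ongoing_requests / self.target_ongoing_requests)
        # ...clamped to the configured bounds.
        return max(self.min_replicas, min(self.max_replicas, raw))


# Each deployment in a composed application scales on its own policy.
preprocess = AutoscalingPolicy(min_replicas=1, max_replicas=10, target_ongoing_requests=10)
model = AutoscalingPolicy(min_replicas=1, max_replicas=8, target_ongoing_requests=2)
```

With 40 requests in flight, the CPU preprocessing deployment scales to 4 replicas, while the GPU model deployment would want 20 but is capped at its `max_replicas` of 8 (all numbers hypothetical).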

Each of these models can also be upgraded independently while sharing the same cluster. Ray Serve supports fractional resources, leading to less overhead and more efficient infrastructure utilization. It also reduces the operational overhead associated with maintaining and deploying many different microservices.
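Fractional resources are what let several small models share one accelerator. In Ray Serve a replica can request part of a GPU, for example with `ray_actor_options={"num_gpus": 0.25}`, and Ray's scheduler decides placement. The first-fit-decreasing packer below is only a hypothetical illustration of why that helps, not Ray's scheduling algorithm:

```python
from typing import Dict, List, Tuple


def pack_replicas(gpu_fractions: Dict[str, float],
                  num_gpus: int) -> List[List[Tuple[str, float]]]:
    """First-fit-decreasing placement of replicas that each need part of a GPU."""
    gpus: List[List[Tuple[str, float]]] = [[] for _ in range(num_gpus)]
    used = [0.0] * num_gpus
    # Place the largest requests first; they are the hardest to fit.
    for name, fraction in sorted(gpu_fractions.items(), key=lambda kv: -kv[1]):
        for i in range(num_gpus):
            if used[i] + fraction <= 1.0 + 1e-9:  # small tolerance for float sums
                gpus[i].append((name, fraction))
                used[i] += fraction
                break
        else:
            raise RuntimeError(f"no GPU has room for {name}")
    return gpus
```

Packing four replicas that request 0.5, 0.25, 0.25, and 0.5 of a GPU fits them on 2 GPUs, where one model per GPU would have needed 4.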

“Introducing Ray Serve dramatically improved our production ML pipeline performance, equating to a ~50% reduction in total ML inferencing cost per year for the company.”

- Pang Wu, Staff ML Engineer, Samsara

Read about why Samsara switched to Ray Serve and how they leveraged model composition to reduce serving costs

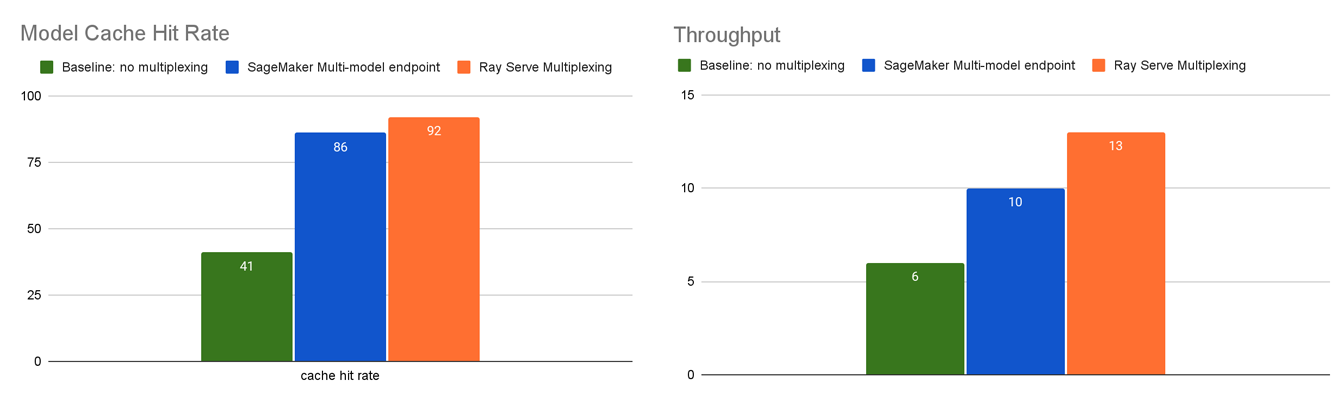

An emerging pattern in ML applications is serving many fine-tuned models with similar hardware requirements and uneven traffic patterns. Examples include serving LLMs or image generation models fine-tuned on specific datasets, or forecasting models trained per customer or per use case. To support this use case efficiently, we launched model multiplexing in Ray 2.5. Model multiplexing reduces average latencies, helping you serve more traffic with less hardware. Multiplexing in Ray Serve achieves 2x higher QPS compared to a baseline and 20-30% higher QPS compared to SageMaker Multi-Model Endpoints.

Footnote: Benchmark with 100 image classification (ResNet-50) models
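At its core, model multiplexing keeps a bounded set of models loaded on each replica and loads others on demand. Ray Serve exposes this through the `@serve.multiplexed` decorator with a `max_num_models_per_replica` limit; the standalone least-recently-used (LRU) cache below only sketches the idea and is not Ray Serve's implementation. The `load_fn` argument stands in for a hypothetical function that loads a model's weights:

```python
from collections import OrderedDict
from typing import Callable, Generic, TypeVar

M = TypeVar("M")


class ModelCache(Generic[M]):
    """Keep at most `max_models` models loaded, evicting the least recently used."""

    def __init__(self, load_fn: Callable[[str], M], max_models: int):
        self.load_fn = load_fn
        self.max_models = max_models
        self.models: "OrderedDict[str, M]" = OrderedDict()
        self.loads = 0  # number of (expensive) model loads performed

    def get(self, model_id: str) -> M:
        if model_id in self.models:
            self.models.move_to_end(model_id)  # mark as most recently used
            return self.models[model_id]
        if len(self.models) >= self.max_models:
            self.models.popitem(last=False)  # evict the least recently used model
        model = self.load_fn(model_id)
        self.loads += 1
        self.models[model_id] = model
        return model
```

With room for two models, requesting `a`, `b`, `a`, `c`, `b` triggers four loads: `c` evicts `b` (the least recently used at that point), and the second `b` then evicts `a`.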

Anyscale infrastructure optimizations

Anyscale Services enhances heterogeneous hardware support with further infrastructure-level optimizations. You can achieve cost reductions of 2-3x and better GPU availability with reliable use of spot instances and smart instance selection across different hardware types. Spot instances offered by cloud providers can be 50-60% cheaper than on-demand instances but they are rarely used in production services because they can be preempted by cloud providers and cause availability drops. With Anyscale Services, we are able to use early signals from cloud providers and gracefully replace spot instances with on-demand ones to ensure no requests are dropped on your production service.
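The key to using spot instances safely is ordering: add replacement capacity as soon as the preemption warning arrives, and only then drain and remove the spot node. The simulation below is a hypothetical sketch of that ordering; the `Node` and `NodePool` classes are our own, and the sketch treats the replacement as ready instantly, whereas in practice node startup time is exactly why early warnings and fast startup matter.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Node:
    name: str
    kind: str  # "spot" or "on_demand"
    draining: bool = False


@dataclass
class NodePool:
    nodes: List[Node] = field(default_factory=list)
    capacity_log: List[int] = field(default_factory=list)

    def serving_capacity(self) -> int:
        """Nodes that are accepting new requests."""
        return sum(1 for n in self.nodes if not n.draining)

    def handle_preemption_notice(self, name: str) -> None:
        """React to an early preemption warning for one spot node."""
        victim = next(n for n in self.nodes if n.name == name)
        # 1. Bring up an on-demand replacement before anything is removed.
        self.nodes.append(Node(f"{name}-replacement", "on_demand"))
        self.capacity_log.append(self.serving_capacity())
        # 2. Stop sending new requests to the spot node; in-flight ones finish.
        victim.draining = True
        self.capacity_log.append(self.serving_capacity())
        # 3. Remove the drained node before the provider reclaims it.
        self.nodes.remove(victim)
        self.capacity_log.append(self.serving_capacity())
```

Starting from two spot nodes, handling a notice for one of them records capacities of 3, 2, and 2, so serving capacity never drops below its starting level.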

Anyscale infrastructure also comes with highly optimized node startup times. We can achieve 30-second cold starts for autoscaling, compared to more than 5 minutes on most competing platforms. We also support multiple cloud providers to help you avoid vendor lock-in. Read more about our infrastructure optimizations here.

Ensuring production reliability

While many challenges related to serving AI applications are new, production reliability remains as important as ever. Many more companies have begun powering serious, revenue-generating applications with AI and they need to ensure they can run their systems reliably and respond effectively to incidents.

High availability and observability

Anyscale Services run in your cloud and support both private and public networking setups for security compliance. They support running across multiple availability zones and Ray head node fault tolerance for high availability. In our internal testing, we have measured availability above 99.9% and scaled to at least 5,000 Ray Serve replicas.
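An availability figure is easier to reason about as a downtime budget, and a simple model shows why spreading replicas across zones helps. Both functions below are illustrative arithmetic only. The multi-zone formula assumes that zone failures are independent and that one healthy zone can carry the load, assumptions real outages do not always respect:

```python
def downtime_budget_minutes(availability: float, period_days: float = 30.0) -> float:
    """Maximum downtime allowed in a period for a given availability target."""
    return (1.0 - availability) * period_days * 24 * 60


def parallel_availability(zone_availability: float, num_zones: int) -> float:
    """Availability if the service stays up while any one zone is up,
    assuming independent zone failures (a simplifying assumption)."""
    return 1.0 - (1.0 - zone_availability) ** num_zones
```

`downtime_budget_minutes(0.999)` is about 43.2 minutes per 30-day month. With a hypothetical 99% availability per zone, two independent zones give `parallel_availability(0.99, 2)` of about 0.9999.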

"At Clari, we faced the challenge of deploying thousands of user ML models efficiently, maintaining top-notch AI infrastructure, and upholding SLA and privacy standards. Anyscale Services came to the rescue, streamlining our Multi-Model Lifecycle, cutting AI infrastructure costs, and seamlessly integrating with our existing ML systems. After a successful POC, Anyscale services delivered the best in latency, reliability, and provided substantial cost reduction for our multi-model serving platform compared to other major cloud providers native solutions.”

- Jon Park, Principal ML Engineer, Clari

To improve observability into your model deployments, we have created the Ray Dashboard for monitoring logs and metrics. For enhanced debugging and alerting, Anyscale Services can also export metrics and logs to third-party providers such as Splunk, Datadog, CloudWatch, and GCP Cloud Monitoring, among others. Read more about this in our documentation here. We also have integrations with model registries like W&B and MLflow, as well as CLI and SDK support for CI/CD integrations.

Here is how Ray Serve on Anyscale Services compares with other offerings:

| Feature | Ray Serve on Anyscale Services | SageMaker | Vertex | KServe |
|---|---|---|---|---|
| Model composition | Yes | Yes | No | No |
| Model multiplexing | Yes | Yes | No | No |
| Heterogeneous cluster support / Fractional GPUs | Yes | No | No | No |
| API server | Yes | No | No | No |
| Request batching | Yes | Yes | Yes | Yes |
| Response streaming | Yes | Yes | Yes | Yes |
| WebSocket support | Yes | No | No | No |
| Canary rollouts | Yes | Yes | Yes | Yes |
| Autoscaling | Yes | Yes | Yes | Yes |

Conclusion

Anyscale has had 6x user growth this year as many companies have moved to Ray Serve and Anyscale Services to uplevel their AI serving infrastructure. The flexibility of Ray Serve has allowed them to reduce costs and improve development velocity. Anyscale Services has provided them a managed and production-ready solution to realize business value from their AI applications much faster.

You can use our popular Ray libraries on Anyscale for data processing, training, batch inference, and hyperparameter tuning, providing one centralized platform for building your AI applications. To get started:

Visit Ray Docs to learn more about Ray Serve features.

Get in touch with us on the #serve channel of the Ray Slack.

Sign up and get started with Anyscale Services.