Ray 1.12: Ray AI Runtime (alpha), usage data collection, and more

Ray 1.12 is here! The highlights in this release include:

- The alpha release of Ray AI Runtime (AIR), a new, unified experience for Ray libraries
- Ray usage data collection, which helps the Ray team discover and address the most pressing issues. Note: in this release, the feature is disabled by default.

You can run `pip install -U ray` to access these features and more. With that, let’s dive in.

Introducing Ray AIR

With the Ray 1.12 release, we’re excited to introduce the alpha of Ray AI Runtime (AIR), an open-source toolkit for building end-to-end machine learning applications. With Ray AIR, our main goals are to:

- Provide the compute layer for ML workloads
- Allow for interoperability with other systems for storage and metadata needs

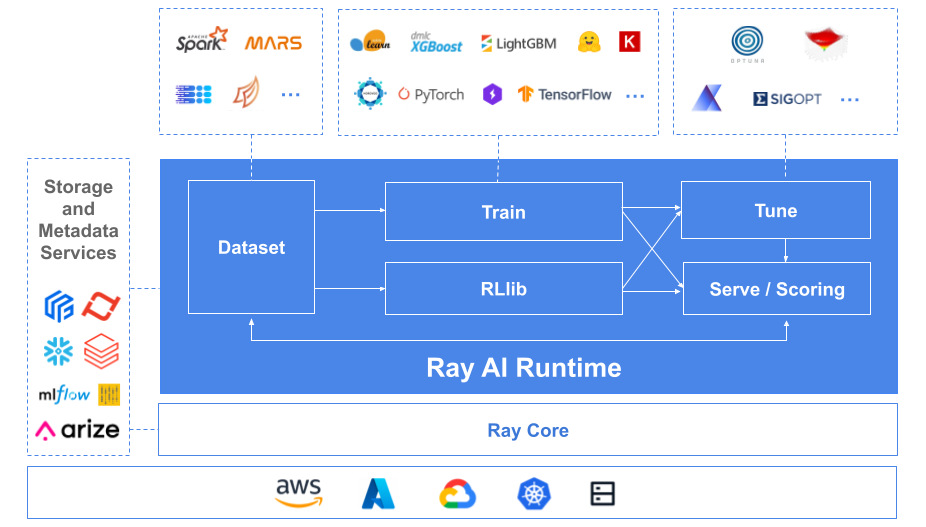

Ray AIR unifies the experience of using different Ray libraries: Ray Data for data processing; Ray Train for model training; Ray Tune for hyperparameter tuning; Ray Serve for model serving; and RLlib for reinforcement learning. Users can use these libraries interchangeably to scale different parts of standard ML workflows.

Ray AIR introduces a unified API for integration across the ecosystem of Ray libraries, so you can pass data and models between data processing, training, tuning, and inference (online and offline). If you’re already using Ray, AIR does not break backward compatibility with your existing code. You can run applications built on these components on your laptop, then scale out to Kubernetes, AWS, Google Cloud, or Azure without changing your code.
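To make the idea of passing data and models between stages concrete, here is a minimal plain-Python sketch. It does not use Ray or the AIR API: the `Checkpoint` class and the `fit_scaler`, `train`, `tune`, and `predict` functions are hypothetical stand-ins. What it illustrates is a single artifact, bundling the model with the preprocessing state learned from the training data, flowing from training through tuning to inference. Because that state travels with the model, inference applies exactly the same transformation as training.

```python
from dataclasses import dataclass
from statistics import mean, pstdev
from typing import List, Sequence, Tuple


@dataclass(frozen=True)
class Checkpoint:
    """Hypothetical artifact: model parameters bundled with preprocessing state."""
    x_mean: float
    x_std: float
    weight: float
    bias: float
    ridge: float


def fit_scaler(xs: Sequence[float]) -> Tuple[float, float]:
    # Data processing: learn standardization statistics from training data only.
    std = pstdev(xs)
    return mean(xs), (std if std > 0 else 1.0)


def train(xs: Sequence[float], ys: Sequence[float], ridge: float) -> Checkpoint:
    # Training: closed-form 1-D ridge regression on standardized inputs.
    mu, sigma = fit_scaler(xs)
    zs = [(x - mu) / sigma for x in xs]
    y_bar = mean(ys)
    # The standardized inputs have zero mean, so the slope can be solved
    # independently of the intercept (which is simply the mean of ys).
    weight = sum(z * (y - y_bar) for z, y in zip(zs, ys)) / (sum(z * z for z in zs) + ridge)
    return Checkpoint(mu, sigma, weight, y_bar, ridge)


def predict(ckpt: Checkpoint, xs: Sequence[float]) -> List[float]:
    # Inference: reuse the exact preprocessing state stored in the checkpoint.
    return [ckpt.weight * (x - ckpt.x_mean) / ckpt.x_std + ckpt.bias for x in xs]


def mse(ckpt: Checkpoint, xs: Sequence[float], ys: Sequence[float]) -> float:
    return mean((p - y) ** 2 for p, y in zip(predict(ckpt, xs), ys))


def tune(train_xy, val_xy, ridges: Sequence[float]) -> Checkpoint:
    # Tuning: train one candidate per setting and keep the best on validation data.
    candidates = [train(*train_xy, ridge=r) for r in ridges]
    return min(candidates, key=lambda c: mse(c, *val_xy))


# Usage on toy data that lies exactly on y = 2x + 1.
train_xy = ([1.0, 2.0, 3.0, 4.0], [3.0, 5.0, 7.0, 9.0])
val_xy = ([5.0, 6.0], [11.0, 13.0])
best = tune(train_xy, val_xy, ridges=[0.0, 1.0, 10.0])
print(best.ridge, predict(best, [10.0]))
```

Because the toy data lies exactly on a line, tuning should pick the unregularized candidate. Keeping preprocessing state inside the model artifact is one way to avoid skew between training and serving. See the Ray AIR documentation for how AIR itself handles this.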

We’ll be holding office hours, development sprints, and other activities as we get closer to the beta release and general availability of Ray AIR. Want to join us? Fill out this short form!

Stay tuned for an in-depth blog post on Ray AIR, coming soon. Until then, you can find more information in the documentation or on the public RFC.

Usage data collection

As the Ray project and community have grown, Ray’s surface area has expanded considerably, and it has become increasingly difficult for us to decide where best to spend our time and effort improving Ray.

Following the Usage Data Collection RFC, the Ray team has implemented a lightweight mechanism in Ray 1.12 for collecting usage statistics and data, to help us discover and address the most pressing issues. In this release, the feature is disabled by default.

Though many will appreciate the actionable insights this data provides and how it will help improve the project, we also recognize that not everyone wants to send usage data.

We are firmly committed to giving Ray users full control over how their data is used and collected. You will always be able to disable usage data collection as described in this RFC.
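To show what "disabled by default" means in practice, here is a minimal sketch of an opt-in check. It is written in plain Python and is not taken from Ray’s source. It assumes the switch is an environment variable named `RAY_USAGE_STATS_ENABLED`. Treat that name as an assumption, and confirm the exact mechanism, including how to disable collection, in the RFC and the Ray documentation. The function `usage_stats_enabled` is hypothetical.

```python
import os
from typing import Mapping, Optional


def usage_stats_enabled(env: Optional[Mapping[str, str]] = None) -> bool:
    """Hypothetical opt-in check mirroring the 1.12 default: off unless enabled."""
    env = os.environ if env is None else env
    # Assumed variable name; confirm it against the RFC and the Ray docs.
    value = env.get("RAY_USAGE_STATS_ENABLED", "0").strip().lower()
    return value in {"1", "true"}


print(usage_stats_enabled({}))  # no variable set: collection stays off
print(usage_stats_enabled({"RAY_USAGE_STATS_ENABLED": "1"}))
```

Reading the setting from an explicit mapping, rather than only from `os.environ`, keeps the check easy to test.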

Additional library features

The Deployment Graph API in Ray Serve is now in alpha. It provides a way to build, test and deploy complex inference graphs composed of many deployments.
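In an inference graph, several components are wired together so that one request flows through all of them: shared steps are reused and independent branches are combined. The plain-Python sketch below illustrates only that idea. It is not the Ray Serve Deployment Graph API, and `GraphNode` and `run_graph` are hypothetical names. In Ray Serve, each node would instead be a deployment rather than a local function call.

```python
from typing import Any, Callable, Dict, List


class GraphNode:
    """Hypothetical node: a callable plus the upstream nodes whose outputs it consumes."""

    def __init__(self, fn: Callable[..., Any], *deps: "GraphNode") -> None:
        self.fn = fn
        self.deps = deps


def run_graph(output: GraphNode, request: Any) -> Any:
    # Evaluate the graph for one request, starting from the output node.
    results: Dict[int, Any] = {}

    def evaluate(node: GraphNode) -> Any:
        # Memoize by node identity so a shared upstream node runs once per request.
        if id(node) not in results:
            # Nodes without dependencies receive the raw request.
            args = [evaluate(dep) for dep in node.deps] if node.deps else [request]
            results[id(node)] = node.fn(*args)
        return results[id(node)]

    return evaluate(output)


# Usage: preprocess once, fan out to two "models", then combine their outputs.
preprocess_calls: List[Any] = []


def preprocess(raw: List[str]) -> List[float]:
    preprocess_calls.append(raw)
    return [float(v) for v in raw]


prep = GraphNode(preprocess)
model_a = GraphNode(sum, prep)
model_b = GraphNode(max, prep)
ensemble = GraphNode(lambda a, b: (a + b) / 2, model_a, model_b)

print(run_graph(ensemble, ["1", "2", "3"]))  # (6.0 + 3.0) / 2 = 4.5
print(len(preprocess_calls))  # the shared preprocessing step ran once
```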

We’ve also implemented several updates to Ray Datasets, including a new lazy execution model with automatic task fusion and memory-optimizing move semantics, first-class support for Pandas DataFrame blocks, and efficient random access datasets.
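To illustrate lazy execution with automatic task fusion, here is a minimal plain-Python sketch. It is not the Ray Datasets implementation, and `LazyDataset`, `map_blocks`, and `take_all` are names used only in this sketch; they do not refer to Ray’s classes. Transformations are recorded rather than run. When results are requested, all recorded per-block stages are fused into one function per block. The sketch does not model move semantics or distributed scheduling.

```python
from typing import Callable, List, Sequence, Tuple

Block = List[int]
Stage = Callable[[Block], Block]


class LazyDataset:
    """Hypothetical sketch: records per-block transforms and runs them only on demand."""

    def __init__(self, blocks: Sequence[Block], stages: Tuple[Stage, ...] = ()) -> None:
        self._blocks = list(blocks)
        self._stages = stages

    def map_blocks(self, fn: Stage) -> "LazyDataset":
        # Lazy: nothing executes here; we only append the stage to the plan.
        return LazyDataset(self._blocks, self._stages + (fn,))

    def _fused_task(self) -> Stage:
        # Fusion: chain every recorded stage into a single per-block function,
        # so each block is processed by one task instead of one task per stage.
        stages = self._stages

        def task(block: Block) -> Block:
            for stage in stages:
                block = stage(block)
            return block

        return task

    def take_all(self) -> List[int]:
        # Execution is triggered only when the data is actually consumed.
        task = self._fused_task()
        return [row for block in self._blocks for row in task(block)]


# Usage: two chained transforms, executed only by take_all().
calls: List[str] = []


def double(block: Block) -> Block:
    calls.append("double")
    return [x * 2 for x in block]


ds = LazyDataset([[1, 2], [3, 4]]).map_blocks(double).map_blocks(lambda b: [x + 1 for x in b])
print(calls)  # [] because no stage has run yet
print(ds.take_all())  # [3, 5, 7, 9]
```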

For a detailed list of features, refer to the Ray 1.12.0 release notes and documentation.

Learn more

Many thanks to all those who contributed to this release. To learn about all the features and enhancements in this release, check out the Ray 1.12.0 release notes. If you would like to keep up to date with all things Ray, follow @raydistributed on Twitter and sign up for the Ray newsletter.