How to Scale Up Your FastAPI Application Using Ray Serve

[UPDATE 7/9/21: Ray Serve now has a seamless integration with FastAPI built in! Check it out on the documentation page: https://docs.ray.io/en/master/serve/http-servehandle.html#fastapi-http-deployments]

FastAPI is a high-performance, easy-to-use Python web framework, which makes it a popular way to serve machine learning models. In this blog post, we’ll scale up a FastAPI model serving application from one CPU to a 100+ CPU cluster, yielding a nearly 60x improvement in the number of requests served per second. All of this will be done with just a few additional lines of code using Ray Serve.

Ray Serve is an infrastructure-agnostic, pure-Python toolkit for serving machine learning models at scale. Ray Serve runs on top of the distributed execution framework Ray, so we won’t need any distributed systems knowledge — things like scheduling, failure management, and interprocess communication will be handled automatically.

The structure of this post is as follows. First, we’ll run a simple model serving application on our laptop. Next, we’ll scale it up to multiple cores on our single machine. Finally, we’ll scale it up to a cluster of machines. All of this will be possible with only minor code changes. We’ll run a simple benchmark (for the source code, see the Appendix) to quantify our throughput gains.

To run everything in this post, you’ll need to have either PyTorch or TensorFlow installed, as well as three other packages which you can install with the following command in your terminal:

pip install transformers fastapi "ray[serve]"

Introduction

Here’s a simple FastAPI web server. It uses Hugging Face Transformers to generate text from a short initial input using OpenAI’s GPT-2 model, and serves the result to the user.

from fastapi import FastAPI
from transformers import pipeline  # A simple API for NLP tasks.

app = FastAPI()

nlp_model = pipeline("text-generation", model="gpt2")  # Load the model.

# The function below handles GET requests to the URL `/generate`.
@app.get("/generate")
def generate(query: str):
    # Generate up to 50 tokens, counting the prompt.
    return nlp_model(query, max_length=50)

Let’s test it out. Assuming the code above is saved as main.py, we can start the server locally in the terminal like this:

uvicorn main:app --port 8080

Now in another terminal we can query our model:

curl "http://127.0.0.1:8080/generate?query=Hello%20friend%2C%20how"
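The same query can also be sent from Python. The following is a minimal sketch using only the standard library; `build_generate_url` and `query_model` are hypothetical helper names introduced here for illustration, and the host and port are assumed to match the uvicorn command above.

```python
import json
import urllib.parse
import urllib.request

BASE_URL = "http://127.0.0.1:8080"  # Assumed: the local uvicorn server started above.


def build_generate_url(query: str, base_url: str = BASE_URL) -> str:
    """Percent-encode the query text and attach it to the /generate path."""
    params = urllib.parse.urlencode({"query": query}, quote_via=urllib.parse.quote)
    return f"{base_url}/generate?{params}"


def query_model(query: str) -> list:
    """Send a GET request to the running server and decode the JSON reply."""
    with urllib.request.urlopen(build_generate_url(query)) as response:
        return json.loads(response.read())


if __name__ == "__main__":
    # Builds the same URL that the curl command uses.
    print(build_generate_url("Hello friend, how"))
    # Uncomment once the server is running:
    # print(query_model("Hello friend, how")[0]["generated_text"])
```

Passing `quote_via=urllib.parse.quote` encodes spaces as `%20` rather than `+`, which matches the URL in the curl command.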

The output should look something like this:

[{"generated_text":"Hello friend, how much do you know about the game? I’ve played the game a few hours, mostly online, and it’s one of my favorites… and this morning I finally get to do some playtesting. It seems to have all the"}]

That’s it! This is already useful, but it turns out to be quite slow since the underlying model is very complex. On my laptop, this request takes about two seconds. If the server is being hit with many requests, the throughput will only be 0.5 queries per second.

Scaling up: Enter Ray Serve

Ray Serve will let us easily scale up our existing program to multiple CPUs and multiple machines. Ray Serve runs on top of a Ray cluster, which we’ll explain how to set up below.

First, let’s take a look at a version of the above program which still uses FastAPI, but offloads the computation to a Ray Serve backend with multiple replicas serving our model in parallel:

1import ray

2from ray import serve

3

4from fastapi import FastAPI

5from transformers import pipeline

6

7app = FastAPI()

8

9serve_handle = None

10

11@app.on_event("startup") # Code to be run when the server starts.

12async def startup_event():

13 ray.init(address="auto") # Connect to the running Ray cluster.

14 client = serve.start() # Start the Ray Serve client.

15

16 # Define a callable class to use for our Ray Serve backend.

17 class GPT2:

18 def __init__(self):

19 self.nlp_model = pipeline("text-generation", model="gpt2")

20 def __call__(self, request):

21 return self.nlp_model(request.data, max_length=50)

22

23 # Set up a backend with the desired number of replicas.

24 backend_config = serve.BackendConfig(num_replicas=8)

25 client.create_backend("gpt-2", GPT2, config=backend_config)

26 client.create_endpoint("generate", backend="gpt-2")

27

28 # Get a handle to our Ray Serve endpoint so we can query it in Python.

29 global serve_handle

30 serve_handle = client.get_handle("generate")

31

32@app.get("/generate")

33async def generate(query: str):

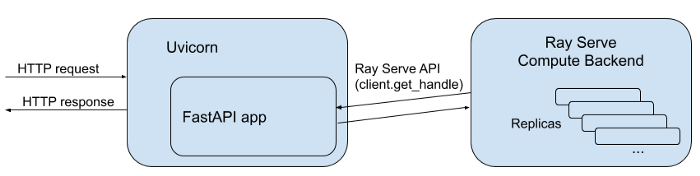

34 return await serve_handle.remote(query)Here we’ve simply wrapped our NLP model in a class, created a Ray Serve backend from the class, and set up a Ray Serve endpoint serving that backend. Ray Serve comes with an HTTP server out of the box, but rather than use that, we’ve just taken our existing FastAPI /generate endpoint and give it a handle to our Ray Serve endpoint.

Here’s a diagram of our finished application:

Testing on a laptop

Before starting the app, we’ll need to start a long-running Ray cluster:

ray start --head

The laptop will be the head node, and there will be no other nodes. To use 2 cores, I’ll set num_replicas=2 in the code sample above. Running the server exactly as before, if we saturate our backend by sending many requests (see the Appendix), we see that our throughput has increased from 0.53 queries per second to about 0.80 queries per second. Using 4 cores, this only improves to 0.85 queries per second. It seems with such a heavyweight model, we have hit diminishing returns on our local machine.

Running on a cluster

The really big parallelism gains will come when we move from our laptop to a dedicated multi-node cluster. Using the Anyscale beta, I started a Ray cluster in the cloud with a few clicks, but I could have also used the Ray cluster launcher. For this blog post, I set up a cluster with eight Amazon EC2 m5.4xlarge instances. That’s a total of 8 x 16 = 128 vCPUs.

Here are the results measuring throughput in queries per second, for various settings of num_replicas:

num_replicas    Trial 1    Trial 2    Trial 3
1               0.41       0.45       0.43
10              4.16       4.17       4.09
100             24.22      24.54      24.56

That’s quite a bit of speedup! We would expect to see an even greater effect with more machines and more replicas.
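To put a number on the speedup, the trial results can be averaged and compared against the single-replica baseline. The sketch below does that arithmetic with the figures from the table; `summarize` is a hypothetical helper, and "efficiency" here simply means speedup divided by the number of replicas.

```python
from statistics import mean

# Throughput in queries per second, copied from the table above.
results = {
    1: [0.41, 0.45, 0.43],
    10: [4.16, 4.17, 4.09],
    100: [24.22, 24.54, 24.56],
}


def summarize(results: dict) -> dict:
    """Return mean throughput, speedup, and per-replica efficiency per setting."""
    baseline = mean(results[1])  # Single-replica throughput is the reference point.
    summary = {}
    for num_replicas, trials in sorted(results.items()):
        avg = mean(trials)
        speedup = avg / baseline
        summary[num_replicas] = {
            "mean_qps": avg,
            "speedup": speedup,
            "efficiency": speedup / num_replicas,
        }
    return summary


if __name__ == "__main__":
    for num_replicas, row in summarize(results).items():
        print(
            f"{num_replicas:>4} replicas: {row['mean_qps']:6.2f} qps, "
            f"{row['speedup']:5.1f}x speedup, {row['efficiency']:.0%} efficiency"
        )
```

With these numbers, 100 replicas come out at roughly a 57x speedup over one replica, and per-replica efficiency falls from about 96% at 10 replicas to about 57% at 100.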

Conclusion

Ray Serve makes it easy to scale up your existing machine learning serving applications by offloading your heavy computation to Ray Serve backends. Beyond the basics illustrated in this blog post, Ray Serve also supports batched requests and GPUs. The endpoint handle concept used in our example is fully general, and can be used to deploy arbitrarily composed ensemble models in pure Python. Please let us know if you have any questions through our Discourse or Slack!

If you want to learn more about Ray Serve, check out the following links:

The Simplest Way to Serve your NLP Model in Production with Pure Python

Machine Learning Serving is Broken | by Simon Mo | Distributed Computing with Ray

Introducing Ray Serve: Scalable and Programmable ML Serving Framework — Simon Mo, Anyscale

Appendix: Quick-and-dirty benchmarking

To saturate all of our replicas with requests, we can use Python’s asyncio and aiohttp modules. Here’s one way of doing it, based on this example HTTP client:

1import asyncio

2import aiohttp

3import time

4

5async def fetch_page(session, url):

6 async with session.get(url) as response:

7 assert response.status == 200

8 return await response.read()

9

10async def main():

11 num_connections = 100 # Use as many connections as replicas.

12 url = "http://127.0.0.1:8080/generate?query=Hello%20friend%2C%20how"

13 results = await asyncio.gather(*[run_benchmark(url) for _ in range(num_connections)])

14 print("Queries per second:", sum(results)) # Sum qps over all replicas.

15

16async def run_benchmark(url):

17 start = time.time()

18 count = 0

19 async with aiohttp.ClientSession(loop=loop) as session:

20 while time.time() - start < 60: # Run test for one minute.

21 count += 1

22 print(await fetch_page(session, url))

23 return count / (time.time() - start) # Compute queries per second.

24

25if __name__ == "__main__":

26 loop = asyncio.get_event_loop()

27 loop.run_until_complete(main())