Cheaper and 3X Faster Parallel Model Inference with Ray Serve

TL;DR

Wildlife Studios’ ML team was deploying sets of ensemble models using Flask. It quickly became too hard and too expensive to scale. By using Ray Serve, Wildlife Studios was able to improve latency and throughput while reducing cost. Ray Serve not only made it easy to parallelize model inference, but also enabled “model disaggregation and model composition”: splitting the models into separate components and running those in parallel. This made it possible to scale each part of the request pipeline separately. The resource savings, achieved while keeping latency down, came mainly from three further benefits of Ray Serve:

(1) better cache efficiency because there were fewer models residing in memory.

(2) pipelining inference allowed both preprocessing and models to be busy at the same time.

(3) autoscaling, which resulted in fewer resources being used when traffic was low, instead of always provisioning for the peak.

Business use case overview

A previous business case study covered how the Dynamic Offers team at Wildlife Studios focuses on serving highly relevant offers to improve gaming experience and maximize both the dollars spent per player and overall playtime. Every day, when users log into one of the games, the game server pings the ML service to create a dynamic offer for in-game purchase items. For example, it will create a “for limited time, you can get 50% off for item X” offer considering the user’s attributes and purchase history. Wildlife’s data scientists train many models for each game and different user groups to optimize the purchase rate of these offers.

The team manages the model deployments on an internal K8s cluster and is responsible for delivering dynamic offer responses within a P95 latency SLA of 2s. This is important for two reasons: (1) many of Wildlife Studios’ top revenue-generating games have a tight latency constraint and fall back to non-personalized offers when it is missed, which leads to a non-optimal experience for players, and (2) slow-loading offers can result in a loading screen, prompting players to leave the game. Currently there are about 4 games in Wildlife’s portfolio, and each game has about 2-3 different versions of the models trained on different historical data. Each version, which is a single unit of deployment, contains 12+ models trained using the same algorithm but different data.

System design

When a request comes in, the Dynamic Offers system uses multiple models to deliver a single response. This is a common technique called “model ensembling”. Ensembling is similar to gathering recommendations from a group of experts: each expert has their own expertise and experience, and aggregating their recommendations boosts the accuracy of the final decision.

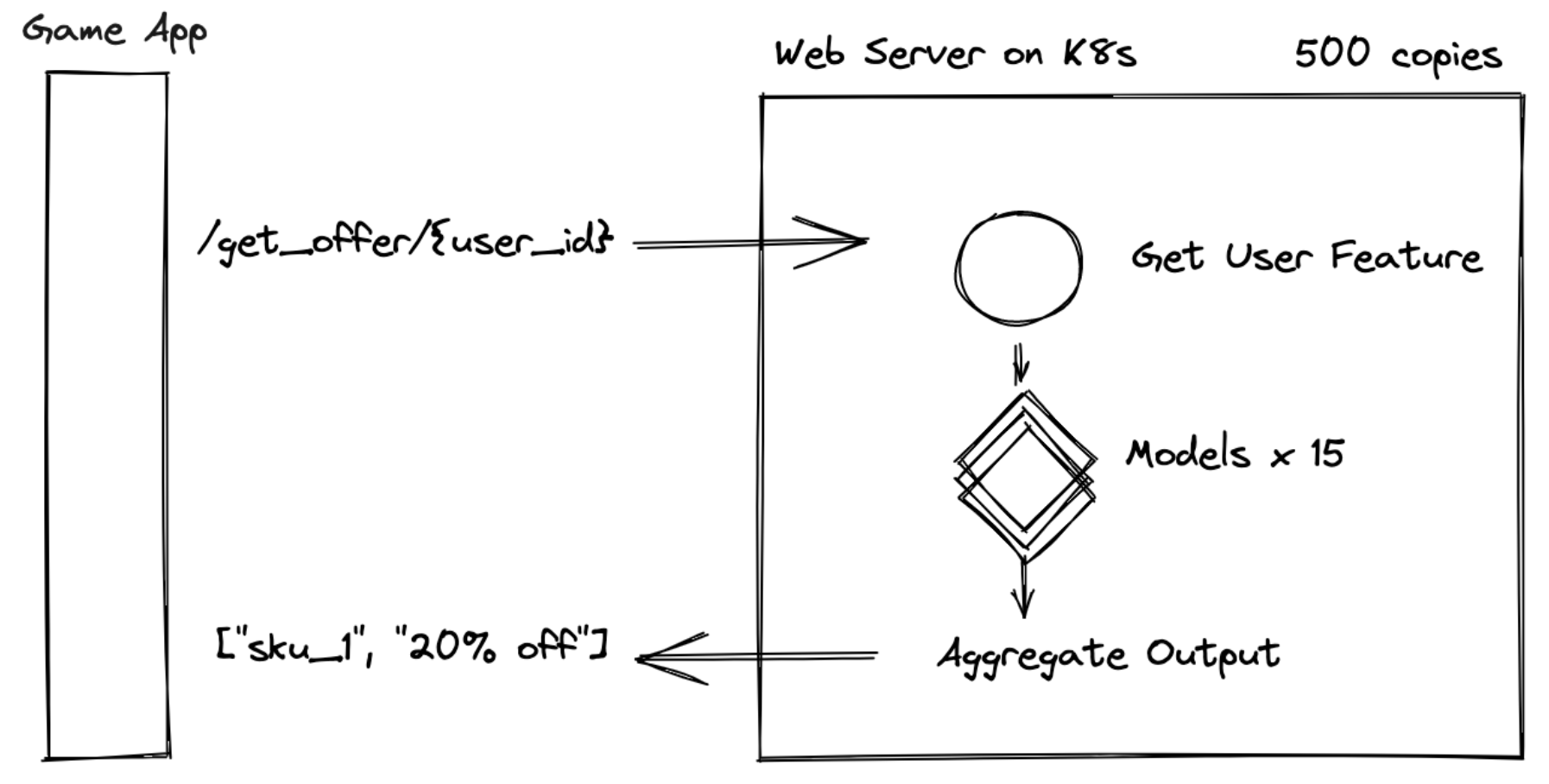

Wildlife Studios’ legacy system architecture for dynamic offer recommendations.

Architecturally, existing model servers follow a pattern of “wrap your models in the web server”. For each model version, there is a single K8s Deployment that contains some number of Pods (the number varies by the popularity of each game, between 10 and 200). Each Pod runs a container running a Python Flask server.

In the Flask server, all the models belonging to the same version are loaded at startup time. Upon each request, the Flask server will retrieve user features from DynamoDB and then run through the models sequentially. Finally, it will aggregate all the outputs and return the final output to the requester.

Challenges

There are two major challenges of the current setup:

High latency per request: the end-to-end response time can reach 500-600ms at P95. This is considered too high and puts the <2s SLA goal at risk.

Low utilization: in order to handle traffic spikes, the web servers are replicated to many copies (sometimes up to 500 copies). Each web server loads all the models within a version. This means at a given time, many models are unused and the compute resources are underutilized.

Solution: Parallelize Model Inference with Ray Serve

Ray enables running model inference in parallel and reduces inference latency by 3x.

Ray is a distributed runtime that makes it easy to parallelize Python and Java code across a cluster. With Ray, the sequential model inference for each request can be parallelized easily. Instead of running through 15 models one at a time, Ray can help run all of them in parallel. This yields lower end-to-end latency. A microbenchmark showed that with Ray the latency was reduced by more than 3x: instead of 500ms at p95, the parallel inference now takes 150ms at p95.

Naive parallelization leads to high resource usage and low utilization

However, just parallelizing model inference is not enough. Doing so naively inside the web server can indeed reduce end-to-end latency, but it will drastically increase resource usage because each server needs to host many models running in parallel in a thread pool.
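To make the trade-off concrete, here is a minimal sketch of the naive approach using only the Python standard library. The models are placeholders: `make_model`, the `recent_spend` feature, the sleep-based inference cost, and averaging as the aggregation rule are all assumptions for illustration, not details from Wildlife's system.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from statistics import mean

NUM_MODELS = 15           # ensemble size mentioned in the post
INFERENCE_SECONDS = 0.01  # hypothetical per-model inference cost


def make_model(index: int):
    """Return a placeholder model; sleeping stands in for real inference work."""
    def predict(features: dict) -> float:
        time.sleep(INFERENCE_SECONDS)
        return features["recent_spend"] * (index + 1)
    return predict


# As in the legacy server, every process loads every model at startup.
MODELS = [make_model(i) for i in range(NUM_MODELS)]


def predict_sequential(features: dict) -> float:
    """Run the models one after another, then aggregate (assumed: mean)."""
    return mean(model(features) for model in MODELS)


def predict_threaded(features: dict, pool: ThreadPoolExecutor) -> float:
    """Run all models at once in a thread pool owned by the same server."""
    scores = pool.map(lambda model: model(features), MODELS)
    return mean(scores)


if __name__ == "__main__":
    features = {"recent_spend": 2.0}
    with ThreadPoolExecutor(max_workers=NUM_MODELS) as pool:
        for name, call in [
            ("sequential", lambda: predict_sequential(features)),
            ("threaded", lambda: predict_threaded(features, pool)),
        ]:
            start = time.perf_counter()
            score = call()
            print(f"{name}: score={score}, {time.perf_counter() - start:.3f}s")
```

The threaded version finishes sooner, but each server process still holds all 15 models and now also needs enough threads and CPU to run them simultaneously, so the per-pod footprint grows with every replica of the web server.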

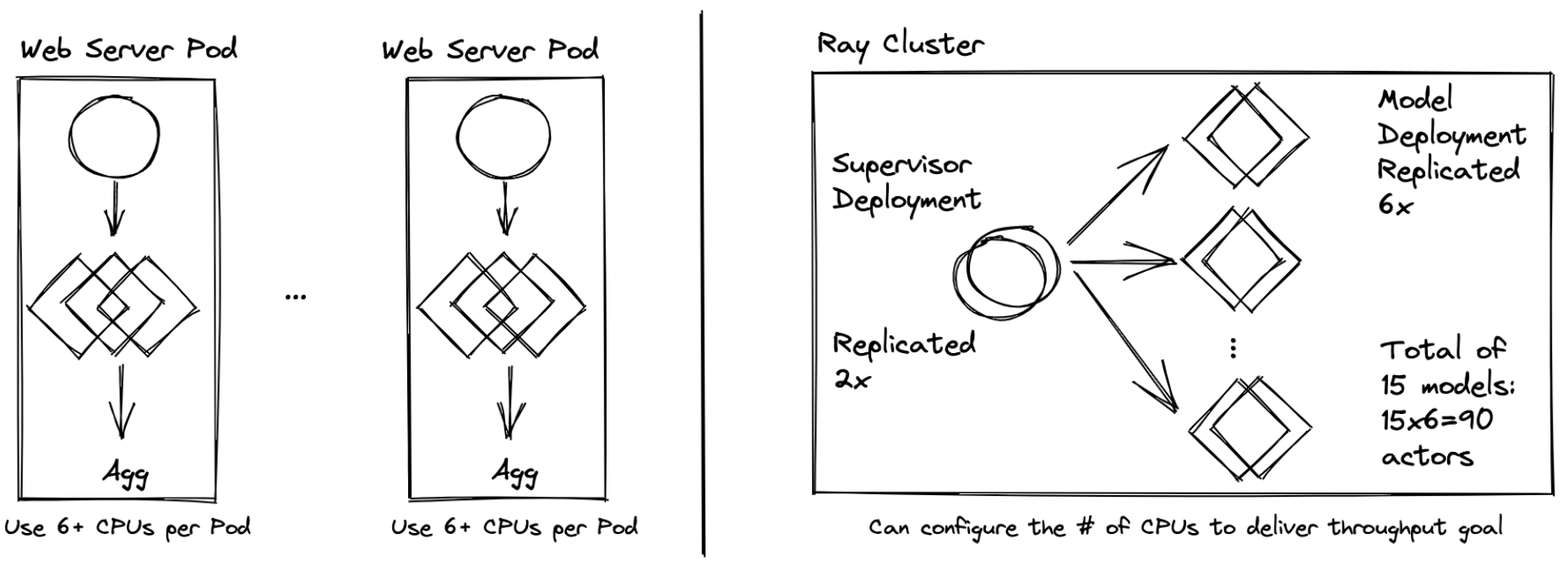

Ray Serve splits the model inference pipeline into separate components and scales them seamlessly on a multi-node cluster.

This is where Ray Serve comes in. Ray Serve is a specialized model serving library built on top of Ray. Ray Serve helps to group the components into “Deployment” units and scale each “Deployment” independently. It also lets deployments call each other in a flexible and user-friendly way.

Wildlife implemented the new system on top of Ray Serve by splitting each model into its own deployment and creating a “Supervisor” deployment that gets the user features from DynamoDB. Once the user features are retrieved, the supervisor calls 15 different model deployments for predictions in parallel and aggregates the results.

The key advantage here is that instead of being bound to 1 web server + 15 models per pod as in the previous Flask setup, the engineers and devops can configure the replication factor of each deployment separately. For example, the supervisor deployment is very lightweight: it just needs to receive the request, proxy it to the model deployments, and aggregate the outputs to form a response. So the supervisor deployment has a replication factor of 2, and each replica can handle at least a few hundred queries per second. In contrast, the model deployments are heavyweight, so each is replicated to 6 replicas, and each replica can handle on the order of tens of queries per second.
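A rough sketch of what such a layout could look like with Ray Serve's deployment API follows. It is not Wildlife's code: `OfferModel`, `Supervisor`, `fetch_user_features`, the `recent_spend` feature, the scoring rule, and mean aggregation are all hypothetical. The replica counts (2 for the supervisor, 6 per model) come from the text. It assumes a recent Ray release in which bound deployments are passed to the supervisor as handles whose `.remote()` calls return awaitable responses.

```python
from statistics import mean


def fetch_user_features(user_id: str) -> dict:
    """Hypothetical stand-in for the DynamoDB feature lookup."""
    return {"user_id": user_id, "recent_spend": 10.0}


class OfferModel:
    """One ensemble member; the scoring rule is a placeholder for a trained model."""

    def __init__(self, weight: float):
        self.weight = weight

    def predict(self, features: dict) -> float:
        return self.weight * features["recent_spend"]


class Supervisor:
    """Fetches features, fans out to every model deployment, aggregates the scores."""

    def __init__(self, *model_handles):
        # Ray Serve passes one handle per bound model deployment.
        self.model_handles = model_handles

    async def __call__(self, user_id: str) -> float:
        features = fetch_user_features(user_id)
        # .remote() returns immediately, so all model calls are in flight together.
        pending = [h.predict.remote(features) for h in self.model_handles]
        scores = [await response for response in pending]
        return mean(scores)  # averaging is an assumed aggregation rule


def build_app(num_models: int = 15, model_replicas: int = 6, supervisor_replicas: int = 2):
    """Wrap the plain classes as Serve deployments with independent replica counts."""
    # Imported here so the classes above stay usable without Ray installed.
    from ray import serve

    model_deployment = serve.deployment(num_replicas=model_replicas)(OfferModel)
    supervisor_deployment = serve.deployment(num_replicas=supervisor_replicas)(Supervisor)
    models = [
        # One named deployment per model, each scaled on its own.
        model_deployment.options(name=f"offer_model_{i}").bind(weight=0.1 * (i + 1))
        for i in range(num_models)
    ]
    return supervisor_deployment.bind(*models)
```

With Ray installed, `serve.run(build_app(...))` would deploy the graph and return a handle to the supervisor. On a single machine it is worth passing much smaller values for `num_models` and `model_replicas`, since each replica reserves resources from the cluster.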

While this certainly helped, the reason Ray Serve was able to achieve low resource usage while also keeping latency down was primarily good cache efficiency and latency hiding with pipelining.

Serve loads fewer models in memory and keeps them busy: good for cache efficiency

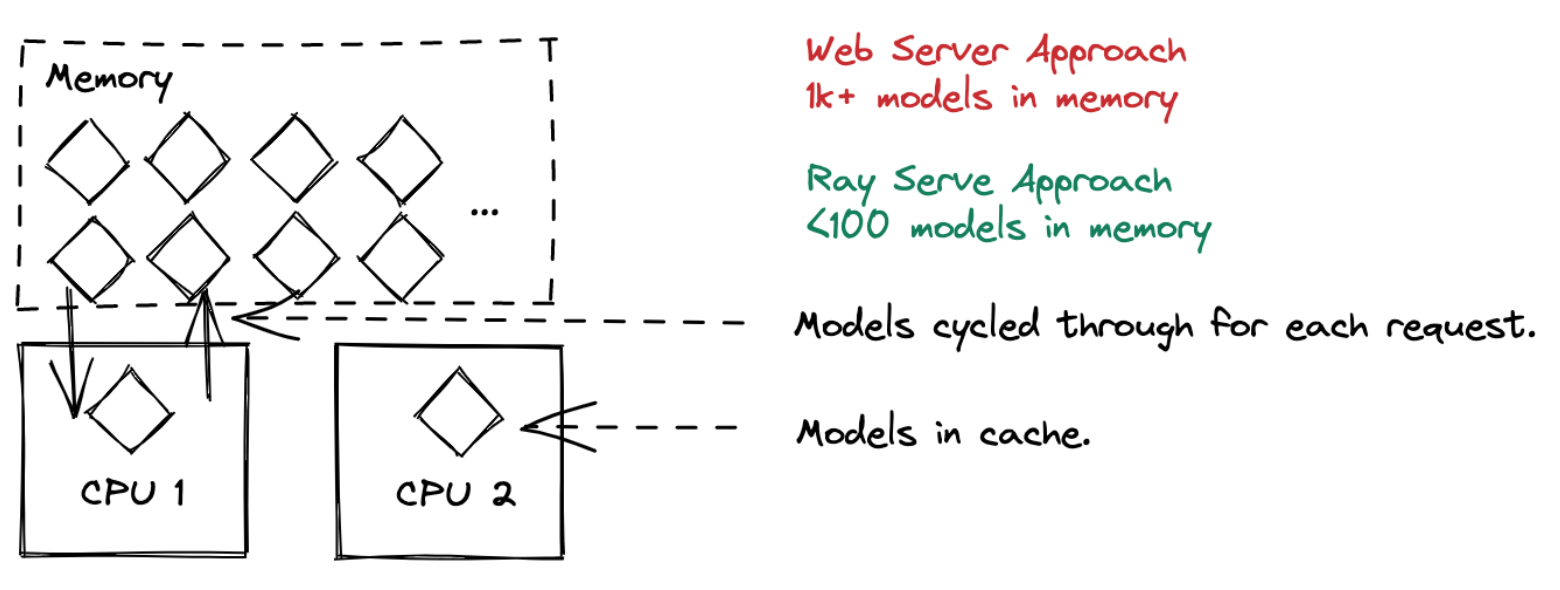

Keeping fewer models in memory and scaling them independently helps to increase the CPU cache hit rate.

Using Ray Serve has resulted in good cache efficiency because deployments require fewer models in memory than the web server approach. This is due to two factors:

1) Model deployments in Ray Serve are more frequently used because they are shared among many requests

2) Ray Serve, unlike the web server approach, doesn't require you to load all the models into memory for each web server pod.

These two factors combined lead to more efficient utilization of memory which results in a higher CPU cache hit rate for each model. Using a back of envelope calculation, we can estimate the Ray Serve deployment model reduces memory usage by at least 3x. With dynamic batching, we anticipate saving even more memory because it should increase the throughput of a single replica.
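One way to reproduce this kind of back-of-the-envelope estimate is to count model copies held in memory. The sketch below uses the 15 models and 6 replicas per model mentioned earlier. The pod count of 20 is a hypothetical value inside the 10 to 200 range quoted for the legacy setup, since the post does not say which figure its estimate used. It also assumes that model copies are a fair proxy for memory and that both setups serve the same traffic.

```python
def legacy_model_copies(pods: int, models_per_pod: int) -> int:
    """Every Flask pod loads every model of the version."""
    return pods * models_per_pod


def serve_model_copies(num_models: int, replicas_per_model: int) -> int:
    """Each model lives only in its own deployment's replicas."""
    return num_models * replicas_per_model


MODELS = 15              # from the text
REPLICAS_PER_MODEL = 6   # from the text
HYPOTHETICAL_PODS = 20   # assumption: not stated in the post

legacy_copies = legacy_model_copies(HYPOTHETICAL_PODS, MODELS)
serve_copies = serve_model_copies(MODELS, REPLICAS_PER_MODEL)
reduction = legacy_copies / serve_copies

print(f"{legacy_copies} copies vs {serve_copies} copies: {reduction:.1f}x fewer")
```

Under these assumptions the legacy layout keeps 300 model copies resident against 90 for the Serve layout. The ratio grows linearly with the number of legacy pods, which is why the saving is larger for the more popular games.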

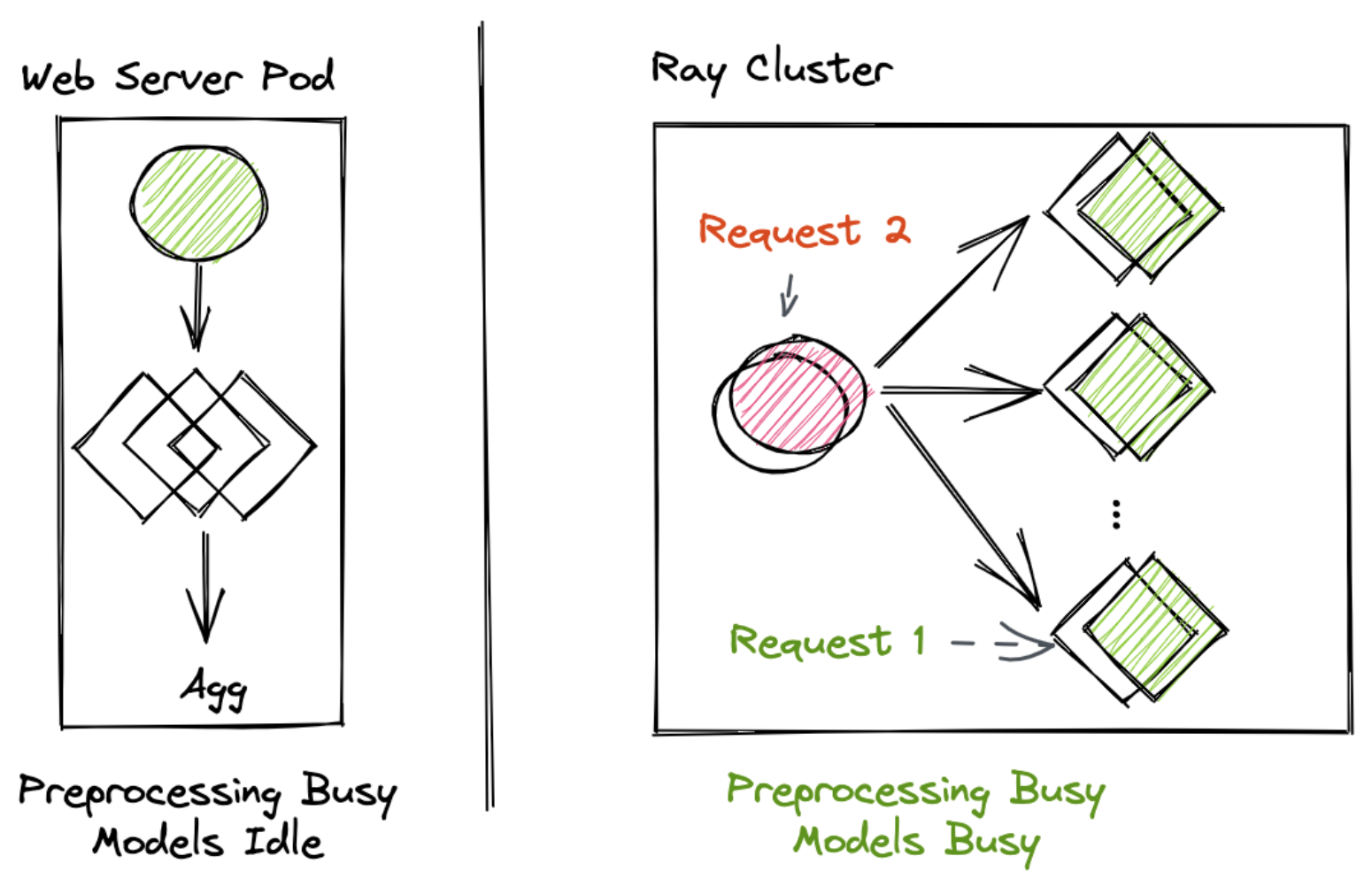

Serve can saturate the pipeline with requests, no component is idle

Ray Serve ensures no component is idle when serving requests.

The opportunity for latency hiding comes from the fact that, in the web server approach, each web server can only handle one request at a time: while the server is getting user features from DynamoDB, the models must be idle; while the models are running through computation, new requests must wait. With Ray Serve, each step of the pipeline can be kept busy. Each step of the pipeline can process requests concurrently; while some components are busy with one group of requests, other components can be busy with another.
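The effect can be illustrated with a small standard-library simulation. This is a toy model of a two-stage pipeline, not Ray Serve itself: the stage functions, the latencies, and the queue between stages are assumptions chosen only to show how overlapping the feature lookup with inference keeps both stages busy.

```python
import asyncio

FETCH_SECONDS = 0.01  # hypothetical feature-lookup latency
INFER_SECONDS = 0.01  # hypothetical inference latency


async def fetch_features(user_id: str) -> dict:
    await asyncio.sleep(FETCH_SECONDS)  # stands in for the DynamoDB read
    return {"user_id": user_id}


async def run_models(features: dict) -> str:
    await asyncio.sleep(INFER_SECONDS)  # stands in for ensemble inference
    return f"offer-for-{features['user_id']}"


async def one_request_at_a_time(user_ids: list) -> list:
    """Legacy behaviour: each stage idles while the other one works."""
    return [await run_models(await fetch_features(uid)) for uid in user_ids]


async def pipelined(user_ids: list) -> list:
    """Two stages joined by a queue: fetching request N+1 overlaps inference on N."""
    queue: asyncio.Queue = asyncio.Queue()
    offers = []

    async def fetch_stage():
        for uid in user_ids:
            await queue.put(await fetch_features(uid))
        await queue.put(None)  # sentinel: no more requests

    async def model_stage():
        while (features := await queue.get()) is not None:
            offers.append(await run_models(features))

    await asyncio.gather(fetch_stage(), model_stage())
    return offers


if __name__ == "__main__":
    ids = [f"user-{i}" for i in range(5)]
    print(asyncio.run(one_request_at_a_time(ids)))
    print(asyncio.run(pipelined(ids)))
```

Both functions return the same offers in the same order. For N requests, the one-at-a-time version takes roughly N times the sum of the two stage latencies, while the pipelined version takes roughly N times the slower stage plus one pass through the faster one.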

Serve autoscales replicas with traffic: lower cost when idle

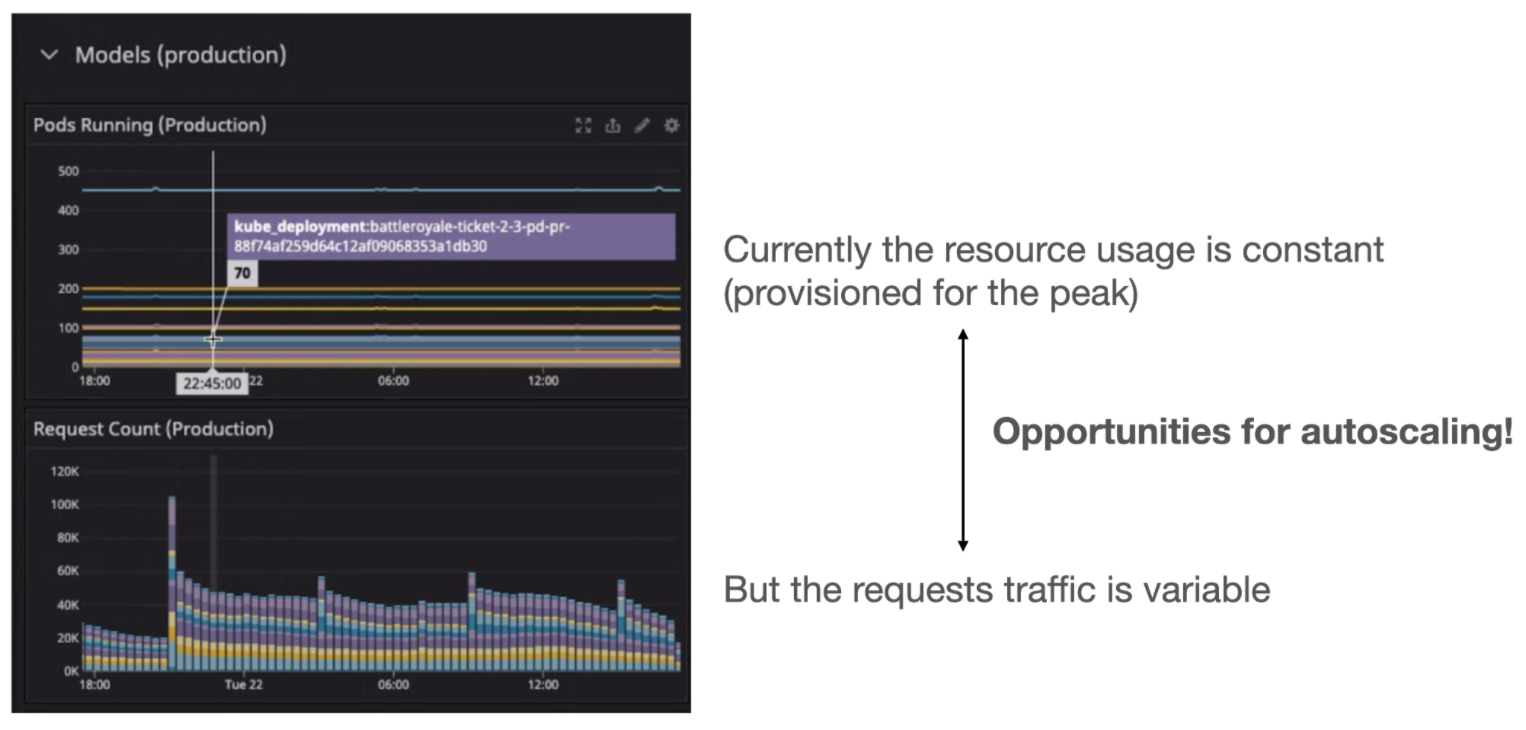

The live traffic pattern is variable and predictable. Serve’s replica autoscaling drives the cost down further during idle time.

Lastly, the request traffic is not constant: it goes up and down as users log in and out of the games. Ray Serve provides autoscaling mechanisms that shrink a deployment and save resources when traffic is low.
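As a rough illustration of the idea, the sketch below models target-based replica autoscaling in plain Python. It is a simplified model, not Ray Serve's actual algorithm, and the target of 10 in-flight requests per replica and the bounds of 1 and 6 replicas are hypothetical. In Ray Serve itself, bounds of this kind are supplied through the `autoscaling_config` option of `serve.deployment`, which accepts `min_replicas` and `max_replicas`.

```python
import math


def desired_replicas(
    ongoing_requests: int,
    target_per_replica: int,
    min_replicas: int,
    max_replicas: int,
) -> int:
    """Pick enough replicas to keep each near its target load, within bounds."""
    wanted = math.ceil(ongoing_requests / target_per_replica)
    return max(min_replicas, min(max_replicas, wanted))


# Hypothetical settings for one model deployment.
autoscaling_config = {"min_replicas": 1, "max_replicas": 6}

for load in (0, 35, 500):
    replicas = desired_replicas(load, target_per_replica=10, **autoscaling_config)
    print(f"{load} in-flight requests -> {replicas} replicas")
```

With these settings the deployment shrinks to a single replica when idle and is capped at 6 replicas at peak, instead of running 6 replicas around the clock.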

Conclusion

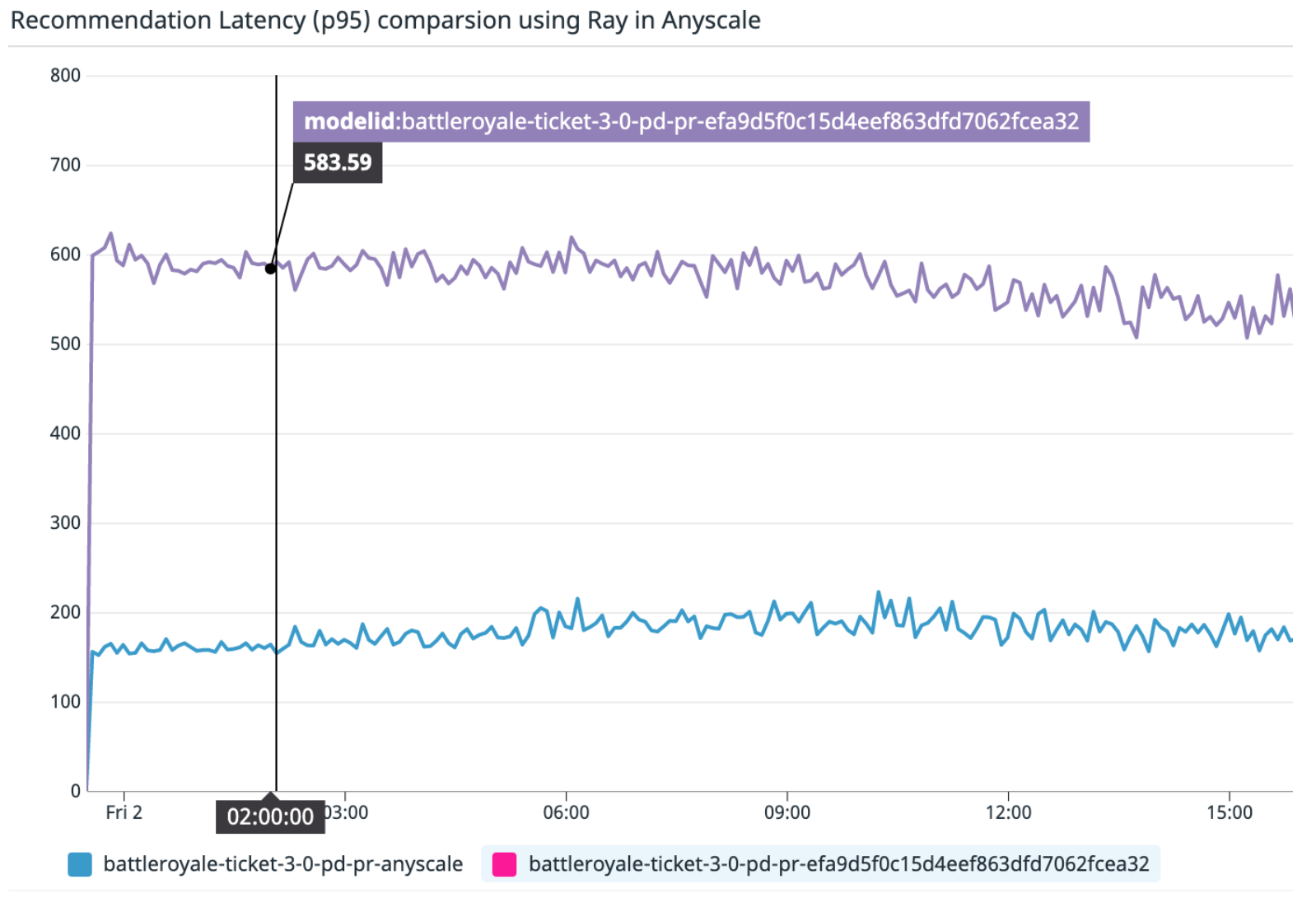

Comparing tail latency of the new Ray Serve system (blue) to the existing system (red) with live production traffic, Ray Serve is 3x faster than the existing system.

The Ray Serve version of the model ensemble service was deployed to serve production traffic and showed a visible drop in p95 latency. Because of this, the Wildlife team has been moving more models into Ray Serve for production.

From a technical perspective, the key takeaway from this post is that Ray Serve makes it easy to do “model disaggregation and model composition”, that is, splitting models into separate components and running those in parallel. Ray Serve enables scaling each part of the request pipeline separately. The resource savings, achieved while keeping latency down, were due to:

(1) better cache efficiency because there were fewer models residing in memory.

(2) pipelining inference allowed both preprocessing and models to be busy at the same time.

(3) autoscaling, which resulted in fewer resources being used when traffic was low, instead of always provisioning for the peak.