How Anyscale Solves Infrastructure Challenges Related to LLM Applications

TLDR: Anyscale’s Private Endpoints solves key challenges for infrastructure engineers and LLM developers alike, enabling organizations to adopt and build Generative AI Applications faster, more reliably, and cost-effectively. Get started now.

Anyscale Private Endpoints Summary:

Rapid Deployment: Anyscale Private Endpoints enables quick deployment of any LLM, including Llama-2, Mistral-7B, Mixtral, and more, within 10 minutes.

Customizable: Allows optimizing for specific application needs like low latency or high throughput.

Multi-cloud: Leverage GPUs from major cloud providers to run your LLMs. Anyscale Private Endpoints can run on any GPU.

Operational Ease: Offers pre-configured alerting and monitoring, fault tolerance, and autoscaling to reduce the operational burden of running LLMs.

Enhanced Throughput and Cost Efficiency: Features like performance optimizations and smart instance management for cost-effective operations.

Privacy and Security: Anyscale Private Endpoints runs in your cloud account and ensures SOC 2 Type 2 compliance and robust security.

Future-Proof: Integrated with the Anyscale managed Ray platform, providing a path for future AI workloads.

Unlocking the Power of Large Language Models: Anyscale's Private Endpoints

In today's fast-paced tech landscape, harnessing the potential of Large Language Models (LLMs) can be challenging due to high infrastructure cost, maintenance, and security. Anyscale's Private Endpoints is designed to address these challenges head-on.

1. Faster Time to Value

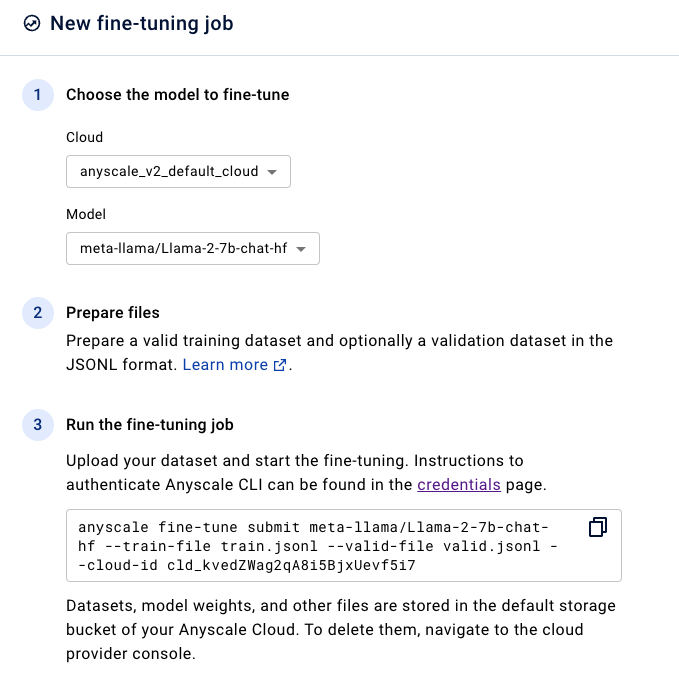

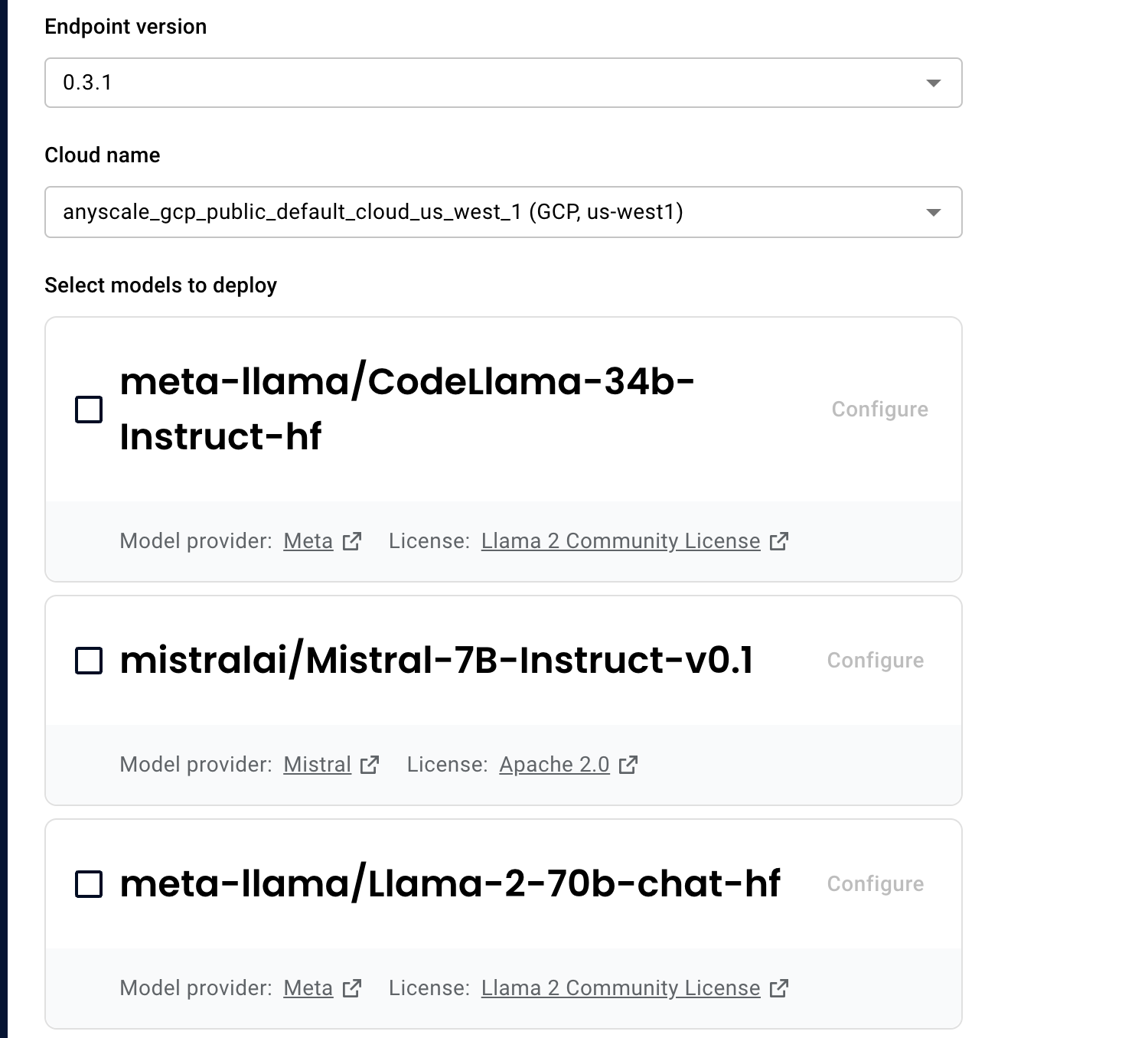

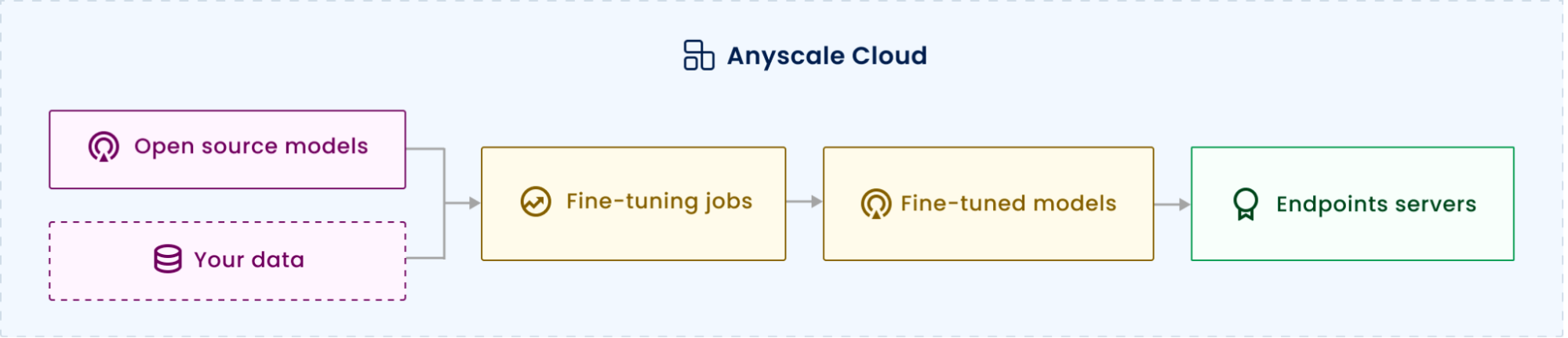

Anyscale’s Private Endpoints packaged solution lets you fine-tune and deploy your LLMs in under 10 minutes. With a click of a button, users can deploy the Llama-2 family of models, Mistral-7B, Mixtral, Zephyr, or any LLM. You can deploy to your cloud of choice, whether that's your production environment serving live traffic or a development environment where you build your application.

A simple CLI enables developers to fine-tune models securely in their cloud on their own proprietary data and hardware, with no need to configure infrastructure or write code.

But there’s more to it than fine-tuning or deploying a single model. Anyscale’s Private Endpoints automatically enables the deployment of many models, configuring the routing and multiplexing to reduce costs while delivering highly personalized and customized applications. Each model is autoscaled independently, which helps improve hardware efficiency. Check out an example of the sort of personalized applications you can build with Private Endpoints!
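To make the multiplexing idea concrete, here is a minimal sketch in plain Python. It is not Anyscale's implementation or API: `ModelMultiplexer`, `fake_loader`, and the model IDs are hypothetical names chosen for illustration. It assumes a replica can hold only a fixed number of models at once and evicts the least recently used one when a request arrives for a model that is not loaded.

```python
from collections import OrderedDict
from typing import Callable, List


class ModelMultiplexer:
    """Toy LRU cache that keeps at most `max_loaded` models resident per replica."""

    def __init__(self, loader: Callable[[str], object], max_loaded: int = 2) -> None:
        self._loader = loader  # hypothetical function that loads a model by ID
        self._max_loaded = max_loaded
        self._loaded: "OrderedDict[str, object]" = OrderedDict()
        self.load_count = 0  # number of (expensive) model loads performed

    def get(self, model_id: str) -> object:
        if model_id in self._loaded:
            # Cache hit: mark the model as most recently used.
            self._loaded.move_to_end(model_id)
            return self._loaded[model_id]
        if len(self._loaded) >= self._max_loaded:
            # Evict the least recently used model to free accelerator memory.
            self._loaded.popitem(last=False)
        self._loaded[model_id] = self._loader(model_id)
        self.load_count += 1
        return self._loaded[model_id]

    def loaded_ids(self) -> List[str]:
        return list(self._loaded)


def fake_loader(model_id: str) -> str:
    # Stand-in for loading weights; returns a label instead of a real model.
    return f"model:{model_id}"


mux = ModelMultiplexer(fake_loader, max_loaded=2)
for requested in ["llama-2-7b", "mistral-7b", "llama-2-7b", "zephyr-7b"]:
    mux.get(requested)
```

In this toy run, the repeated request for the same model is served without reloading, which is the cost saving that routing requests for the same model to the same replica is meant to capture.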

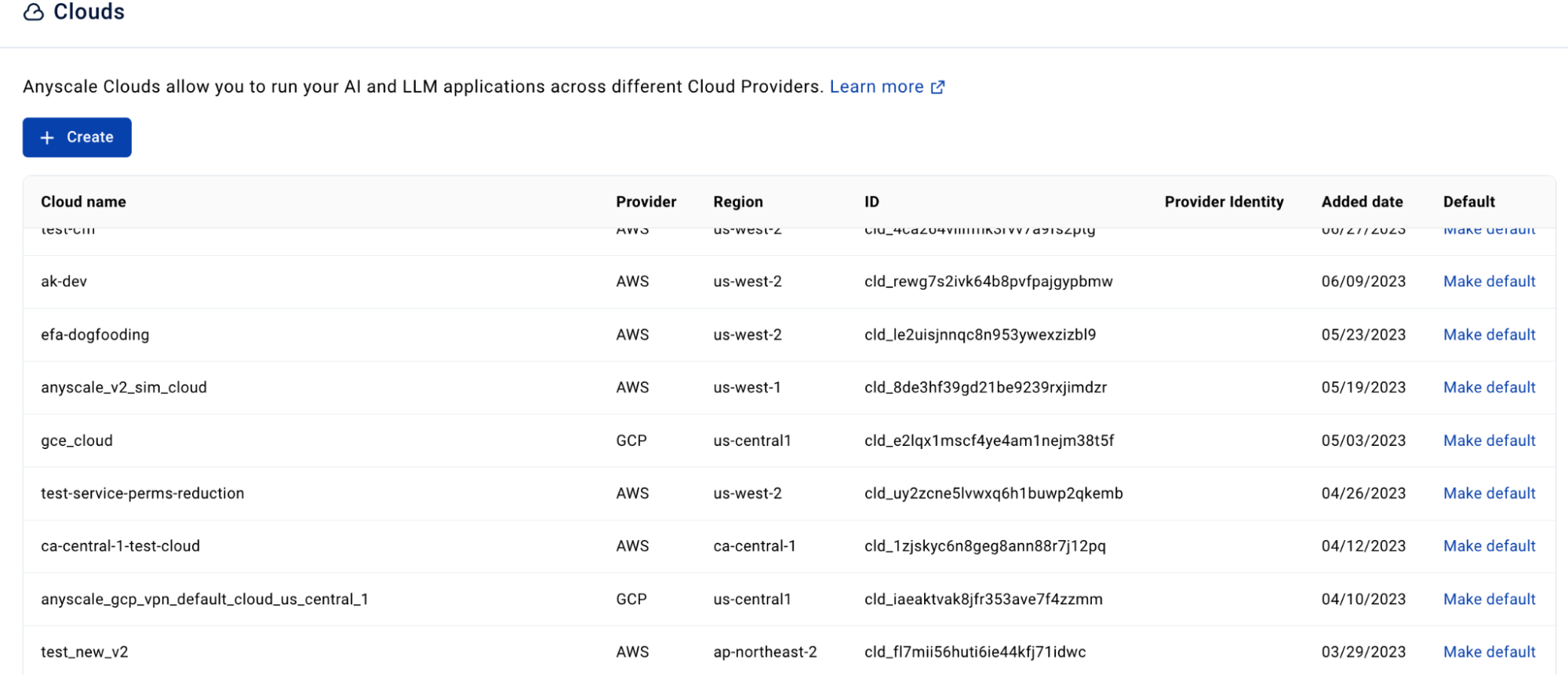

2. Any GPU, Multi-Cloud Optionality

Anyscale Private Endpoints can deploy on your cloud provider of choice, enabling you to use precious GPUs to run your LLM inference and fine-tuning workloads. Deploy into any of your cloud providers, whether it's AWS or GCP, or use different clouds with different setups. With Anyscale Private Endpoints you can deploy to a cloud that uses A10G accelerators for development to keep costs low, and then deploy the same solution to a production cloud set to use A100s and H100s.

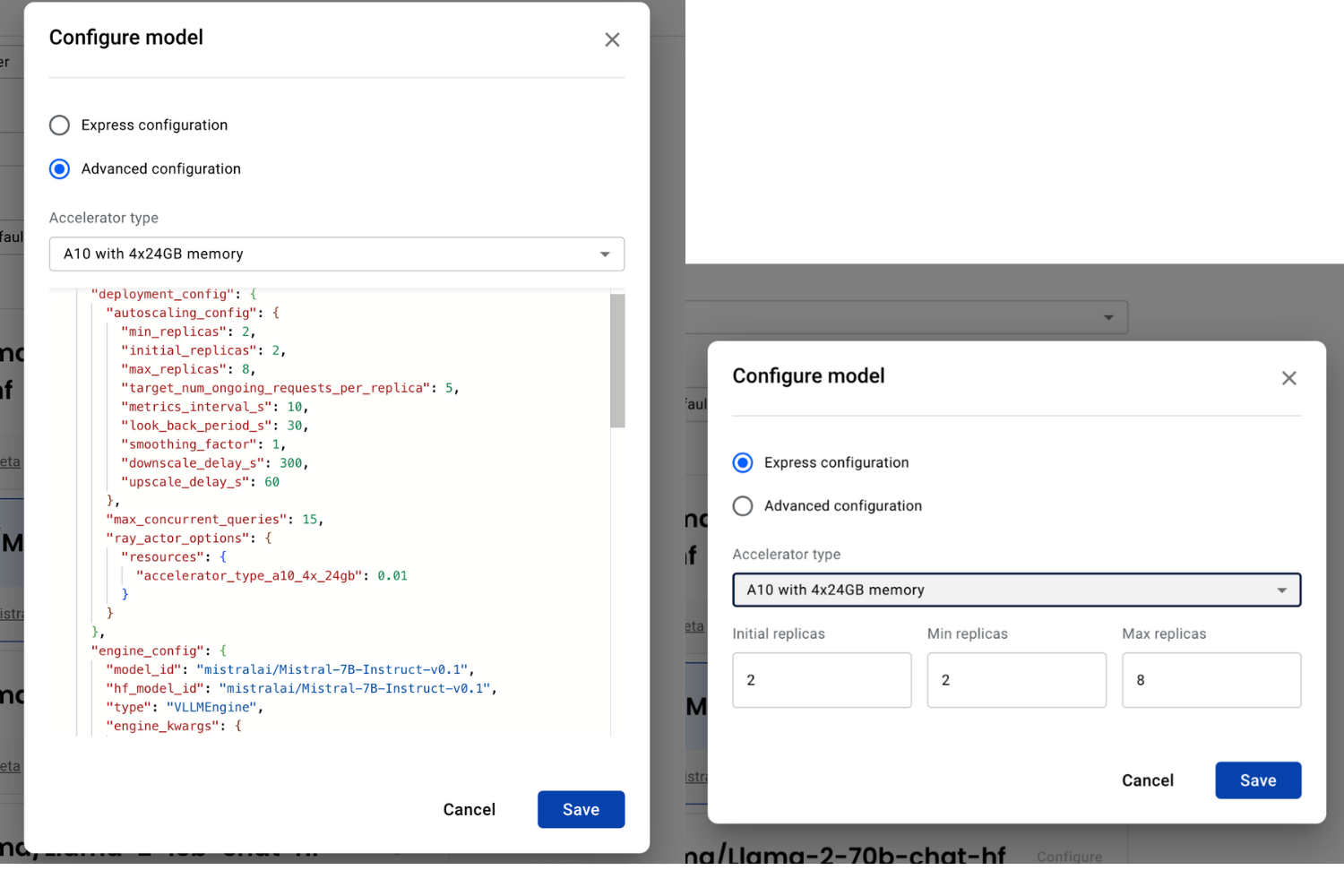

3. Unlimited Customizability: Optimize your application

Your deployment and LLM infrastructure need to meet your requirements, and those requirements can vary by application and workload. For example, text-to-audio applications often require extremely low latency, as measured by Time-to-First-Token (TTFT), while summarization applications may prioritize throughput over latency. With Private Endpoints, you can set up your applications and customize them to meet your performance requirements.
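As a rough illustration of the two metrics, the sketch below measures TTFT and overall token throughput for any iterable of streamed tokens. It is plain Python and does not call any Anyscale API: `measure_stream` and `fake_stream` are hypothetical helpers, and the simulated delays are made-up values standing in for a real model's prefill and decode times.

```python
import time
from typing import Iterable, Iterator, Tuple


def measure_stream(tokens: Iterable[str]) -> Tuple[float, float, int]:
    """Return (ttft_seconds, tokens_per_second, token_count) for a token stream."""
    start = time.perf_counter()
    first_token_at = None
    count = 0
    for _ in tokens:
        if first_token_at is None:
            # Time-to-First-Token: delay until the first token arrives.
            first_token_at = time.perf_counter()
        count += 1
    end = time.perf_counter()
    if first_token_at is None:
        raise ValueError("stream produced no tokens")
    ttft = first_token_at - start
    throughput = count / (end - start)
    return ttft, throughput, count


def fake_stream(n_tokens: int, prefill_s: float, per_token_s: float) -> Iterator[str]:
    # Simulated model: a prefill delay, then evenly spaced tokens.
    time.sleep(prefill_s)
    for i in range(n_tokens):
        yield f"tok{i}"
        time.sleep(per_token_s)


ttft, tps, n = measure_stream(fake_stream(20, prefill_s=0.05, per_token_s=0.005))
print(f"TTFT: {ttft:.3f}s, throughput: {tps:.1f} tokens/s over {n} tokens")
```

An interactive application would watch the first number, while a batch summarization job would care mostly about the second.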

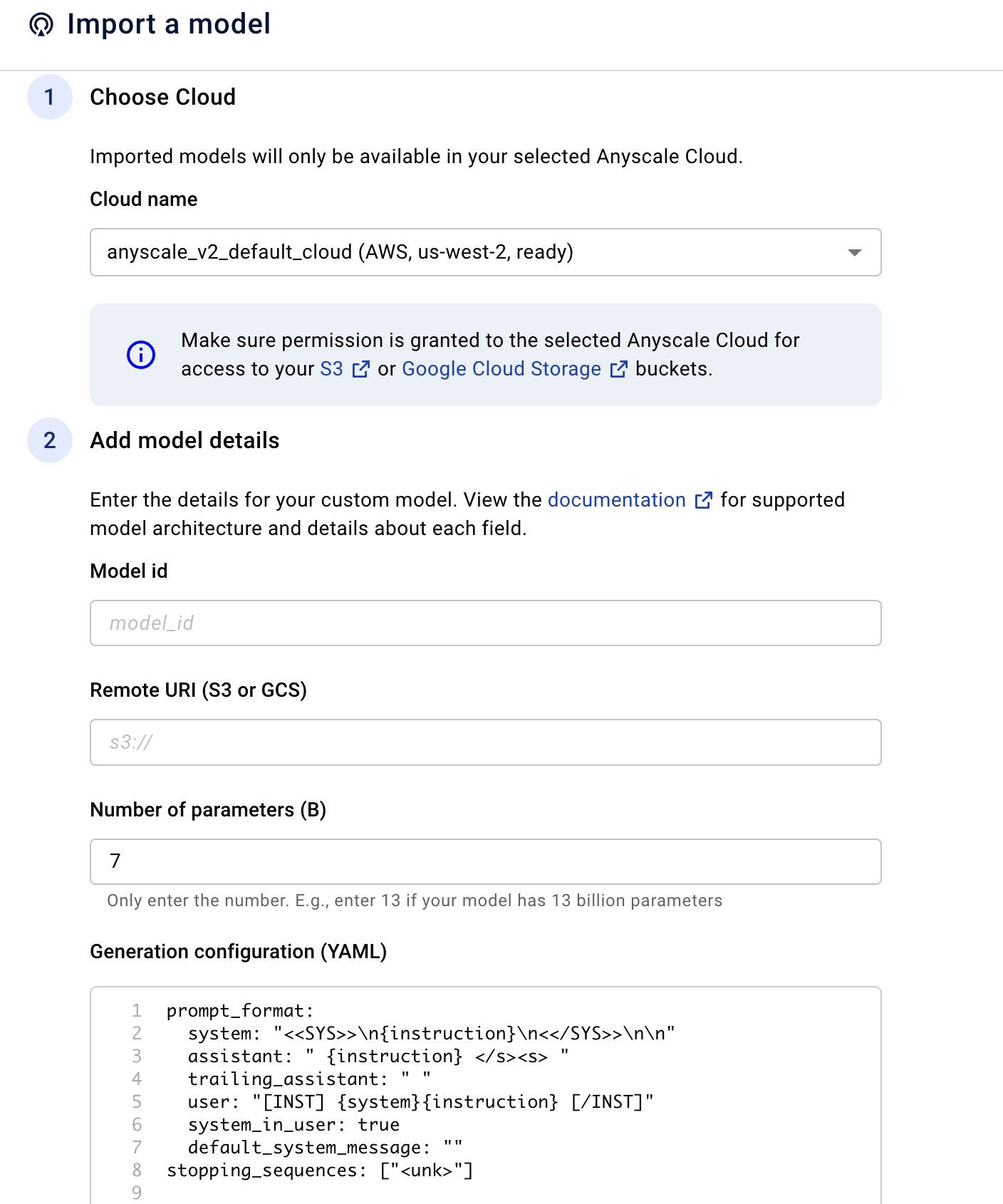

Customizability also means any model for any application. The explosion of LLMs and fine-tuned variants means off-the-shelf models may not work for your application. Anyscale Private Endpoints allows you to easily import custom models, including those you may have previously fine-tuned or models available on Hugging Face.

4. Reduced Operational Burden and Production Readiness

One of the most daunting tasks when deploying LLMs in production is ensuring a highly available, fault-tolerant system. Anyscale’s Private Endpoints significantly reduces the operational burden by providing:

Pre-configured multi-zone deployments.

An always-growing list of supported open source models like Llama-2 and Mistral.

Zero-downtime upgrades.

Integrated observability and alerting, including real-time alerts for unhealthy or failing services sent to your chosen notification channel, such as Slack or PagerDuty.

Autoscaling, including scale-to-zero.
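To show what scale-to-zero means in practice, here is a minimal sketch of a request-based autoscaling rule. It assumes a simple policy (replicas proportional to in-flight requests, clamped to a range) and is not taken from Anyscale's autoscaler; the function name and parameters are hypothetical.

```python
import math


def desired_replicas(ongoing_requests: int, target_per_replica: int,
                     min_replicas: int = 0, max_replicas: int = 8) -> int:
    """Replica count needed to keep per-replica load near the target."""
    if target_per_replica <= 0:
        raise ValueError("target_per_replica must be positive")
    # Enough replicas so that no replica exceeds the target load.
    needed = math.ceil(ongoing_requests / target_per_replica)
    # Clamp to the configured range; min_replicas=0 allows scale-to-zero.
    return max(min_replicas, min(max_replicas, needed))


for load in (0, 1, 9, 100):
    print(load, "->", desired_replicas(load, target_per_replica=4))
```

With `min_replicas=0`, an idle model releases all of its hardware; setting it to 1 trades some cost for avoiding a cold start on the next request.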

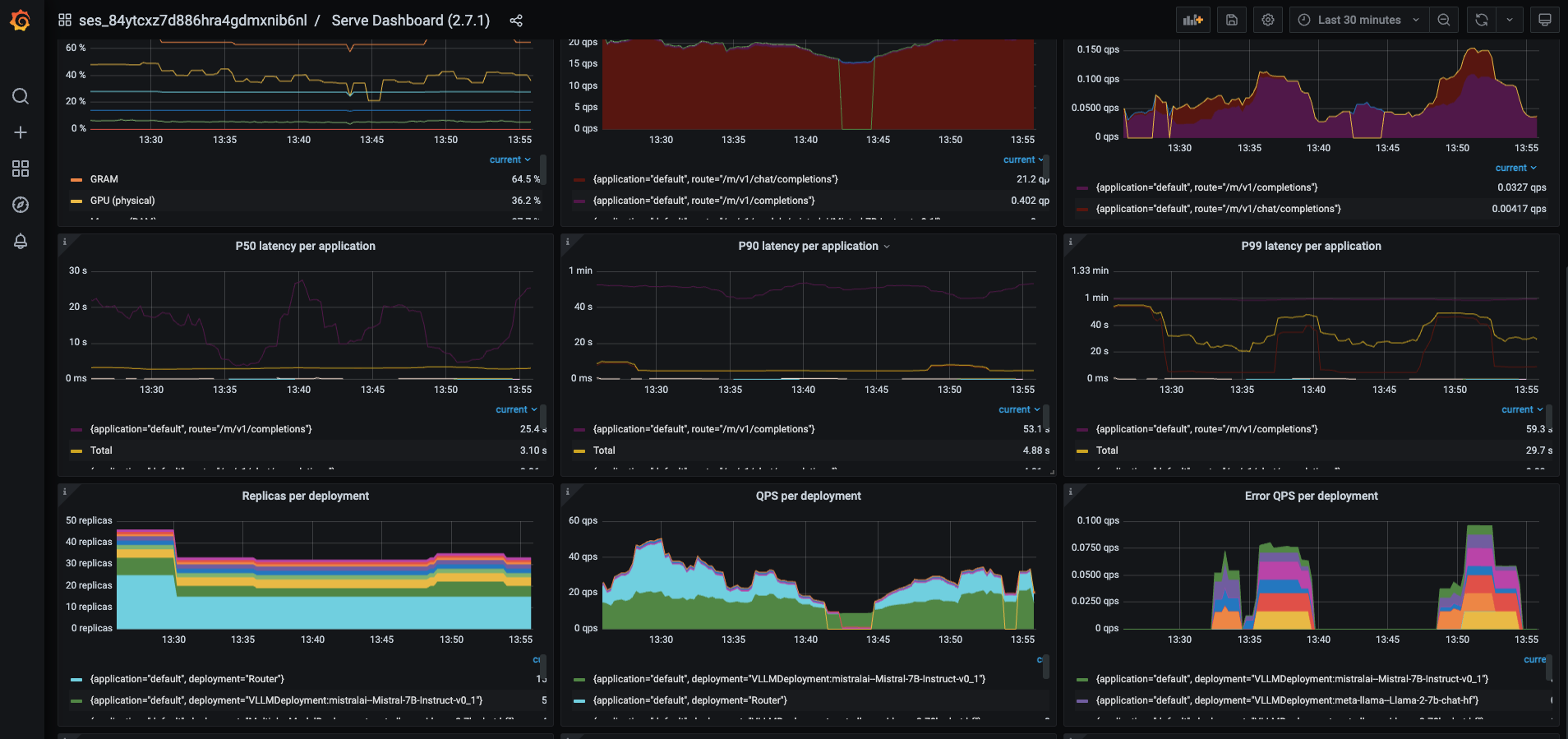

Example of preconfigured metrics available with Anyscale Private Endpoints

Anyscale’s Private Endpoints have been tried, tested, and proven, powering the Anyscale Endpoints solution. Building such robust systems can be far from straightforward. Anyscale simplifies this process and allows practitioners to focus on their applications rather than maintaining and upgrading AI infrastructure.

5. Leading Performance

Anyscale doesn't just simplify; it optimizes. Benefits include:

Instance startup times reduced to less than 60 seconds, plus model loading optimizations that swap and serve unique models under spiky traffic.

Machine pools designed to let you run LLMs on hardware from any cloud provider, including AWS, GCP, Lambda Labs, and more, to ensure GPU availability for your LLM workloads.

6. Future-Proof with a Comprehensive Platform

Powered by Ray, Anyscale Private Endpoints and the Anyscale platform provide a pathway to unlimited customizability and control for your AI workloads. Anyscale Endpoints customers can upgrade to the full Anyscale Platform for limitless flexibility:

An ecosystem of libraries for last-mile data processing, model training, reinforcement learning, or online inference for computer vision, many-model training, general deep learning, and more.

Accelerator templates and pre-built application examples to expedite deployment for common AI workloads.

A simple Python-first approach that eliminates the need to familiarize yourself with Kubernetes operators for new workloads. The Anyscale Platform powered by Ray is built for your AI workloads.

Specific to Large Language Models (LLMs), Ray’s flexibility allows Private Endpoints to quickly integrate the newest cutting-edge optimizations across the stack, ensuring your team spends time on your applications, not on implementing the latest algorithms.

Check out an overview video of Anyscale’s platform!

7. Security and Platform Services

Security is paramount when working with LLMs, and Anyscale’s Private Endpoints ensure your LLMs are deployed and fine-tuned in your cloud to mirror your security posture. Private Endpoints includes:

SOC 2 Type 2 compliance.

Cloud and resource isolation.

Customer-defined networking for added security.

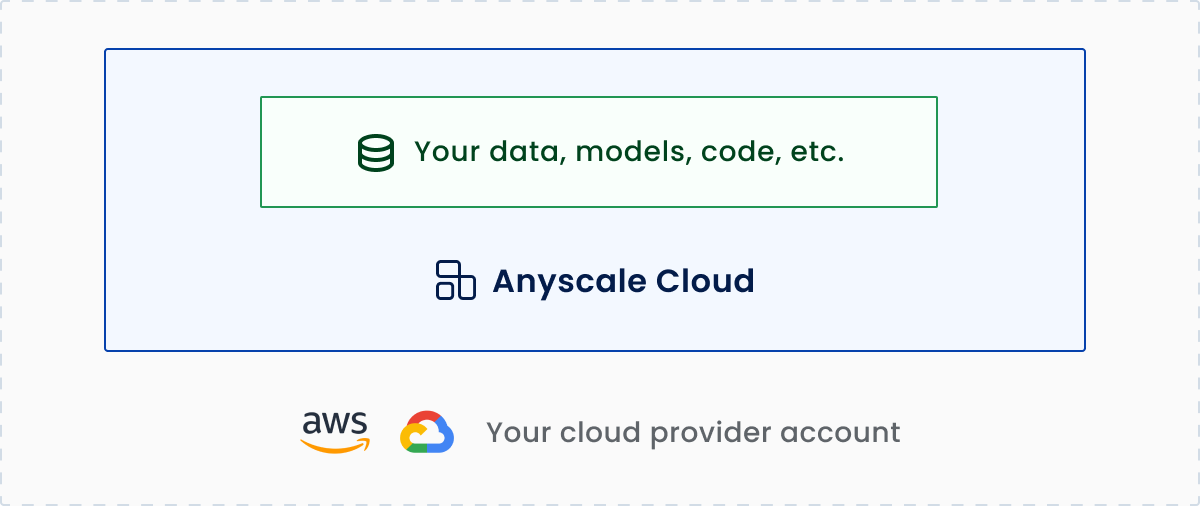

Anyscale Private Endpoints runs in customer clouds for enhanced privacy and security

Fine-tuning jobs run within the Anyscale Cloud deployed on the customer's cloud provider infrastructure

8. Dedicated Support

Anyscale isn’t just a platform; it's a partner. With Ray experts on standby, you're supported every step of the way. Our team proactively identifies and resolves issues, ensuring smooth operations and best-in-class support.

Get Started Today!

Private Endpoints are available today. Sign up to get access!