Four Reasons Why Leading Companies Are Betting On Ray

Why tech leaders and alpha geeks are using Ray.

The recent Ray Summit featured a range of real-world applications and use cases made possible by Ray. Presenters included companies who need to overcome their current scaling challenges and who employ Ray as a foundation for future AI applications. For example, Dario Gil (Head of IBM Research) delivered a keynote talk in which he envisioned a world where quantum computing is more widespread:

“The future is going to be this intersection between classical and quantum information. We have the opportunity to bring together the worlds of Ray and Qiskit and explore the possibilities. … There will be this aspect of orchestration and parallelization of both classical and quantum resources that Ray and Qiskit will be able to do together. The benefit we get from this combination is that as quantum computing continues to advance, we will get exponential speedups for a class of problems that are important to business and society.”

Enthusiasts and tinkerers (“alpha geeks”) are early indicators: they tend to discover and embrace new trends and tools before entrepreneurs and other technologists do. So why exactly are more and more alpha geeks turning to Ray? In this post, we describe a few common reasons why leading organizations are coalescing around Ray. We draw on presentations from Ray Summit, on the results of surveys we’ve conducted over the past year, and on conversations with companies who use Ray.

Simple yet Flexible API for Distributed Computing

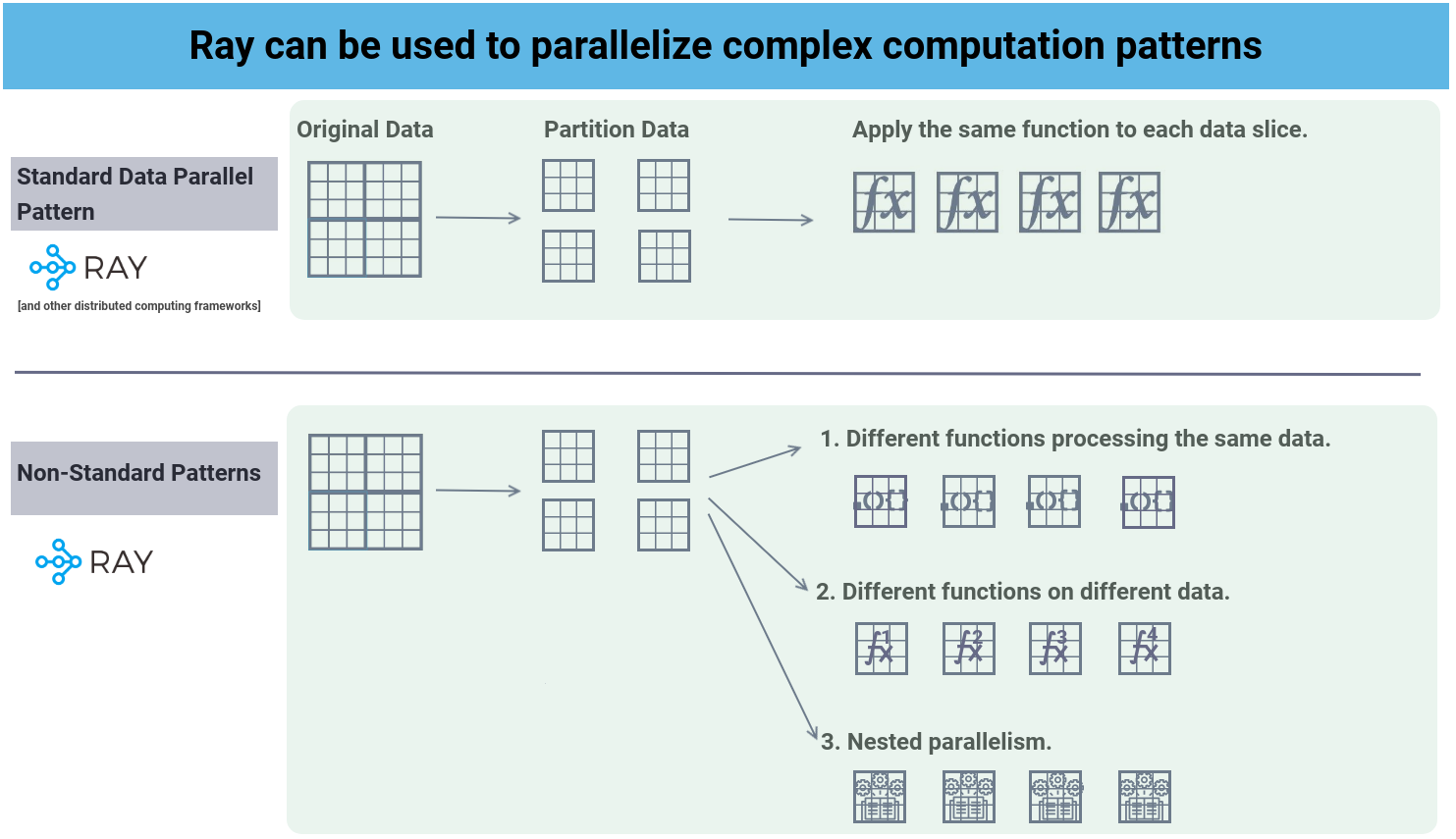

A key reason developers are attracted to Ray is its simple yet flexible API. Ray allows developers to easily specify the application logic (in Python) and “most distributed computing aspects come for free.” Ray’s flexibility stems from its ability to support both stateless computations (Tasks) and stateful computations (Actors), with inter-node communication greatly simplified by a shared Object Store. This enables Ray to implement distributed patterns that go beyond data parallelism, in which one runs the same function on each partition of a data set in parallel. Figure 1 illustrates some more complex patterns that frequently arise in machine learning applications and that Ray supports well:

Different functions processing the same data, e.g., training multiple models in parallel on the same input to identify the best model.

Different functions on different data, e.g., processing time series from different sensors in parallel.

Nested parallelism, e.g., predictive maintenance on a set of devices, where for each device, we evaluate different models and pick the model with the lowest error.

Figure 1: Some computational patterns that arise in machine learning applications.

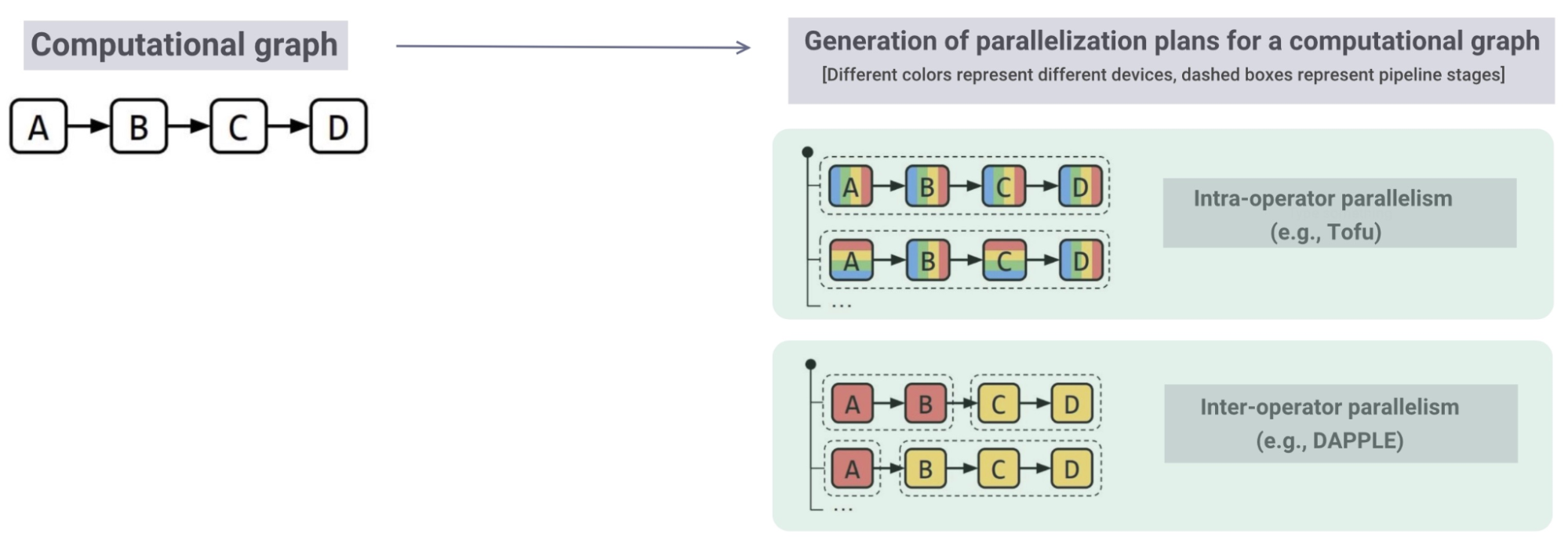

A recent example taking advantage of Ray’s flexibility is Alpa, an ambitious project developed by researchers from Google, AWS, UC Berkeley, Duke, and CMU. Alpa is a tool that simplifies training of large deep learning models. When a model is too big to fit on a single device (e.g., GPU), scaling often involves partitioning a computation graph of operators across many devices, possibly located on different servers. These operators perform different computations (as they implement different components of the neural network), they are stateful, and run in parallel. More specifically, there are two types of parallelism: inter-operator parallelism, where different operators are assigned to different devices, and intra-operator parallelism, where the same operator is split across different devices.

Figure 2: Parallelization plans for a computational graph, from “Alpa: Automating Inter- and Intra-Operator Parallelism for Distributed Deep Learning”. A, B, C, and D are operators. Each color represents a different device (i.e., GPU) executing a partition or the full operator leveraging Ray actors.

Examples of intra-operator parallelism include data parallelism (Deepspeed-Zero), operator parallelism (Megatron-LM), and mixture-of-experts parallelism (GShard-MoE). Alpa is the first tool to unify these parallelism techniques, by automatically finding and executing the best inter-operator and intra-operator parallelism patterns in the computation graph. This way, Alpa automatically partitions, schedules, and executes the training computation of very large deep learning models on hundreds of GPUs.

To support these unified parallel patterns, Alpa developers chose Ray as the distributed execution framework because of Ray’s ability to support these highly flexible parallelism patterns and mappings between operations and devices.

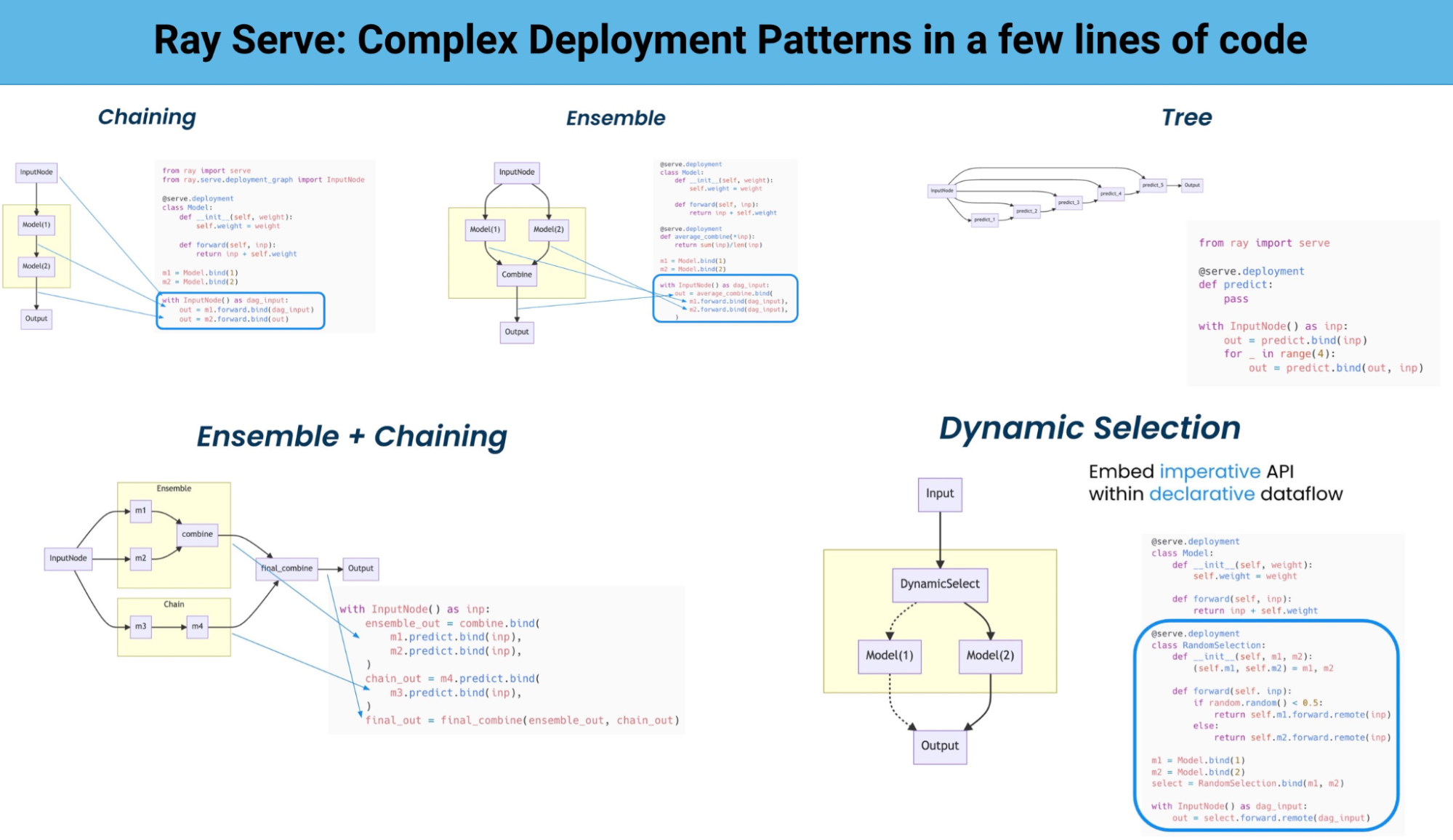

Another illustration of Ray’s flexibility is the Ray Serve (“Serve”) library, which is used to provide model inference at scale. Serve supports complex deployment patterns that often involve deploying more than one model at a time, a capability that is fast becoming important as machine learning models get embedded in more applications and systems. Such deployments require the orchestration of multiple Ray actors, where different actors provide inference for different models. Furthermore, Ray Serve handles both batch and online inference (scoring), and can scale to thousands of models in production.

Figure 3: Deployment patterns from “Multi-model composition with Ray Serve deployment graphs” by Simon Mo, used with permission.

Scaling Diverse Workloads

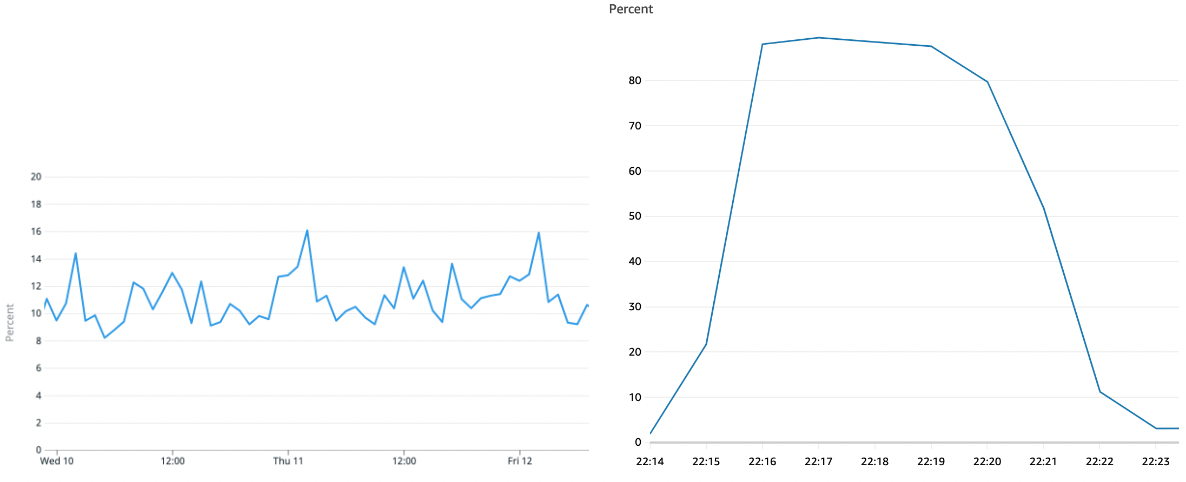

Another key property of Ray is scalability. An example of this is how Instacart uses Ray to power their large-scale fulfillment ML pipeline. Ray allows their ML modelers to utilize large clusters in an easy, productive, and resource-efficient way. Fundamentally, Ray treats the entire cluster as a single, unified pool of resources and takes care of optimally mapping compute Tasks/Actors to the pool. By doing so, Ray largely eliminates non-scalable factors in the system – for example, the hard-partitioned task queues in Instacart’s legacy architecture.

“Ray enables them to run their models on very large data sets without having to get involved in the details of how to run a model on a large number of machines”

Figure 4: The “before” vs. after of resource utilization at Instacart’s Fulfillment ML pipeline

Another example of utilizing Ray to achieve scalable computing is OpenAI. According to Greg Brockman, President, Chairman & Co-Founder, Ray enables them to train their largest models:

“We looked at a half-dozen distributed computing projects, and Ray was by far the winner. … We are using Ray to train our largest models. It’s been very helpful to us to be able to scale up to unprecedented scale. … Ray owns a whole layer, and not in an opaque way.”

For users of popular machine learning frameworks, Ray Train scales model training for Torch, XGBoost, TensorFlow, scikit-learn, and more. Teams with more specialized needs (OpenAI, Hugging Face, Cohere, etc.) use Ray to train and deploy some of the largest machine learning models in production today.

Finally, Ray includes an autoscaler. With autoscaling, Ray clusters can automatically scale up and down based on the resource demands of an application while maximizing utilization and minimizing costs. The autoscaler adds worker nodes when the Ray workload exceeds the cluster's capacity, and removes worker nodes whenever they sit idle.

Unification: One substrate to support ML workloads, graph processing, unstructured data processing

In a previous post, we listed the key reasons why Ray is a great foundation for machine learning platforms. The reasons we listed include the rich ecosystem of native and external libraries, along with out-of-the-box fault tolerance, resource management, and efficient utilization.

Here are some of the companies who use Ray in their ML platforms or infrastructure:

Figure 5: A sample of companies who use Ray in their ML platforms or infrastructure.

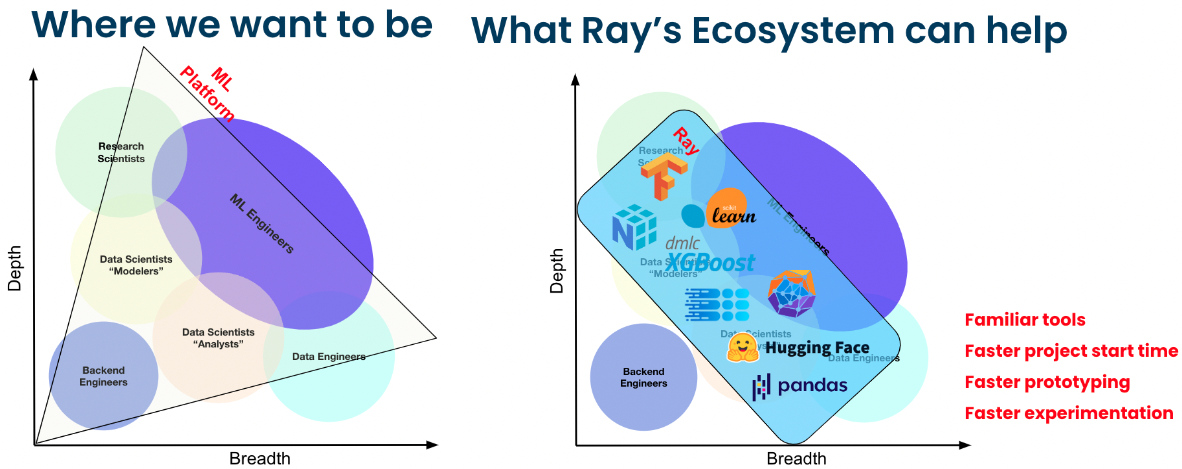

Spotify’s talk at Ray Summit shows how a Ray-based unified substrate allows their scientists and engineers to access a rich ecosystem of Python-based ML libraries and tools:

Figure 6: How Ray’s ecosystem powers Spotify’s ML scientists and engineers

Looking beyond distributed training, users are attracted to Ray because it can scale other computations that are relevant for ML applications:

Graph computations at scale, as illustrated by projects at Bytedance and Ant Group, and recent work on knowledge graphs for industrial applications.

Reinforcement learning for recommenders, industrial applications, gaming, and beyond.

There are several companies using Ray to build bespoke tools for processing and handling new data types like images, video, and text. Since most data processing and data management tools target structured or semi-structured data, there are many highly optimized solutions for scaling tabular data (dataframes) or JSON files. Meanwhile, AI applications increasingly rely on unstructured data such as text, images, video, and audio. In fact, there is a pressing need for tools to process and manage unstructured data, and we've come across a few startups doing this. The reason data teams are opting to use Ray to process unstructured data is that it’s fast (C++), simple (Python native), scalable, and flexible enough to handle complex data processing patterns, and both streaming and batch workloads.

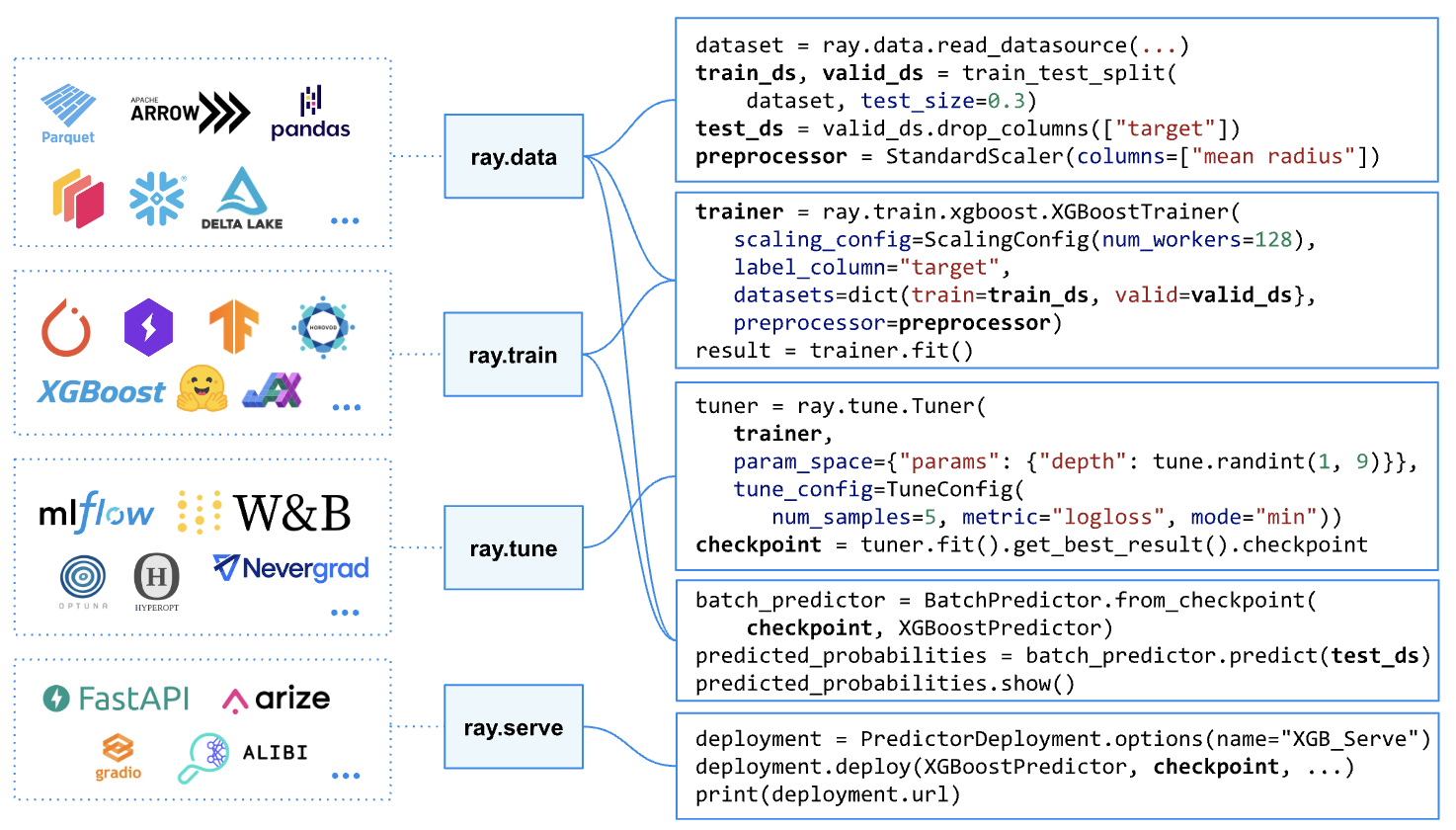

During Ray Summit, we also introduced Ray AI Runtime (AIR)* as part of Ray 2.0. Ray AIR simplifies things further by providing a streamlined, scalable, and unified toolkit for machine learning applications.

Figure 7: How Ray AIR unifies ML libraries in a simple way.

Support for Heterogeneous Hardware

In response to the exponential growth of ML and data processing workloads, as well as the slowdown of Moore’s Law, hardware manufacturers have released an increasing number of hardware accelerators. These accelerators are coming not only from traditional hardware manufacturers like Nvidia, Intel, and AMD, but also from cloud providers (e.g., TPUs from Google, Inferentia from AWS) and a plethora of startups (e.g., Graphcore, Cerebras). Furthermore, the ubiquitous Intel and AMD CPUs are now challenged by ARM CPUs, as illustrated by AWS’s Graviton instances. As such, when scaling a workload, one needs to develop not only distributed applications, but heterogeneous distributed applications.

One of the key properties of Ray is natively supporting heterogeneous hardware by allowing developers to specify such hardware when instantiating a Task or Actor. For example, a developer can specify in the same application that one Task needs 1 CPU, while an Actor requires 2 CPUs and 1 Nvidia A100 GPU.

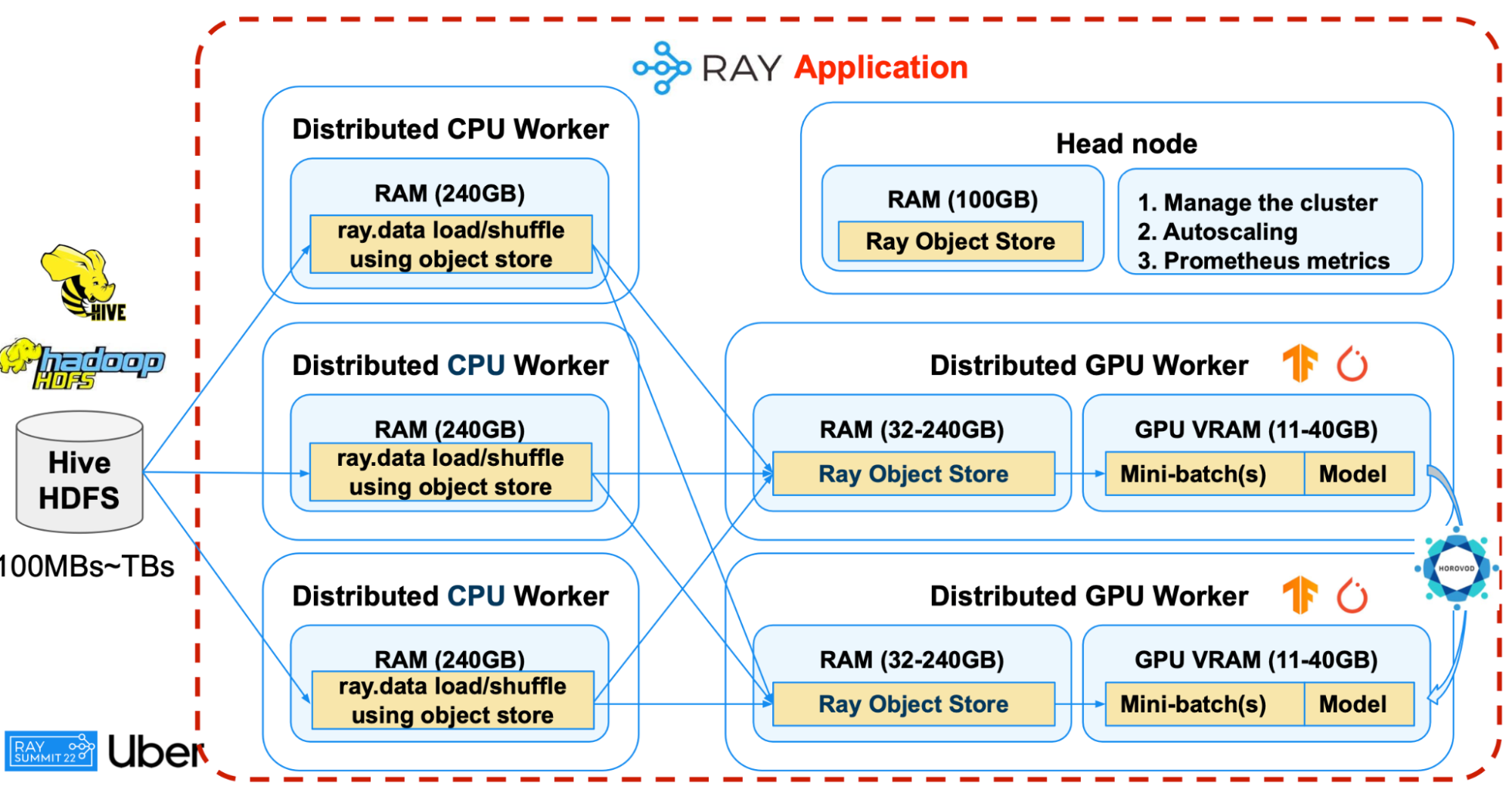

An illustrative example is the production deep learning pipeline at Uber. A heterogeneous setup of 8 GPU nodes and 9 CPU nodes improves pipeline throughput by 50%, while substantially saving capital cost, compared with the legacy setup of 16 GPU nodes.

Figure 8: Heterogeneous deep learning cluster at Uber

A more future-oriented example is how IBM is planning to leverage Ray to empower developers to utilize both classic data centers and quantum computers by writing simple Python code on their laptops.

Figure 9: How IBM uses Qiskit + Ray to orchestrate Quantum + Classical computing

In summary, Ray provides simple and flexible APIs that give developers easy access to scalable, unified distributed computing on heterogeneous hardware. As a result, it has become a top choice for innovative AI applications. To learn more, please see the full Ray Summit program and stay tuned to our meetup series!

*We are sunsetting the "Ray AIR" concept and namespace starting with Ray 2.7. The changes follow the proposal outlined in this REP.