Fine-tuning Llama-3, Mistral and Mixtral with Anyscale

In this blog post, we’ll explore the process of fine-tuning some of the most popular large language models (LLMs) such as Llama-3, Mistral, and Mixtral using Anyscale. Specifically, we’ll demonstrate how to:

Fine-tune an LLM using Anyscale’s llm-forge: We’ll cover the fine-tuning process end-to-end from preparing the input data to launching the fine-tuning job and monitoring the process.

Serve your model with Anyscale’s ray-llm: We’ll explain how to serve both LoRA and full-parameter fine-tuned models, and how to leverage ray-llm for easy deployment and LoRA multiplexing.

Let’s get started!

Fine-tuning LLMs with Anyscale - Overview

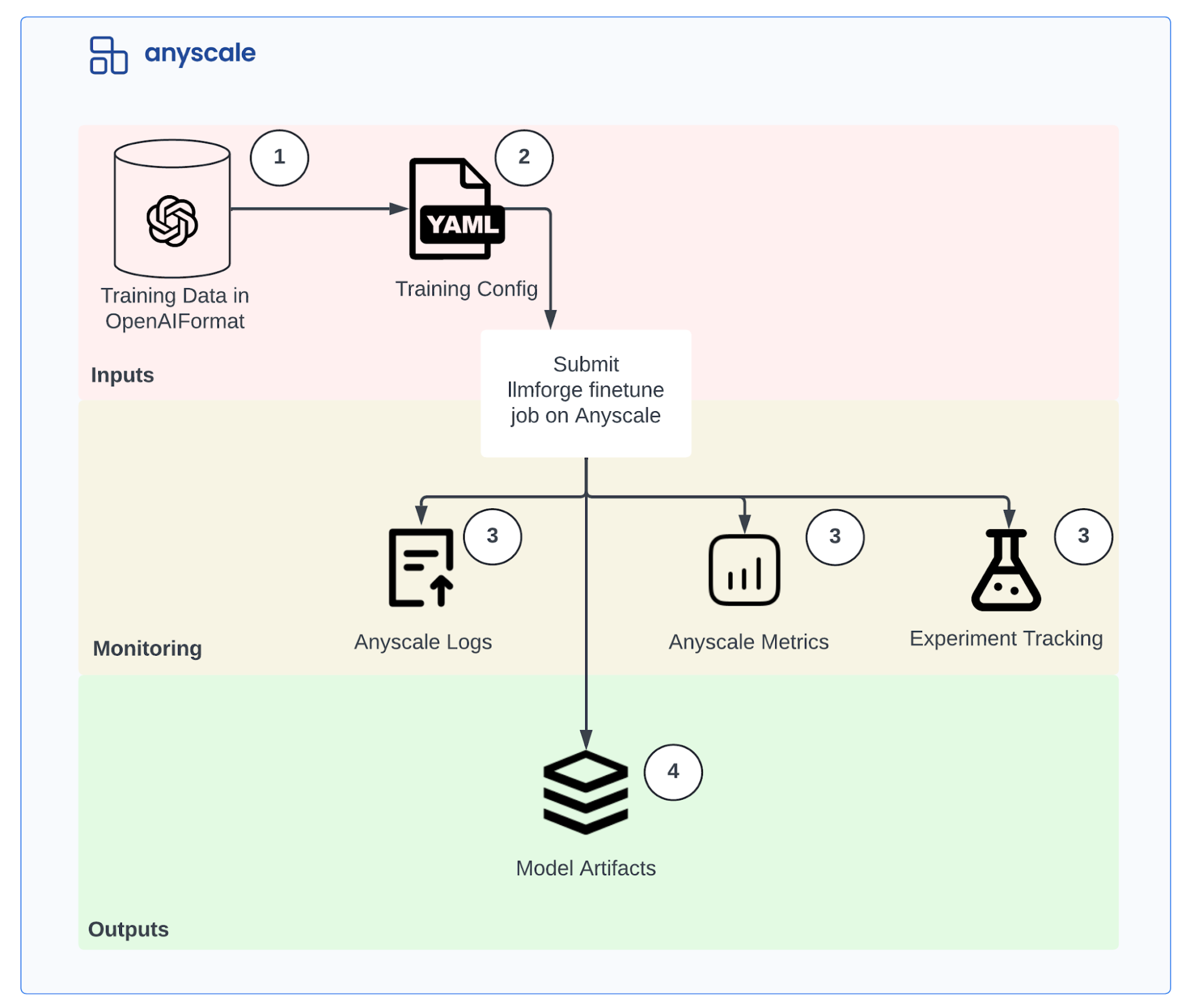

Here is a high-level overview of the steps involved in fine-tuning LLMs with Anyscale:

Prepare the data: Ensure that your training and validation data is in the OpenAI format.

Launch a fine-tuning job: Use the llm-forge library to launch a fine-tuning job on Anyscale.

Monitor the fine-tuning process: Keep an eye on the training logs and metrics to track performance.

Inspect the produced model checkpoint: Check the model checkpoint and use it for deployment.

Below is a diagram that helps visualize the process:

Step 1 - Prepare the data

Before you can start fine-tuning your LLM, you need to ensure that your training and validation data is in the correct format. The llm-forge library expects the data to be in the OpenAI dataset format, which is a JSON-based format that represents a conversation between a user and an assistant. Each JSON object in the dataset contains a “messages” field with a list of messages exchanged between the user and the assistant. Each message has a “role” field (either “system”, “user”, or “assistant”) and a “content” field that holds the actual text of the message.

Here’s an example of what the data should look like:

{"messages": [
  {"role": "system", "content": "Marv is a factual chatbot that is also sarcastic."},
  {"role": "user", "content": "What's the capital of France?"},
  {"role": "assistant", "content": "Paris, as if everyone doesn't know that already."}
]}
{"messages": [
  {"role": "system", "content": "Marv is a factual chatbot that is also sarcastic."},
  {"role": "user", "content": "Who wrote 'Romeo and Juliet'?"},
  {"role": "assistant", "content": "Oh, just some guy named William Shakespeare. Ever heard of him?"}
]}

llm-forge will convert the OpenAI dataset format into the appropriate prompt format for the chosen base model. This conversion is crucial because even a slight misspecification in the prompt format can significantly degrade the quality of the fine-tuned model.

Once you have prepared your data, you can proceed to the next step of launching the fine-tuning job on Anyscale.
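Before uploading a dataset, it can help to check it against the structure described above. The following is a minimal sketch of such a check, not part of llm-forge: the function names are hypothetical, and it assumes one JSON record per line, as the `.jsonl` extension of the example paths suggests.

```python
import json

# Roles named in the dataset format described above.
VALID_ROLES = {"system", "user", "assistant"}


def validate_record(record):
    """Return a list of problems found in one dataset record (empty if none)."""
    messages = record.get("messages") if isinstance(record, dict) else None
    if not isinstance(messages, list) or not messages:
        return ["record must contain a non-empty 'messages' list"]
    problems = []
    for i, message in enumerate(messages):
        if not isinstance(message, dict):
            problems.append(f"message {i} is not an object")
            continue
        if message.get("role") not in VALID_ROLES:
            problems.append(f"message {i} has invalid role {message.get('role')!r}")
        if not isinstance(message.get("content"), str):
            problems.append(f"message {i} is missing a string 'content'")
    return problems


def validate_jsonl(lines):
    """Validate an iterable of JSONL lines; return {line_number: problems}."""
    report = {}
    for line_number, line in enumerate(lines, start=1):
        if not line.strip():
            continue  # ignore blank lines
        try:
            record = json.loads(line)
        except json.JSONDecodeError as exc:
            report[line_number] = [f"invalid JSON: {exc.msg}"]
            continue
        problems = validate_record(record)
        if problems:
            report[line_number] = problems
    return report


if __name__ == "__main__":
    sample = [
        '{"messages": [{"role": "user", "content": "Hi"}, '
        '{"role": "assistant", "content": "Hello!"}]}',
        '{"messages": [{"role": "bot", "content": "Hi"}]}',
    ]
    print(validate_jsonl(sample))
```

An empty report means every record has a "messages" list whose entries carry a valid "role" and a string "content". The check says nothing about whether the conversations themselves are sensible.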

Step 2 - Launch a fine-tuning job

With llm-forge, you launch a fine-tuning job by preparing a YAML configuration file. This file allows you to specify all the details of the fine-tuning process, including the data, model, training hyperparameters, and output settings. llm-forge offers pre-built YAML configurations for the most common base models so you don’t have to figure out most parameters from scratch.

Launching a LoRA-based fine-tuning job

Here’s an example YAML configuration for a LoRA-based fine-tuning of the Meta-Llama-3-8B:

model_id: meta-llama/Meta-Llama-3-8B # <-- change this to the model you want to fine-tune
train_path: s3://air-example-data/gsm8k/train.jsonl # <-- change this to the path to your training data
valid_path: s3://air-example-data/gsm8k/test.jsonl # <-- change this to the path to your validation data. This is optional
context_length: 512 # <-- change this to the context length you want to use
num_devices: 4 # <-- change this to total number of GPUs that you want to use
num_epochs: 10 # <-- change this to the number of epochs that you want to train for
train_batch_size_per_device: 16
eval_batch_size_per_device: 16
learning_rate: 1e-4
padding: "longest" # This will pad batches to the longest sequence. Use "max_length" when profiling to profile the worst case.
num_checkpoints_to_keep: 1
output_dir: /mnt/local_storage
deepspeed:
  config_path: deepspeed_configs/zero_3_offload_optim+param.json
dataset_size_scaling_factor: 10000 # internal flag. No need to change
flash_attention_2: true
trainer_resources:
  memory: 53687091200 # 50 GB memory
worker_resources:
  accelerator_type:A10G: 0.001
lora_config:
  r: 8
  lora_alpha: 16
  lora_dropout: 0.05
  target_modules:
    - q_proj
    - v_proj
    - k_proj
    - o_proj
    - gate_proj
    - up_proj
    - down_proj
    - embed_tokens
    - lm_head
  task_type: "CAUSAL_LM"
  modules_to_save: []
  bias: "none"
  fan_in_fan_out: false
  init_lora_weights: true

This configuration sets up a LoRA-based fine-tuning job for Meta-Llama-3-8B, using 4 A10G GPUs and training for 10 epochs. To launch the fine-tuning job, use the following command:

llmforge anyscale finetune "finetune/lora/meta-llama-3-8b.yaml"

You can also integrate with tools like Weights & Biases by setting your API key before launching your fine-tuning job:

export WANDB_API_KEY={YOUR_WANDB_API_KEY}
llmforge anyscale finetune "finetune/lora/meta-llama-3-8b.yaml"

Launching a full-parameter fine-tuning job

Alternatively, here’s an example YAML configuration for a full-parameter fine-tuning of the Llama 3 8B model:

model_id: meta-llama/Meta-Llama-3-8B # <-- change this to the model you want to fine-tune
train_path: s3://air-example-data/gsm8k/train.jsonl # <-- change this to the path to your training data
valid_path: s3://air-example-data/gsm8k/test.jsonl # <-- change this to the path to your validation data. This is optional
context_length: 512 # <-- change this to the context length you want to use
num_devices: 16 # <-- change this to total number of GPUs that you want to use
num_epochs: 10 # <-- change this to the number of epochs that you want to train for
train_batch_size_per_device: 8
eval_batch_size_per_device: 16
learning_rate: 5e-6
padding: "longest" # This will pad batches to the longest sequence. Use "max_length" when profiling to profile the worst case.
num_checkpoints_to_keep: 1
output_dir: /mnt/local_storage
deepspeed:
  config_path: deepspeed_configs/zero_3_offload_optim+param.json
dataset_size_scaling_factor: 10000 # internal flag. No need to change
flash_attention_2: true
trainer_resources:
  memory: 53687091200 # 50 GB memory
worker_resources:
  accelerator_type:A10G: 0.001

This configuration sets up a full-parameter fine-tuning job for the Llama 3 8B model, using 16 A10G GPUs and training for 10 epochs. Note that both LoRA and full-parameter fine-tuning can leverage DeepSpeed optimizations, which you can read more about on the DeepSpeed configuration page.

The key difference between the LoRA and full-parameter configurations is that the latter is significantly more resource-intensive: it requires 16 GPUs as opposed to 4, even though each GPU handles only half the training batch size. We’ll discuss the trade-offs between these two approaches in the “Advanced tuning tips” section.
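To put numbers on that comparison, here is a small sketch that derives the number of sequences processed per optimizer step from the two example configurations. It assumes plain data parallelism with no gradient accumulation, which the configurations in this post do not spell out, so treat the results as an estimate. The `RunConfig` class is a hypothetical helper, not an llm-forge type.

```python
from dataclasses import dataclass


@dataclass
class RunConfig:
    """Subset of the YAML fields that determine batch size (hypothetical helper)."""

    name: str
    num_devices: int
    train_batch_size_per_device: int
    context_length: int

    @property
    def global_batch_size(self) -> int:
        # Sequences per optimizer step, assuming data parallelism
        # and no gradient accumulation.
        return self.num_devices * self.train_batch_size_per_device

    @property
    def max_tokens_per_step(self) -> int:
        # Upper bound: every sequence padded or truncated to context_length.
        return self.global_batch_size * self.context_length


# Values copied from the LoRA and full-parameter example configurations.
lora = RunConfig("lora", num_devices=4, train_batch_size_per_device=16, context_length=512)
full = RunConfig("full_param", num_devices=16, train_batch_size_per_device=8, context_length=512)

for cfg in (lora, full):
    print(f"{cfg.name}: {cfg.global_batch_size} sequences/step, "
          f"up to {cfg.max_tokens_per_step} tokens/step")
```

Under these assumptions the full-parameter run uses four times the GPUs to process twice as many sequences per step, which is one way to see why it is the more expensive option.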

To launch the fine-tuning job, use the following command:

llmforge anyscale finetune "finetune/full_param/meta-llama-3-8b.yaml"

Advanced tuning tips

Should you perform LoRA-based or full-parameter fine-tuning?

There is no general answer to this, but here are some considerations:

The quality of the fine-tuned models will, in most cases, be comparable if not the same.

LoRA shines if:

You want to serve many fine-tuned models at once yourself.

You want to rapidly experiment (because fine-tuning, downloading, and serving the model take less time).

Full-parameter shines if:

You want to ensure that your fine-tuned model has the maximum quality.

You want to serve only one fine-tuned version of the model.

You can learn more about this in one of our blog posts, where you’ll also find guidance on the LoRA parameters and why, in most cases, you don’t need to change them.
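To give a feel for why LoRA checkpoints are quicker to train, download, and serve, the sketch below counts the trainable parameters a LoRA adapter adds. It uses the standard LoRA count of r × (in_features + out_features) per adapted module, with r = 8 and the target modules from the LoRA configuration in this post. The layer dimensions are assumptions taken from the publicly documented Llama-3-8B architecture, and the base model size is rounded.

```python
# Assumed Llama-3-8B dimensions (hidden size, key/value projection size,
# MLP intermediate size, vocabulary size, number of decoder layers).
HIDDEN, KV, MLP, VOCAB, LAYERS = 4096, 1024, 14336, 128256, 32

# (in_features, out_features) of each targeted module inside one decoder layer.
PER_LAYER_MODULES = {
    "q_proj": (HIDDEN, HIDDEN),
    "k_proj": (HIDDEN, KV),
    "v_proj": (HIDDEN, KV),
    "o_proj": (HIDDEN, HIDDEN),
    "gate_proj": (HIDDEN, MLP),
    "up_proj": (HIDDEN, MLP),
    "down_proj": (MLP, HIDDEN),
}

# Targeted modules that exist once per model rather than once per layer.
SHARED_MODULES = {
    "embed_tokens": (VOCAB, HIDDEN),
    "lm_head": (HIDDEN, VOCAB),
}

BASE_PARAMS = 8_000_000_000  # rounded size of the base model


def lora_params(in_features: int, out_features: int, r: int) -> int:
    """Parameters in the two low-rank matrices added to one module."""
    return r * (in_features + out_features)


def adapter_params(r: int = 8) -> int:
    """Total trainable parameters across all targeted modules."""
    per_layer = sum(lora_params(i, o, r) for i, o in PER_LAYER_MODULES.values())
    shared = sum(lora_params(i, o, r) for i, o in SHARED_MODULES.values())
    return per_layer * LAYERS + shared


if __name__ == "__main__":
    total = adapter_params(r=8)
    print(f"LoRA trainable parameters: {total:,}")
    print(f"Share of base model: {total / BASE_PARAMS:.2%}")
```

With these assumptions the adapter is a fraction of a percent of the base model, which is consistent with the faster iteration described above.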

How to optimize for compute cost?

Before optimizing for compute, ensure that you have selected a context length that is long enough for your dataset. If you have very few data points in your dataset that require a much larger context than the others, consider removing them. The model of your choice and fine-tuning technique should also suit your data.
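One way to act on this is to look at the distribution of example lengths before picking a context length. The sketch below is illustrative only: it uses a whitespace split as a stand-in token counter, which undercounts compared to a real tokenizer and ignores the tokens added by the prompt format, so you would swap in the tokenizer of your base model. The function names are hypothetical.

```python
import math


def count_tokens(text: str) -> int:
    # Placeholder counter: whitespace split. Replace with the base model's
    # tokenizer to get realistic numbers.
    return len(text.split())


def record_length(record, token_counter=count_tokens) -> int:
    """Total length of all message contents in one dataset record."""
    return sum(token_counter(m["content"]) for m in record["messages"])


def suggest_context_length(records, coverage=0.99, token_counter=count_tokens):
    """Return the smallest length covering `coverage` of the records,
    plus the indices of the records that exceed it."""
    if not records:
        raise ValueError("records must not be empty")
    lengths = [record_length(r, token_counter) for r in records]
    ranked = sorted(lengths)
    # Nearest-rank percentile: the value at position ceil(coverage * n).
    rank = max(1, math.ceil(coverage * len(ranked)))
    cutoff = ranked[rank - 1]
    outliers = [i for i, n in enumerate(lengths) if n > cutoff]
    return cutoff, outliers


if __name__ == "__main__":
    short = {"messages": [{"role": "user", "content": "two words"}]}
    long = {"messages": [{"role": "user", "content": "word " * 10}]}
    cutoff, outliers = suggest_context_length([short, short, short, long], coverage=0.75)
    print(f"suggested length: {cutoff}, records above it: {outliers}")
```

The returned indices are the candidates to inspect and possibly remove before settling on `context_length`.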

If you want to use a different compute configuration, we suggest the following workflow to find a suitable one:

Start with a batch size of 1

Choose a GPU instance type that you think will give you good flops/$. If you are not sure, here is a rough guideline:

A10 nodes for high availability

A100 nodes for lower availability but better flops/$

Anything higher-end if you have the means of acquiring them

Do some iterations of trial and error on instance types and DeepSpeed settings to fit the workload while keeping other settings fixed

Use DeepSpeed stage 3 (all default configs in this template use stage 3)

Try to use DeepSpeed offloading only if it reduces the minimum number of instances you have to use

DeepSpeed offloading slows down training but allows for larger batch sizes because of a smaller GPU memory footprint

Use as few instances as possible. Fine-tune on the same machine if possible.

The GPU to GPU communication across machines is very expensive compared to the memory savings it could provide. You can use a cheap CPU-instance as a head-node for development and a GPU-instance that can scale down as a worker node for the heavy lifting.

Training single-node on A100s may end up cheaper than multi-node on A10s if availability is not an issue

Be aware that evaluation and checkpointing introduce their own memory requirements

If things look good, run fine-tuning for a full epoch with evals.

After you have followed the steps above, increase batch size as much as possible without OOMing.

We do not guarantee that this will give you optimal settings, but have found this workflow to be helpful ourselves in the past.

Step 3 - Monitor the fine-tuning process

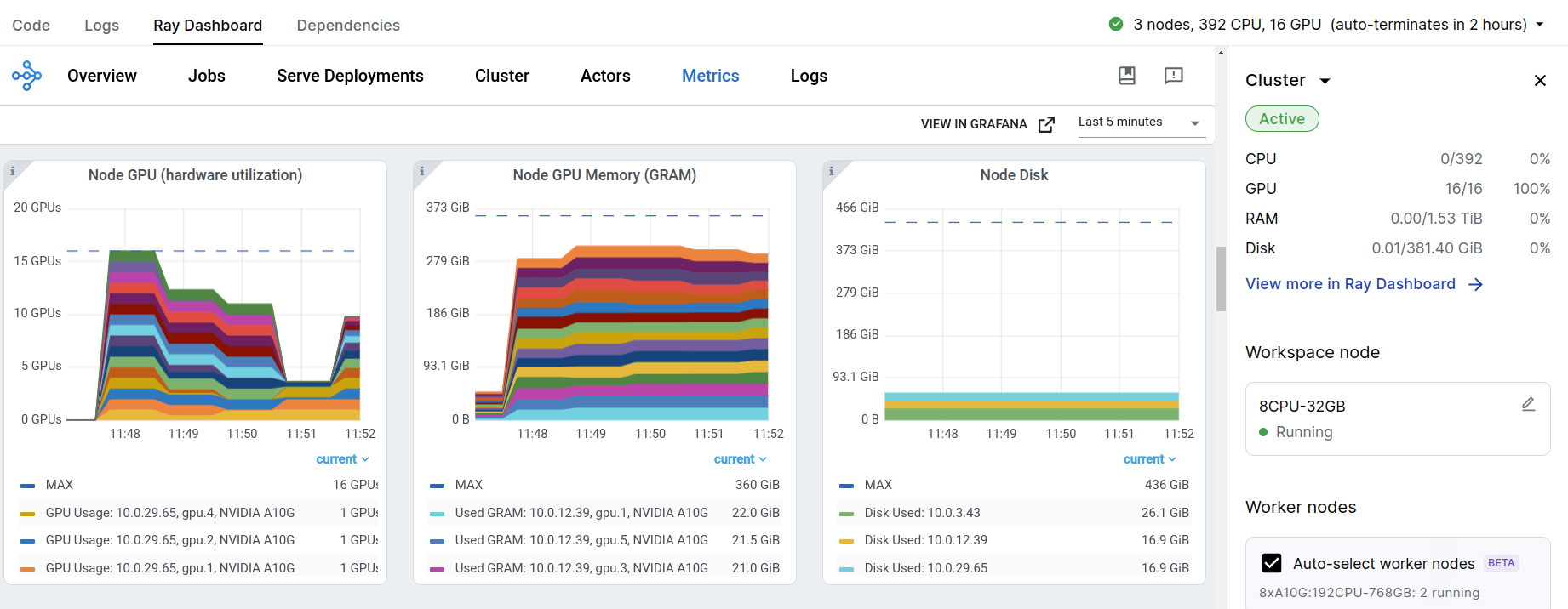

As your fine-tuning job runs on the Anyscale platform, you’ll want to monitor the progress and performance metrics. Anyscale provides a convenient Ray Dashboard that allows you to monitor various aspects of your training run.

In the Ray Dashboard, you can check the GPU usage and other key metrics.

In the logs of the fine-tuning job, you’ll see detailed information about the training process and performance metrics, such as:

Training loss over time

Validation loss and perplexity

Throughput of trained tokens

Time spent on forward and backward passes

Overall training time and iterations

Below is an example of the output you should see in the logs:

Training finished iteration 84 at 2024-06-03 11:11:32. Total running time: 16min 44s
╭─────────────────────────────────────────────────────────╮
│ Training result │
├─────────────────────────────────────────────────────────┤
│ checkpoint_dir_name checkpoint_000005 │
│ time_this_iter_s 36.40451 │
│ time_total_s 844.85971 │
│ training_iteration 84 │
│ avg_bwd_time_per_epoch 3.04739 │
│ avg_fwd_time_per_epoch 2.93967 │
│ avg_train_loss_epoch 0.07368 │
│ bwd_time 2.63804 │
│ epoch 11 │
│ eval_loss 0.26874 │
│ eval_time_per_epoch 13.15118 │
│ fwd_time 2.78809 │
│ learning_rate 0. │
│ num_iterations 7 │
│ perplexity 1.30831 │
│ step 6 │
│ total_trained_steps 84 │
│ total_update_time 511.17685 │
│ train_loss_batch 0.0213 │
│ train_time_per_epoch 42.60657 │
│ train_time_per_step 5.42907 │
│ trained_tokens 704400 │
│ trained_tokens_this_iter 2172 │
│ trained_tokens_throughput 1377.99668 │
│ trained_tokens_throughput_this_iter 400.28001 │
╰─────────────────────────────────────────────────────────╯

Additionally, the output includes the name of the checkpoint directory where the fine-tuned model is being saved. This allows you to easily access the checkpoint and use the fine-tuned model for deployment or further experimentation.

By closely monitoring the fine-tuning process, you can ensure that your model is converging as expected and make any necessary adjustments to the training configuration or hyperparameters. Finally, if you enable Weights & Biases, you can track your fine-tuning experiments and compare performance across different runs.
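When reading these metrics, it helps to know how some of them relate to each other. The sketch below checks two relationships against the values from the example log. Perplexity as the exponential of the evaluation loss is the standard definition. The throughput relationship (trained tokens divided by total update time) is our inference from the numbers in that log rather than documented behavior, so treat it as an assumption.

```python
import math

# Values copied from the example training log above.
metrics = {
    "eval_loss": 0.26874,
    "perplexity": 1.30831,
    "trained_tokens": 704400,
    "total_update_time": 511.17685,
    "trained_tokens_throughput": 1377.99668,
}


def perplexity_from_loss(loss: float) -> float:
    """Perplexity is the exponential of the mean cross-entropy loss."""
    return math.exp(loss)


def throughput(tokens: int, seconds: float) -> float:
    """Tokens processed per second of update time (assumed definition)."""
    return tokens / seconds


if __name__ == "__main__":
    ppl = perplexity_from_loss(metrics["eval_loss"])
    tps = throughput(metrics["trained_tokens"], metrics["total_update_time"])
    print(f"perplexity: computed {ppl:.5f}, logged {metrics['perplexity']}")
    print(f"throughput: computed {tps:.5f}, logged {metrics['trained_tokens_throughput']}")
```

Small differences in the last digits are expected because the logged loss is itself rounded.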

Step 4 - Serve the fine-tuned models

Now that you’ve successfully fine-tuned your model, it’s time to serve it for inference.

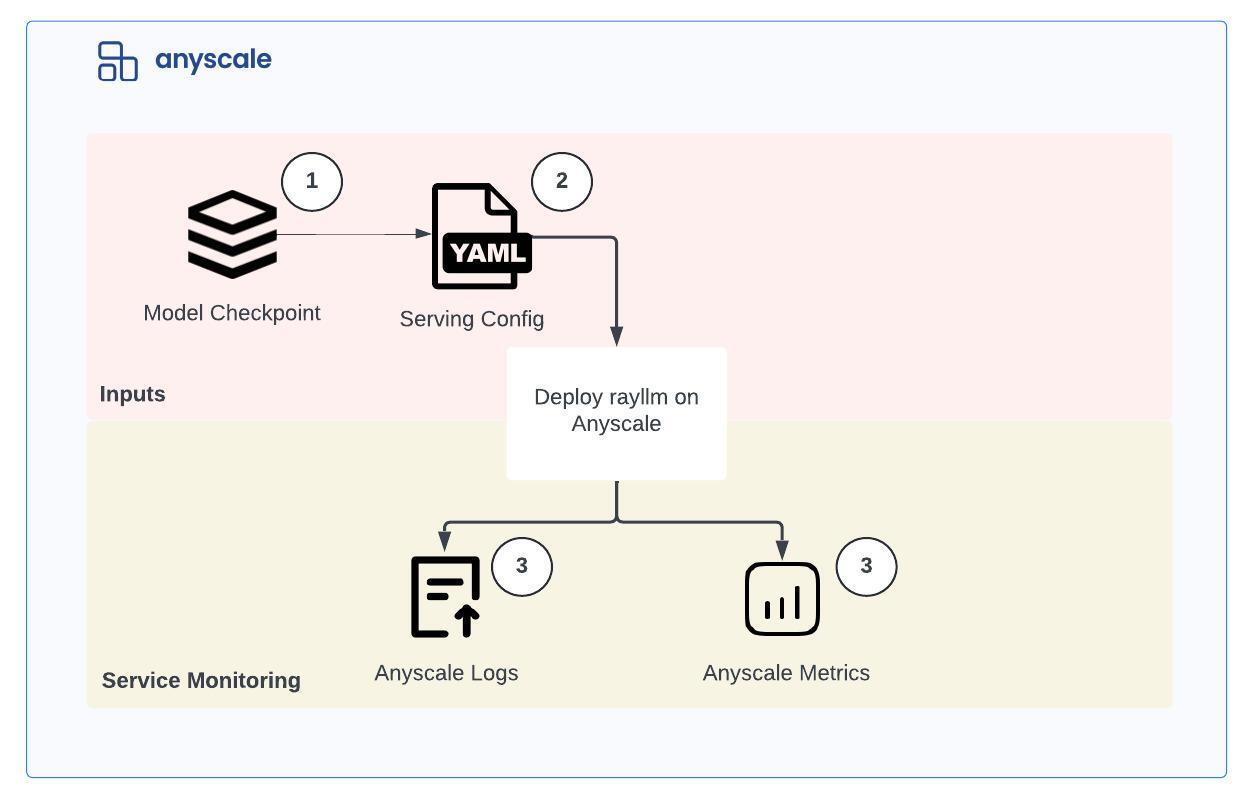

The key steps involved are:

Save the fine-tuned model checkpoint to a location accessible by Anyscale (e.g., a cloud storage service like S3 or GCS).

Create a ray-llm compatible YAML configuration file that specifies the model to be served and any additional settings.

Deploy the custom model to Anyscale Services, which will make it available as a scalable, production-ready endpoint, and monitor it there.

Below is a diagram that helps visualize the process:

Serving full-parameter fine-tuned models

For full-parameter fine-tuning, you can use the stored checkpoint from the fine-tuning process and serve the model using Anyscale Services. The checkpoint will contain the complete set of fine-tuned model parameters.

Here’s an example of the checkpoint location:

Best checkpoint is stored in:
{CHECKPOINT_PATH}/TorchTrainer_2024-06-03_10-54-47/TorchTrainer_5e49d_00000_0_2024-06-03_10-54-48/checkpoint_000003

And here’s an example YAML configuration for serving the full-parameter fine-tuned model:

applications:
- args:
    embedding_models: []
    function_calling_models: []
    models: []
    multiplex_lora_adapters: []
    multiplex_models: []
    vllm_base_models:
    - ./model_config/meta-llama--Meta-Llama-3-8B-Instruct.yaml
  import_path: aviary_private_endpoints.backend.server.run:router_application
  name: llm-endpoint
  route_prefix: /

In this configuration, we reference the fine-tuned model configuration in the vllm_base_models field, which points to a YAML file containing the model checkpoint location.

Furthermore, you can refer to this working example that demonstrates how to deploy your own LLM.

Serving LoRA-based fine-tuned models

For LoRA-based fine-tuning, you will use a YAML configuration file very similar to the one for full-parameter fine-tuning, with two differences:

Instead of referencing the model under vllm_base_models, you will use multiplex_models.

You will store your LoRA model checkpoint under the dynamic_lora_loading_path.

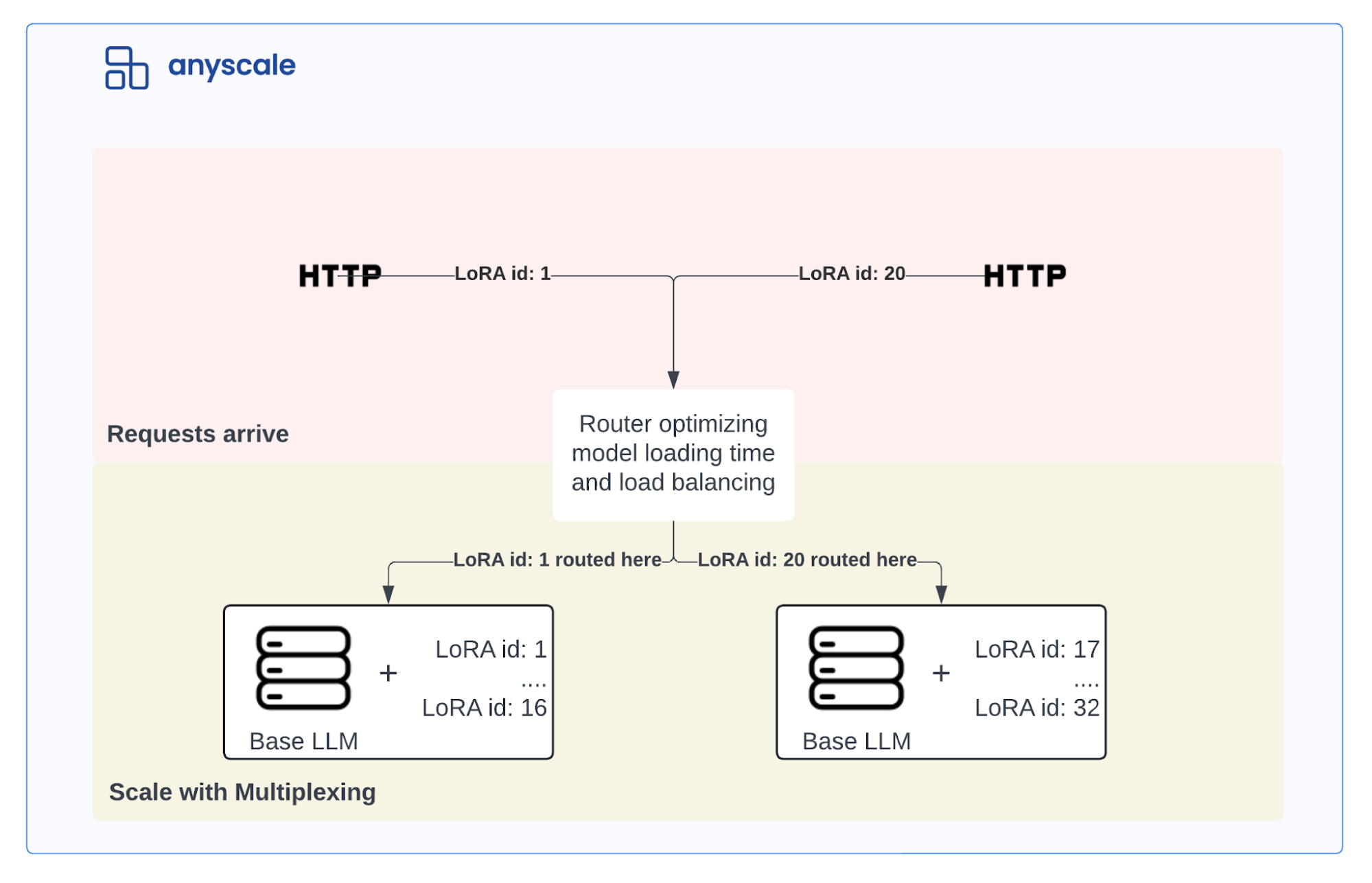

The dynamic_lora_loading_path is a “directory” that contains all the LoRAs that you want to multiplex on the same base model. This approach allows you to load the LoRA adapter weights dynamically, which is significantly more efficient than loading each fine-tune as a full parameter model.

As long as all the LoRA models are stored in the same location specified by dynamic_lora_loading_path, you can serve multiple LoRA models using a single ray-llm endpoint. ray-llm will efficiently multiplex the models for you, avoiding costly LoRA loading operations by routing each request to the correct model based on the request headers.
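To illustrate why routing matters for multiplexing, here is a toy model of a single replica that can keep only a limited number of LoRA adapters loaded at once. This is not how ray-llm is implemented. It is a generic least-recently-used cache, and the loader function is a hypothetical stand-in for fetching adapter weights from the dynamic_lora_loading_path.

```python
from collections import OrderedDict


class AdapterCache:
    """Toy LRU cache standing in for the adapters loaded on one replica."""

    def __init__(self, capacity, loader):
        if capacity < 1:
            raise ValueError("capacity must be at least 1")
        self.capacity = capacity
        self.loader = loader
        self.loaded = OrderedDict()  # adapter_id -> weights, oldest first
        self.load_count = 0  # number of (costly) load operations performed

    def get(self, adapter_id):
        if adapter_id in self.loaded:
            self.loaded.move_to_end(adapter_id)  # mark as most recently used
            return self.loaded[adapter_id]
        if len(self.loaded) >= self.capacity:
            self.loaded.popitem(last=False)  # evict the least recently used
        self.load_count += 1
        self.loaded[adapter_id] = self.loader(adapter_id)
        return self.loaded[adapter_id]


def fake_loader(adapter_id):
    # Hypothetical stand-in for downloading adapter weights from storage.
    return f"weights-for-{adapter_id}"


if __name__ == "__main__":
    cache = AdapterCache(capacity=2, loader=fake_loader)
    for adapter_id in ["a", "b", "a", "c", "a", "b"]:
        cache.get(adapter_id)
    print(f"requests: 6, adapter loads: {cache.load_count}")
```

Requests that hit an already loaded adapter skip the load step entirely, which is the effect that sending requests for the same adapter to the same replica is meant to achieve.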

Below is a diagram visualizing multiple LoRA models being served using ray-llm:

Conclusion

By following the instructions in this post, you should now have an understanding of:

How to fine-tune large language models using Anyscale’s llm-forge library

How to serve fine-tuned models using Anyscale’s ray-llm library

To proceed with fine-tuning your custom models, you should:

Create an account on the Anyscale platform and get access to free compute credits

Finally, if you want an end-to-end workflow for LLMs that you can adopt, check out our detailed guide here