Cloud Infrastructure for LLM and Generative AI Applications

By Yifei Feng, Sriram Sankar, Siddharth Venkatesh and Ameer Haj Ali | September 14, 2023

Update June 2024: Anyscale Endpoints (Anyscale's LLM API Offering) and Private Endpoints (self-hosted LLMs) are now available as part of the Anyscale Platform.

We dive into how the Anyscale platform has evolved to accelerate GenAI and LLM app development, and into what powers our Anyscale LLM Endpoints offering. Discover how we've drastically accelerated startup times, optimized compute availability, enhanced security and access controls, reduced costs, improved spot instance support, provided fast, scalable storage solutions, and more!

Summary

We recently introduced Anyscale Endpoints to help developers integrate fast, cost-efficient, and scalable LLM APIs. In this blog post, we discuss the cloud infrastructure that powers the entire lifecycle of GenAI and LLM application development and deployment.

With Anyscale, organizations can focus entirely on developing LLM and GenAI applications. By eliminating the need to manage infrastructure (e.g., VMs, networking, and storage), we empower teams to concentrate on innovation and delivery. While we simplify the process for most organizations, we also offer advanced controls for those who require them. This flexibility sets Anyscale apart from many other application platforms.

Instance startup times are a major bottleneck for real-time Generative AI and LLM applications, impacting development and deployment efficiency. Through a series of optimizations, we achieved a 4-5x improvement in cluster startup time, enhancing overall productivity and accelerating time to value for businesses.

Anyscale's cloud platform can now optimize compute availability by intelligently identifying where suitable capacity exists across clouds, regions, zones, and instance types, which matters most for GPU-intensive GenAI and LLM workloads.

GenAI and LLM applications today struggle to balance cost and reliability. Anyscale's approach ensures that we fall back to the best available instance. One specific mechanism, which combines On-Demand-to-Spot and Spot-to-On-Demand fallback, strikes the best balance between service availability and cost efficiency.

Stay tuned and join us at Ray Summit to hear more about what we are building!

At Anyscale, one of our primary objectives is to offer seamless infrastructure that supports the entire lifecycle of GenAI and LLM application development and deployment. This spans containerization, efficient scaling, cluster orchestration, and security, as well as making full use of the GPU availability and hardware capabilities offered by various cloud providers. Our platform handles the complexities of the underlying infrastructure, removing bottlenecks and accelerating your time to market, so that teams can concentrate on innovation and delivery. The same infrastructure also powers Anyscale Endpoints, our fast, cost-efficient, and scalable LLM APIs. While we streamline the process for most users, we also offer advanced controls for organizations that require them. This combination of ease of use and deep customizability distinguishes Anyscale from other platforms.

In this blog post, we dive into the work we have been doing to achieve these goals.

Faster Instance Startup Time: A step change in performance for GenAI and LLM applications

Instance startup times are crucial for Generative AI and large language model (LLM) applications. The time it takes to initialize these models can bottleneck their use in real-time applications. Faster startup enables rapid autoscaling and quicker reaction to changes in traffic. Combined with downscaling, this lets us keep the cluster minimally sized for the current load, reducing costs while still providing a reliable inference service. Beyond serving, faster startup also accelerates iterative model development, considerably shortening time to market.
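To make the link between startup time and cluster size concrete, here is a deliberately simplified model (our assumption, not Anyscale's autoscaler): if load grows linearly, the autoscaler must keep enough idle replicas to absorb the growth that happens while new replicas are still starting. The function name and all numbers are hypothetical and purely illustrative.

```python
import math


def headroom_replicas(traffic_ramp_rps_per_s: float,
                      replica_capacity_rps: float,
                      startup_s: float) -> int:
    """Spare replicas needed so a linear traffic ramp is absorbed
    while newly requested replicas are still starting up.

    During startup_s seconds, load grows by traffic_ramp_rps_per_s * startup_s
    requests/s; that growth must already be covered by idle capacity.
    """
    extra_load = traffic_ramp_rps_per_s * startup_s
    return math.ceil(extra_load / replica_capacity_rps)


if __name__ == "__main__":
    # Illustrative numbers only: load grows by 10 req/s every second,
    # and one replica serves 5 req/s.
    for startup in (300.0, 60.0):
        spare = headroom_replicas(10, 5, startup)
        print(f"startup {startup:>5.0f}s -> keep {spare} spare replicas")
```

In this toy model, spare capacity scales linearly with startup time, so a 5x faster startup needs about 5x fewer warm replicas for the same traffic ramp.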

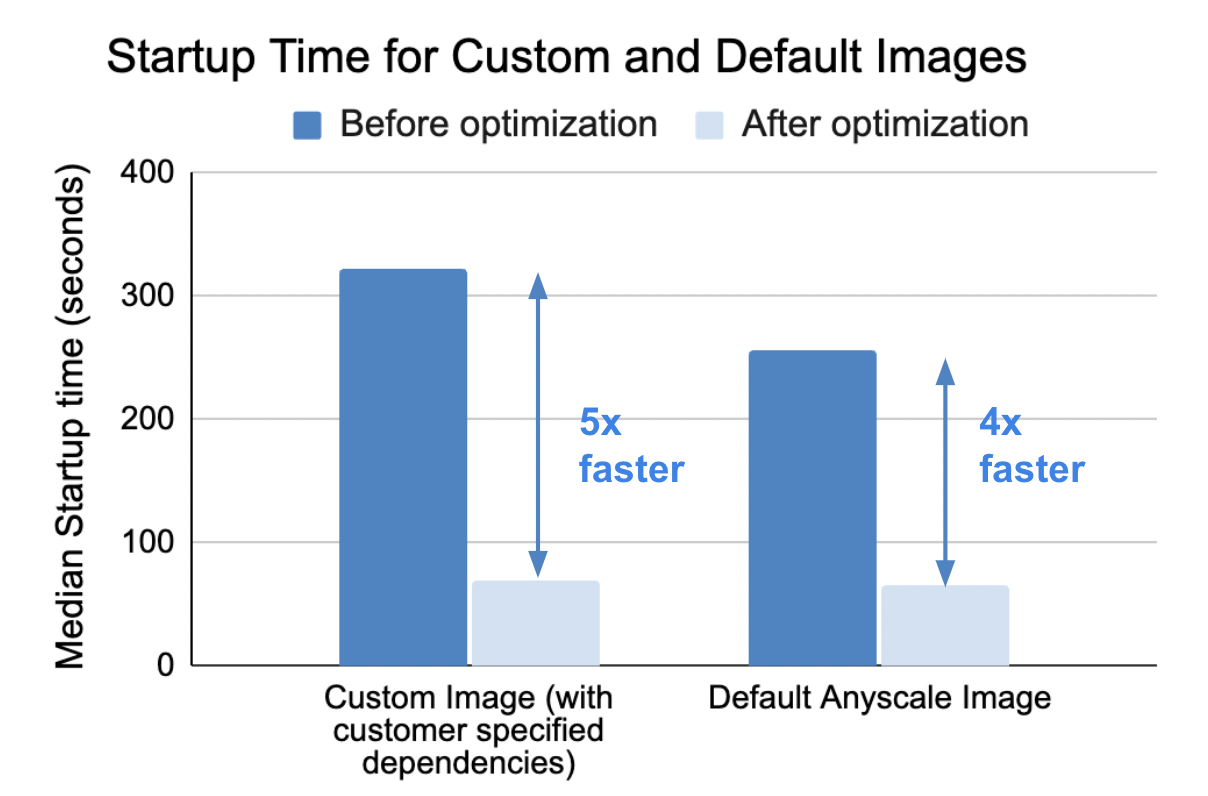

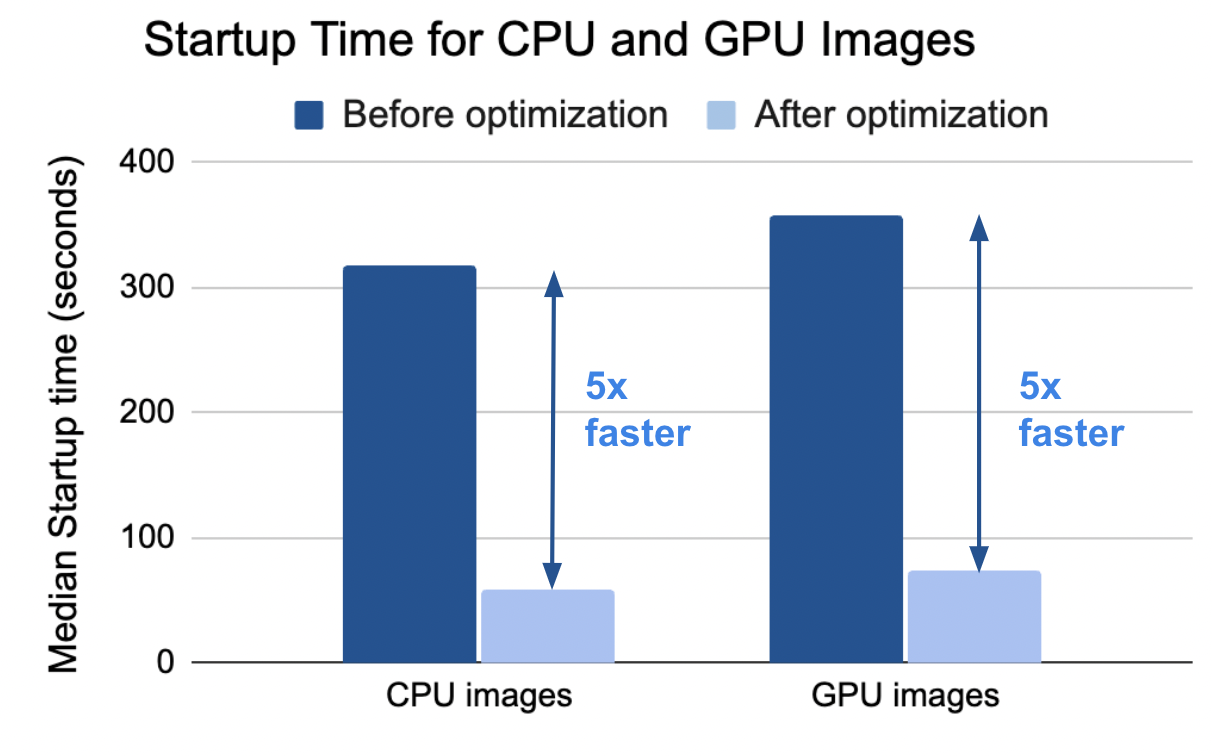

In our experience of collaborating with many customers in developing and deploying large-scale workloads, we have consistently identified slow instance startup time as a major challenge. Addressing this issue has been a focus of the team. During our investigation, we identified container image download and extraction durations as the primary contributors to long instance startup times. This is particularly noticeable when dealing with large GPU images for Generative AI and LLM applications. To address this, we optimized our image snapshot format and switched away from using the Docker runtime as the container runtime. We also developed our own container snapshotter. These customized changes allowed us to fully utilize the network bandwidth available to the machines.
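The sketch below is not Anyscale's snapshotter. It is a generic Python illustration, assuming image layers arrive as gzipped tarballs (the common OCI layer format), of two ideas that help a puller use the available bandwidth: unpacking each layer while it is still downloading, and fetching several layers in parallel. The helpers `stream_extract`, `pull_layers`, and `make_layer` are hypothetical.

```python
import io
import tarfile
import tempfile
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path
from typing import BinaryIO, Callable, List, Sequence

# Use the safe "data" extraction filter where this Python version provides it.
_EXTRACT_KW = {"filter": "data"} if hasattr(tarfile, "data_filter") else {}


def stream_extract(stream: BinaryIO, dest: Path) -> List[str]:
    """Unpack a gzipped tar layer while it is still being read.

    Mode "r|gz" treats the input as a forward-only stream, so the first files
    can be written before the rest of the layer has arrived.
    """
    names = []
    with tarfile.open(fileobj=stream, mode="r|gz") as tar:
        for member in tar:
            tar.extract(member, path=dest, **_EXTRACT_KW)
            names.append(member.name)
    return names


def pull_layers(fetchers: Sequence[Callable[[], BinaryIO]], dest: Path,
                workers: int = 4) -> List[List[str]]:
    """Download and unpack layers concurrently, one directory per layer.

    Each fetcher returns a readable stream for one layer (for example an HTTP
    response); running several at once keeps more of the network link busy.
    """
    def work(item):
        index, fetch = item
        layer_dir = dest / f"layer{index}"
        layer_dir.mkdir(parents=True, exist_ok=True)
        with fetch() as stream:
            return stream_extract(stream, layer_dir)

    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(work, enumerate(fetchers)))


def make_layer(files: dict) -> bytes:
    """Build an in-memory .tar.gz layer for the demo below."""
    buf = io.BytesIO()
    with tarfile.open(fileobj=buf, mode="w:gz") as tar:
        for name, data in files.items():
            info = tarfile.TarInfo(name)
            info.size = len(data)
            tar.addfile(info, io.BytesIO(data))
    return buf.getvalue()


if __name__ == "__main__":
    layers = [make_layer({"bin/app": b"#!/bin/sh\n"}),
              make_layer({"lib/a.so": b"aa", "lib/b.so": b"bb"})]
    with tempfile.TemporaryDirectory() as tmp:
        # io.BytesIO stands in for a network stream here.
        print(pull_layers([lambda b=b: io.BytesIO(b) for b in layers], Path(tmp)))
```

A real runtime would then combine the per-layer directories, for example as overlayfs lower directories; that step is omitted here.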

By benchmarking against a typical customer workload, we observed an average 4-5x improvement in cluster startup time. The improvement was consistent across cloud providers, regions, CPU and GPU images, and both custom and default images. Custom images include user-specified Docker images, which enable private dependency and package builds and integration with your organization's CI/CD pipelines for effective environment management.

Figure 1: Consistent improvement across custom and default images

Figure 2: Consistent improvement across CPU and GPU images

Beyond instance start and model pulling times, our team also optimized model loading to achieve a fast end-to-end application cold startup time. Stay tuned for an upcoming blog post where we will delve deeper into the technical aspects of these enhancements.

Instance Availability: Finding and utilizing the optimal compute anywhere

Compute capacity in the cloud can be hard to find, especially for GenAI and LLM applications, which often require specialized GPU instances. Anyscale allows you to automatically:

- launch instances across multiple availability zones;
- select the best instance types for your workload based on availability, cost, and performance; and
- maximize utilization of spot instances.

This allows you to focus on developing your applications and not worry about the infrastructure.

Let’s discuss each of these in more detail.

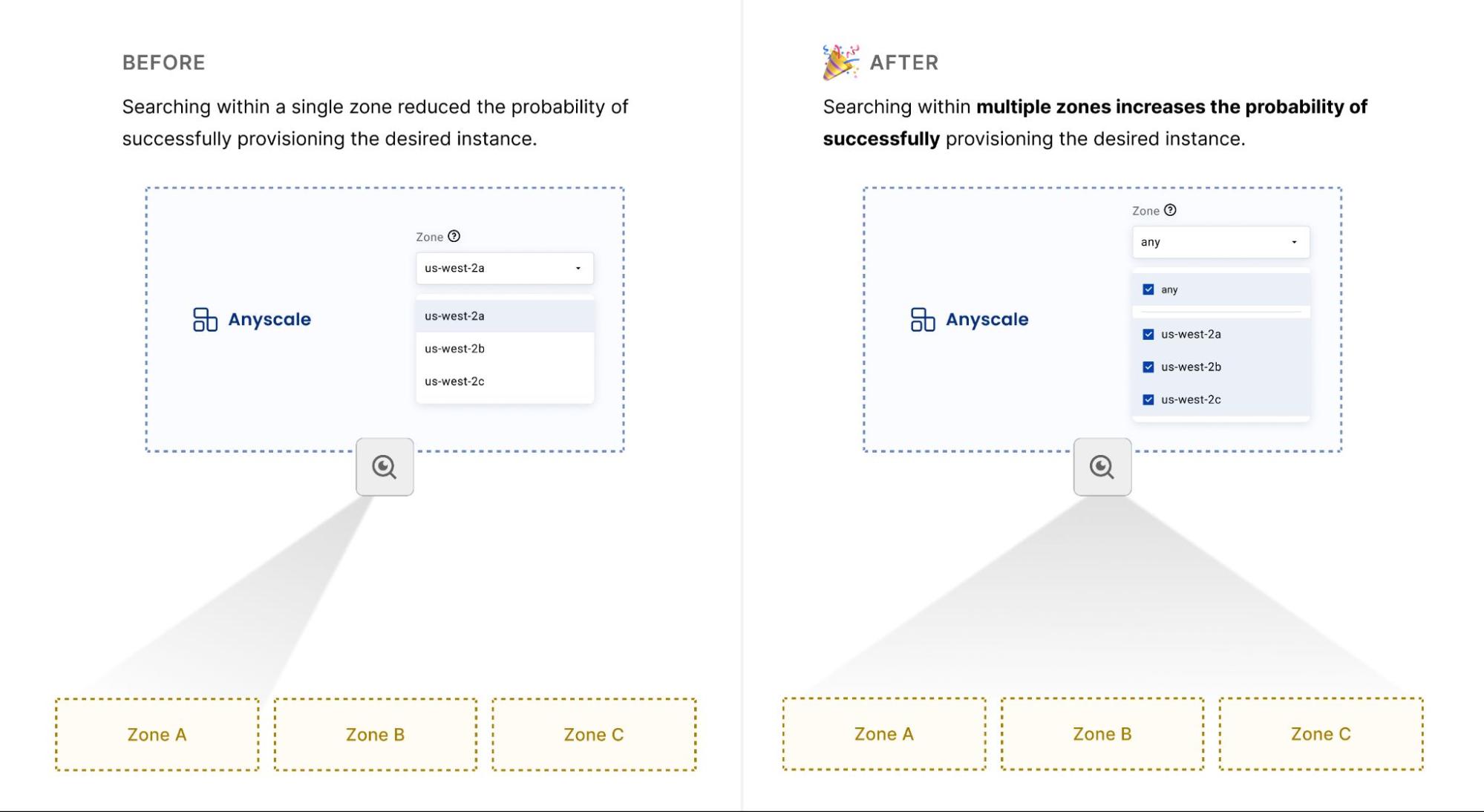

To launch instances across multiple availability zones, users can set the availability zone to “any” in the Anyscale console (see figure above). We use various internal metrics to identify the zone most likely to satisfy users’ compute requirements, allocate instances preferentially in that zone, and spill over to other zones if needed to run the workload. This increases the likelihood of successfully provisioning the instance types best suited to a customer’s workload, at a lower price. For example, we will choose the availability zone that is most likely to have spot availability for the instance type the user prefers.
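A minimal sketch of zone ranking with spillover, assuming a hypothetical per-zone metric (`spot_success_rate`) in place of Anyscale's internal metrics, which the post does not describe. `try_launch` stands in for a cloud provider launch call and reports how many instances a zone actually granted; zone names and numbers are placeholders.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Sequence


@dataclass(frozen=True)
class ZoneSignal:
    zone: str
    spot_success_rate: float  # hypothetical metric: recent share of spot requests fulfilled
    spot_price_per_hour: float


def rank_zones(signals: Sequence[ZoneSignal]) -> List[str]:
    """Zones most likely to have capacity come first; the cheaper zone wins a tie."""
    ordered = sorted(signals, key=lambda s: (-s.spot_success_rate, s.spot_price_per_hour))
    return [s.zone for s in ordered]


def launch_with_spillover(count: int, signals: Sequence[ZoneSignal],
                          try_launch: Callable[[str, int], int]) -> Dict[str, int]:
    """Fill the best-ranked zone first and spill any shortfall into the next ones.

    try_launch(zone, n) asks a zone for n instances and returns how many it granted.
    """
    placed: Dict[str, int] = {}
    remaining = count
    for zone in rank_zones(signals):
        if remaining == 0:
            break
        granted = try_launch(zone, remaining)
        if granted > 0:
            placed[zone] = granted
            remaining -= granted
    if remaining:
        raise RuntimeError(f"{remaining} instance(s) could not be placed in any zone")
    return placed


if __name__ == "__main__":
    signals = [ZoneSignal("zone-a", 0.9, 1.00),
               ZoneSignal("zone-b", 0.5, 0.80),
               ZoneSignal("zone-c", 0.9, 0.95)]
    free = {"zone-a": 1, "zone-b": 5, "zone-c": 2}  # simulated spare capacity per zone
    print(launch_with_spillover(5, signals, lambda z, n: min(n, free[z])))
```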

As the GPU shortage becomes increasingly prominent for Gen AI and LLM applications, we are continually improving our infrastructure to leverage available compute across not only availability zones, but also various regions and different cloud providers.

Besides choosing availability zones, regions, and cloud providers, many users also face the challenge of choosing the best instance types for their workloads. Some users want more control over which instances are selected, while others prefer Anyscale to select instances for them automatically. We developed a smart instance manager that satisfies both needs: users can let us choose instances intelligently based on our past benchmark data, the workload, current availability, and cost, or they can specify instance preferences and Anyscale will determine and launch instances based on those specifications.
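Both selection modes can be sketched as one function. This is an illustration under assumptions, not Anyscale's instance manager: `InstanceOption`, its `benchmark_throughput` score, and the throughput-per-dollar ranking rule are all hypothetical, and the instance names and prices in the demo are placeholders rather than real instance types.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class InstanceOption:
    name: str
    gpus: int
    available: bool
    price_per_hour: float
    benchmark_throughput: float  # hypothetical score from past benchmarks of this workload


def choose_instance(options: Sequence[InstanceOption], min_gpus: int = 1,
                    preferred: Optional[Sequence[str]] = None) -> Optional[InstanceOption]:
    """Pick an instance type from user preferences, or automatically if none are given."""
    candidates = [o for o in options if o.available and o.gpus >= min_gpus]
    if preferred:
        # User-specified mode: the first preference that can actually be launched.
        by_name = {o.name: o for o in candidates}
        return next((by_name[n] for n in preferred if n in by_name), None)
    # Automatic mode: best benchmarked throughput per dollar among available types.
    return max(candidates,
               key=lambda o: o.benchmark_throughput / o.price_per_hour,
               default=None)


if __name__ == "__main__":
    catalog = [
        InstanceOption("gpu-small", 1, True, 1.0, 100.0),
        InstanceOption("gpu-medium", 4, True, 5.0, 600.0),
        InstanceOption("gpu-large", 8, False, 10.0, 2000.0),
    ]
    print(choose_instance(catalog))  # automatic choice
    print(choose_instance(catalog, preferred=["gpu-large", "gpu-small"]))  # user preferences
```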

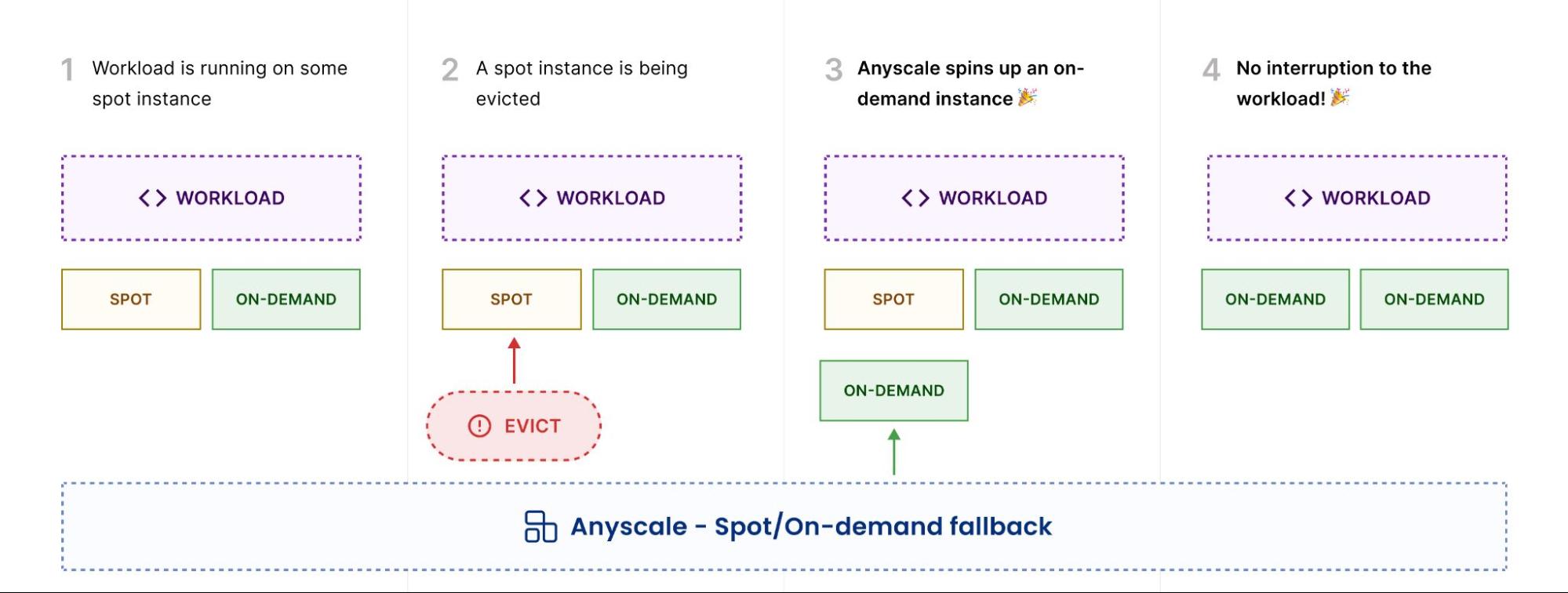

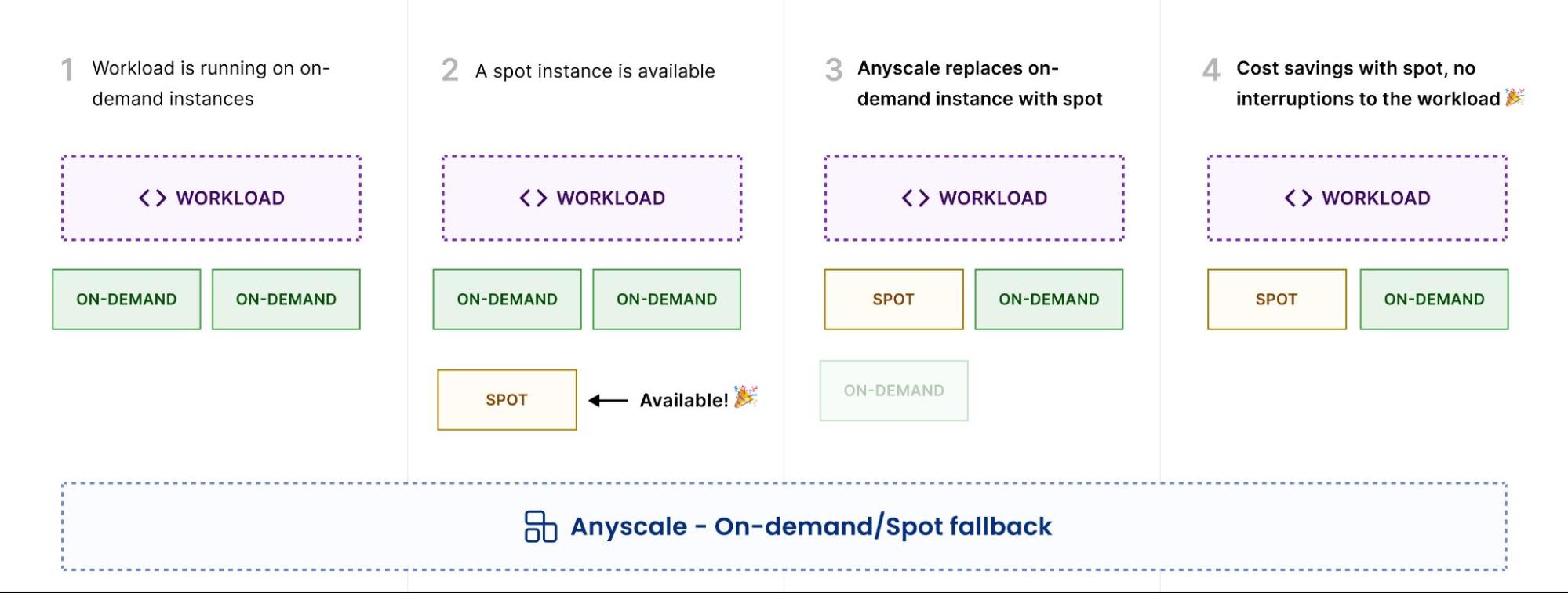

GenAI and LLM applications today struggle to balance cost and reliability, and spot instances are another way to reduce cost. Although they offer deeply discounted pricing, spot instances can be evicted, which can compromise the reliability of long-running applications. Anyscale’s Spot/On-Demand and On-Demand/Spot fallback features, together with the fault tolerance provided by Ray, allow users to maximize spot cost savings without losing reliability.

Spot/On-Demand fallback means that when there is no spot capacity, or a spot instance is being evicted, Anyscale spins up an on-demand instance to ensure availability and avoid interrupting your workload.

On-Demand/Spot fallback covers the opposite scenario: when spot capacity becomes available again while a user has an on-demand instance running, Anyscale replaces the on-demand node with a spot node to save on costs.
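The two fallback directions can be written as a small decision function. This is a hypothetical policy that mirrors the behavior described above, not Anyscale's implementation; `Market` and `desired_market` are illustrative names.

```python
from enum import Enum
from typing import Optional


class Market(Enum):
    SPOT = "spot"
    ON_DEMAND = "on_demand"


def desired_market(current: Optional[Market], spot_available: bool,
                   eviction_notice: bool = False) -> Market:
    """Decide which market a worker node should run on.

    current is None for a node that has not been launched yet.
    """
    if current is None:
        # New node: prefer spot, fall back to on-demand when no spot capacity exists.
        return Market.SPOT if spot_available else Market.ON_DEMAND
    if current is Market.SPOT and eviction_notice:
        # Spot -> On-Demand fallback: replace a node that is being reclaimed.
        return Market.ON_DEMAND
    if current is Market.ON_DEMAND and spot_available:
        # On-Demand -> Spot fallback: move back to cheaper capacity once it returns.
        return Market.SPOT
    return current


if __name__ == "__main__":
    node = desired_market(None, spot_available=False)  # no spot capacity at launch
    node = desired_market(node, spot_available=True)  # spot capacity returns
    node = desired_market(node, spot_available=True, eviction_notice=True)  # spot node reclaimed
    print(node)
```

A production controller would presumably start the replacement node before retiring the old one, and would rely on Ray's fault tolerance to reschedule work from an evicted spot node.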

By intelligently launching instances across multiple availability zones, selecting the most suitable instance types based on availability and cost, and maximizing the utilization of spot instances, Anyscale finds and leverages the cheapest available compute suitable for users’ workload. With Anyscale, our customers can focus on developing their GenAI and LLM applications without worrying about infrastructure in today's GPU-scarce environment.

Private IPs and Cloud Security: Enabling Data Privacy for GenAI and LLM applications

Data privacy is a primary concern when deploying LLM applications, since they often process business-critical or sensitive user data. Our platform empowers enterprises to deploy their technology stack either within their own Virtual Private Cloud (VPC) or on the public internet. This flexibility allows customers to make the best choice based on their specific needs and regulatory requirements.

The Customer Defined Networking deployment option is specifically designed for scenarios where privacy and security considerations prevent organizations from using public providers. Our architecture separates the control plane from the data plane, providing an additional layer of security. In this mode, Ray clusters are deployed in private subnets and accessed over private IP addresses.
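As an illustration of what private-subnet deployment means in practice, here is a hypothetical pre-flight check you might run in your own tooling: it flags any node address that is public or lies outside the subnets the cluster is supposed to use. The function name and the example CIDR are assumptions; the check uses only Python's standard `ipaddress` module.

```python
import ipaddress
from typing import Iterable, List


def addresses_outside_private_subnets(addresses: Iterable[str],
                                      subnets: Iterable[str]) -> List[str]:
    """Return node addresses that are public or fall outside the allowed subnets."""
    networks = [ipaddress.ip_network(cidr) for cidr in subnets]
    flagged = []
    for addr in addresses:
        ip = ipaddress.ip_address(addr)
        # A node must have a private address AND sit inside one of the cluster's subnets.
        if not ip.is_private or not any(ip in net for net in networks):
            flagged.append(addr)
    return flagged


if __name__ == "__main__":
    # Example private subnet for the cluster (an assumption for illustration).
    print(addresses_outside_private_subnets(
        ["10.0.1.5", "10.0.2.7", "54.12.3.4"], ["10.0.1.0/24"]))
```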

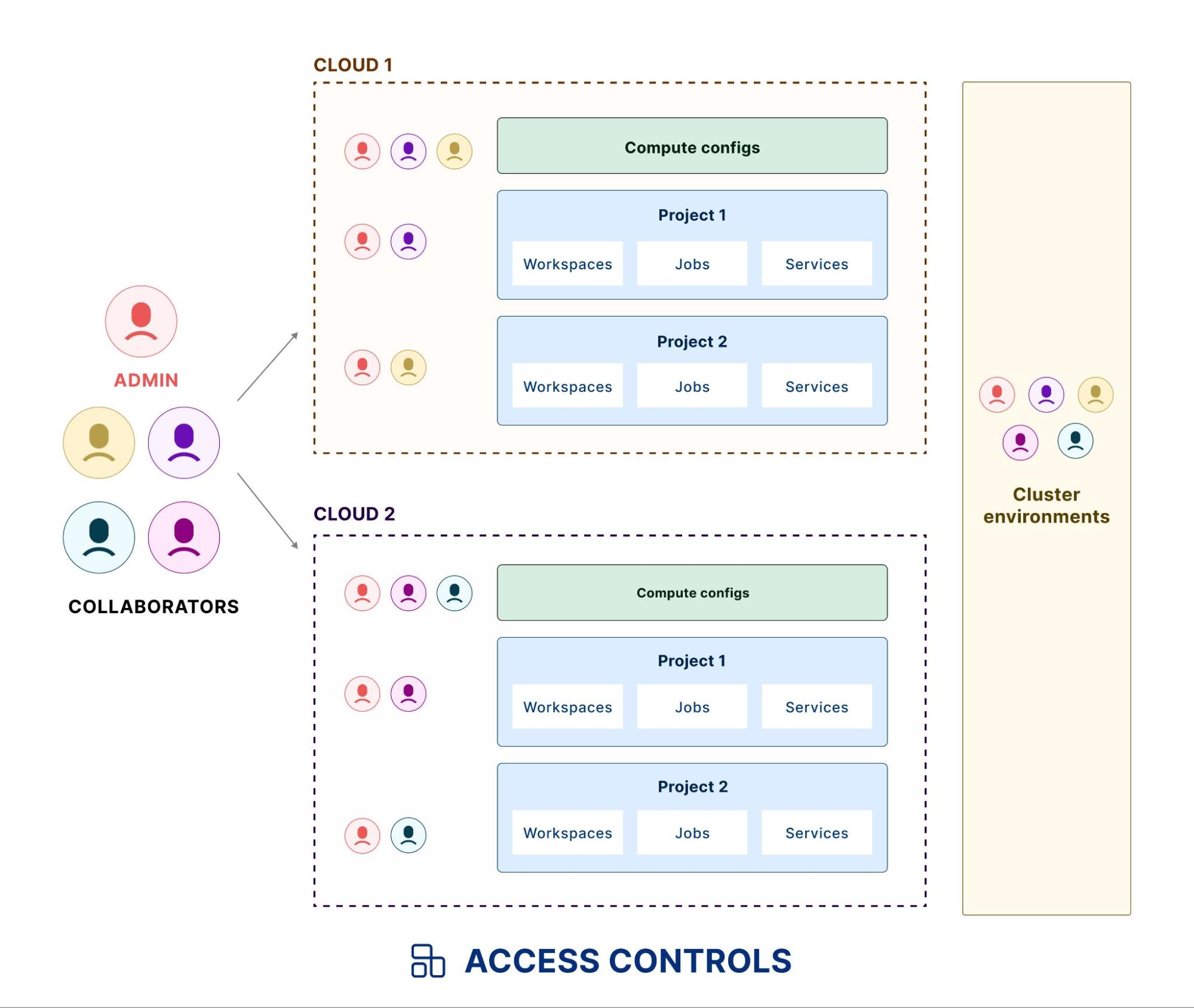

In addition, Anyscale now provides finer-grained access controls on clouds and cloud-associated resources. Administrators can specify which users have access to which environments (e.g., development and production), which gives organizations peace of mind: end users can’t accidentally perform actions in environments they’re not allowed to access.
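A minimal sketch of the kind of per-environment check described above. `CloudAccessPolicy` is a hypothetical in-memory model, not Anyscale's permission system or API; it only shows the idea of granting users access to specific clouds and refusing actions everywhere else.

```python
from collections import defaultdict
from typing import DefaultDict, Set


class CloudAccessPolicy:
    """Hypothetical per-cloud allow list: which users may act in which environment."""

    def __init__(self) -> None:
        self._members: DefaultDict[str, Set[str]] = defaultdict(set)

    def grant(self, user: str, cloud: str) -> None:
        self._members[cloud].add(user)

    def revoke(self, user: str, cloud: str) -> None:
        self._members[cloud].discard(user)

    def can_access(self, user: str, cloud: str) -> bool:
        # .get avoids creating an empty entry for unknown clouds.
        return user in self._members.get(cloud, set())

    def require(self, user: str, cloud: str) -> None:
        """Call before any action (e.g. launching a cluster) in `cloud`."""
        if not self.can_access(user, cloud):
            raise PermissionError(f"{user} has no access to cloud {cloud!r}")


if __name__ == "__main__":
    policy = CloudAccessPolicy()
    policy.grant("alice", "prod")
    policy.grant("bob", "dev")
    policy.require("alice", "prod")  # allowed, returns silently
    try:
        policy.require("bob", "prod")
    except PermissionError as err:
        print(err)
```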

Cloud Storage: Fast and scalable storage for development and production

LLM and GenAI applications place high demands on storage for data and model checkpoints. The Anyscale platform makes it easy to strike the right balance between flexibility, cost, and performance for your storage needs. We provide out-of-the-box integration with managed NFS cloud storage, which makes development more productive. NFS allows dependencies to be installed quickly and directly into cluster storage, making them immediately available across the entire cluster. With centralized storage, multiple team members can easily access shared resources such as data or models, which streamlines collaboration. For production scenarios, we automatically configure object storage (e.g., S3) permissions for your clusters, enabling easy access to scalable and cost-effective storage.

Anyscale also supports Non-Volatile Memory Express (NVMe), a high-performance interface for accessing SSD storage volumes. NVMe significantly speeds up data access for caching and storing large models or datasets. By using NVMe for object spilling, you can offload data from costly RAM to SSDs. This not only brings down costs but can also improve performance compared to traditional options like EBS. In our internal benchmarking on a read-heavy workload, we observed up to 2 GB/s on an m5d.8xlarge with 2 NVMe devices and up to 2.7 GB/s on an r6id.24xlarge with 4 NVMe devices. More details are available in the Anyscale documentation.
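Ray configures object spilling through the `object_spilling_config` system setting, and its `filesystem` spilling type accepts a list of directories so that spill files are spread across several physical devices. The sketch below builds that config for a set of local NVMe mounts; the mount paths and helper names are assumptions, and on Anyscale the platform may already configure this for you.

```python
import json
from typing import Sequence


def nvme_spill_config(mount_points: Sequence[str]) -> str:
    """Build Ray's object_spilling_config JSON, spreading spill files across
    several local NVMe mounts so that all devices share the I/O load."""
    dirs = [f"{m.rstrip('/')}/ray_spill" for m in mount_points]
    return json.dumps({"type": "filesystem", "params": {"directory_path": dirs}})


def start_ray_with_nvme_spilling(mount_points: Sequence[str]) -> None:
    """Start a new local Ray instance that spills objects to the given NVMe mounts."""
    import ray  # imported lazily so the config helper works without Ray installed

    ray.init(_system_config={"object_spilling_config": nvme_spill_config(mount_points)})
```

For example, `start_ray_with_nvme_spilling(["/mnt/nvme0", "/mnt/nvme1"])` would spill to two devices mounted at those (hypothetical) paths. Passing `_system_config` to `ray.init` only takes effect when that call starts a new local Ray instance; for multi-node clusters, see Ray's object-spilling documentation.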

Conclusion

Anyscale is committed to providing seamless, efficient, and secure infrastructure for GenAI and LLM application development and deployment. We have made significant progress in improving instance startup times, optimizing compute availability, enabling security and access controls, and providing fast, scalable storage solutions. Our platform handles the complexities of the underlying infrastructure, and by eliminating the need to manage it, we enable teams to focus on innovation and delivery. Anyscale’s offering is unique in its flexibility: in addition to simplifying processes that benefit most organizations, we also offer advanced controls for those who require them. We are continually improving our platform to meet the evolving needs of our customers, and we are excited about the future of GenAI and LLM applications.

Stay tuned and join us at Ray Summit to hear more about what we are building.