Batch LLM Inference on Anyscale slashes AWS Bedrock costs by up to 6x

Intro

LLMs have taken the technology industry by storm, and sky-high GPU prices have put a lot of focus on optimizing inference costs. However, most of that effort has targeted online inference for latency-sensitive, 24/7 workloads.

While online inference provides low-latency, real-time responses, batch inference for LLMs offers higher throughput and greater cost-effectiveness by maximizing GPU utilization. For tasks that do not require immediate interaction, batch (offline) inference therefore enables a more efficient and economical use of LLMs.

In certain cases, Anyscale reduces costs by up to 2.9x compared to online inference providers such as AWS Bedrock and OpenAI, and by up to 6x in shared-prefix scenarios, by optimizing batch inference for throughput and cost rather than latency and availability.

What are the Core Differences Between Online and Batch Inference?

It is common to see online inference solutions repurposed for offline batch workloads. However, there are some key differences between these two use cases.

Online inference is typically configured for low latency, while batch inference is typically configured for maximum overall token throughput.

As a result, the configuration space looks quite different for the two:

Online deployments often use techniques like speculative decoding and high degrees of tensor parallelism, which give up some throughput in exchange for lower latency. Batch inference configurations, by contrast, use techniques like pipeline parallelism and KV cache offloading to maximize throughput.

Online inference is often deployed on high-end hardware (H100s, A100s) to meet SLAs, while batch inference prioritizes throughput-per-dollar, achievable with more cost-effective and accessible GPUs (A10G, L4, L40S).

Online deployments also incur overhead associated with HTTP and request handling, which can be removed in batch settings.

Why LLM Batch Inference on Ray & Anyscale?

For self-hosted inference, Ray and Anyscale offer unique advantages when it comes to optimizing batch LLM inference. LLM Batch Inference on Anyscale will use key components of our stack: our proprietary inference engine, Ray Data, Ray Core, and the Anyscale Platform.

For inference, we use a proprietary engine optimized for LLM inference.

This engine is based on vLLM, a popular open-source LLM inference engine.

As strong proponents of OSS infrastructure, we have contributed many of our performance improvements back to open-source vLLM, such as FP8 support, chunked prefill, and speculative decoding.

Ray Core and Ray Data are built for data-intensive AI workloads and provide a unique set of features compared to other batch systems.

Ray Core’s flexible distributed primitives (tasks, actors) allow Ray users to express nested parallelism, which makes different distributed libraries easy to compose; in this case, vLLM and Ray Data.

Ray Data has support for Python async primitives for request pipelining, which enables high GPU utilization.

Ray Data also leverages Ray Core’s built-in fault tolerance, which makes it practical to run on cheaper spot instances.
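Ray Data's internals are not shown in this post. The general idea behind async request pipelining can still be sketched with plain `asyncio`: keep a bounded number of requests in flight so the engine always has queued work while earlier requests are still decoding. In this minimal sketch, `fake_generate` is a hypothetical stand-in for an async engine call. `max_in_flight` is an illustrative knob, not a Ray Data parameter.

```python
import asyncio
import random


async def fake_generate(prompt: str) -> str:
    # Hypothetical stand-in for an async LLM engine call; the sleep mimics decode time.
    await asyncio.sleep(random.uniform(0.01, 0.03))
    return prompt.upper()


async def pipelined_generate(prompts, max_in_flight=8):
    """Keep up to max_in_flight requests outstanding so the engine never sits idle."""
    sem = asyncio.Semaphore(max_in_flight)
    in_flight = 0
    peak = 0

    async def run_one(prompt):
        nonlocal in_flight, peak
        async with sem:  # a new request starts as soon as a slot frees up
            in_flight += 1
            peak = max(peak, in_flight)
            try:
                return await fake_generate(prompt)
            finally:
                in_flight -= 1

    # gather returns results in input order even though requests finish out of order
    results = await asyncio.gather(*(run_one(p) for p in prompts))
    return results, peak


if __name__ == "__main__":
    outputs, peak = asyncio.run(pipelined_generate([f"prompt {i}" for i in range(32)]))
    print(len(outputs), "results, peak concurrency", peak)
```

The semaphore bounds concurrency rather than sending one fixed batch at a time. A slow request therefore never stalls the requests queued behind it, which is the property that keeps GPU utilization high.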

The Anyscale Platform is a fully managed Ray solution, tailored to provide a great user experience and a cost-effective way to run AI applications on Ray.

Anyscale’s Smart Instance Manager enables cross-zone instance searching and flexible compute configuration, leading to cost-effective hardware utilization.

Anyscale provides a library for accelerated model loading for quick startup of large model replicas, crucial for autoscaling and handling unreliable compute environments.

We’ve also created a new library, RayLLM-Batch, targeted at optimizing this stack for LLM batch inference workloads.

Introducing RayLLM-Batch

RayLLM-Batch is a library leveraging Ray and Anyscale components to optimize LLM batch inference at scale:

Simplified API: Abstracts the complexity of the underlying stack, allowing easy expression and execution of user-provided workloads on a batch inference cluster.

Optimized Inference Engine: Incorporates proprietary performance features while maintaining close alignment with the open-source repository.

Fault Tolerance: Introduces job-level checkpointing and restoring for improved reliability in large inference jobs.

Batch LLM Inference Optimizer: Tunes and configures the underlying stack to reduce costs based on workload characteristics and available hardware.
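The post does not describe how RayLLM-Batch implements checkpointing. As a minimal sketch of the general job-level checkpoint-and-restore idea, a job can record each finished shard and skip it on restart. The sketch assumes the input is pre-split into named shards and outputs are written to local files. `run_job`, the shard layout, and the JSON checkpoint file are all hypothetical.

```python
import json
import os
import tempfile
from pathlib import Path


def load_done(ckpt: Path) -> set:
    # Shard IDs completed by a previous (possibly interrupted) run.
    if ckpt.exists():
        return set(json.loads(ckpt.read_text()))
    return set()


def save_done(ckpt: Path, done: set) -> None:
    # Write to a temp file, then atomically rename, so a crash never leaves a torn checkpoint.
    tmp = ckpt.with_suffix(".tmp")
    tmp.write_text(json.dumps(sorted(done)))
    os.replace(tmp, ckpt)


def run_job(shards: dict, process, ckpt: Path, out_dir: Path) -> list:
    """Process each shard once; on restart, skip shards already recorded in ckpt."""
    done = load_done(ckpt)
    processed_now = []
    for shard_id, rows in shards.items():
        if shard_id in done:
            continue  # finished before the failure; don't pay for it twice
        results = [process(r) for r in rows]
        (out_dir / f"{shard_id}.json").write_text(json.dumps(results))
        done.add(shard_id)
        save_done(ckpt, done)  # record progress only after the output is persisted
        processed_now.append(shard_id)
    return processed_now


if __name__ == "__main__":
    work = Path(tempfile.mkdtemp())
    shards = {"s0": [1, 2], "s1": [3, 4], "s2": [5, 6]}
    attempts = {"count": 0}

    def flaky_double(x):
        # Simulate a spot-instance preemption the first time shard s2 is processed.
        if x == 5 and attempts["count"] == 0:
            attempts["count"] += 1
            raise RuntimeError("preempted")
        return x * 2

    try:
        run_job(shards, flaky_double, work / "ckpt.json", work)
    except RuntimeError:
        print("failed after", sorted(load_done(work / "ckpt.json")))
    print("resumed and processed", run_job(shards, flaky_double, work / "ckpt.json", work))
```

A shard is marked done only after its output file exists, so a preemption mid-shard at worst redoes that one shard.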

By combining these elements, RayLLM-Batch offers a powerful, cost-effective solution for large-scale batch LLM inference, addressing the unique challenges and opportunities presented by batch processing workloads.

You can check out the documentation for RayLLM-Batch here.

Benchmarking with Llama

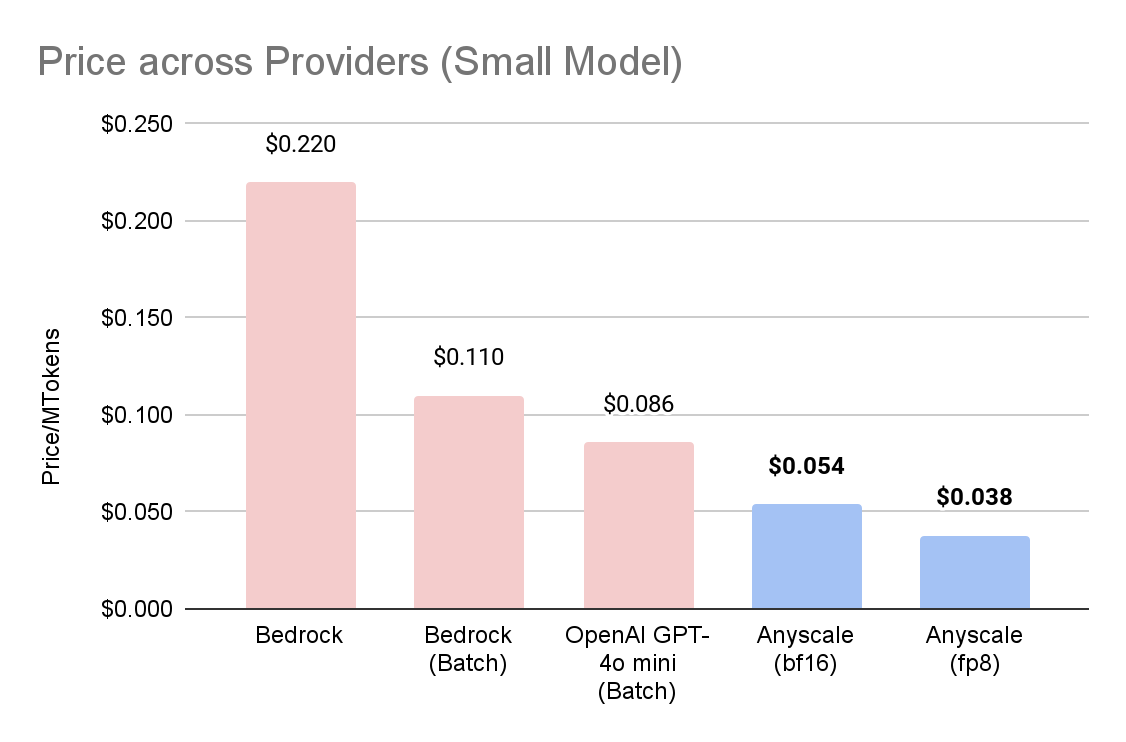

In the experiment below, we evaluate RayLLM-Batch on Anyscale with Llama 3.1 8B Instruct and compare it with common alternatives: Bedrock, Bedrock with batch pricing, and OpenAI GPT-4o mini with batch pricing. In all of the Anyscale experiments, we use commonly available AWS GPUs such as L4 and L40S, which can be provisioned on demand.

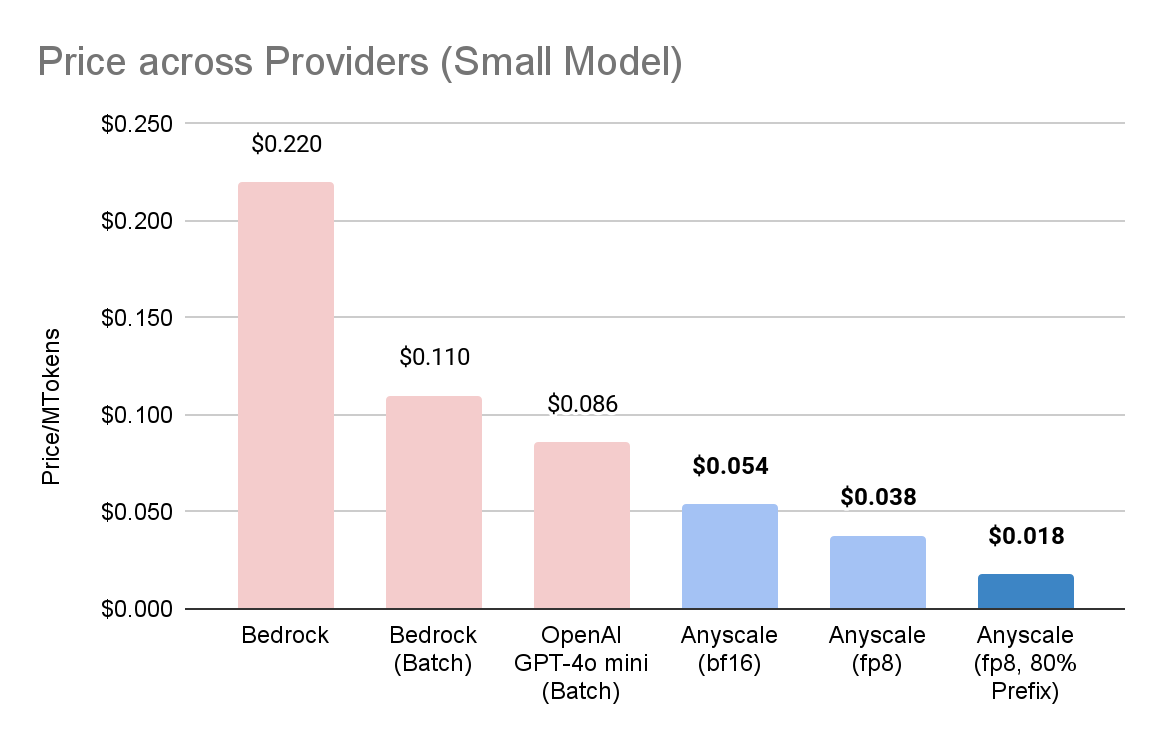

We evaluate the solutions using a dataset of requests with 2,000 input tokens and 100 output tokens and no shared prefix, a common request shape for summarization tasks. We measure performance across 1,000 requests and calculate per-token pricing from the measured request throughput. For Anyscale, we evaluate both the original BF16 model and an FP8 version of the model. The FP8 version recovers 99.28% of the original Llama 3.1 8B Instruct's Open LLM Leaderboard evaluation scores and is commonly used in lieu of the BF16 model.
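Per-token pricing for self-hosted compute follows from throughput and the hourly instance price. The minimal sketch below assumes a single instance billed at a flat hourly rate and a blended price across input and output tokens. Managed APIs often price input and output tokens separately. The function names and example numbers are hypothetical, not the benchmark's measured values.

```python
def tokens_per_second(requests_per_second: float, input_tokens: int = 2000, output_tokens: int = 100) -> float:
    """Blended token throughput: every request contributes its input and output tokens."""
    return requests_per_second * (input_tokens + output_tokens)


def cost_per_million_tokens(hourly_price_usd: float, tps: float) -> float:
    """Dollars per 1M tokens for an instance billed by the hour at a sustained throughput."""
    return hourly_price_usd / (tps * 3600) * 1_000_000


def times_cheaper(baseline_per_m: float, candidate_per_m: float) -> float:
    """Ratio of per-token prices, e.g. 2.9 means the candidate is 2.9x cheaper."""
    return baseline_per_m / candidate_per_m


if __name__ == "__main__":
    # Hypothetical: an instance at $1.00/hour sustaining 1 request/s of the 2000/100 shape.
    tps = tokens_per_second(1.0)  # 2100 tokens/s
    print(f"${cost_per_million_tokens(1.0, tps):.4f} per 1M tokens")
```

The hourly price sits in the numerator, so a cheaper GPU with lower absolute throughput can still come out ahead on cost per token. This is the throughput-per-dollar argument for using L4 or L40S instances in batch settings.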

We see that for this specific workload, compared to alternatives, the Anyscale FP8 deployment is 2.2x cheaper than OpenAI GPT-4o mini’s batch pricing, and 2.9x cheaper than Amazon Bedrock.¹

In many offline workloads, requests commonly share prompts or prefixes. In such situations, the cost differential can be much greater, because Anyscale can take advantage of prefix caching, unlike OpenAI and Bedrock. On the same workload (2,000 input / 100 output tokens, Llama 3.1 8B) with an 80% shared prefix across requests, the Anyscale solution is 6.1x cheaper than AWS Bedrock with batch pricing and 4.78x cheaper than OpenAI GPT-4o mini with batch pricing.
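The following toy model of block-level prefix caching shows why a shared prefix helps. It is similar in spirit to vLLM's automatic prefix caching. Each fixed-size block of prompt tokens is hashed together with the hash of the block before it, so a cached block is reused only when the entire preceding prefix matches. `BLOCK_SIZE`, the hashing scheme, and the token IDs are illustrative assumptions. The sketch counts only prefill tokens and ignores decode cost.

```python
import hashlib

BLOCK_SIZE = 16  # tokens per KV-cache block (illustrative value)


def block_hashes(tokens: list[int]) -> list[bytes]:
    """Chain-hash each full block with its predecessor, so equal hashes imply equal prefixes."""
    hashes, prev = [], b""
    full = len(tokens) - len(tokens) % BLOCK_SIZE  # a partial tail block is never cached
    for start in range(0, full, BLOCK_SIZE):
        block = tokens[start:start + BLOCK_SIZE]
        prev = hashlib.sha256(prev + repr(block).encode()).digest()
        hashes.append(prev)
    return hashes


def prefill_tokens(requests: list[list[int]]) -> int:
    """Total prompt tokens that must be prefilled when cached prefix blocks are reused."""
    cache: set[bytes] = set()
    computed = 0
    for tokens in requests:
        hashes = block_hashes(tokens)
        hit_blocks = 0
        for h in hashes:  # reuse stops at the first block whose prefix is not cached
            if h not in cache:
                break
            hit_blocks += 1
        cache.update(hashes)
        computed += len(tokens) - hit_blocks * BLOCK_SIZE
    return computed


if __name__ == "__main__":
    shared = list(range(1600))  # 80% of a 2,000-token prompt
    reqs = [shared + [10_000 * (i + 1) + j for j in range(400)] for i in range(10)]
    print(prefill_tokens(reqs), "of", sum(len(r) for r in reqs), "prompt tokens prefilled")
```

Consider ten 2,000-token prompts sharing a 1,600-token prefix. Only the first request prefills the shared part, and each of the others prefills just its 400 unique tokens. That gives 5,600 prefill tokens instead of 20,000. Decode work for the output tokens is unchanged, so end-to-end savings depend on how much of the total cost prefill accounts for.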

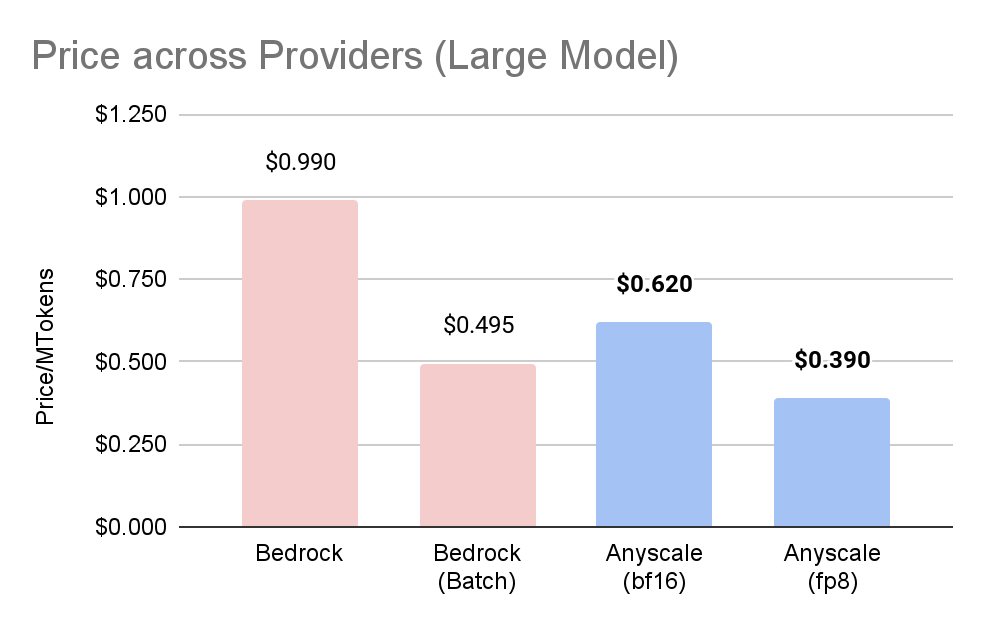

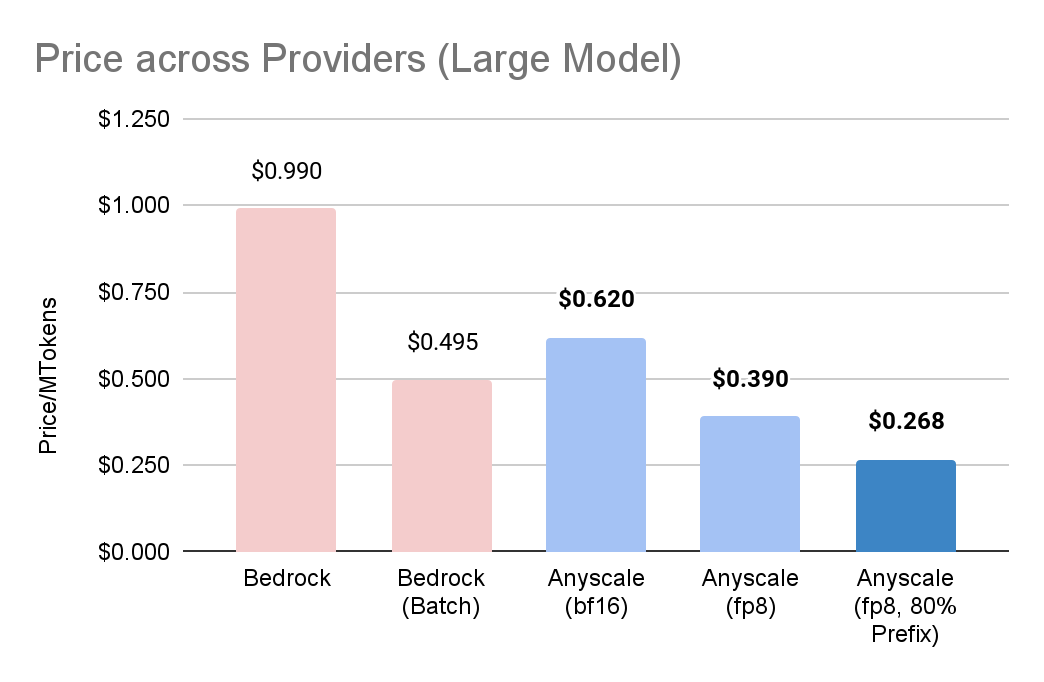

In the experiment below, we evaluate RayLLM-Batch on Anyscale with Llama 3.1 70B Instruct and compare it with Amazon Bedrock’s pricing for the same Llama 3.1 70B Instruct model. We evaluate the solutions using a dataset of requests with 2,000 input tokens and 100 output tokens and no shared prefix.

For Anyscale, we evaluate the original BF16 model and also show results for an FP8 version of the model. The FP8 version recovers 99.88% of the original Llama 3.1 70B Instruct's Open LLM Leaderboard evaluation scores and is commonly used in lieu of the BF16 model.

On this workload, the Anyscale FP8 batch inference solution is 22% cheaper than Amazon Bedrock.

With an 80% shared prefix across requests, the Anyscale solution is 1.8x cheaper than AWS Bedrock with batch pricing.

For the chosen workloads, Anyscale outperforms these common alternatives on price-performance with both the small and the large model. Although we tried to choose representative workloads, actual results will vary with the details of each user's workload.

Conclusion

You can get started with our batch inference solution on Anyscale today with our newly released RayLLM-Batch template. Documentation for RayLLM-Batch can be found here.

Check out Anyscale today for accelerating other AI workloads: https://www.anyscale.com/

To reproduce our benchmark, refer to our GitHub repo.

¹ Anyscale pricing is based on a self-hosted compute model using EC2 list prices as of September 2024.