Anyscale Endpoints

Announcing Anyscale Private Endpoints and Anyscale Endpoints Fine-tuning


Update June 2024: Anyscale Endpoints (Anyscale's LLM API offering) and Private Endpoints (self-hosted LLMs) are now available as part of the Anyscale Platform. Get started on the Anyscale Platform.

TL;DR: Anyscale recently released Anyscale Endpoints to help developers integrate fast, cost-efficient, and scalable open-source LLMs through familiar APIs. Today, Anyscale goes a step further by letting LLM developers deploy Anyscale Endpoints privately in their own cloud, helping customers align their use of LLMs with their security posture while gaining more control and configurability. Anyscale Endpoints Fine-tuning is also now publicly available, enabling developers to use their own data to improve the quality of OSS models and build custom-tailored applications.

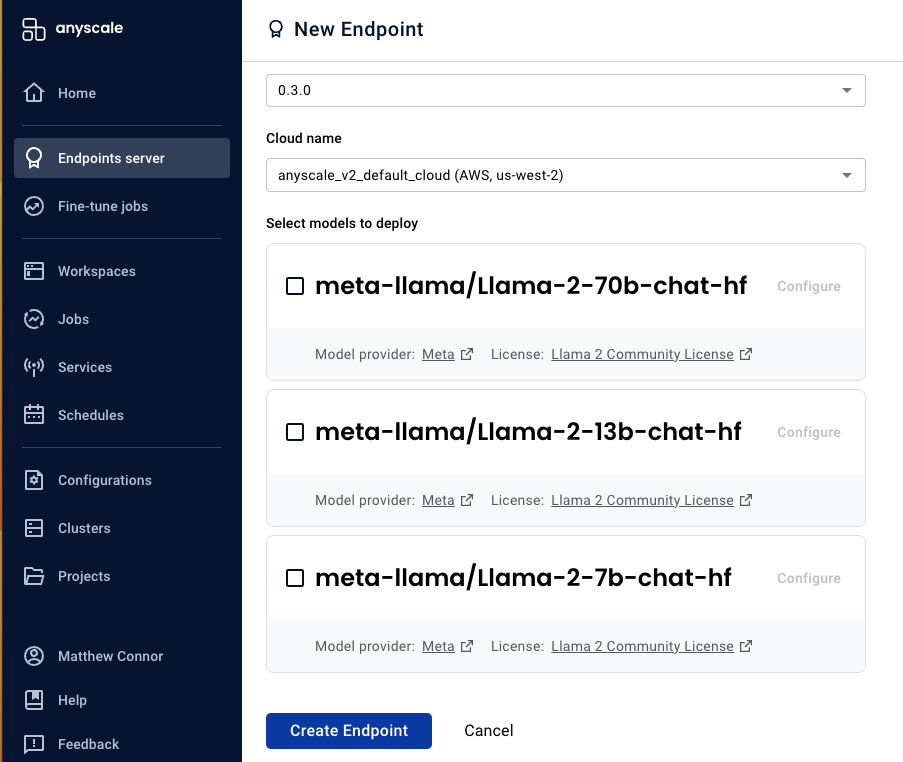

Anyscale Private Endpoints

Here's a quick glimpse at what Anyscale Private Endpoints offers:

State-of-the-art open-source models with purpose-built performance and cost optimizations.

An easy serverless approach to running open-source LLMs that removes infrastructure complexity.

Fine-tuning of LLMs in customer accounts using private data.

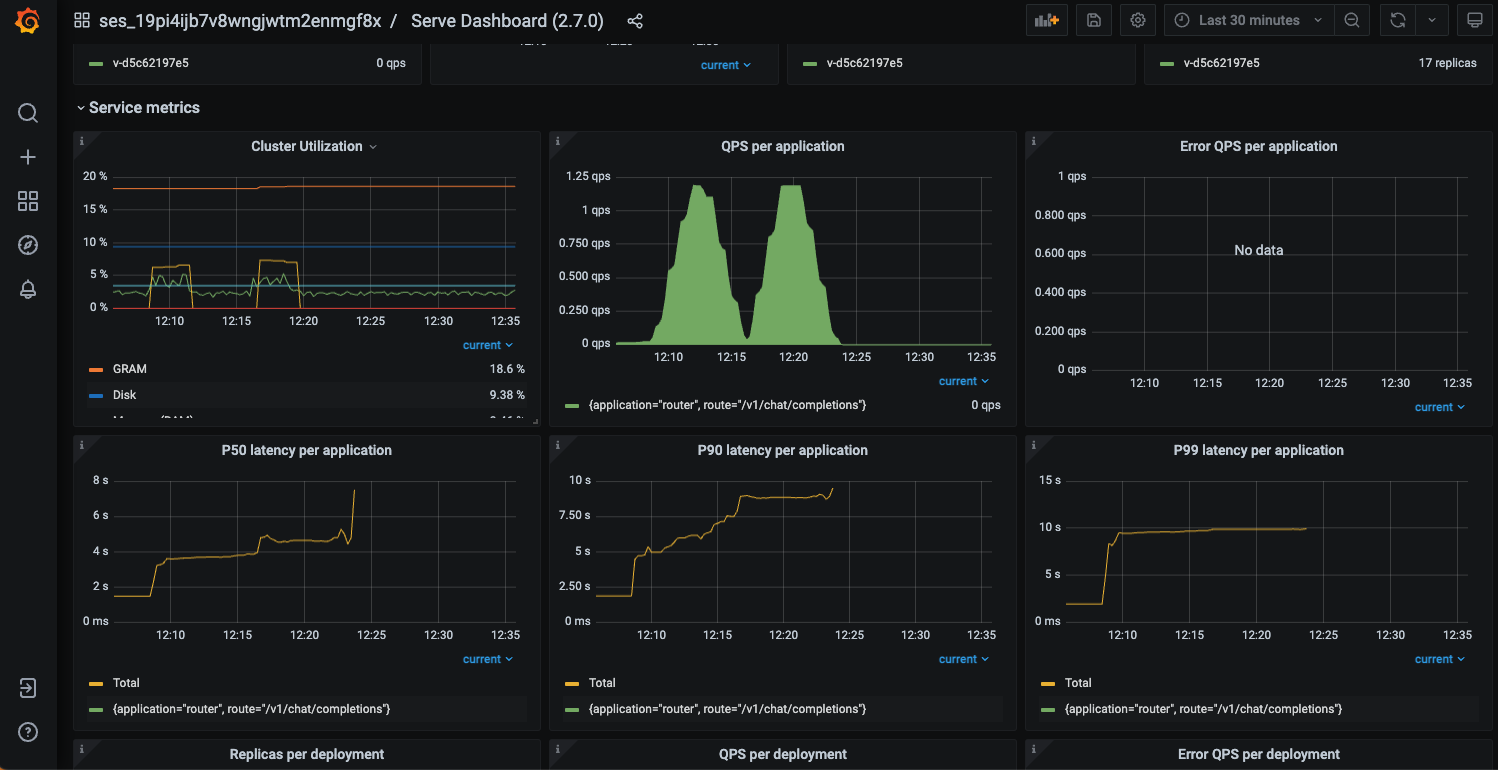

Production readiness with zero downtime upgrades, high availability, and fault tolerance.

Enhanced observability and alerting capabilities.

Seamless integration with the Anyscale Platform, which supports further LLM application development as well as your broader AI and ML workloads.

Sign up for the preview. Once approved, you can deploy and integrate the best OSS models through a fully OpenAI-compatible API and SDK.
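Because the API is described as OpenAI-compatible, a client should be able to send a standard chat completions request to it. The sketch below builds such a request with only the Python standard library. It is a minimal illustration under stated assumptions, not Anyscale's documented client: the base URL is a hypothetical placeholder for your own deployment, the model ID is an assumed example, and the request and response shapes follow the OpenAI chat completions format rather than anything specified in this announcement.

```python
import json
import urllib.request

# ASSUMPTIONS: placeholder base URL and example model ID; replace them with the
# values shown for your own Anyscale deployment.
BASE_URL = "https://YOUR-ENDPOINT.example.com/v1"
MODEL = "mistralai/Mistral-7B-Instruct-v0.1"


def build_chat_request(prompt: str, api_key: str,
                       base_url: str = BASE_URL,
                       model: str = MODEL) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completions request."""
    body = {
        "model": model,
        "messages": [
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": prompt},
        ],
        "temperature": 0.7,
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def extract_reply(payload: dict) -> str:
    """Pull the assistant text out of an OpenAI-style chat completions response."""
    return payload["choices"][0]["message"]["content"]


def send_chat_request(request: urllib.request.Request, timeout: float = 30.0) -> str:
    """Send the request over the network and return the assistant's reply."""
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return extract_reply(json.load(resp))
```

With the official `openai` Python package (v1 or later), the equivalent is to construct `OpenAI(base_url=..., api_key=...)` and call `client.chat.completions.create(...)`; the stdlib version above simply makes the wire format explicit.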

Anyscale Endpoints Fine-tuning:

State-of-the-art open-source models with purpose-built performance and cost optimizations.

Seamless deployment of a dedicated LLM customized for your application.

The best price/performance combination:

Small models (Llama 2 7B):

Fixed cost of $5 to start a fine-tuning job

Fine-tuning: $1 / million tokens

Serving: $0.25 / million tokens

Medium models (Llama 2 13B):

Fixed cost of $5 to start a fine-tuning job

Fine-tuning: $2 / million tokens

Serving: $0.50 / million tokens
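The listed prices combine a fixed per-job fee with per-token rates, so a rough budget is simple arithmetic. The sketch below encodes the prices from the list above. It assumes, as an illustration only, that "training tokens" means the total number of tokens billed for the job (for example, dataset tokens multiplied by the number of epochs); this announcement does not specify how tokens are counted. The dictionary keys are hypothetical labels, not official model identifiers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FineTuningPrice:
    job_fee_usd: float             # fixed cost to start a fine-tuning job
    train_usd_per_m_tokens: float  # fine-tuning price per million tokens
    serve_usd_per_m_tokens: float  # serving price per million tokens


# Prices as listed in this announcement; keys are hypothetical labels.
PRICES = {
    "llama2-7b": FineTuningPrice(5.0, 1.0, 0.25),
    "llama2-13b": FineTuningPrice(5.0, 2.0, 0.50),
}


def fine_tuning_cost(model: str, training_tokens: int) -> float:
    """Fixed job fee plus per-million-token fine-tuning charge (ASSUMED billing basis)."""
    price = PRICES[model]
    return price.job_fee_usd + training_tokens / 1_000_000 * price.train_usd_per_m_tokens


def serving_cost(model: str, served_tokens: int) -> float:
    """Per-million-token serving charge for the fine-tuned model."""
    return served_tokens / 1_000_000 * PRICES[model].serve_usd_per_m_tokens
```

Under these assumptions, a Llama 2 7B job over 10 million training tokens would cost $5 + 10 × $1 = $15, and serving 100 million tokens on that model would cost 100 × $0.25 = $25.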

Anyscale Endpoints is announcing GA for base model inference:

Base model serving on Anyscale Endpoints is now Generally Available (GA).

Mistral 7B has been added as a base model. Mistral 7B has proven to be one of the highest-quality small models and a powerful OSS model for LLM application developers.

Anyscale Endpoints is enabling companies like RealChar.ai and Merlin to serve their customers at scale.

Additional services from the Anyscale Platform:

Over the years, we have developed robust and scalable infrastructure through continuous iteration and valuable feedback from users and customers. The stability and reliability of our managed Ray solution on the Anyscale Platform let you focus on your AI applications while we handle the complexities of infrastructure management. Because Anyscale handles advanced autoscaling, smart instance selection, intelligent spot instance support, and user-customized Docker images, Anyscale Private Endpoints serves as an ideal platform for accelerating your LLM application development.

We are excited to see the innovation you’ll bring to your generative AI applications with Anyscale Private Endpoints and Anyscale Endpoints Fine-tuning, and we look forward to your feedback! Join us on this transformative journey by diving into the Anyscale Platform.