An Introduction to Reinforcement Learning with OpenAI Gym, RLlib, and Google Colab

One possible definition of reinforcement learning (RL) is a computational approach to learning how to maximize the total sum of rewards when interacting with an environment. While a definition is useful, this tutorial aims to illustrate what reinforcement learning is through images, code, and video examples and along the way introduce reinforcement learning terms like agents and environments.

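In particular, this tutorial explores what reinforcement learning is, how agents and environments interact, the OpenAI Gym CartPole environment, how to train an agent with Ray's RLlib, how to use a GPU to speed up training, and hyperparameter tuning with Ray Tune.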

What is Reinforcement Learning

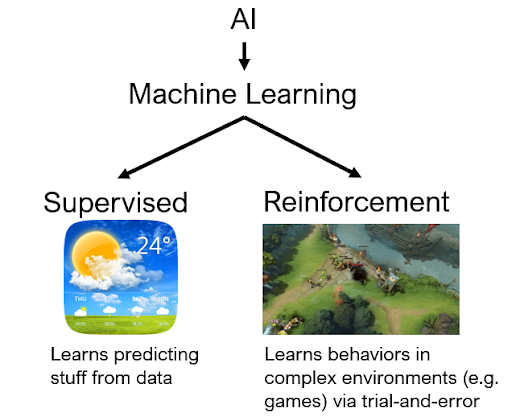

As a previous post noted, machine learning (ML), a sub-field of AI, uses neural networks or other types of mathematical models to learn how to interpret complex patterns. Two areas of ML that have recently become very popular due to their high level of maturity are supervised learning (SL), in which neural networks learn to make predictions based on large amounts of data, and reinforcement learning (RL), where the networks learn to make good action decisions in a trial-and-error fashion, using a simulator.

RL is the technology behind mind-boggling successes such as DeepMind’s AlphaGo Zero and the StarCraft II AI (AlphaStar) or OpenAI’s Dota 2 AI (“OpenAI Five”). There are many other impressive uses of reinforcement learning. It is powerful and promising for real-life decision-making problems because it can learn continuously, sometimes even in ever-changing environments, starting with no knowledge of which decisions to make (random behavior).

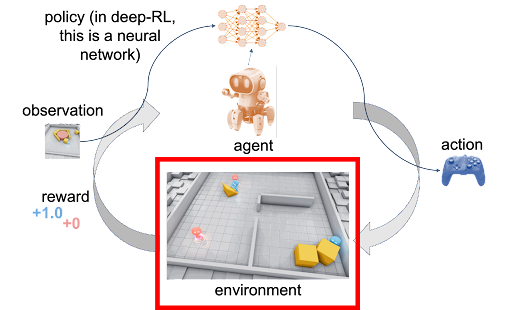

Agents and Environments

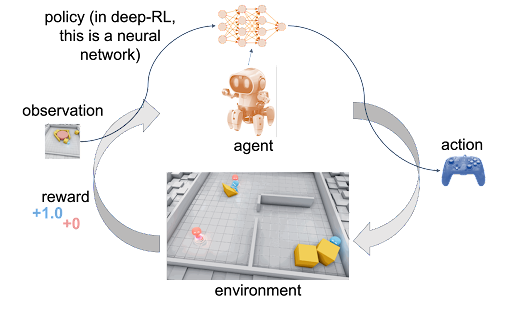

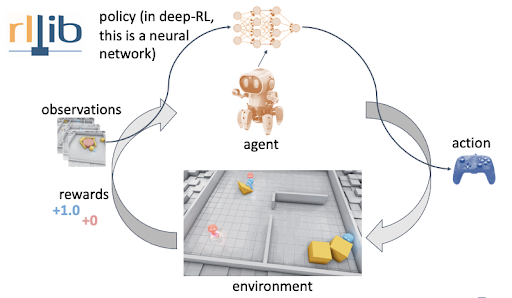

The diagram above shows the interactions and communications between an agent and an environment. In reinforcement learning, one or more agents interact within an environment, which may be either a simulation like CartPole in this tutorial or a connection to real-world sensors and actuators. At each step, the agent receives an observation (i.e., the state of the environment), takes an action, and usually receives a reward (how often an agent receives a reward depends on the task or problem). Agents learn from repeated trials. Each trial is called an episode: the sequence of observations, actions, and rewards from an initial observation up to either a “success” or “failure” that causes the environment to reach its “done” state. The learning portion of an RL framework trains a policy that decides which actions (i.e., sequential decisions) lead agents to maximize their long-term, cumulative rewards.
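The interaction loop described above can be sketched without any RL library. The toy environment below is purely hypothetical: the class name `LineWorld`, its reward of 1 per step, and its termination rule are illustrative assumptions, not part of Gym. It only mirrors the reset/step pattern used later in this tutorial.

```python
import random


class LineWorld:
    """Hypothetical toy environment: stay near the origin for as long as possible."""

    def __init__(self, limit=3, max_steps=20):
        self.limit = limit
        self.max_steps = max_steps
        self.position = 0
        self.steps = 0

    def reset(self):
        """Start a new episode and return the initial observation."""
        self.position = 0
        self.steps = 0
        return self.position

    def step(self, action):
        """Apply an action (0 = left, 1 = right); return (observation, reward, done, info)."""
        self.position += 1 if action == 1 else -1
        self.steps += 1
        # The episode ends when the agent drifts too far or the step budget runs out.
        done = abs(self.position) >= self.limit or self.steps >= self.max_steps
        reward = 1.0  # one point for every step taken
        return self.position, reward, done, {}


def run_episode(env, policy):
    """Run a single episode and return the cumulative reward."""
    observation = env.reset()
    total_reward = 0.0
    done = False
    while not done:
        action = policy(observation)
        observation, reward, done, _info = env.step(action)
        total_reward += reward
    return total_reward


def random_policy(observation):
    """Ignore the observation and pick an action at random."""
    return random.choice([0, 1])


print("return of one random episode:", run_episode(LineWorld(), random_policy))
```

The return printed here varies from run to run because the policy is random. A learning algorithm would adjust the policy so that this number tends to grow over many episodes.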

The OpenAI Gym CartPole Environment

CartPole

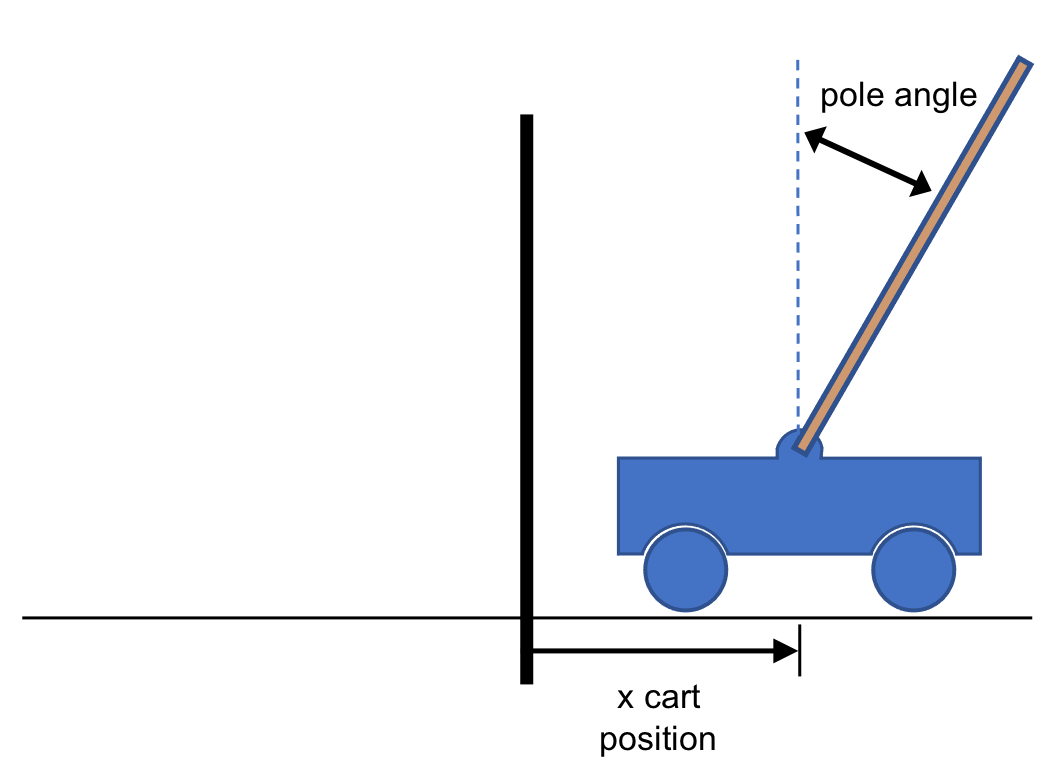

The problem to solve is keeping a pole upright. Specifically, the pole is attached by an un-actuated joint to a cart, which moves along a frictionless track. The pendulum starts upright, and the goal is to prevent it from falling over by increasing and reducing the cart's velocity.

Rather than code this environment from scratch, this tutorial uses OpenAI Gym, a toolkit that provides a wide variety of simulated environments (Atari games, board games, 2D and 3D physical simulations, and so on). Gym makes no assumptions about the structure of your agent (what pushes the cart left or right in this CartPole example) and is compatible with any numerical computation library, such as NumPy.

The code below loads the cartpole environment.

    import gym

    env = gym.make("CartPole-v0")

Let's now start to understand this environment by looking at the action space.

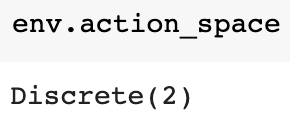

    env.action_space

The output Discrete(2) means that there are two actions. In cartpole, 0 corresponds to "push cart to the left" and 1 corresponds to "push cart to the right". Note that in this particular example, standing still is not an option. In reinforcement learning, the agent produces an action output and this action is sent to an environment which then reacts. The environment produces an observation (along with a reward signal, not shown here) which we can see below:

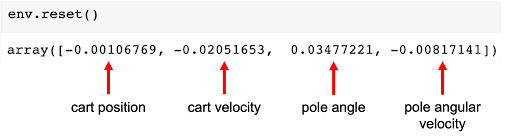

    env.reset()

The observation is a vector of dim=4, containing the cart's x position, cart x velocity, the pole angle in radians (1 radian = 57.295 degrees), and the angular velocity of the pole. The numbers shown above are the initial observation after starting a new episode (`env.reset()`). With each timestep (and action), the observation values will change, depending on the state of the cart and pole.
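To make the four observation values easier to read, they can be given names and the pole angle converted to degrees. The sketch below is a small convenience wrapper. The `CartPoleObservation` type and `describe` function are hypothetical names introduced here, and the numbers are illustrative rather than real environment output.

```python
import math
from typing import NamedTuple, Sequence


class CartPoleObservation(NamedTuple):
    """Named view of the 4-value CartPole observation vector."""

    cart_position: float
    cart_velocity: float
    pole_angle_rad: float
    pole_angular_velocity: float

    @property
    def pole_angle_deg(self) -> float:
        """Pole angle converted from radians to degrees."""
        return math.degrees(self.pole_angle_rad)


def describe(observation: Sequence[float]) -> CartPoleObservation:
    """Wrap a raw observation (list or array of 4 numbers) in the named tuple."""
    return CartPoleObservation(*(float(value) for value in observation))


# Illustrative values only, not real environment output.
obs = describe([0.01, -0.02, 0.03, 0.04])
print(obs)
print(f"pole angle: {obs.pole_angle_deg:.2f} degrees")
```

In the notebook, the same function could be applied to the value returned by `env.reset()`.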

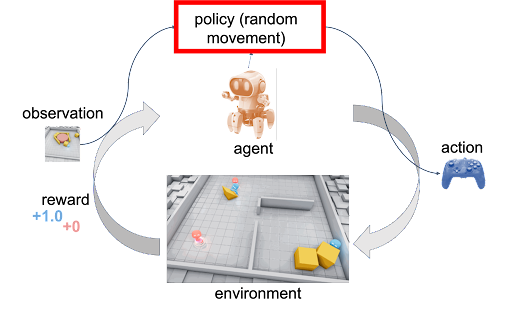

Training an Agent

In reinforcement learning, the goal of the agent is to produce smarter and smarter actions over time. It does so with a policy. In deep reinforcement learning, this policy is represented with a neural network. Let's first interact with the RL gym environment without a neural network or machine learning algorithm of any kind. Instead we'll start with random movement (left or right). This is just to understand the mechanisms.

The code below resets the environment and takes 20 steps (20 cycles), always taking a random action and printing the results.

    # returns an initial observation
    env.reset()

    for i in range(20):
        # env.action_space.sample() produces either 0 (left) or 1 (right).
        observation, reward, done, info = env.step(env.action_space.sample())
        print("step", i, observation, reward, done, info)

    env.close()

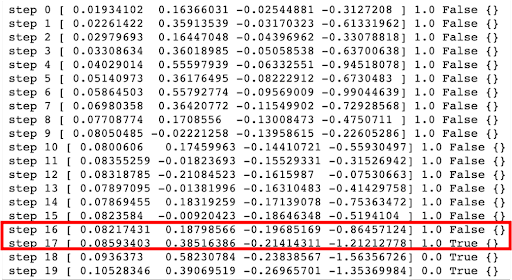

Sample output. There are multiple conditions for episode termination in CartPole. In the image, the episode terminates because the pole angle exceeds 12 degrees (0.20944 rad). The other termination conditions are the cart position exceeding 2.4 (the center of the cart reaches the edge of the display) and the episode length exceeding 200. Separately, the environment is considered solved when the average return is greater than or equal to 195.0 over 100 consecutive trials.

The printed output above shows the following things:

step (how many times it has cycled through the environment). In each timestep, an agent chooses an action, and the environment returns an observation and a reward

observation of the environment [x cart position, x cart velocity, pole angle (rad), pole angular velocity]

reward achieved by the previous action. The scale varies between environments, but the goal is always to increase your total reward. In CartPole, the reward is 1 for every step taken, including the termination step. After that it is 0 (steps 18 and 19 in the image).

done is a boolean. It indicates whether it's time to reset the environment again. Most tasks are divided up into well-defined episodes, and done being True indicates the episode has terminated. In CartPole, this happens when the pole tips too far (more than 12 degrees/0.20944 radians), when the cart position exceeds 2.4 (the center of the cart reaches the edge of the display), or when the episode length exceeds 200. The solved requirement (an average return greater than or equal to 195.0 over 100 consecutive trials) is a separate benchmark and does not end an episode.

info which is diagnostic information useful for debugging. It is empty for this cartpole environment.
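The termination conditions and the solved requirement listed above can be written down as two small checks. This is a sketch based only on the thresholds quoted in this tutorial, not Gym's source code. The function names are hypothetical, and treating the step limit as "stop once 200 steps have been taken" is an assumption about how the limit is applied.

```python
import math
from collections import deque

ANGLE_LIMIT_RAD = math.radians(12)  # about 0.20944 rad
POSITION_LIMIT = 2.4                # cart center reaches the edge of the display
MAX_EPISODE_STEPS = 200             # assumed cut-off after 200 steps
SOLVED_RETURN = 195.0
SOLVED_WINDOW = 100


def episode_done(cart_position, pole_angle_rad, steps_taken):
    """Return True if any of the termination conditions described above holds."""
    return (
        abs(pole_angle_rad) > ANGLE_LIMIT_RAD
        or abs(cart_position) > POSITION_LIMIT
        or steps_taken >= MAX_EPISODE_STEPS
    )


def is_solved(episode_returns):
    """Return True if the last 100 episode returns average at least 195.0."""
    recent = deque(episode_returns, maxlen=SOLVED_WINDOW)  # keeps only the newest 100
    if len(recent) < SOLVED_WINDOW:
        return False
    return sum(recent) / SOLVED_WINDOW >= SOLVED_RETURN


print(episode_done(cart_position=0.0, pole_angle_rad=0.25, steps_taken=18))
print(is_solved([200.0] * 100))
```

Keeping the two checks separate reflects the distinction in the text: `episode_done` is evaluated at every step, while `is_solved` looks across many finished episodes.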

While these numbers are useful outputs, a video might be clearer. If you are running this code in Google Colab, it is important to note that there is no display driver available for generating videos. However, it is possible to install a virtual display driver to get it to work.

    # install dependencies needed for recording videos
    !apt-get install -y xvfb x11-utils
    !pip install pyvirtualdisplay==0.2.*

The next step is to start an instance of the virtual display.

    from pyvirtualdisplay import Display

    display = Display(visible=False, size=(1400, 900))
    _ = display.start()

OpenAI Gym has a VideoRecorder wrapper that can record a video of the running environment in MP4 format. The code below is the same as before except that it runs for 200 steps and records a video.

    from gym.wrappers.monitoring.video_recorder import VideoRecorder

    before_training = "before_training.mp4"

    video = VideoRecorder(env, before_training)
    # returns an initial observation
    env.reset()
    for i in range(200):
        env.render()
        video.capture_frame()
        # env.action_space.sample() produces either 0 (left) or 1 (right).
        observation, reward, done, info = env.step(env.action_space.sample())
        # Not printing this time
        # print("step", i, observation, reward, done, info)

    video.close()
    env.close()

Usually you end the simulation when done is True. The code above lets the environment keep going after a termination condition is reached. In CartPole, this could be when the pole tips over, when the cart goes off-screen, or when another termination condition is met.

The code above saved the video file to the Colab disk. To display it in the notebook, you need a helper function.

    from base64 import b64encode

    def render_mp4(videopath: str) -> str:
        """
        Returns an HTML video tag that embeds a base64-encoded copy
        of the MP4 video at the specified path.
        """
        with open(videopath, "rb") as f:
            mp4 = f.read()
        base64_encoded_mp4 = b64encode(mp4).decode()
        return f'<video width=400 controls><source src="data:video/mp4;' \
               f'base64,{base64_encoded_mp4}" type="video/mp4"></video>'

The code below renders the results. You should get a video similar to the one below.

    from IPython.display import HTML

    html = render_mp4(before_training)
    HTML(html)

Playing the video demonstrates that randomly choosing an action is not a good policy for keeping the pole upright.

How to Train an Agent using Ray's RLlib

In the previous section of the tutorial, our agent took random actions, disregarding the observations and rewards from the environment. The goal of having an agent is to produce smarter and smarter actions over time, and random actions don't accomplish that. To take smarter actions over time, the agent needs a better policy. In deep reinforcement learning, the policy is represented with a neural network.
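Before bringing in a neural network, it may help to see that a policy is just a mapping from an observation to an action. The hand-written rule below is a hypothetical illustration, not something the tutorial trains: it pushes the cart toward the side the pole is leaning to, assuming a positive pole angle means the pole leans to the right.

```python
def lean_policy(observation):
    """Push the cart toward the side the pole is leaning to.

    observation: [cart position, cart velocity, pole angle (rad), pole angular velocity]
    returns: 0 (push cart to the left) or 1 (push cart to the right)
    """
    _position, _velocity, pole_angle, _angular_velocity = observation
    # Assumption: a positive angle means the pole leans right, so push right.
    return 1 if pole_angle > 0 else 0


# Illustrative observations, not real environment output.
print(lean_policy([0.0, 0.0, 0.05, 0.0]))
print(lean_policy([0.0, 0.0, -0.05, 0.0]))
```

Unlike `env.action_space.sample()`, this rule actually uses the observation. A learned policy plays the same role, with the rule replaced by a trained network.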

This tutorial will use the RLlib library to train a smarter agent. RLlib has many advantages like:

Extreme flexibility. It allows you to customize every aspect of the RL cycle. For instance, this section of the tutorial will make a custom neural network policy using PyTorch (RLlib also has native support for TensorFlow).

Scalability. Reinforcement learning applications can be quite compute intensive and often need to scale-out to a cluster for faster training. RLlib not only has first-class support for GPUs, but it is also built on Ray which is an open source library for parallel and distributed Python. This makes scaling Python programs from a laptop to a cluster easy.

A unified API and support for offline, model-based, model-free, multi-agent algorithms, and more (these algorithms won’t be explored in this tutorial).

Being part of the Ray Project ecosystem. One advantage of this is that RLlib can be run with other libraries in the ecosystem like Ray Tune, a library for experiment execution and hyperparameter tuning at any scale (more on this later).

While some of these features won't be fully utilized in this post, they are highly useful for when you want to do something more complicated and solve real world problems. You can learn about some impressive use-cases of RLlib here.

To get started with RLlib, you need to first install it.

    !pip install 'ray[rllib]'==1.6

Now you can train a PyTorch model using the Proximal Policy Optimization (PPO) algorithm. It is a well-rounded, general-purpose algorithm, which you can learn more about here. The code below uses a neural network consisting of a single hidden layer of 32 neurons and linear activation functions.

1import ray

2from ray.rllib.agents.ppo import PPOTrainer

3config = {

4 "env": "CartPole-v0",

5 # Change the following line to `“framework”: “tf”` to use tensorflow

6 "framework": "torch",

7 "model": {

8 "fcnet_hiddens": [32],

9 "fcnet_activation": "linear",

10 },

11}

12 stop = {"episode_reward_mean": 195}

13 ray.shutdown()

14ray.init(

15 num_cpus=3,

16 include_dashboard=False,

17 ignore_reinit_error=True,

18 log_to_driver=False,

19)

20# execute training

21analysis = ray.tune.run(

22 "PPO",

23 config=config,

24 stop=stop,

25 checkpoint_at_end=True,

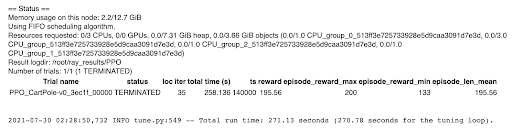

26)This code should produce quite a bit of output. The final entry should look something like this:

The entry shows it took 35 iterations, running over 258 seconds, to solve the environment. This will be different each time, but will probably be about 7 seconds per iteration (258 / 35 = 7.4). If you would like to learn the Ray API and see what commands like ray.shutdown and ray.init do, you can check out this tutorial.
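To give a feel for what the `"fcnet_hiddens": [32]` and `"fcnet_activation": "linear"` settings describe, here is a plain-Python sketch of a forward pass through a network of that shape: 4 observation values in, 32 hidden units, 2 action scores out. This is not RLlib's implementation. The helper names, the random initialization, and the softmax over two action scores are assumptions made for illustration.

```python
import math
import random


def linear_layer(inputs, weights, biases):
    """Compute one fully connected layer: weights times inputs, plus biases."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def random_layer(n_out, n_in, rng):
    """Create small random weights and zero biases (illustrative initialization)."""
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


rng = random.Random(0)
hidden_w, hidden_b = random_layer(32, 4, rng)  # 4 observation values -> 32 hidden units
output_w, output_b = random_layer(2, 32, rng)  # 32 hidden units -> 2 action scores


def action_probabilities(observation):
    """Map an observation to probabilities for the actions (left, right)."""
    # "linear" activation: the hidden layer output is passed on unchanged.
    hidden = linear_layer(observation, hidden_w, hidden_b)
    scores = linear_layer(hidden, output_w, output_b)
    return softmax(scores)


# Illustrative observation, not real environment output.
probs = action_probabilities([0.01, -0.02, 0.03, 0.04])
print("P(left), P(right):", probs)
```

Training adjusts the weights so that actions leading to higher cumulative reward become more probable. With untrained random weights, as here, the two probabilities stay close to 0.5.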

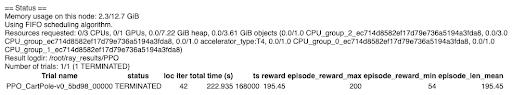

How to use a GPU to Speed Up Training

While the rest of the tutorial utilizes CPUs, it is important to note that you can speed up model training by using a GPU in Google Colab. This can be done by selecting Runtime > Change runtime type and setting the hardware accelerator to GPU. Then select Runtime > Restart and run all.

Notice that, although the number of training iterations might be about the same, the time per iteration has come down significantly (from 7 seconds to 5.5 seconds).

Creating a Video of the Trained Model in Action

RLlib provides a Trainer class which holds a policy for environment interaction. Through the trainer interface, a policy can be trained, used to compute actions, and checkpointed. While the analysis object returned from ray.tune.run earlier did not contain any trainer instances, it has all the information needed to reconstruct one from a saved checkpoint because checkpoint_at_end=True was passed as a parameter. The code below shows this.

    # restore a trainer from the last checkpoint
    trial = analysis.get_best_logdir("episode_reward_mean", "max")
    checkpoint = analysis.get_best_checkpoint(
        trial,
        "training_iteration",
        "max",
    )
    trainer = PPOTrainer(config=config)
    trainer.restore(checkpoint)

Let’s now create another video, but this time choose the action recommended by the trained model instead of acting randomly.

    after_training = "after_training.mp4"
    after_video = VideoRecorder(env, after_training)
    observation = env.reset()
    done = False
    while not done:
        env.render()
        after_video.capture_frame()
        action = trainer.compute_action(observation)
        observation, reward, done, info = env.step(action)
    after_video.close()
    env.close()
    # You should get a video similar to the one below.
    html = render_mp4(after_training)
    HTML(html)

This time, the pole balances nicely, which means the agent has solved the CartPole environment!

Hyperparameter Tuning with Ray Tune

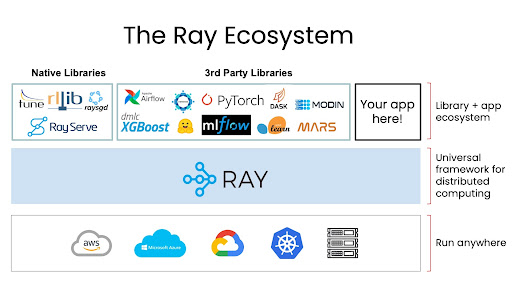

The Ray Ecosystem

For a deep-dive on hyperparameter tuning, from the basics to how to distribute hyperparameter tuning using Ray Tune, check out our blog series on hyperparameter optimization.

RLlib is a reinforcement learning library that is part of the Ray Ecosystem. Ray is a highly scalable universal framework for parallel and distributed Python. It is very general, and that generality is important for supporting its library ecosystem. The ecosystem covers everything from training, to production serving, to data processing and more. You can use multiple libraries together and build applications that do all of these things.

This part of the tutorial utilizes Ray Tune which is another library in the Ray Ecosystem. It is a library for experiment execution and hyperparameter tuning at any scale. While this tutorial will only use grid search, note that Ray Tune also gives you access to more efficient hyperparameter tuning algorithms like population based training, BayesOptSearch, and HyperBand/ASHA.

Let’s now try to find hyperparameters that can solve the CartPole environment in the fewest timesteps.

Enter the following code, and be prepared for it to take a while to run:

    parameter_search_config = {
        "env": "CartPole-v0",
        "framework": "torch",

        # Hyperparameter tuning
        "model": {
            "fcnet_hiddens": ray.tune.grid_search([[32], [64]]),
            "fcnet_activation": ray.tune.grid_search(["linear", "relu"]),
        },
        "lr": ray.tune.uniform(1e-7, 1e-2),
    }

    # To explicitly stop or restart Ray, use the shutdown API.
    ray.shutdown()

    ray.init(
        num_cpus=12,
        include_dashboard=False,
        ignore_reinit_error=True,
        log_to_driver=False,
    )

    parameter_search_analysis = ray.tune.run(
        "PPO",
        config=parameter_search_config,
        stop=stop,
        num_samples=5,
        metric="timesteps_total",
        mode="min",
    )

    print(
        "Best hyperparameters found:",
        parameter_search_analysis.best_config,
    )

By asking for 12 CPU cores (passing num_cpus=12 to ray.init), four trials run in parallel with three CPUs each. If this doesn’t work, perhaps Google has changed the VMs available on Colab. Any value of three or more should work. If Colab errors by running out of RAM, you might need to select Runtime > Factory reset runtime, followed by Runtime > Run all. Note that an area in the top right of the Colab notebook shows the RAM and disk use.

Specifying num_samples=5 means that the grid is repeated five times, with a learning rate sampled at random for each trial. Each repetition of the grid covers two values for the size of the hidden layer and two values for the activation function. So, there will be 5 * 2 * 2 = 20 trials, shown with their statuses in the output of the cell as the calculation runs.
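The trial count can be checked with a short enumeration in plain Python. This sketch only mimics how the search space expands. It does not call Ray Tune, and drawing a separate learning rate for every trial is an assumption about how the random sampling combines with the grid.

```python
import itertools
import random

hidden_layer_options = [[32], [64]]
activation_options = ["linear", "relu"]
num_samples = 5

rng = random.Random(0)  # fixed seed so the illustration is repeatable
trials = []
for _ in range(num_samples):
    # Each repetition walks the full 2 x 2 grid.
    for hiddens, activation in itertools.product(hidden_layer_options, activation_options):
        trials.append({
            "fcnet_hiddens": hiddens,
            "fcnet_activation": activation,
            "lr": rng.uniform(1e-7, 1e-2),  # assumed: one random draw per trial
        })

print("number of trials:", len(trials))
```

Adding a third grid-searched hyperparameter would multiply the count again, which is why grid search becomes expensive quickly.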

Note that Ray prints the current best configuration as it goes. This includes all the default values that have been set, which is a good place to find other parameters that could be tweaked.

After running this, the final output might look similar to the following:

INFO tune.py:549 -- Total run time: 3658.24 seconds (3657.45 seconds for the tuning loop).

Best hyperparameters found: {'env': 'CartPole-v0', 'framework': 'torch', 'model': {'fcnet_hiddens': [64], 'fcnet_activation': 'relu'}, 'lr': 0.006733929096170726}

So, of the twenty sets of hyperparameters, the one with 64 neurons, the ReLU activation function, and a learning rate around 6.7e-3 performed best.

Conclusion

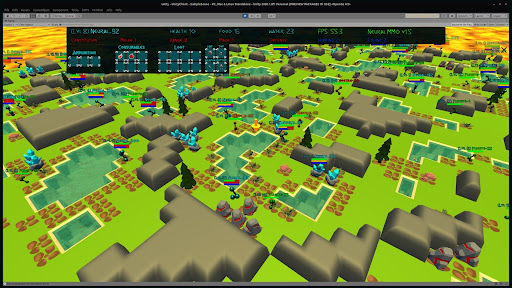

Neural MMO is an environment modeled from Massively Multiplayer Online games — a genre supporting hundreds to thousands of concurrent players. You can learn how Ray and RLlib help enable some key features of this and other projects here.

This tutorial illustrated what reinforcement learning is by introducing reinforcement learning terminology, by showing how agents and environments interact, and by demonstrating these concepts through code and video examples. If you would like to learn more about reinforcement learning, check out the RLlib tutorial by Sven Mika. It is a great way to learn about RLlib’s best practices, multi-agent algorithms, and much more. If you would like to keep up to date with all things RLlib and Ray, consider following @raydistributed on Twitter and signing up for the Ray newsletter.