Ray Summit 2024: Breaking Through the AI Complexity Wall

Ray Summit 2024 brought together an energized community of innovators, contributors, and industry leaders, all excited about one thing: the future of AI. This year’s summit felt like a pivotal moment, not only because Ray has grown to over 1,000 contributors, but because the platform has now reached a staggering milestone—Ray is orchestrating more than 1 million clusters per month. This explosive growth solidifies Ray as the go-to AI Compute Engine, proving that the demand for scalable, flexible, and powerful AI infrastructure has never been greater.

But behind this surge of adoption lies a critical challenge: companies are hitting an AI Complexity Wall. As the number of models, data modalities, and accelerators increases, delivering real value with AI is becoming harder. Everyone is clear on the potential, but teams are held back, and the complexity is only going to get worse. Without the right tools to scale and optimize AI workloads, businesses risk falling behind.

Fortunately, Ray and Anyscale are leading the charge.

The AI Complexity Wall: A Growing Challenge

AI innovation is no longer just about building better models. At Ray Summit 2024, five key trends emerged:

1. Scale

The compute requirements for training state-of-the-art models have grown 5x every year. This growth has been driven by scaling laws, which say, simply put, that more data and more compute lead to better models.
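To get a feel for what 5x per year means, the compounding can be worked through directly. The sketch below is plain arithmetic on the 5x figure quoted above; the function name and the time horizons are illustrative choices, not numbers from the keynote.

```python
def compute_growth(annual_factor: float, years: int) -> float:
    """Return the cumulative growth multiple after `years` of compounding."""
    return annual_factor ** years


# 5x per year is the figure quoted above; the horizons are illustrative.
for years in (1, 2, 3, 5):
    print(f"after {years} year(s): {compute_growth(5.0, years):,.0f}x")
```

At that rate, three years of growth amounts to a 125x increase in compute, and five years to more than 3,000x.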

Compute requirements aren’t just increasing for training; they’re increasing for inference too. Recently OpenAI released o1, its most advanced reasoning model yet, which, when presented with a question, thinks, tests its thoughts, and then affirms or refutes them. But advanced reasoning brings context requirements that are orders of magnitude larger than before: it can take o1 tens of seconds to generate a single answer. This is the beginning of a new scaling era for model inference.

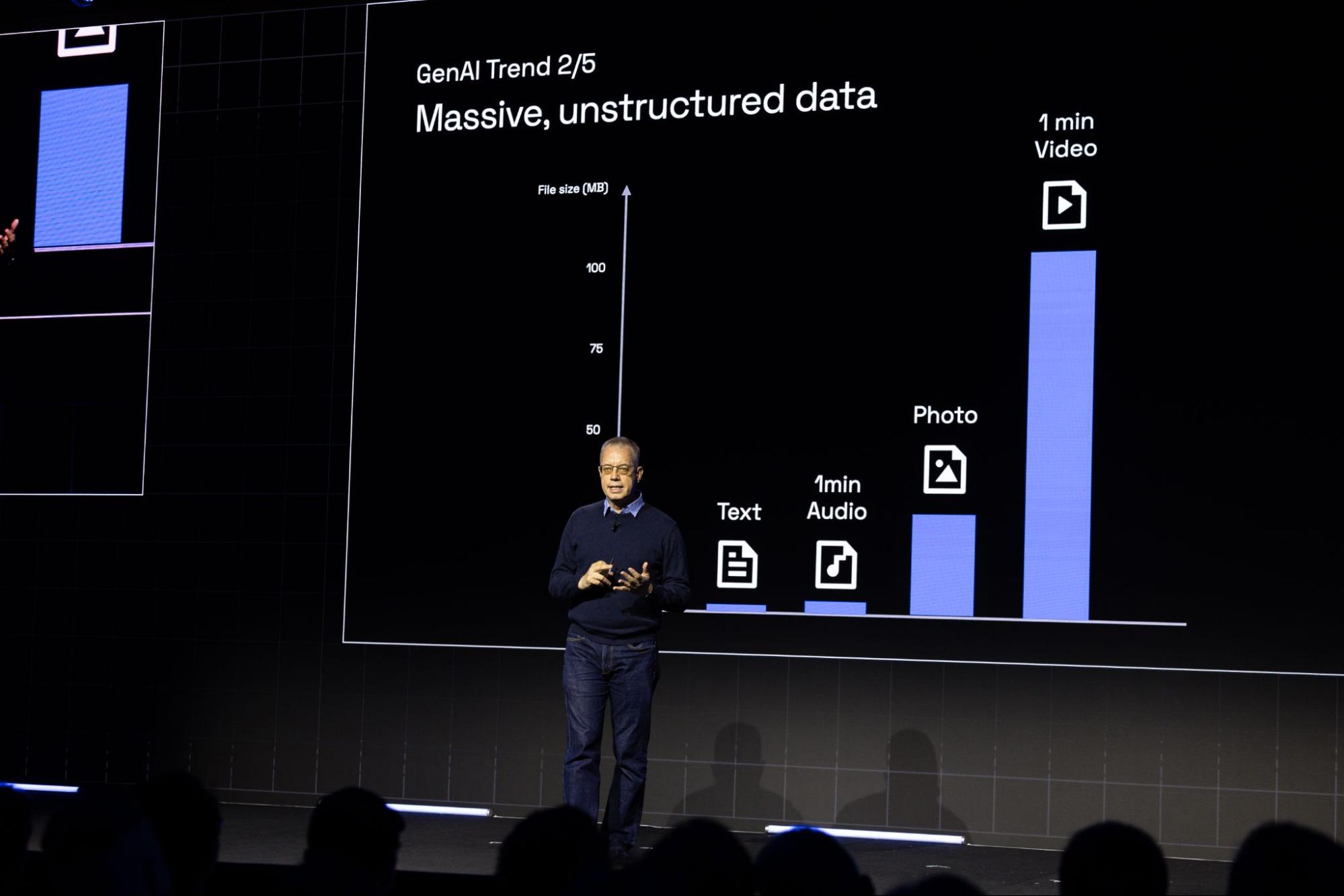

2. Multimodal Data

The era of unstructured data is here. Text, audio, images, and video: these data modalities, which are inherently much larger than structured data, have become increasingly critical.

Companies building video foundation models, such as OpenAI with Sora, Genmo, and Runway, are processing millions of videos and images every day. It isn’t just video companies: Pinterest and Apple are also processing petabytes of multimodal data to power their GenAI applications.

3. Sophisticated post-training

Training no longer means just pre-training and fine-tuning; it now also includes model pruning, distillation, merging, reinforcement learning, and more.

4. AI is powering AI

AI is being increasingly used to optimize every aspect of model development, from data pre-processing, to synthetic data generation, to model partitioning across thousands of GPUs, and more. Each part of the AI stack is itself becoming AI-powered.

5. Compound AI and Agentic Systems

In real enterprise scenarios, it’s not about a single model but about agentic systems that work with many models: LLMs, yes, but also traditional predictive ML models. Compound AI systems only increase the pressure on the underlying AI infrastructure.

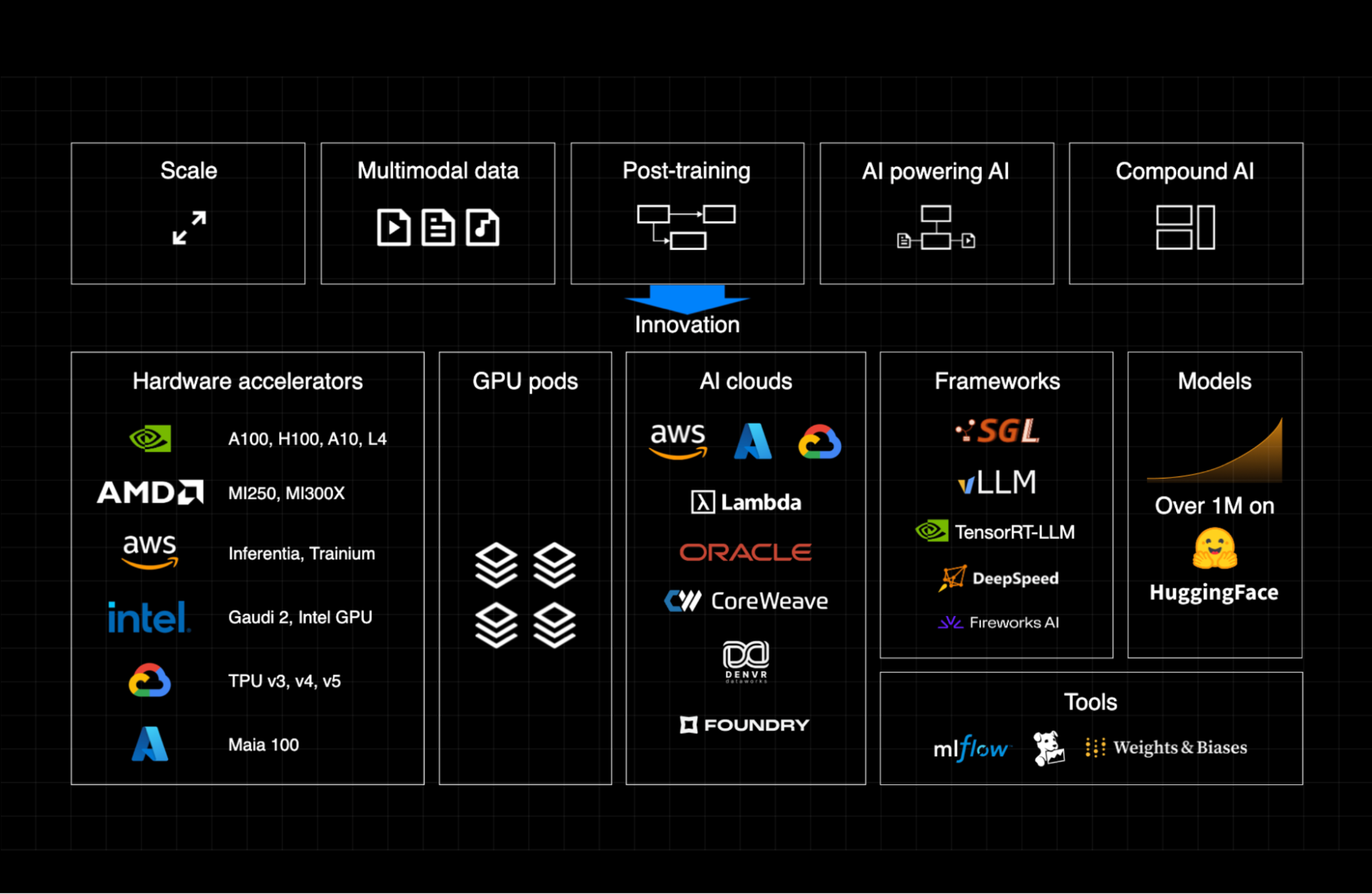

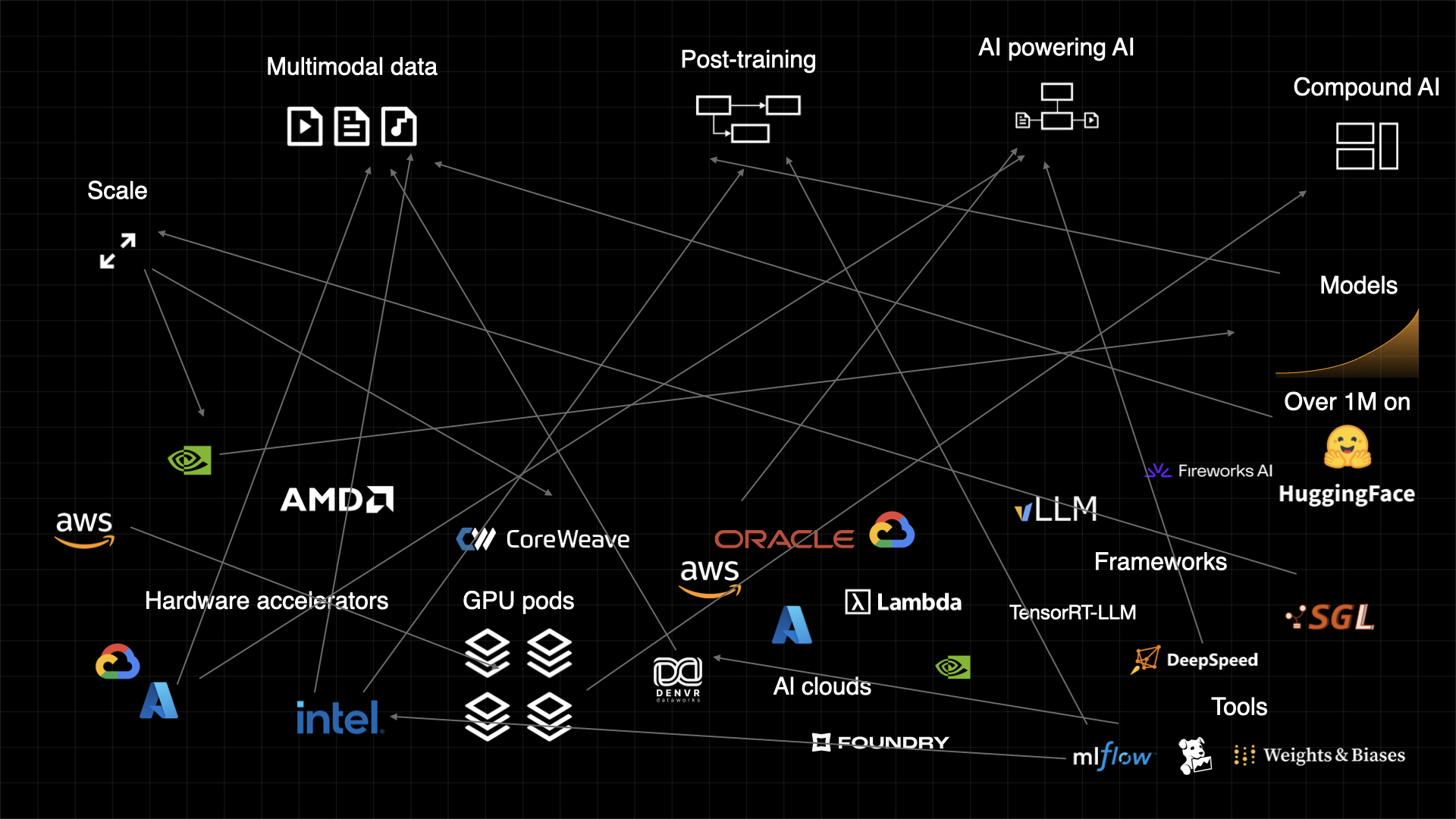

The result of these trends is innovation. Over the past five years, the world has shifted from a CPU-centric to an accelerator-centric model, with GPUs now spanning multiple vendors like Nvidia, AMD, and Intel, each offering various models such as Nvidia's H100 and A100. GPU pods with hundreds or thousands of units are being deployed to support the growing demands of large AI models. New AI-focused clouds such as CoreWeave and Lambda Labs have emerged, along with a proliferation of libraries like vLLM and TRT-LLM. The landscape of available AI models has exploded, with over a million models now on Hugging Face.

So the story is simple:

The huge changes in the compute needs for ML/AI workloads

... lead to very rapid evolution and innovation in the infrastructure and tooling, which

... leads to enormous complexity, which

... creates huge obstacles to AI progress

We call this: The AI Complexity Wall.

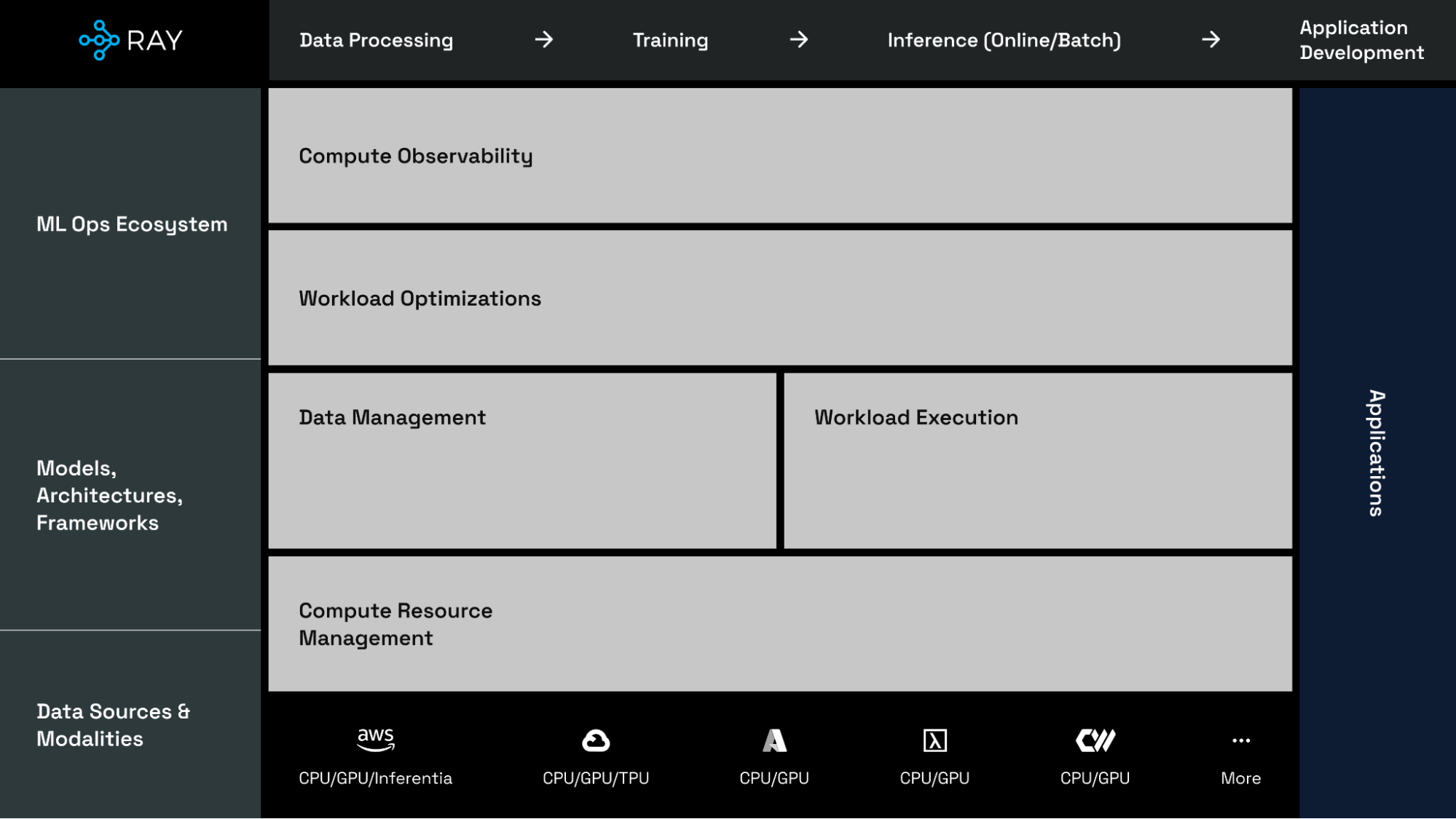

The AI Compute Engine

To break through the AI Complexity Wall, businesses need more than just raw compute power—they need an AI Compute Engine that:

Supports any AI or ML workload

Supports any data types and model architecture

Decouples the allocation of different resource types

Fully utilizes every accelerator, and

Scales from your laptop to thousands of GPUs

While simultaneously being able to:

Abstract away the complexity of this infrastructure from the end developer

Serve as a flexible and unifying platform for the entire AI ecosystem

The AI Compute Engine, of course, is Ray.

When done right, it can have staggering benefits. For example, with Ray and Anyscale:

Instacart is training on 100 times more data

Niantic reduced lines of code by 85%

Canva cut cloud costs in half

Ray Announcements

At Ray Summit, we announced key Ray and Anyscale products and features designed to help companies in every industry overcome the AI Complexity Wall.

1. [New] Compiled Graphs

Ray’s new Compiled Graphs API reduces the overhead of small tasks by pre-allocating resources and enabling fast, peer-to-peer GPU communication, eliminating slow CPU-GPU data transfers. It also improves training efficiency for multimodal architectures.

These optimizations have delivered up to:

17x faster GPU communication on a single node

2.8x faster multi-node performance

15% boost in throughput for batch inference with LLMs

40% improvement in throughput per dollar (achieved by mapping and co-locating text encoders to cheaper GPUs)

2. Ray Data is Now GA

The team announced that Ray Data has reached general availability.

Unstructured and AI data preprocessing is the single fastest-growing use case for Ray. Because these workloads mix conventional preprocessing with model inference, they bring a new regime of system requirements:

They require CPU and GPU compute

They are (simultaneously) GPU and data intensive

As organizations increasingly use AI to read their data—and process it—data processing will become a fundamentally GPU-driven workload. Today’s CPU-based data processing systems, while powerful, will not be able to keep up. Ray Data handles everything from ingestion to last-mile preprocessing to streaming data into training or batch inference workflows.

Companies around the world are already doing amazing things with Ray Data. Recently, Amazon migrated an exabyte-scale workload from Spark to Ray. They cut costs by around 82% and are saving $120 million annually.

3. Stability Improvements

Stability at scale is paramount. To that end, the team announced a 4x improvement in Ray’s scalability, which now supports clusters of up to 8,000 nodes.

We’re committed to maintaining stability. In recent months, we’ve started shipping Ray releases weekly to get features and fixes into the hands of our community faster. Plus, over the last year, the Anyscale team has fixed over 1,700 reliability and usability issues.

Anyscale Announcements

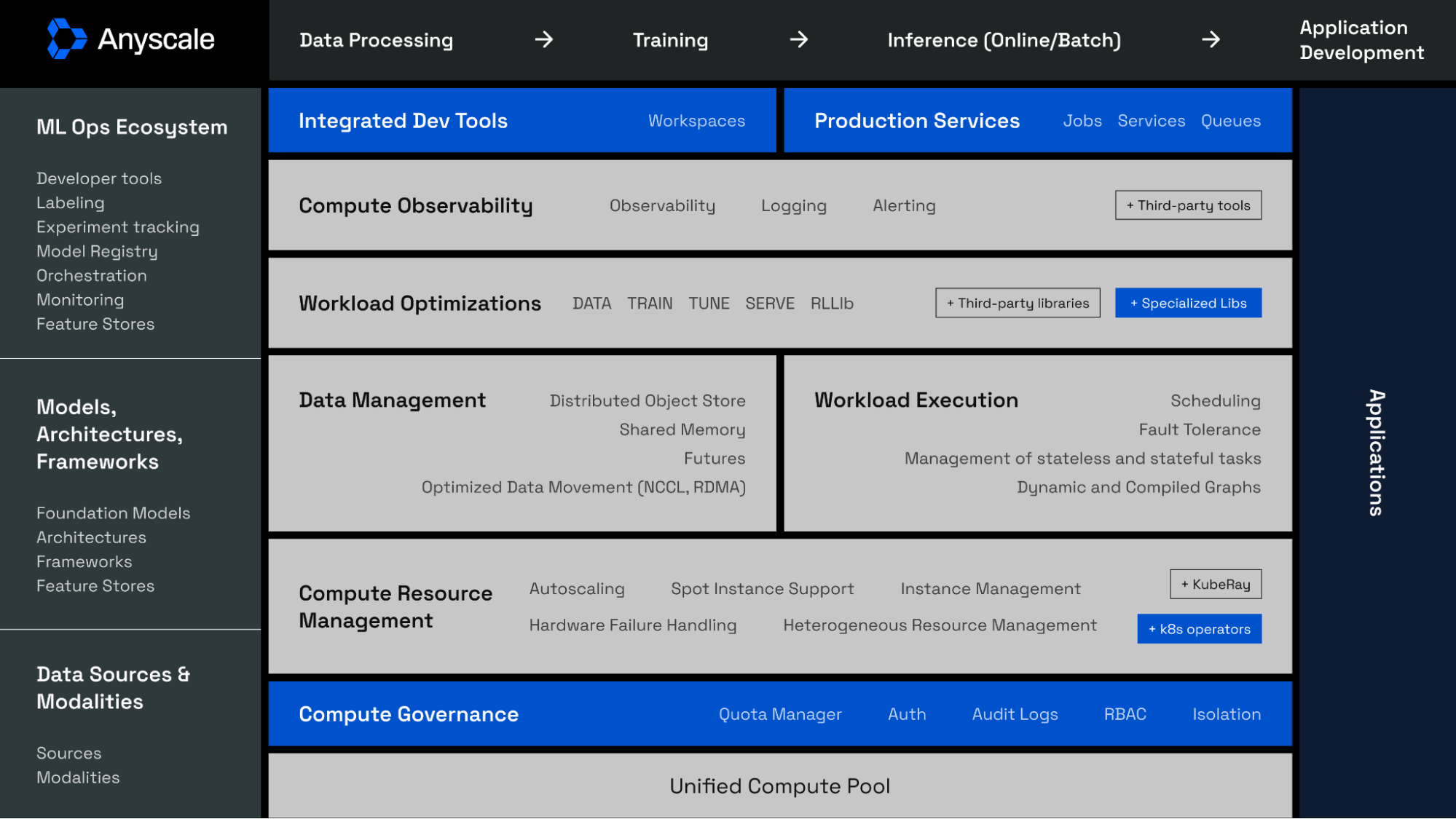

The Anyscale team, led by new CEO Keerti Melkote, walked through Anyscale, the Unified AI Platform built by the creators of Ray.

Anyscale builds on Ray, offering a truly optimized Ray Runtime that is up to 5.1x more performant than Ray Open Source on certain workloads, an entire governance layer that every enterprise needs to maintain control over AI sprawl and runaway spend, and an end-to-end suite of developer tools and production services to actually build AI apps.

1. Introducing: RayTurbo

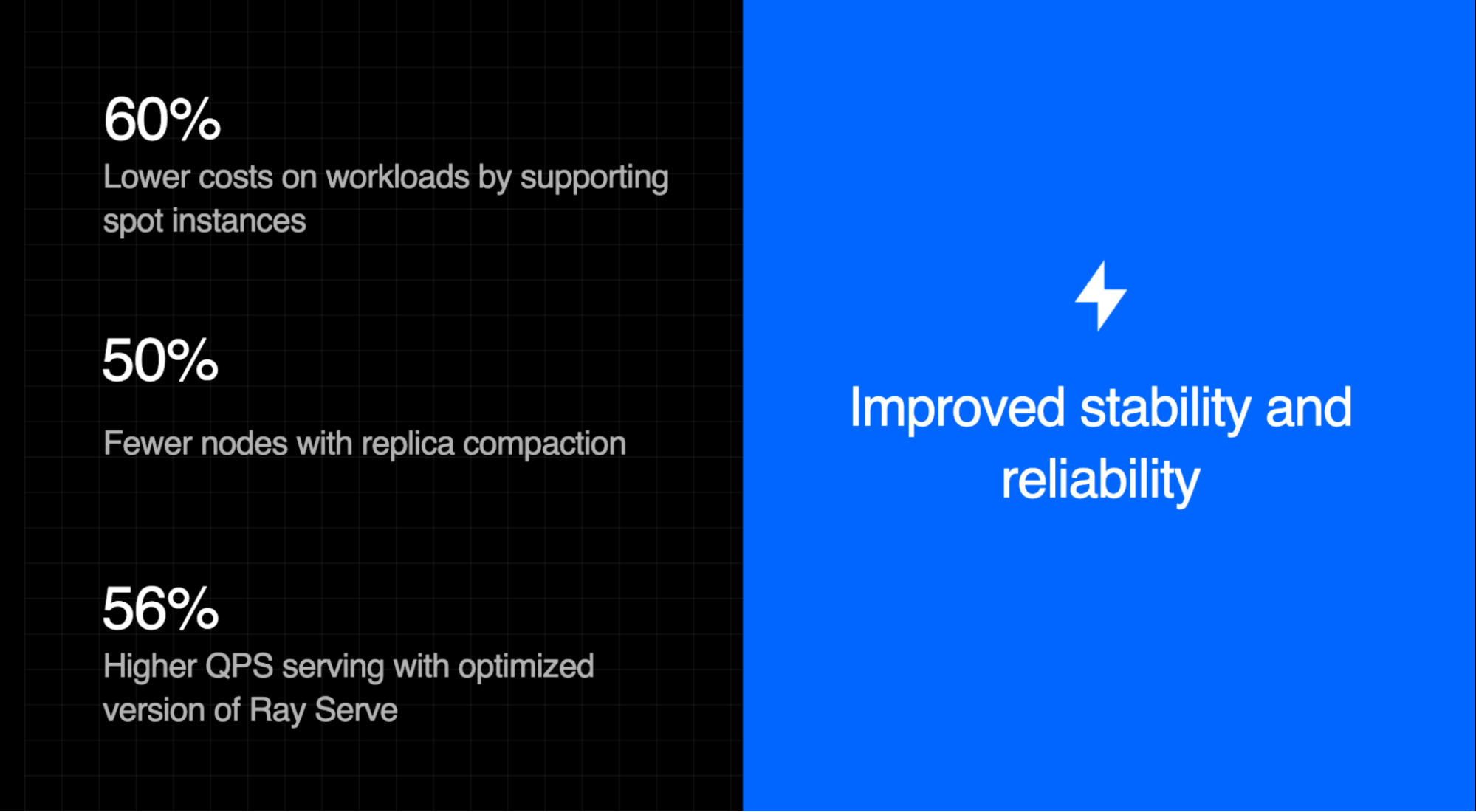

During Ray Summit 2024, we announced RayTurbo, an optimized runtime packed with 30+ performance and efficiency improvements.

These enhancements, available to Anyscale customers, make AI workloads more effective and efficient and include:

Ray Data enhancements like streaming metadata fetching, making it up to 4.5x faster compared to the open source version of Ray Data.

Ray Serve optimizations like Replica Compaction and high-QPS improvements, making Serve up to 56% faster than Ray Open Source.

Ray Train advancements including elastic training, reducing costs by up to 60% thanks to reliable spot instance support.

5.1x faster node autoscaling, saving massive compute costs

Up to 6x lower costs compared to AWS Bedrock for LLM batch inference

2. AI, Anywhere

At Ray Summit 2024, Anyscale unveiled a range of new features focused on helping customers unlock all of their compute estate.

With support for Kubernetes (K8s) and Machine Pools for hybrid cloud environments, Anyscale makes it possible to deploy AI workloads anywhere.

Anyscale’s Kubernetes Operator, launched with partners Oracle Cloud Infrastructure OKE, Google GKE, Azure AKS, and Amazon EKS, lets teams run Anyscale’s platform in their own Kubernetes clusters with their existing tooling.

3. Advanced Governance Suite

At the summit, Anyscale announced new tools to give platform leaders the fine-grained controls they need to manage AI sprawl and spend, including the Anyscale Governance Suite. The governance suite includes powerful observability tools to optimize utilization, resource quotas, and usage tracking across every compute workload.

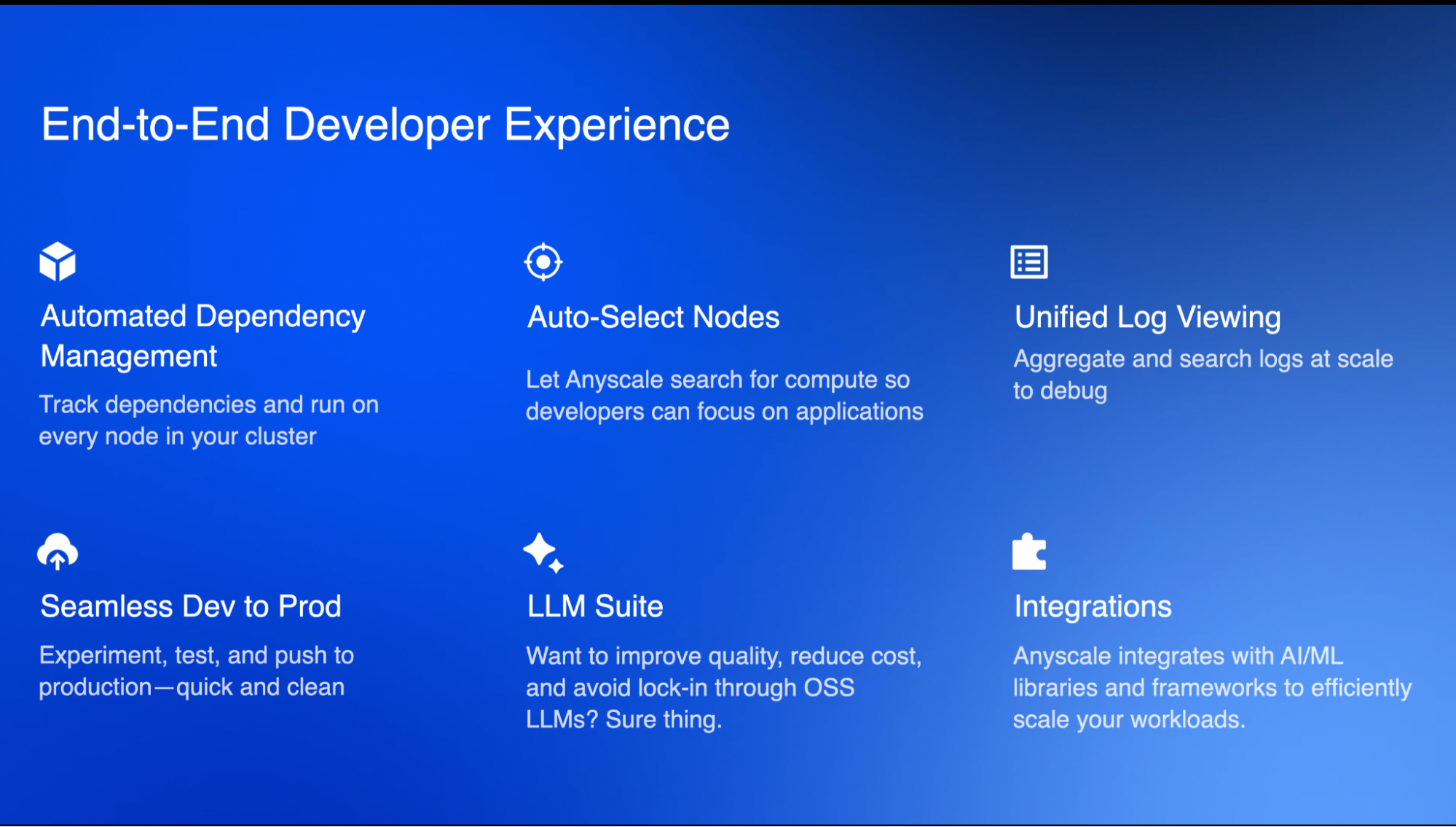

4. Delightful DevEx

At Ray Summit 2024, we introduced new features designed to simplify the developer experience: a reimagined UX with node autoselection, a unified log viewer, seamless dependency management, and an intuitive Pythonic framework that makes scaling AI workloads frictionless.

Anyscale also announced the LLM Suite, a comprehensive set of capabilities for GenAI applications covering embeddings, fine-tuning, and serving with built-in LLMOps.

5. Marketplaces

Lastly, Anyscale is now available on AWS Marketplace and GCP Marketplace. Getting started with Anyscale has never been easier.

The Future of AI with Ray and Anyscale

Ray Summit 2024 left us with a clear message: to overcome the AI Complexity Wall, organizations need a robust AI Compute Engine like Ray. Anyscale takes it a step further with additional enhancements in scalability, efficiency, performance, flexibility, and stability—all of which are set to reshape how companies build, deploy, and scale AI.

We loved connecting with Ray and Anyscale users at Ray Summit 2024, and can’t wait to see what you all build with the new features announced this week. We know that these tools will help AI transform industries and deliver real ROI.

If you missed the summit or want to dive deeper into the keynotes and breakout sessions, we’ll be posting recorded content on our YouTube channel soon. Be sure to subscribe, and don’t forget to join the Ray community on Slack to continue the conversation.