Wildlife Studios Serves In-game Offers 3X Faster at 1/10th the Cost with Ray Serve

Mobile gaming giant Wildlife Studios’ legacy system for serving revenue-generating in-game offers was not scaling to meet their latency and cost requirements. After switching to Ray Serve on Anyscale, their Dynamic Offers team is now able to serve offers three times faster. With Ray and Anyscale, they delivered a better gaming experience to their players and increased revenue by serving more relevant in-game offers faster, while reducing infrastructure costs through better CPU utilization.

Wildlife Studios, one of the largest mobile gaming companies in the world, needed a faster system for serving in-game offers to their more than 100 million monthly players. With over two billion downloads across their 60+ games, Wildlife Studios has been growing rapidly since 2011 while delivering an engaging, personalized, and immersive experience to each one of their players. Their massive catalog of highly successful free games includes the 2012 Apple Game of the Year “Bike Race,” as well as “Tennis Clash,” the number one game in more than 100 countries for 2019.

Highly relevant offers delivered at exactly the right time to drive revenue

Every one of Wildlife Studios’ games is free to download and play. Players can pay for in-game offers to procure specialty items, advance through the game faster, or create a more customized experience. These in-game offers are the primary source of revenue for most of Wildlife Studios’ games, and thus critical to their success. The Dynamic Offers team at Wildlife Studios is a specialized group that focuses on serving highly relevant offers to improve the gaming experience and maximize both the dollars spent per player and overall playtime.

Example of in-game offers in some of Wildlife Studios’ mobile games

The Dynamic Offers team cares deeply about two key metrics: offer relevance (efficacy) and serving latency. The more relevant and attractive an offer is to the user, the higher the chance of that offer generating revenue. But even the most relevant offer won’t be effective if it’s not delivered in a timely manner. For Wildlife Studios, it was critical to keep the P95 latency for serving offers within 2 seconds for two reasons: (1) many of Wildlife Studios’ top revenue-generating games have tight timeouts and fall back to non-personalized offers when a response arrives late, which leads to a suboptimal experience for players, and (2) slow-loading offers can result in a loading screen, prompting players to leave the game.
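To make the latency target concrete, here is a minimal sketch of how a P95 check against a 2-second budget could be computed from a batch of measured response times. It uses the nearest-rank percentile definition; the function names and the sample data are hypothetical and are not taken from Wildlife Studios’ system.

```python
from typing import Sequence


def p95_latency(latencies_ms: Sequence[float]) -> float:
    """Return the 95th-percentile latency using the nearest-rank method."""
    if not latencies_ms:
        raise ValueError("at least one latency sample is required")
    ordered = sorted(latencies_ms)
    # Nearest rank = ceil(0.95 * n), computed with integers to avoid float rounding.
    rank = (95 * len(ordered) + 99) // 100
    return ordered[rank - 1]


def within_budget(latencies_ms: Sequence[float], budget_ms: float = 2000.0) -> bool:
    """True when 95% of requests finished within the budget (2 s by default)."""
    return p95_latency(latencies_ms) <= budget_ms


if __name__ == "__main__":
    # Hypothetical samples: 19 fast responses and one slow outlier.
    samples = [100.0] * 19 + [5000.0]
    print(p95_latency(samples), within_budget(samples))
```

A P95 target tolerates a small share of slow outliers, which is why a single slow request in the hypothetical sample does not by itself break the budget.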

“Our existing serving stack was struggling to meet our latency targets as ensemble models with additional complexity were being developed,” said Lucas Machado, Engineering Manager on the Dynamic Offers team. Wildlife Studios’ existing serving system stacked their models inside a Flask server and scaled it horizontally using Kubernetes. The Kubernetes autoscaler was not able to scale quickly enough to handle the request peaks the platform sustained. As a result, the team had to build a custom scaling solution for Kubernetes that scaled the models in anticipation of the peaks. This solution was highly manual and did not handle unplanned peaks.

Immediate 3X serving speed up with Ray Serve

In early 2021, the Dynamic Offers team started looking for a better way to distribute the computation of their ensemble models for serving in-game offers. They were already familiar with the Ray ecosystem through their use of RLlib, the reinforcement learning library based on Ray. Just a few months prior, the Dynamic Offers team had moved the machine learning model for one of their most popular games to RLlib and had immediately seen improved offer conversion and a 3% bump in player projected lifetime spend.

Through their prior experience with Ray, Wildlife Studios was confident that it was a system built to scale, so they decided to run a proof of concept (PoC) with Ray Serve for serving in-game offers. Ray Serve is a framework-agnostic model-serving library based on Ray. “From our experience with RLlib, we knew that Ray would be able to handle our dynamic scaling requirements smoothly. And the idea of streamlining our entire training to serving pipeline on a single universal-compute platform was a bonus,” noted Felipe Antunes, senior staff data scientist at Wildlife Studios.

“The PoC exceeded our expectations. With Ray Serve, we were able to serve offers in 150 milliseconds with a P95 latency — which is three times faster than our legacy system. Ray Serve is allowing us to serve up the most relevant offers even more quickly — which means improved user experience, increased play times, and increased revenue,” said Leonnardo Rabello, software engineer on the Dynamic Offers team who ran the PoC.

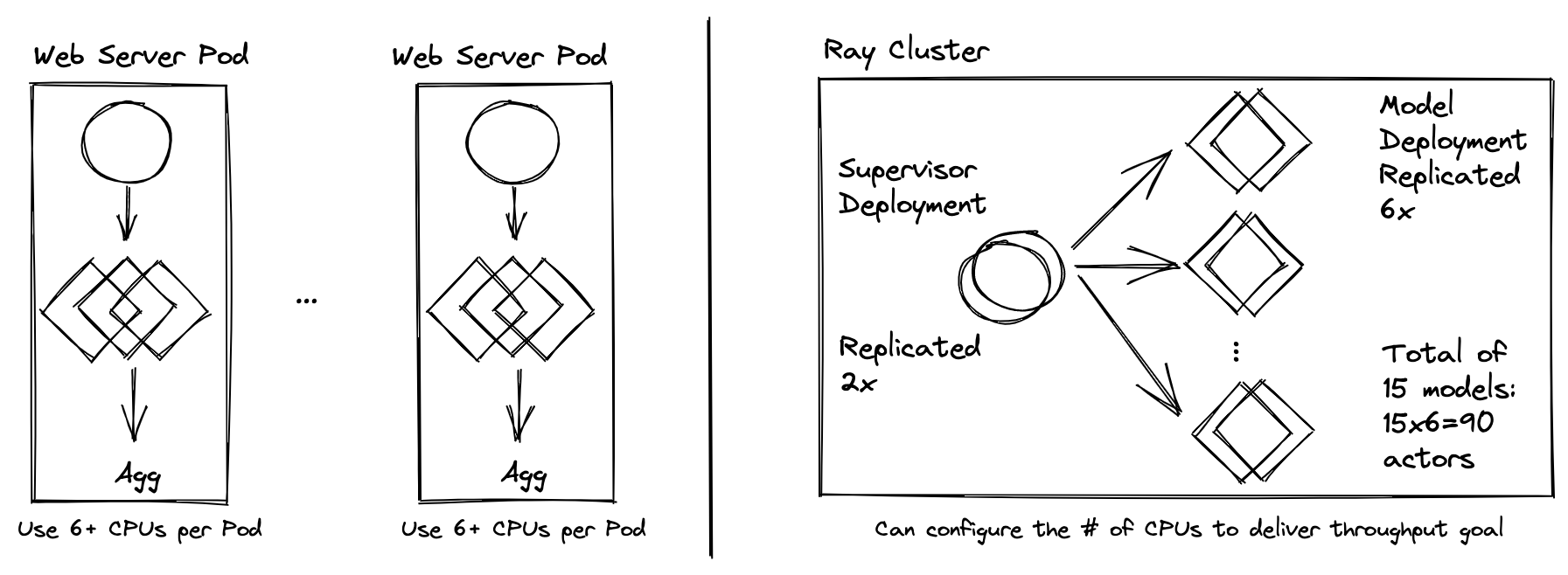

The Ray Serve architecture provided support for parallel inference on multiple models, decreasing latency and minimizing idle machines. Rather than running through each model of the ensemble serially, Ray Serve makes it easy to run the models concurrently across the cluster.
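The difference between serial and concurrent ensemble inference can be illustrated with a small sketch. This is not Ray Serve code and not Wildlife Studios’ implementation: it uses only Python’s standard `asyncio` library, and the model names, the placeholder scoring logic, and the 50 ms delay are all assumptions made for illustration.

```python
import asyncio
import time
from typing import Awaitable, Callable, List, Tuple

# Hypothetical ensemble members; the names are illustrative only.
MODEL_NAMES = ["price_model", "item_model", "timing_model"]


async def score_model(name: str, player_id: int, delay_s: float = 0.05) -> Tuple[str, float]:
    """Stand-in for one model call; the sleep mimics inference time."""
    await asyncio.sleep(delay_s)
    # Deterministic placeholder score, not a real prediction.
    return name, ((player_id * len(name)) % 100) / 100


async def serve_serially(player_id: int) -> List[Tuple[str, float]]:
    """Call each model one after another; latencies add up."""
    return [await score_model(name, player_id) for name in MODEL_NAMES]


async def serve_concurrently(player_id: int) -> List[Tuple[str, float]]:
    """Start all model calls at once; latency is roughly that of the slowest model."""
    calls = (score_model(name, player_id) for name in MODEL_NAMES)
    return list(await asyncio.gather(*calls))


def timed(
    serve: Callable[[int], Awaitable[List[Tuple[str, float]]]], player_id: int
) -> Tuple[List[Tuple[str, float]], float]:
    """Run one serving strategy and return its result and elapsed seconds."""
    start = time.perf_counter()
    result = asyncio.run(serve(player_id))
    return result, time.perf_counter() - start


if __name__ == "__main__":
    _, serial_s = timed(serve_serially, 42)
    _, concurrent_s = timed(serve_concurrently, 42)
    print(f"serial: {serial_s:.3f}s, concurrent: {concurrent_s:.3f}s")
```

Both strategies return the same scores in the same order; only the elapsed time differs. In a distributed setting the same idea applies across machines rather than within one event loop.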

$400,000 annual cost savings from more efficient infrastructure utilization

In addition to slashing latency, the new serving system will also drastically lower Wildlife Studios’ infrastructure costs. With Ray Serve, they can dynamically scale their serving infrastructure up and down to meet demand, which means they don’t have to be constantly provisioned for peak times. Ray Serve allowed them to create one deployment per model and scale each deployment independently to maximize CPU usage, further reducing infrastructure costs. Lucas noted, “With this PoC on just 1 game, we were able to slash our total CPU hours per year by nearly 90 percent. We are excited to migrate the in-game offers for additional three games in our portfolio to this new serving system, which will be more than $400,000 in infrastructure cost savings per year.”

Simplified operations and cluster management with Anyscale

Wildlife Studios chose to deploy Ray Serve through the Anyscale platform. The Anyscale platform makes it easy to deploy, configure, and maintain Ray clusters hosted in the cloud. Lucas said, “As a small team of data scientists with limited in-house bandwidth to handle infrastructure operations, the operational simplicity from the managed Anyscale platform was very attractive to us.” With Anyscale, the Wildlife team gets simple cluster management on Amazon EC2, faster cluster start up times, and an easy-to-use interface, in addition to an API, for managing and monitoring their Ray applications. “Beyond the operational benefits, direct access to Ray creators and maintainers at Anyscale was invaluable — we were able to quickly debug any issues and knew that our inference pipeline was always using the most optimal architecture.”

See how your team can deliver on key metrics more efficiently with Ray and Anyscale. Download the open source Ray Serve or sign up for Anyscale to see how easy it can be to develop and deploy distributed applications at any scale.