Reinforcement Learning with Ray: Training UAVs

Reinforcement Learning (RL) is reshaping industries by enabling systems to independently learn complex behaviors through trial-and-error. Its diverse applications range from robotics and autonomous vehicles to financial trading and industrial automation and beyond.

Despite its potential, RL poses significant challenges. Training RL models typically requires extensive computational resources due to the high volume of environment interactions needed. These resource demands can slow development, complicate scalability, and create efficiency bottlenecks, especially when managing large simulations and datasets.

Ray and its RL-specific library, RLlib, directly address these scalability and efficiency challenges. Ray simplifies distributed computing, while RLlib provides user-friendly APIs for scaling RL workloads across clusters. Together, they enable rapid, parallel training without extensive code changes, dramatically reducing experimentation time.

In this guest blog, Peter Haddad, Staff AI/ML Engineer at Lockheed Martin, walks through an example of reinforcement learning for training autonomous unmanned aerial vehicles (UAVs). His work exemplifies how Ray and RLlib empower practitioners to accelerate real-world innovation.

Read on for Peter’s walkthrough of RL for UAV navigation.

Training UAVs with Reinforcement Learning

Imagine an Unmanned Aerial Vehicle (UAV) navigating from waypoint-to-waypoint, making decisions based on observations, learning from outcomes, and honing its decision-making skills over time.

To achieve this goal, Ray and its library for reinforcement learning, RLlib, can train a single-agent policy to optimally control a UAV within the PyFlyt simulation environment.

Figure 1. UAV Flying Towards Waypoints. This example was contributed back to the RLlib project through a pull request. The code to reproduce this tutorial is in the official RLlib repository located here.

Let’s take a look at how Ray makes it possible.

Training Plan

The objective involved training an agent to navigate a UAV through an environment with obstacles, wind, and other variables that can impact the UAV's flight path. In these experiments, Ray is used to emulate real-world UAV missions, where waypoint-to-waypoint navigation within a specific timespan is a generalizable challenge.

Reinforcement Learning Considerations

Environment Parameters

Fully understanding the environment's constraints, reward function, and states is crucial for solving reinforcement learning problems. Therefore, researchers often publish a paper or include these details in their project documentation to provide a comprehensive understanding of the environment.

PyFlyt is an open-source library of environments built using the PyBullet physics engine. Out of the box, PyFlyt comes with configurable scenarios for quadcopters, fixed-wing aircraft, and rocket landings, offering a powerful toolkit for RL and control system use cases.

For this training program, the PyFlyt QuadX-Waypoints-V1 environment is a good fit. It comes with a variety of configuration options that let users modify the task complexity. For example, users can configure the number of targets, the time limit, and how close the UAV must get to each waypoint for it to count as reached. The ultimate goal was to train the agent to reach four waypoints within a ten-second time limit.
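As a rough sketch, those options might be set through Gymnasium as shown below. The keyword names (`num_targets`, `max_duration_seconds`, `goal_reach_distance`) and the lowercase `-v1` ID are assumptions based on PyFlyt's documentation and may differ between PyFlyt versions. The reach distance of 0.2 is a placeholder, not a value taken from this post. Imports are deferred into the function so the snippet loads even without PyFlyt installed.

```python
def make_waypoint_env(num_targets=4, time_limit_s=10.0, reach_distance_m=0.2):
    """Create the QuadX waypoint task: four targets, ten-second limit by default."""
    import gymnasium as gym
    import PyFlyt.gym_envs  # noqa: F401  (importing registers the PyFlyt environments)

    return gym.make(
        "PyFlyt/QuadX-Waypoints-v1",            # assumed ID; check your PyFlyt version
        num_targets=num_targets,                # number of waypoints to reach
        max_duration_seconds=time_limit_s,      # episode time limit
        goal_reach_distance=reach_distance_m,   # placeholder reach threshold
    )
```

Raising `num_targets` or shrinking the time limit makes the task harder, which is one way to vary task complexity.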

Exploitation vs. Exploration

In Ray RLlib, exploration and exploitation are concepts that represent the trade-off between an agent's desire to explore new actions and its tendency to choose the actions it already knows are good. Here are the differences between exploration and exploitation:

Exploration refers to the agent's efforts to gather information about the environment by trying new actions and observing their outcomes. This helps the agent to discover new, potentially better, options and to expand its understanding of the environment's dynamics.

Exploitation refers to the agent's tendency to select actions that have previously yielded high rewards. By choosing actions with high rewards, the agent can maximize its immediate performance.
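One textbook way to make this trade-off concrete is an epsilon-greedy rule: with probability epsilon the agent explores a random action, otherwise it exploits the best-known one. The sketch below is a generic illustration with hypothetical names and values. It is not how the agent in this post explores, since PPO samples actions from a stochastic policy.

```python
import random


def epsilon_greedy(value_estimates, epsilon, rng):
    """Pick an action index: explore with probability epsilon, else exploit."""
    if rng.random() < epsilon:
        return rng.randrange(len(value_estimates))  # explore: uniform random action
    # exploit: the action with the highest estimated value
    return max(range(len(value_estimates)), key=lambda a: value_estimates[a])


rng = random.Random(0)
estimates = [0.1, 0.9, 0.4]  # hypothetical value estimates for three actions

# epsilon=0.0 never explores, so the best-known action (index 1) is always chosen.
greedy_choice = epsilon_greedy(estimates, epsilon=0.0, rng=rng)

# epsilon=1.0 always explores, so every action gets tried over enough draws.
explore_choices = {epsilon_greedy(estimates, epsilon=1.0, rng=rng) for _ in range(200)}
```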

Reward Shaping

The reward function takes an action and state as inputs, and calculates a scalar feedback signal, a single numerical value, known as the reward. This reward shapes the agent's behavior, encouraging exploration and exploitation to solve complex problems. In essence, the reward function acts like a scoring system, providing feedback that guides the agent towards the desired goals throughout training.

A key aspect of the reward function is the "done state," which indicates the end of an episode. An episode is a sequence of interactions between an agent and its environment: at each step the agent takes an action, the environment moves from the current state to a new state, and the agent receives feedback in the form of a reward or penalty. The "done state" is "true" only when the agent crashes, flies out of bounds, or successfully completes the task, and each outcome yields a different reward. By providing feedback on the agent's actions during an episode, the reward function helps the agent make better decisions over time and learn more effectively.
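The episode structure described above can be sketched as a short rollout loop. `CountdownEnv` is a hypothetical stand-in that only mimics Gymnasium's `reset`/`step` signatures, so the example runs without a simulator. Its reward values are illustrative. Gymnasium splits the "done state" into `terminated` (crash or success) and `truncated` (a limit such as time was hit).

```python
class CountdownEnv:
    """Hypothetical stand-in environment with Gymnasium-style reset/step methods."""

    def __init__(self, steps_until_crash=3):
        self.steps_until_crash = steps_until_crash
        self.steps_taken = 0

    def reset(self, seed=None):
        self.steps_taken = 0
        return 0, {}  # (observation, info)

    def step(self, action):
        self.steps_taken += 1
        terminated = self.steps_taken >= self.steps_until_crash  # the "done state"
        reward = -100.0 if terminated else 1.0  # illustrative values only
        truncated = False  # would be True if a time limit cut the episode short
        return self.steps_taken, reward, terminated, truncated, {}


def run_episode(env, policy):
    """Roll out one episode and return the summed reward."""
    observation, _info = env.reset()
    total_reward, done = 0.0, False
    while not done:
        action = policy(observation)
        observation, reward, terminated, truncated, _info = env.step(action)
        total_reward += reward
        done = terminated or truncated
    return total_reward


# Two ordinary steps (+1.0 each) followed by a crash (-100.0).
episode_return = run_episode(CountdownEnv(steps_until_crash=3), policy=lambda obs: 0)
```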

In summary, a well-designed reward function is crucial for the learning process, as it shapes the agent's behavior, encourages exploration and exploitation, and provides feedback that guides the agent towards the desired goals after each completed episode, ensuring the agent's continuous improvement.

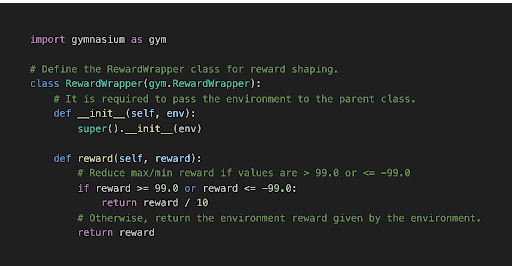

Figure 2. Code Snippet From quadx_waypoints.py Showcasing Reward Shaping

By default, in the PyFlyt QuadX-Waypoints-V1 environment, the agent receives a reward of 100 for reaching a waypoint and -100 for a crash (i.e., a done state). With this original reward function the agent eventually learns to fly in the environment; however, it is unable to recover quickly from large punishments at the beginning of training. To address this issue, the reward function was refined using a Gymnasium wrapper class that reduces the min/max rewards by a factor of ten (see Figure 2); this is known as “reward shaping”. The smaller crash penalty encourages more aggressive and continuous exploration.
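A minimal sketch of that kind of wrapper follows. It is not a copy of the code in Figure 2. The threshold of 99.0 is an assumption: it treats any reward of large magnitude as a waypoint or crash event and leaves the smaller per-step rewards untouched. `gymnasium.RewardWrapper` is the standard base class for this. The import is deferred so that the pure scaling function has no dependencies.

```python
def shape_reward(reward, threshold=99.0, factor=10.0):
    """Divide large-magnitude rewards (waypoint reached or crash) by `factor`."""
    if abs(reward) >= threshold:
        return reward / factor
    return reward


def wrap_with_shaped_reward(env):
    """Apply `shape_reward` to every reward emitted by an existing Gymnasium env."""
    import gymnasium as gym  # deferred: only needed when wrapping a real env

    class ScaledRewardWrapper(gym.RewardWrapper):
        def reward(self, reward):
            return shape_reward(float(reward))

    return ScaledRewardWrapper(env)
```

With these defaults, `shape_reward(100.0)` returns `10.0`, `shape_reward(-100.0)` returns `-10.0`, and a small step reward such as `0.25` passes through unchanged.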

Choosing the Right Algorithm: Proximal Policy Optimization

Finding the right balance between exploration and exploitation is crucial for an RL agent's success. If the agent explores too much, it may take a long time to learn the optimal policy. Conversely, if the agent exploits too much, it may miss out on better options that it has not yet discovered.

Proximal Policy Optimization (PPO) is a robust and efficient on-policy RL algorithm that balances exploration and exploitation. In RL, on-policy means that an agent learns the optimal policy while interacting with the environment using the current policy being learned. PPO's sampling method strikes an appropriate balance between exploration and exploitation, making it effective for various RL tasks.

Additionally, PPO uses an actor-critic approach, where the actor selects actions based on the current state of the environment, and the critic evaluates the quality of the actions taken by the actor. The feedback provided by the critic helps the actor improve its policy and make better decisions over time.
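To make the actor-critic interaction more concrete, the sketch below shows two standard ingredients of PPO in plain Python. The first is a critic-based advantage estimate. The second is the clipped surrogate objective that limits how far a single update can move the policy. The clip value of 0.2 and the one-step advantage are simplifying assumptions. Implementations commonly use a multi-step estimate and operate on batches of tensors.

```python
def one_step_advantage(reward, value, next_value, gamma=0.99):
    """Critic feedback: how much better the outcome was than the critic expected."""
    return reward + gamma * next_value - value


def clipped_surrogate(ratio, advantage, clip_eps=0.2):
    """Per-sample PPO objective: min(r * A, clip(r, 1 - eps, 1 + eps) * A).

    `ratio` is new_policy_prob / old_policy_prob for the action that was taken.
    """
    clipped_ratio = max(1.0 - clip_eps, min(ratio, 1.0 + clip_eps))
    return min(ratio * advantage, clipped_ratio * advantage)


# A good action (positive advantage) whose probability already rose by 50%:
# the objective is capped at 1.2 * 2.0, removing the incentive to push further.
capped = clipped_surrogate(ratio=1.5, advantage=2.0)
```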

While other algorithms may outperform PPO in specific environments, its effectiveness and generalizability make it a solid choice for many tasks.

Training the Agent

The training loop integrates three essential libraries to solve the RL problem:

RLlib is the framework we used to build and train the agent.

See the quadx_waypoints.py example that is used in this post.

PyFlyt provides the UAV QuadX-Waypoints environment.

Gymnasium acts as the interface between the RL algorithm and environment.
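As a hedged sketch of how the three libraries fit together: Gymnasium creates the PyFlyt environment, an environment-creator function is registered with Ray, and a PPO config points at the registered name. The function name, the registered name `quadx_waypoints`, and the use of PyFlyt's `FlattenWaypointEnv` with `context_length=1` are assumptions for illustration. Refer to quadx_waypoints.py for the actual setup. Imports are deferred so the snippet loads without Ray or PyFlyt installed.

```python
def build_ppo_config(env_name="quadx_waypoints"):
    """Register the PyFlyt task under `env_name` and point a PPO config at it."""
    from ray.rllib.algorithms.ppo import PPOConfig
    from ray.tune.registry import register_env

    def env_creator(env_config):
        import gymnasium as gym
        import PyFlyt.gym_envs  # noqa: F401  (importing registers the PyFlyt environments)
        from PyFlyt.gym_envs import FlattenWaypointEnv

        env = gym.make("PyFlyt/QuadX-Waypoints-v1")  # assumed ID; check your version
        # Flatten the structured waypoint observation into a single vector.
        return FlattenWaypointEnv(env, context_length=1)

    register_env(env_name, env_creator)
    return PPOConfig().environment(env=env_name)
```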

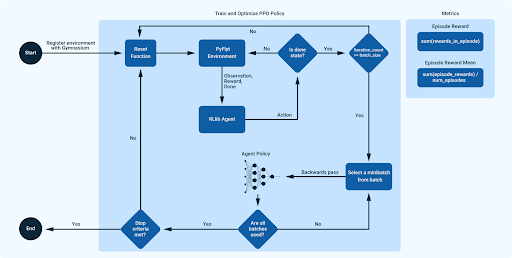

Figure 3. Train and Optimize RLlib PPO Policy

This diagram illustrates how the agent is trained to optimize the policy. Training is complete once the stopping criteria are met. These stopping criteria may be a certain number of steps or a performance metric threshold.

In Figure 3, the first step is to register the environment with Gymnasium and initialize it through its reset function. Next, the agent interacts with the environment and synchronously stores trajectories via the policy, while the environment exchanges observations, actions, and states with the agent. This loop continues until the iteration count is greater than or equal to the batch size.

Next, policy training occurs. A subset of trajectories (minibatch) is sampled from the collected batch and used to update the policy network using the PPO algorithm, with the goal of maximizing the reward. This training is finished after all trajectories have been used. If the stop criteria are met, then training is complete. If not, then the environment is reset, and metrics are used to evaluate the agent’s performance over time.
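The collect-then-update cycle described above can be sketched in plain Python. This is only a schematic with stand-in callables and hypothetical sizes. RLlib performs these phases internally, and a real update would run a PPO gradient step on each minibatch.

```python
import random


def iterate_minibatches(batch, minibatch_size, rng):
    """Yield shuffled, non-overlapping slices that together cover the whole batch."""
    indices = list(range(len(batch)))
    rng.shuffle(indices)
    for start in range(0, len(indices), minibatch_size):
        yield [batch[i] for i in indices[start:start + minibatch_size]]


def train(collect_step, update_policy, batch_size, minibatch_size, max_steps, seed=0):
    """Alternate between collecting a batch and updating on its minibatches."""
    rng = random.Random(seed)
    total_steps, num_updates = 0, 0
    while total_steps < max_steps:  # stopping criterion: a step budget
        batch = [collect_step() for _ in range(batch_size)]  # rollout phase
        total_steps += len(batch)
        for minibatch in iterate_minibatches(batch, minibatch_size, rng):
            update_policy(minibatch)  # policy-update phase
            num_updates += 1
    return total_steps, num_updates


# Stand-ins: a real setup would step the environment and run a PPO update.
steps, updates = train(
    collect_step=lambda: {"obs": 0, "action": 0, "reward": 0.0},
    update_policy=lambda minibatch: None,
    batch_size=8,
    minibatch_size=4,
    max_steps=20,
)
```

Here three batches of eight steps are collected before the 20-step budget is exceeded, and each batch is consumed as two minibatches.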

It’s important to note that using PPO with GPUs can be slower than using only CPUs. This is because copying the data to GPU memory for CUDA can take longer than processing it directly on the CPU in main memory. With RLlib, you have the flexibility to choose which parts of the RL loop leverage GPUs (e.g., retraining policies, environment exploration).

Model Training

Developer Workflow

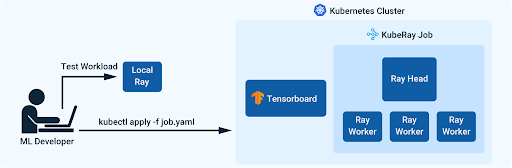

Figure 4. Training QuadX-Waypoints-V1 with KubeRay

Libraries like Ray make it easy to test your workload in any development environment because you can instantiate a single-node Ray Cluster for fast, iterative, local testing. For the UAV waypoint-to-waypoint use case, the training script was first tested locally (to verify a successful training step), then run on a Ray Cluster orchestrated via a KubeRay job for distributed training. Training on a cluster can be done by creating a RayJob YAML configuration file and applying it through the Kubernetes Command Line Interface (kubectl).

The KubeRay job creates its own Ray Cluster with the declared number of Ray Workers. Because each job has its own cluster, reliability and stability are improved compared to Ray Clusters running multiple workloads. This improvement is especially noticeable for large, long-running jobs. The KubeRay Job QuickStart Guide offers guidance for using this feature.

Checkpointing model weights during training ensures that training can be easily resumed in case of interruptions. The Ray ecosystem also makes it easy to track training results in an experiment tracker, such as AimStack or TensorBoard, enabling reproducibility and real-time insights into the training process.

The RLlib PyFlyt example leverages Ray Tune with a single trial using most of the default hyperparameters for PPO.

Training Results

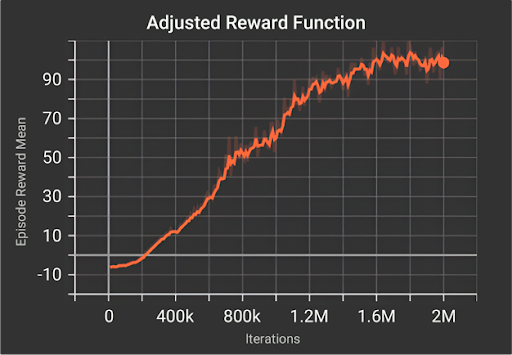

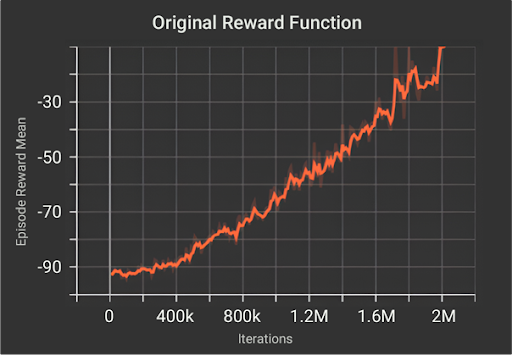

Figure 5. Training Results

In Figure 5, two training workloads were submitted using KubeRay and Ray Jobs. The first uses no reward shaping and keeps the defaults of -100 for the minimum reward and 100 for the maximum reward. The second reduces these minimum and maximum rewards by a factor of ten. Both training jobs use a stopping criterion of two million iterations, and the “episode reward mean” is used to evaluate the agent's performance.

When training with the adjusted rewards, the “episode reward mean” grows rapidly between roughly 350k and 800k iterations, which indicates that the algorithm is starting to converge. The metric plateaus after about 1.6M iterations, and the agent performs well during evaluation.
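For readers unfamiliar with the metric, the “episode reward mean” is the average total reward per episode over a window of recent episodes. The sketch below shows one way to compute such a running mean. The class name and window sizes are hypothetical, and the smoothing window RLlib actually uses is configurable.

```python
from collections import deque


class EpisodeRewardMean:
    """Running mean of total episode reward over the most recent `window` episodes."""

    def __init__(self, window=100):
        self.returns = deque(maxlen=window)  # oldest entries drop off automatically

    def add(self, episode_return):
        self.returns.append(episode_return)

    def value(self):
        if not self.returns:
            return float("nan")
        return sum(self.returns) / len(self.returns)


tracker = EpisodeRewardMean(window=3)
for episode_return in [-10.0, 0.0, 10.0, 20.0]:
    tracker.add(episode_return)

# Only the last three episodes (0.0, 10.0, 20.0) remain in the window.
smoothed = tracker.value()
```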

Figure 6. PyFlyt QuadX Trained with Reward Shaping

As shown in Figure 6, the agent with the adjusted reward function successfully reached all four waypoints. For this use case, the results illustrate the significance of reward shaping for solving the task.

Future Work

In future iterations, these experiments will apply Curriculum Learning, a training strategy that progressively increases task complexity, similar to how humans learn. The objective is to create a resilient agent capable of excelling in diverse conditions. To build upon the agent's abilities, multi-agent reinforcement learning will be combined with Curriculum Learning, enabling scenarios like a UAV skillfully evading an adversarial UAV or a swarm of UAVs collaborating on a mutual goal.

Conclusion

To facilitate your exploration of the discussed concepts, Peter has made the quadx_waypoints.py example available. Additionally, Peter has enhanced the PyFlyt project with headless rendering capabilities for the QuadX-Waypoints environment, making it even easier to visualize your UAV's results.

You can run the example directly on Anyscale in minutes: simply create an account, clone the repository, and start training!