Your Data and AI Frameworks Evolved – What About Your Distributed Compute Framework?

Summary

Unstructured data such as text, logs, images, and video is exploding in both scale and complexity, but most data and AI infrastructure still centers on structured data and SQL-style workloads. At the same time, Python-based AI models and frameworks are growing in number and size, outpacing what traditional, non-Python distributed engines can handle. The convergence of these trends is creating compute bottlenecks that prevent organizations from putting more of their AI ambitions into production. At Anyscale we continue to work on solving these challenges with Ray.

Introduction

For years, companies have done their best to squeeze value from structured data – tracking new customer signups, running attribution models, and generating forecasts to guide growth. This was made possible by batch pipelines, SQL, and DataFrame operations that ingested, transformed, and aggregated terabytes of relational data.

Today, unstructured data represents the real prize. For many organizations, it's already outpacing structured data in volume by a factor of 10 or more. Modalities like images, audio, reviews, and chat logs – to name just a few – make up a large body of rich yet untapped assets that can enhance user experiences and power automation. An e-commerce site might blend user attributes with product images to improve recommendations. A bank might combine transaction history with foundation models to catch fraud in real time.

This shift to multimodal (structured + semi-structured + unstructured) pipelines for AI, together with the move to larger models (ML → DL → GenAI) that makes processing more GPU-centric, is putting new pressure on organizations’ AI infrastructure. The tools that powered the BI and traditional ML revolution – built for counts and joins on tables – aren’t enough for a world of embeddings, transformers, and video inputs.

To understand where AI is today and why processing bottlenecks are starting to become the norm across every organization with this new wave of multimodal AI, we have to go through a history of large scale processing in business environments.

2013: DataFrames for Structured Data Computing

The DataFrame abstraction first appeared in R in the early 1990s, and pandas later popularized it in Python, but it was ultimately Apache Spark that made it scalable and performant for the era of big data. Spark Scala DataFrames gave engineering teams a familiar tabular interface while enabling computation at petabyte scale across clusters.

What made Spark DataFrames so effective and consequently so popular?

Tabular format alignment: Ideal for structured data (SQL tables, CSVs), which surged in the 2010s with the rise of user-generated content, IoT logs, and e-commerce transactions.

Schema awareness: For data engineers, schemas tracked types, uniqueness, and null handling – essential for keeping pipelines from breaking at scale.

Database interoperability: DataFrames integrated smoothly with SQL and downstream systems like dashboards and applications.

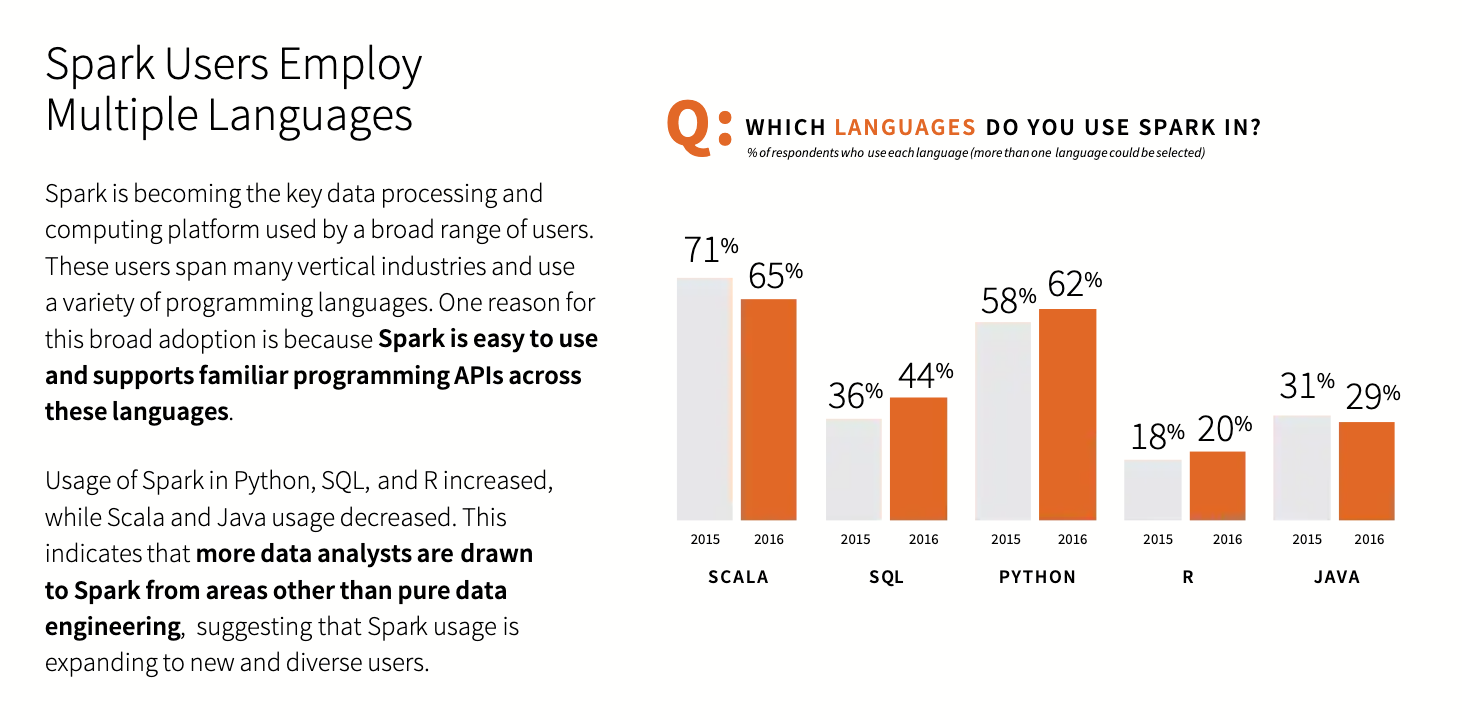

Figure 1: Python overtakes Scala and Java APIs in Spark. Source: Apache® Spark™ Survey 2016 Report

Spark runs natively in Scala, but its Python API, PySpark, picked up traction among data scientists and data engineers, who by this point had converged on Python as the programming language for AI and found this API more familiar and easier to use.

PySpark removed the need to write Scala for DataFrame operations (e.g. filter, join), which are still distributed and executed on the Java Virtual Machine (JVM), but native Python code cannot run there. The workaround is to serialize the data (usually with Apache Arrow or Pickle) from the JVM to Python, execute the Python function in a Python worker bound to a single node, and then send the results back. This adds serialization overhead and significantly slows performance compared to native Spark functions. And since Spark is fundamentally a CPU-centric, JVM-based engine, it offers very limited support for GPU scheduling or heterogeneous compute, both of which are vital for unstructured data processing and AI workloads.
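The round trip described above can be imitated with nothing but the Python standard library. This is only an illustrative sketch, not how PySpark is implemented: `add_tax`, `run_in_process`, and `run_across_boundary` are hypothetical names, `pickle` stands in for whichever serialization format sits between the engine and the Python worker, and the batch size is an arbitrary assumption.

```python
import pickle
import time


def add_tax(price):
    """Hypothetical user-defined Python function applied to each value."""
    return round(price * 1.2, 2)


def run_in_process(rows, func):
    """Apply func directly, with no serialization boundary."""
    return [func(r) for r in rows]


def run_across_boundary(rows, func, batch_size=1000):
    """Imitate an engine -> Python worker -> engine hand-off.

    Each batch is pickled, unpickled, processed, pickled again, and
    unpickled again, which mirrors the extra work a cross-runtime call adds.
    Returns the results and the total number of bytes serialized.
    """
    out = []
    bytes_moved = 0
    for start in range(0, len(rows), batch_size):
        batch = rows[start:start + batch_size]
        payload = pickle.dumps(batch)            # engine side: serialize input
        received = pickle.loads(payload)         # worker side: deserialize input
        results = [func(r) for r in received]    # worker side: run the function
        reply = pickle.dumps(results)            # worker side: serialize output
        out.extend(pickle.loads(reply))          # engine side: deserialize output
        bytes_moved += len(payload) + len(reply)
    return out, bytes_moved


rows = [float(i) for i in range(100_000)]

t0 = time.perf_counter()
direct = run_in_process(rows, add_tax)
t1 = time.perf_counter()
round_trip, bytes_moved = run_across_boundary(rows, add_tax)
t2 = time.perf_counter()

print(f"in-process:      {t1 - t0:.4f}s")
print(f"across boundary: {t2 - t1:.4f}s, {bytes_moved} bytes serialized")
```

Both paths produce the same values; the second one simply pays for four extra serialization steps per batch. Real systems reduce this cost with columnar formats and larger batches, but the hand-off itself remains.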

2016: PyTorch for Unstructured Data and GPUs

While Scala led Spark’s rise, PySpark brought its power to Python for structured data transformations just as Python itself was becoming the dominant language among data scientists and ML engineers. That same year, Meta (then Facebook) released PyTorch, a framework that would redefine how teams worked with unstructured data. With dynamic computation graphs, Python-native APIs, and native GPU acceleration, it offered flexibility and performance for building deep learning models – from CNNs for image recognition to RNNs for NLP, and more.

But structured data wasn’t going anywhere – it continued to grow in volume and relevance for both BI and predictive ML tasks. To take hold of all this untapped data, the modern data stack took shape around cloud data warehouses, and Spark saw renewed adoption through services like Databricks and Amazon EMR.

For unstructured data, however, PyTorch was not the answer to every problem. As new AI frameworks surfaced to fill other workload gaps (e.g. vLLM for LLM inference) and compute services for structured data matured, an inefficient pattern emerged in which processing was split into two separate stages: structured data was processed with SQL or DataFrames on CPUs, while unstructured data was handled on separate compute pools with tools like PyTorch on GPUs. This split left GPU-based training and inference jobs waiting on CPU-bound preprocessing – a workflow that underutilized accelerated hardware and fragmented pipelines.

2017: Attention is All You Need… and Ray

2017 was a pivotal year. The Transformer architecture, introduced by Vaswani et al. in “Attention Is All You Need,” replaced RNNs with self-attention. This new architecture revolutionized natural language processing (NLP) tasks such as sentiment analysis and translation, and opened the door to large language models (LLMs). Open-source PyTorch implementations followed quickly, cementing PyTorch’s role in the open-source AI stack not only for deep learning but also for the unexpected surge of interest in generative AI.

Meanwhile, another breakthrough was taking shape at Berkeley. A team of researchers, frustrated by existing distributed compute frameworks, set out to solve three major pain points in scaling AI:

Python was dominant, but there was no native distributed compute framework for the language. Meanwhile, the AI frameworks were all single-purpose engines (e.g. focused on deep learning training). Building end-to-end workflows at multi-node scale was nearly impossible.

Data and model sizes were exploding, but existing systems couldn’t process them efficiently, especially unstructured data alongside structured inputs.

GPUs were essential, but orchestration was fragmented. CPU and GPU workloads often lived on separate systems, causing wasteful transfers, frequent serialization, and slow pipelines.

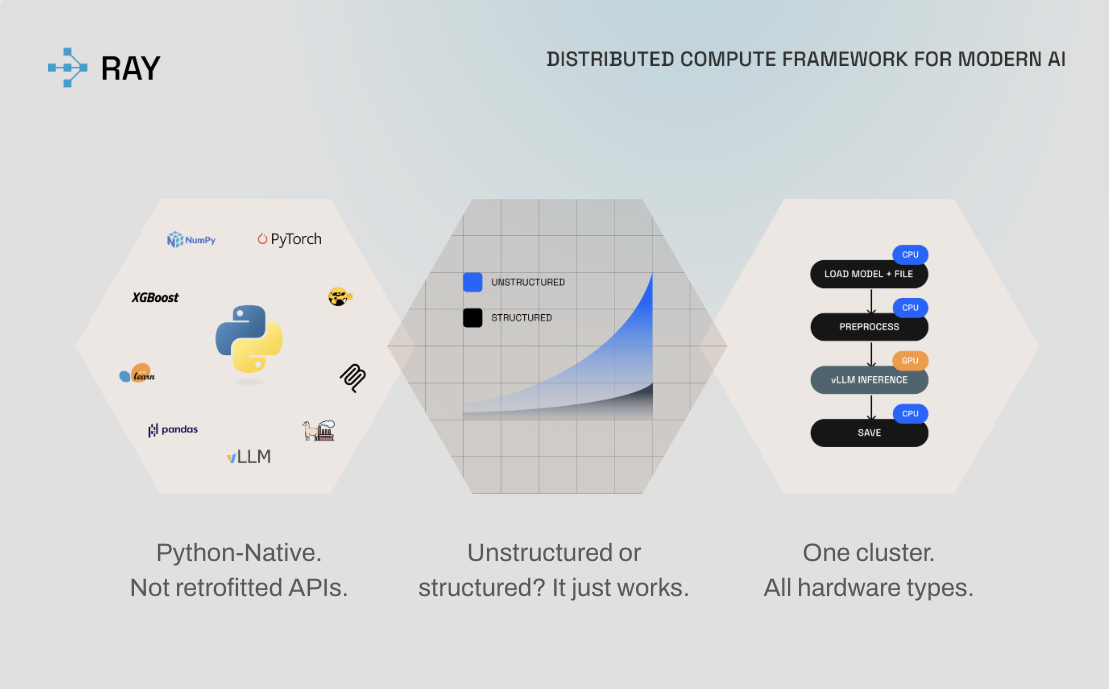

Their solution? Ray, an AI-native distributed compute framework, designed from the ground up for Python, multimodal data, and heterogeneous compute. Initially, like Transformers, Ray attracted mostly cutting-edge teams working on AI problems at unimaginable scale.

Figure 2: Ray is released in 2017 with three core design principles

2022: ChatGPT Got Everyone’s Attention

In early 2022, OpenAI used Ray to train GPT-3.5 (where GPT stands for Generative Pre-trained Transformer), one of the most complex AI models ever built and the first model released in ChatGPT – a chatbot that quickly went viral after its release. GPT-3.5 had hundreds of billions of parameters, which required massive scale and efficient orchestration. Ray delivered.

And just a year earlier, Ant Group had published their use of Ray to power a production model-serving system deployed on over 6,000 CPU cores. That system supported use cases like marketing recommendations and order allocation and ran at scale in the real world, delivering value to hundreds of millions of users.

With these workloads, Ray had proven it could meet the demands of GPU-heavy generative AI, CPU-centric inference systems, and – as is often the case – hybrid GPU/CPU workloads. Other leaders followed:

Uber rearchitected its Michelangelo ML platform to incorporate Ray

Spotify expanded production support for ML beyond TensorFlow

Pinterest boosted developer velocity and pushed GPU utilization over 90%

Ray’s architecture for distributed Python, multimodal data, and heterogeneous compute wasn’t just novel, it was a must-have. Finally, ML platform and engineering teams had a common framework – just in time to support growing business demand for LLMs and embeddings in generative use cases. Ray enabled these teams to embrace generative AI while also modernizing their recommendation systems and other machine learning pipelines that relied heavily on XGBoost or PyTorch.

2025: Turning Ideas into Multimodal AI in Production

It’s hard to imagine a single framework solving every AI challenge, and the truth is, none does. Ray does not replace PyTorch, XGBoost, DeepSeek, vLLM, or yet-to-be-released Python AI frameworks; it complements them by offering a practical way for developers to distribute and orchestrate all of these frameworks at scale.

Ray simplifies the hard parts of distributed computing for multimodal AI: multi-node execution, task scheduling across heterogeneous clusters, data movement, process isolation, autoscaling, and failure recovery. And it does so while still giving teams fine-grained control over how workloads run across CPUs and GPUs – or fractions of those. This flexibility helps ML practitioners streamline training, fine-tuning, and post-training, while giving platform teams a way to build cost-efficient infrastructure that supports multiple production jobs – including offline batch inference and online serving – without maintaining separate processing infrastructure.

AI is changing fast, and one thing’s clear: the tools that got us through the age of SQL, single-node AI, and batch ETL won’t be enough for the multimodal AI era. That’s why the Ray open-source community is moving quickly to keep streamlining distributed processing, scheduling, and orchestration of AI workloads across heterogeneous clusters. And at Anyscale, we’re working hard to democratize access to the power of Ray’s distributed compute engine so teams can get value out of it in a matter of days. We do all of this because, regardless of industry or company size, every AI team is being asked for a variation of the same thing: to onboard more complex and more advanced AI use cases.

Follow us on LinkedIn to stay close to what’s next

Keep an eye on new case studies to see the stories of teams using Ray and Anyscale to lead the way in building the next wave of production AI, from recommendation systems to agentic workflows, and real-time NLP to multimodal data processing pipelines.