Redis in Ray: Past and future

Redis is a well-known open source in-memory store commonly used for caching and pub/sub messaging. Ray used Redis as its metadata store and pub/sub broker through version 1.10; starting in Ray 1.11, Redis is no longer used by default. In this blog post, we will cover the history of Redis in Ray, and how the changes in 1.11 will allow us to focus on adding better support for fault tolerance and high availability in the future.

Ray 0.x: Handling all metadata via Redis

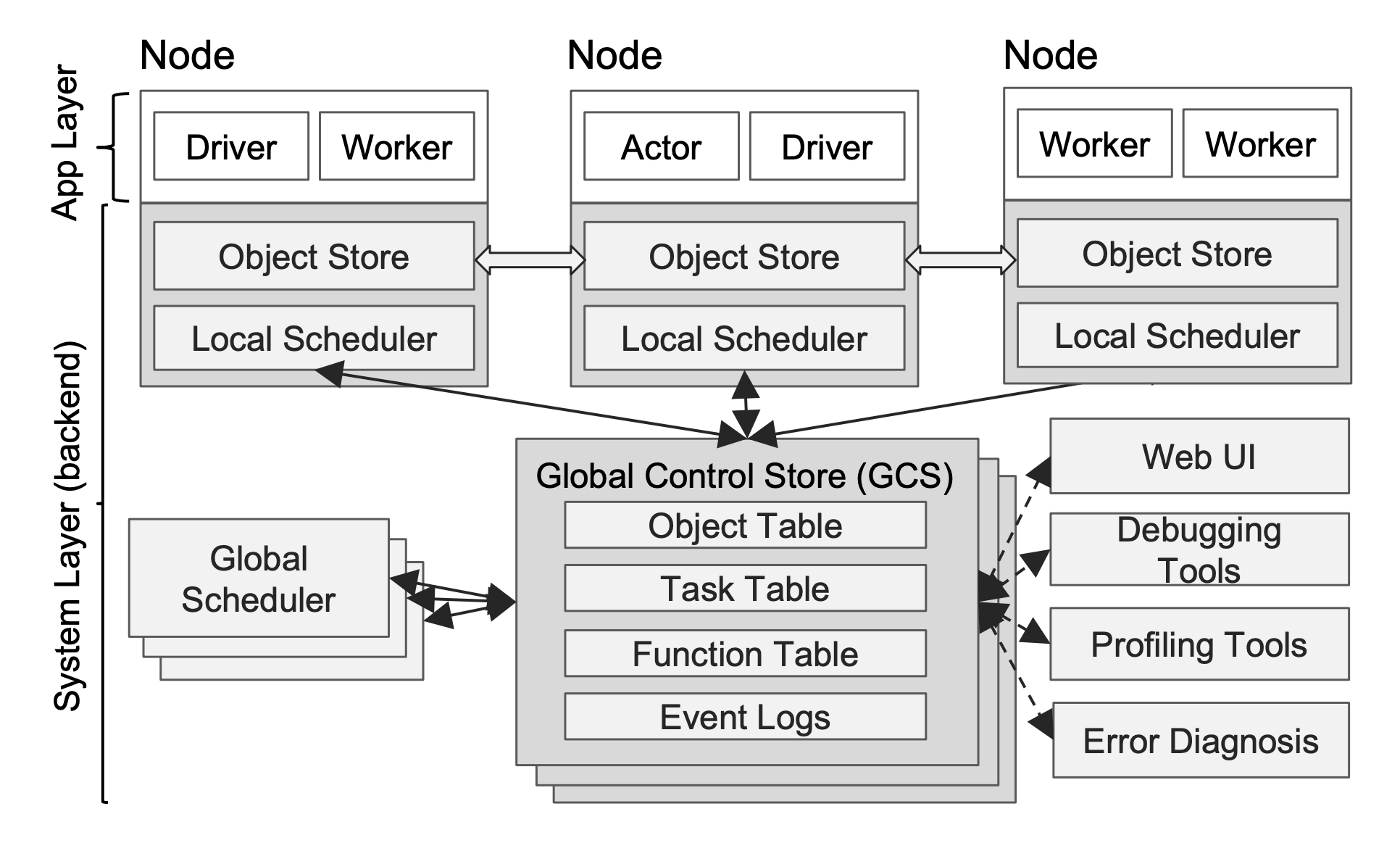

Since Ray’s inception, Redis has been used for key-value storage and message pub/sub. In the pre-1.0 Ray architecture shown below, Redis is the backing store for data in the Global Control Store (Object Table / Task Table / …). All components of Ray, such as the Global Control Store (GCS), raylets, and workers, read and write to the Redis instance directly.

Fig 1. Ray architecture pre-1.0, from Ray: A Distributed Framework for Emerging AI Applications

Before Ray 1.0, both cluster-level metadata (e.g., Task Table) and object metadata (Object Table) were stored in Redis, and every other component could read and write to Redis directly. Redis was chosen as the shared key-value store because of its clean API, ease of deployment, and good client support across C++ and Python.

One problem with this design was that all updates to object metadata had to go through Redis, which has finite CPU and memory. The Redis instance became a bottleneck as the number of objects in the Ray cluster grew; in fact, this was already the case before object refcounting was implemented. This bottleneck on object metadata was addressed in Ray 1.0 via distributed object metadata storage.

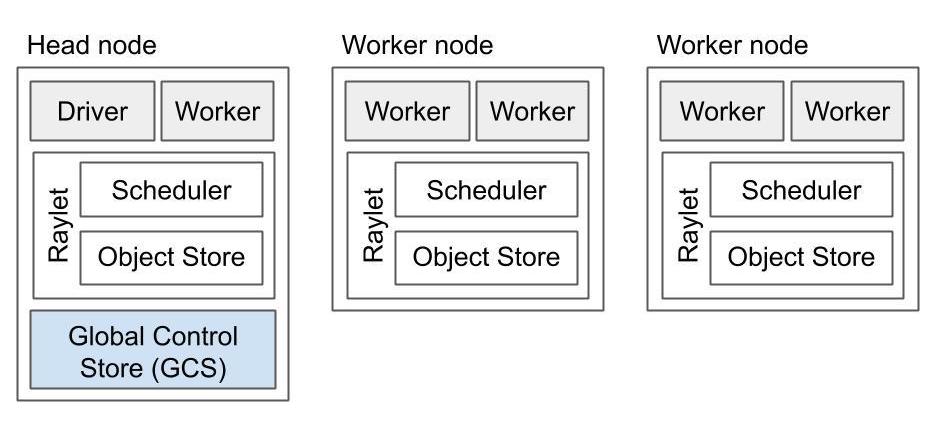

Ray 1.0-1.10: Handling cluster metadata via Redis

From Ray 1.0 onwards, object metadata, including reference counts, is stored with each worker (the object owner) instead of in Redis (more details at Ownership: A Distributed Futures System for Fine-Grained Tasks). The Redis instance only contains cluster / control plane metadata.
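As a rough illustration of the ownership idea, the sketch below keeps reference counts in a table held by the owning worker rather than in a shared store. The `OwnerWorker` class and its methods are hypothetical names chosen for this example; they are not Ray's actual implementation, which is described in the Ownership paper.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class OwnerWorker:
    """Hypothetical worker that owns the metadata of the objects it created."""

    worker_id: str
    # object_id -> reference count, kept locally instead of in Redis
    ref_counts: Dict[str, int] = field(default_factory=dict)

    def create_object(self, object_id: str) -> None:
        # The creator becomes the owner and holds the first reference.
        self.ref_counts[object_id] = 1

    def add_reference(self, object_id: str) -> None:
        self.ref_counts[object_id] += 1

    def remove_reference(self, object_id: str) -> bool:
        """Drop one reference; return True if the object was freed."""
        self.ref_counts[object_id] -= 1
        if self.ref_counts[object_id] == 0:
            del self.ref_counts[object_id]
            return True
        return False


owner = OwnerWorker("worker-1")
owner.create_object("obj-a")
owner.add_reference("obj-a")
freed_first = owner.remove_reference("obj-a")   # one reference remains
freed_second = owner.remove_reference("obj-a")  # last reference dropped
```

In this toy model, no update ever touches a central store, which is the property that removes the object metadata bottleneck described above.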

Fig 2. Ray 1.x architecture, from Ray 1.x Architecture whitepaper

In Fig 2 above, Redis is still the backing store of the GCS. The workloads on Redis now include:

Storing cluster metadata: actor / node / worker states, serialized Python functions and classes, etc.

Broadcasting actor / node / worker state updates

Streaming errors and logs from workers to the driver

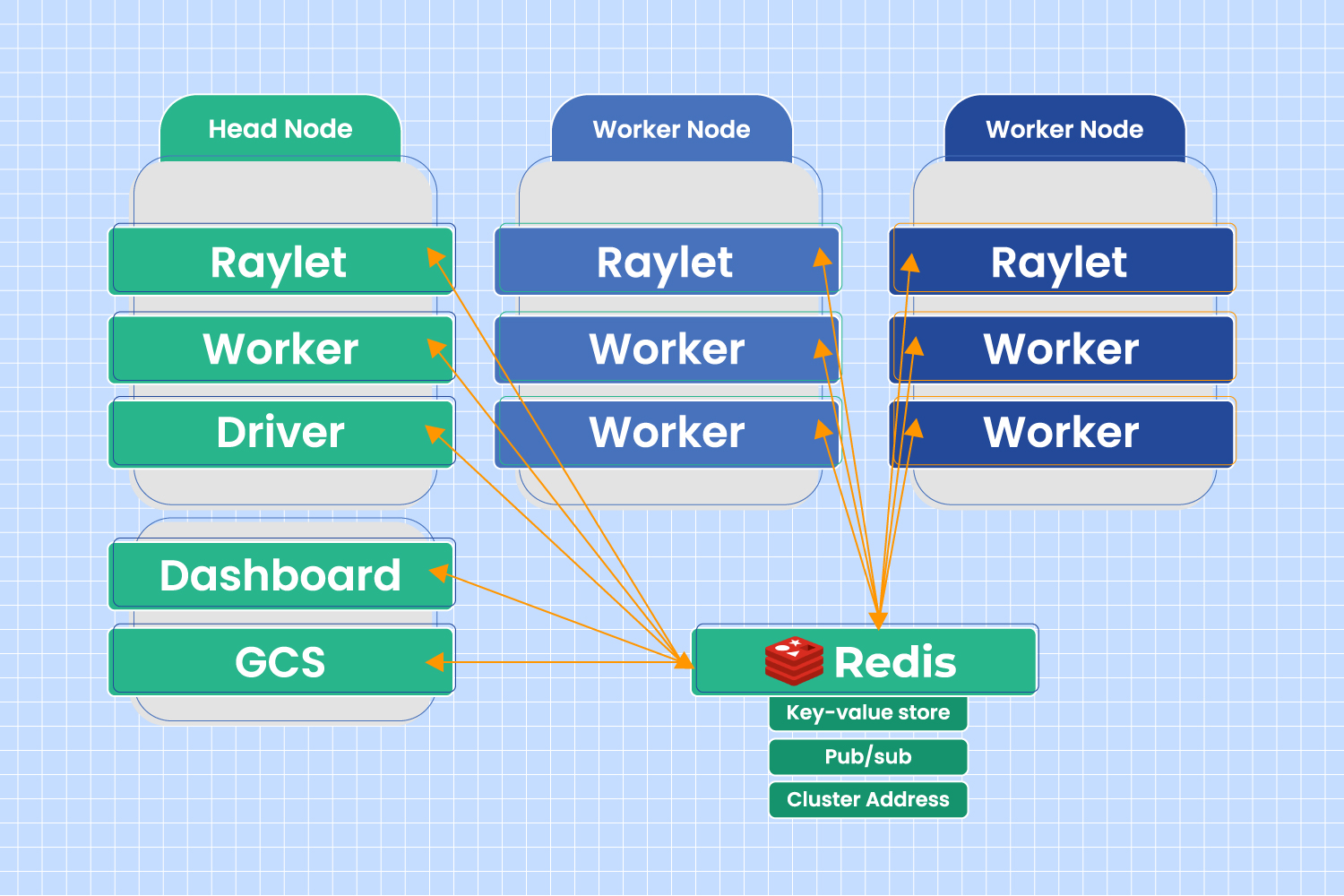

Although it was initially convenient to use Redis for these use cases, this approach has several shortcomings:

Redis is not a persistent database, so a Redis instance going down can take its Ray cluster down with it. To support fault tolerance and high availability in Ray, loss of cluster metadata must be avoided.

Using Redis pub/sub can be inefficient, such as for resource broadcasting, when the subscriber only needs the most recent state instead of the full stream of updates.

Allowing all components direct access to the underlying storage is not a good practice in general. It increases the likelihood of metadata corruption, and tightly couples all Ray components to Redis (Fig 3).
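To make the pub/sub shortcoming more concrete, here is a minimal sketch comparing a subscriber that receives every update with one that keeps only the latest state per key. The class names and the `(node_id, available_cpus)` payload are assumptions made for illustration; they do not mirror Ray's internal resource broadcasting code.

```python
from collections import deque
from typing import Deque, Dict, Tuple

Update = Tuple[str, int]  # (node_id, available_cpus), a hypothetical payload


class StreamSubscriber:
    """Receives every update in order, as with pub/sub-style delivery."""

    def __init__(self) -> None:
        self.queue: Deque[Update] = deque()

    def publish(self, update: Update) -> None:
        self.queue.append(update)

    def pending(self) -> int:
        return len(self.queue)


class LatestStateSubscriber:
    """Keeps only the newest value per key; older updates are overwritten."""

    def __init__(self) -> None:
        self.state: Dict[str, int] = {}

    def publish(self, update: Update) -> None:
        node_id, cpus = update
        self.state[node_id] = cpus

    def pending(self) -> int:
        return len(self.state)


updates = [("node-1", 8), ("node-2", 4), ("node-1", 6), ("node-1", 2)]
stream, latest = StreamSubscriber(), LatestStateSubscriber()
for u in updates:
    stream.publish(u)
    latest.publish(u)

print(stream.pending())  # 4 queued messages to process
print(latest.pending())  # 2 entries, one per node
print(latest.state)      # {'node-1': 2, 'node-2': 4}
```

A slow subscriber in the first model has to work through every stale message, while in the second model the amount of pending work is bounded by the number of keys.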

In addition, the original reasons we chose Redis were no longer valid after the Ray 1.0 architecture improvements. All components of Ray have integrated with gRPC, so Redis is not significantly easier to use than a gRPC interface on top of an in-memory key-value store. Redis was also no longer on the critical path of most data plane operations (calling actor methods, transferring data, etc.), so a minor latency / performance difference between Redis and gRPC was not a concern unless it became very significant.

Fig 3. All components can read / write to Redis, before Ray 1.9

To address these issues, we embarked on a project to support Redisless Ray and pluggable metadata storage. The first step, Redisless Ray, was released with Ray 1.11.

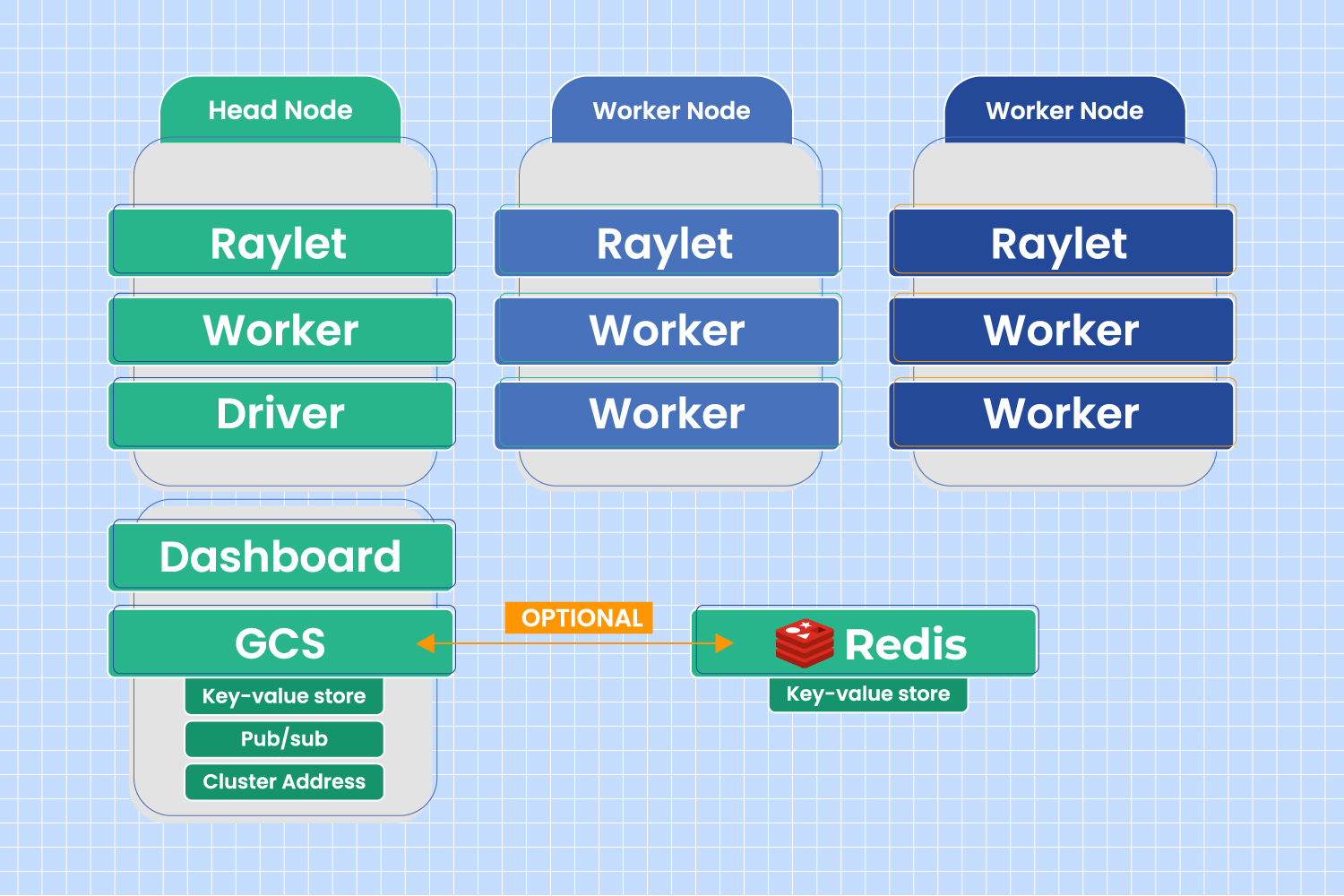

Ray 1.11 onwards: Redisless by default

The most significant change in Ray 1.11 is that Ray no longer launches Redis by default. For a Ray cluster not running Redis:

Cluster metadata is stored inside GCS by default instead of Redis. We implemented a GCS internal key-value store, and a common interface for Redis and GCS key-value stores, to facilitate the migration of the default storage.

Broadcasting actor / node / worker state updates and streaming errors and logs from workers to the driver are done via Ray’s internal broadcast and pub/sub implementations, instead of using Redis pub/sub.
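The idea of a common interface over Redis and GCS key-value stores can be sketched as follows. This is only an illustration under stated assumptions: `KVStore`, `InMemoryKVStore`, and `MetadataService` are hypothetical names, and the real interface inside Ray is implemented differently. A Redis-backed class would implement the same three methods and is omitted here to keep the example self-contained.

```python
from abc import ABC, abstractmethod
from typing import Dict, Optional


class KVStore(ABC):
    """Hypothetical common interface for metadata storage backends."""

    @abstractmethod
    def put(self, key: str, value: bytes) -> None:
        ...

    @abstractmethod
    def get(self, key: str) -> Optional[bytes]:
        ...

    @abstractmethod
    def delete(self, key: str) -> bool:
        ...


class InMemoryKVStore(KVStore):
    """Stand-in for a key-value store living inside the GCS process."""

    def __init__(self) -> None:
        self._data: Dict[str, bytes] = {}

    def put(self, key: str, value: bytes) -> None:
        self._data[key] = value

    def get(self, key: str) -> Optional[bytes]:
        return self._data.get(key)

    def delete(self, key: str) -> bool:
        # Return True only if the key existed.
        return self._data.pop(key, None) is not None


class MetadataService:
    """Depends only on the interface, so the backend can be swapped."""

    def __init__(self, store: KVStore) -> None:
        self._store = store

    def register_actor(self, actor_id: str, state: bytes) -> None:
        self._store.put(f"actor:{actor_id}", state)

    def actor_state(self, actor_id: str) -> Optional[bytes]:
        return self._store.get(f"actor:{actor_id}")


service = MetadataService(InMemoryKVStore())
service.register_actor("a1", b"ALIVE")
state = service.actor_state("a1")
missing = service.actor_state("unknown")
```

Because callers see only `KVStore`, switching the default backend does not require changes to the code that reads and writes metadata.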

Fig 4. Redis becomes optional in Ray cluster after Ray 1.11

The benefits of Redisless Ray we have observed so far include:

Fewer moving parts without Redis in the system

Easier monitoring of Ray internal traffic, as all of it goes through gRPC

Scheduling performance improvements due to more efficient broadcasting and avoiding the round trip to Redis for key-value operations

External Redis is still supported

Although Ray no longer stores metadata in Redis by default starting in version 1.11, Ray still supports Redis as an external metadata store. A number of Ray users have been running Ray clusters with external Redis instances — for example, to take advantage of custom highly available Redis implementations. Since only GCS can access Redis after Ray 1.11, the way to configure a Ray cluster to use external Redis has changed.

Before:

    # When starting the head node, --address=10.0.0.1:6379 specifies the external Redis address.
    $ ray start --head --address=10.0.0.1:6379
    # Worker nodes connect directly to the external Redis address, 10.0.0.1:6379.
    $ ray start --address=10.0.0.1:6379

After:

    # When starting the head node, specify the external Redis address with the RAY_REDIS_ADDRESS environment variable.
    $ RAY_REDIS_ADDRESS=10.0.0.1:6379 ray start --head
    # Assuming GCS is started at 10.0.2.2:6379 on the head node, this is the address worker nodes need to connect to.
    $ ray start --address=10.0.2.2:6379

Future work

As mentioned earlier, Redisless Ray is just the first step. We are actively working to support fault tolerance and high availability in Ray. Stay tuned for future updates!