Ray version 1.7 has been released

Ray version 1.7 has been released. Release highlights include:

Ray SGD v2 is now in alpha. The v2 version introduces APIs that focus on ease of use and composability.

Ray Workflows is now in alpha. Try it out for your large-scale data science, ML, and long-running business workflows.

Major enhancements to the C++ API, which lets you build a C++ distributed system easily, just as you can with the Python and Java APIs.

You can run pip install -U ray to access these features and more. With that, let’s dive into the highlights.

Ray SGD v2 is now in alpha

In Ray 1.7, we are rolling out a new and more streamlined version of Ray SGD that focuses on usability and composability. Ray SGD v2 has a much simpler API, has support for more deep learning backends, integrates better with other libraries in the Ray ecosystem, and will continue to be actively developed with more features.

So, what is changing with the API and how does it affect you?

1. There is now a single Trainer interface for all backends (torch, tensorflow, horovod), and the backend is simply specified via an argument. Any features that we add to Ray SGD will be supported for all backends, and there won’t be any API divergence like there was with a separate TorchTrainer and TFTrainer.

2. You no longer have to make your training logic fit into a restrictive interface: TrainingOperator and creator functions have been replaced by a more natural user-defined training function. This gives you more flexibility over your training code and reduces the effort of moving to Ray SGD.

3. Rather than iteratively calling trainer.train() or trainer.validate() for each epoch, the training function now defines the full training execution and can be run via trainer.run(train_func).
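To make the shape of these three changes concrete, here is a minimal sketch in plain Python. It does not use Ray: ToyTrainer is a hypothetical stand-in written for this illustration, and the num_workers and config parameters are assumptions rather than a description of the Ray SGD v2 signature. It only shows a single trainer that takes the backend as an argument and a user-defined function that owns the whole training loop.

```python
from typing import Any, Callable, Dict, List


class ToyTrainer:
    """Hypothetical stand-in for a single-Trainer interface (not Ray's class)."""

    SUPPORTED_BACKENDS = ("torch", "tensorflow", "horovod")

    def __init__(self, backend: str, num_workers: int = 2) -> None:
        # The backend is just an argument; there is no per-backend subclass.
        if backend not in self.SUPPORTED_BACKENDS:
            raise ValueError(f"unknown backend: {backend!r}")
        self.backend = backend
        self.num_workers = num_workers

    def run(self, train_func: Callable[[Dict[str, Any]], Any],
            config: Dict[str, Any]) -> List[Any]:
        # A real trainer would run these in parallel on separate workers;
        # here each "worker" runs in turn in the current process.
        return [train_func(dict(config, rank=rank))
                for rank in range(self.num_workers)]


def train_func(config: Dict[str, Any]) -> float:
    """User-defined training function: it defines every epoch itself."""
    weight = 0.0
    for _ in range(config["epochs"]):
        # One gradient step on the toy loss (weight - 3)^2.
        grad = 2.0 * (weight - 3.0)
        weight -= config["lr"] * grad
    return weight


trainer = ToyTrainer(backend="torch", num_workers=2)
results = trainer.run(train_func, {"epochs": 50, "lr": 0.1})
print(results)  # one result per worker
```

The point of the pattern is that the epoch loop lives in train_func rather than in repeated calls on the trainer, so the same function can be handed to a trainer configured for a different backend.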

Most importantly, Ray SGD v2 will continue to have all the great features of v1, such as out-of-the-box fault tolerance and checkpoint management, with more features on the roadmap. It is in alpha in version 1.7 and is quickly maturing; we would love your feedback. To learn more about migrating from Ray SGD v1, visit the migration guide.

Ray Workflows is now in alpha!

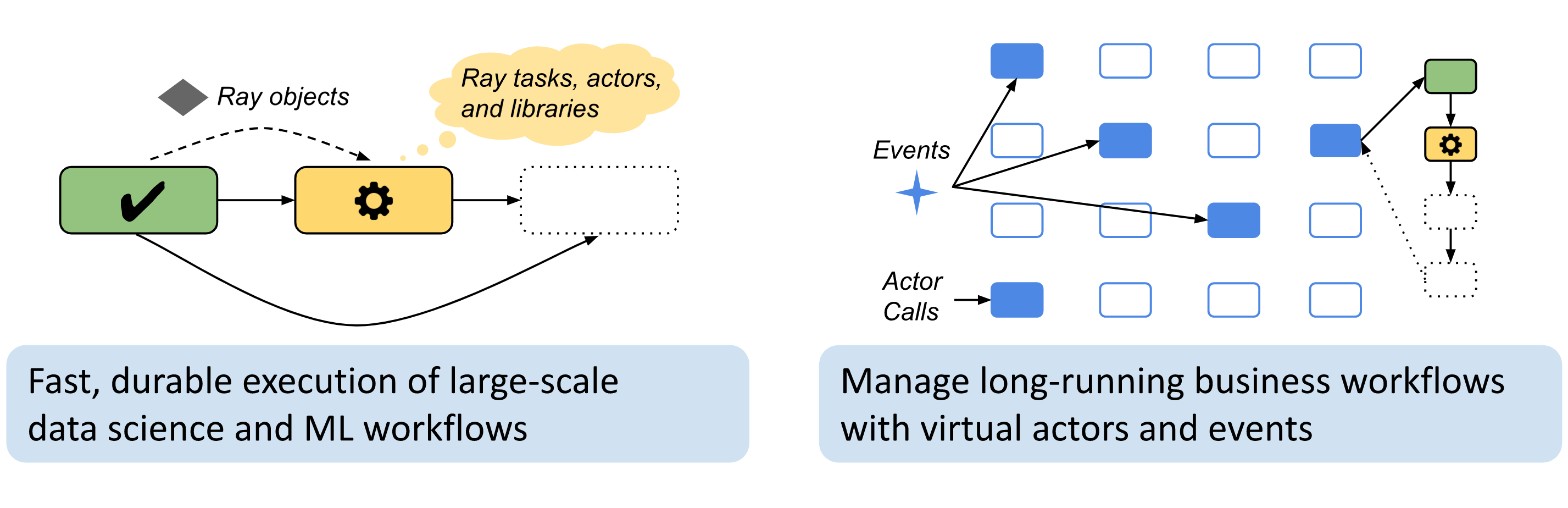

Workflow engines often impose trade-offs in flexibility or performance. By building on Ray core and adding durability, Ray Workflows aims to offer the best of both worlds: the performance and flexibility of Ray tasks, which serve as the underlying execution engine, together with durable application workflows. It is intended to support both large-scale workflows (e.g., ML and data pipelines) and long-running business workflows (when used together with Ray Serve). More specifically, benefits of Workflows include:

Flexibility: Combine the flexibility of Ray’s dynamic task graphs with strong durability guarantees. Branch or loop conditionally based on runtime data. Use Ray distributed libraries seamlessly within workflow steps.

Performance: Workflows offers sub-second overheads for task launch and supports workflows with hundreds of thousands of steps. Take advantage of the Ray object store to pass distributed datasets between steps with zero-copy overhead.

Dependency management: Workflows leverages Ray’s runtime environment feature to snapshot the code dependencies of a workflow. This enables management of workflows and virtual actors as code is upgraded over time.
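As a rough illustration of what durable steps combined with runtime branching means, the sketch below uses only the Python standard library. It is not the Ray Workflows API or implementation: durable_step and run_workflow are hypothetical helpers invented for this example, and saving results as JSON files is an assumption chosen to keep it short. Each step saves its result, so a rerun against the same storage skips work that already completed.

```python
import json
import tempfile
from pathlib import Path
from typing import Any, Callable, List


def durable_step(storage: Path, name: str, func: Callable[[], Any]) -> Any:
    """Run func once; later calls with the same name reuse the saved result."""
    checkpoint = storage / f"{name}.json"
    if checkpoint.exists():
        return json.loads(checkpoint.read_text())
    result = func()
    checkpoint.write_text(json.dumps(result))
    return result


def run_workflow(storage: Path, calls: List[str]) -> int:
    """Three-step toy workflow; calls records which steps actually executed."""
    def load() -> List[int]:
        calls.append("load")
        return [1, 2, 3, 4]

    data = durable_step(storage, "load", load)

    def total() -> int:
        calls.append("total")
        return sum(data)

    value = durable_step(storage, "total", total)

    def finish() -> int:
        calls.append("finish")
        # Branch on a value that is only known at run time.
        return value * 2 if value > 5 else value

    return durable_step(storage, "finish", finish)


storage = Path(tempfile.mkdtemp())
first_calls: List[str] = []
first = run_workflow(storage, first_calls)

# Simulate a restart: same storage, so every step is served from disk.
second_calls: List[str] = []
second = run_workflow(storage, second_calls)
print(first, first_calls, second, second_calls)
```

A workflow engine adds much more than this (distributed execution, object passing between steps, dependency snapshots), but the resume-from-saved-results behavior is the core of the durability guarantee described above.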

Workflows is available as alpha in Ray 1.7. You can learn more about the library in the documentation and by giving it a try! Let us know what you think.

Major enhancements to C++ API!

Originally, Ray supported only the Python API. In mid-2018, Ant Group contributed the Java API to the Ray Project. Recently, Ant Group contributed the experimental C++ API to Ray. Since Ray Core is primarily written in C++, this new API connects the user layer and the core layer directly, with no inter-language overhead.

Besides allowing you to build C++ distributed systems easily, we envision that the C++ API can better meet business needs in some high-performance scenarios, thanks to its lack of inter-language overhead and its lightweight characteristics.

The C++ API can be installed by running pip install -U ray[cpp]. You can also learn how to use the API by running ray cpp --help. If you would like to be notified of an upcoming deep-dive blog on this topic, you can follow us on Twitter or sign up for the Ray Newsletter.

Learn more

That sums up the release highlights. To see all the features and enhancements in this release, visit the release notes.