Ray Spotlight Series: Multitenant Serve Applications with Runtime Envs as Containers

Interview between: Sam Chan and Cindy Zhang

Overview ⚡

In this conversation, Sam Chan and Cindy Zhang dive into the advancements and challenges of running multiple Ray Serve applications with different dependencies on one cluster. Read on to discover how different containers can now be deployed on the same serving cluster.

Exploring the Basics 🔎

Sam Chan: Can you start with a basic explanation of what multi-application support is and why it's important?

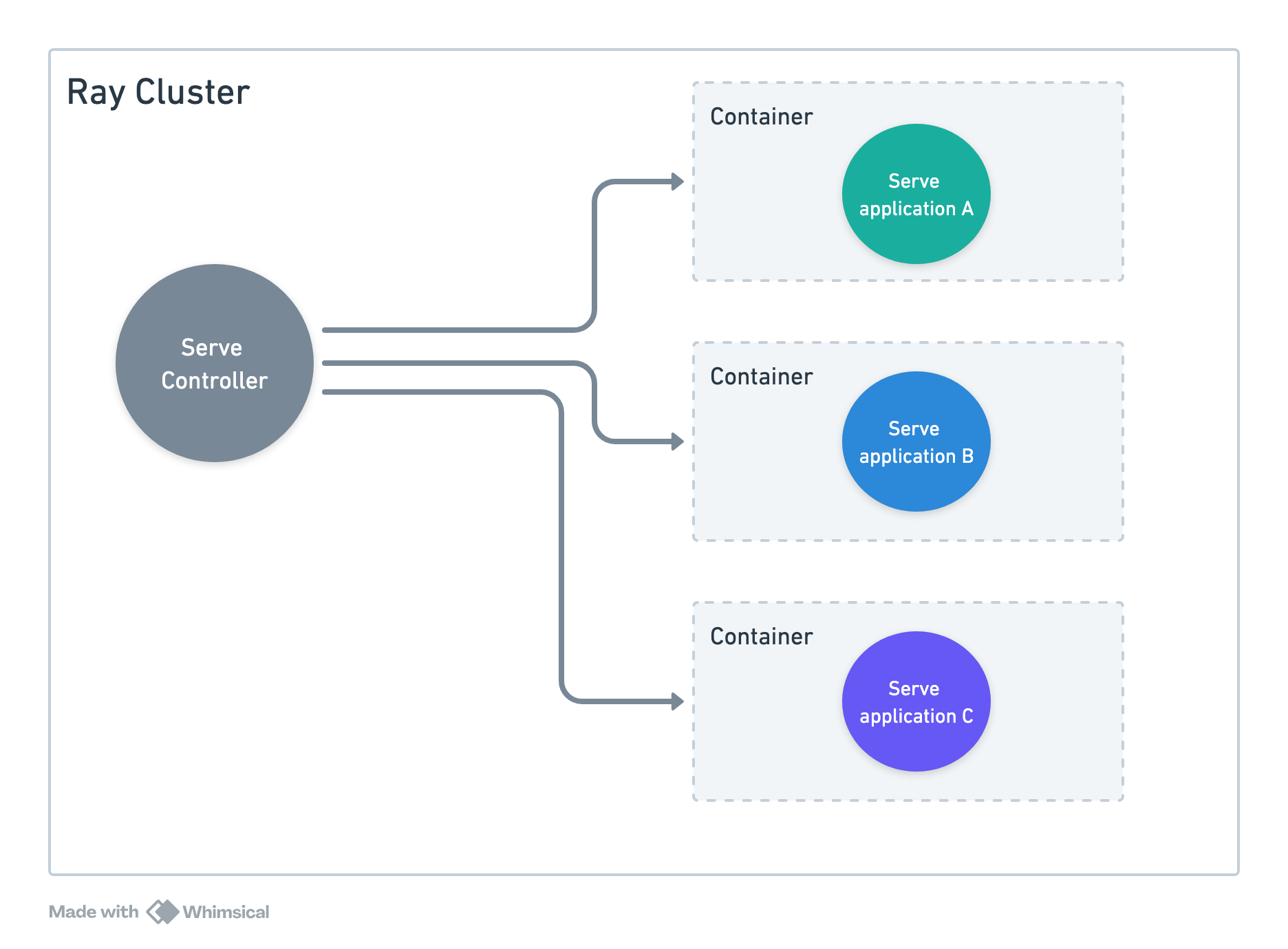

Cindy Zhang: Sure. Basically, multi-application support allows different applications to run on the same cluster, with each application using its own runtime environment, such as a container. Multi-application support helps manage resources more effectively and reduces operational complexity, and different applications can be upgraded independently.

S: How have we previously supported multiple applications on the same cluster? Were there drawbacks?

C: So last summer we launched multi-application support on Ray Serve, which enables you to serve multiple independent applications on a single Ray cluster. However, many users who prefer not to install their dependencies at runtime using Ray runtime environments still had to bundle all their model dependencies into one big Docker image. Some customers were telling us that's a pain, especially when they have a lot of separate apps they want running together on the same Ray cluster.

S: What was the pain with having one larger image?

C: It leads to crazy bloated images and all the dependencies getting mixed together. It's a real headache to maintain. For instance, one of our customers has many different research teams working on their own models, and they want to deploy each team's code in its own neat little image (easier to maintain, faster to iterate, etc.).

Most importantly, enabling separate images allows teams to work through cases where different Serve applications have conflicting dependencies.

S: Is there a reason that when we built multi-application support we only supported one container? Did we consider different scenarios?

C: Historically, the need for supporting multiple containers wasn't as prevalent, especially for training workloads. However, with the shift towards more dynamic applications, we’re seeing a greater need for this feature.

Runtime Envs as Containers 🧑‍💻

S: How does this new “runtime envs as containers” feature help out?

C: With this, users can now specify a different Docker image for each team’s application! When Ray needs to start up a replica for an app, it'll just fire up a container from that app's image and run the worker process inside there. Super clean isolation between apps.
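
To make the per-application image idea concrete, here is a minimal sketch that builds a two-application Serve config as a plain Python dict. The team names, module paths, and image references are hypothetical, and placing the image under a `container` key inside `runtime_env` is an assumption about the experimental field layout, so check the documentation for your Ray version before relying on it.

```python
import json


def build_app(name, route_prefix, import_path, image):
    """Return one Serve application entry pinned to its own container image."""
    return {
        "name": name,
        "route_prefix": route_prefix,
        "import_path": import_path,
        # Assumption: the experimental container setting sits under
        # runtime_env and takes an image reference.
        "runtime_env": {"container": {"image": image}},
    }


# Hypothetical teams, modules, and images, for illustration only.
serve_config = {
    "applications": [
        build_app("vision", "/vision", "vision_app:app",
                  "registry.example.com/vision-team:latest"),
        build_app("nlp", "/nlp", "nlp_app:app",
                  "registry.example.com/nlp-team:latest"),
    ]
}

# Each application carries its own image, so dependencies never mix.
images = [app["runtime_env"]["container"]["image"]
          for app in serve_config["applications"]]

print(json.dumps(serve_config, indent=2))
```

The point of the sketch is only the shape: one entry per application, each naming its own image, all deployed to the same cluster.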

S: Any other benefits beyond the isolation aspect? How does this support multi-tenancy of multiple Serve applications?

C: Oh yeah, it really unlocks the power of Ray's multi-tenancy capabilities. Since the apps are in separate containers but still running on the same cluster, they can share resources way more efficiently.

For example, you could squeeze 8 apps onto a single large VM with 8 GPUs and configure each Ray Serve application to use one GPU.

This lets users be more granular and precise in utilizing their GPU capacity and not have any underutilized resources on a single VM.
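
The 8-apps-on-8-GPUs arithmetic can be sketched in a few lines of Python. The app names, module paths, and the deployment name `Model` are hypothetical; `num_replicas` and `ray_actor_options` with `num_gpus` are the usual Serve deployment settings, and the check at the end is just a sanity calculation, not something Ray requires you to write.

```python
NODE_GPUS = 8  # GPUs on the single large VM from the example


def gpu_app(index):
    """One hypothetical Serve app whose single deployment reserves one GPU."""
    return {
        "name": f"app{index}",
        "route_prefix": f"/app{index}",
        "import_path": f"app{index}.main:app",  # hypothetical module path
        "deployments": [
            {
                "name": "Model",  # hypothetical deployment name
                "num_replicas": 1,
                "ray_actor_options": {"num_gpus": 1},
            }
        ],
    }


def gpus_requested(app):
    """Total GPUs an app asks for across all replicas of its deployments."""
    return sum(
        d["num_replicas"] * d["ray_actor_options"]["num_gpus"]
        for d in app["deployments"]
    )


apps = [gpu_app(i) for i in range(NODE_GPUS)]
total = sum(gpus_requested(app) for app in apps)

# The VM is fully used and not oversubscribed when total equals NODE_GPUS.
print(f"{len(apps)} apps request {total} of {NODE_GPUS} GPUs")
```

With one replica and one GPU per app, the eight apps request exactly the eight GPUs on the node, leaving none idle.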

Operational complexity also goes down since you only have one Ray cluster to manage, and you can now leverage Ray Serve in-place updates for more scenarios.

Technical Details and Challenges ‼️

S: Can you share a little bit about what’s happening under the hood?

C: It’s actually pretty straightforward! We’ve integrated Ray with Podman to pull the images and spin up containers. Now, when a new Ray Worker needs to start, Ray will call out to Podman to orchestrate the pull of the relevant image and spin up the container. Ray then orchestrates the running of the Ray Worker code inside that container instead of directly on the host. We had to put some work into getting the Ray Serve lifecycle events hooked up properly and all.
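
The pull-then-run sequence described here can be pictured with a small sketch. It only builds and prints the two Podman command lines and does not execute anything. The helper names, the image reference, and the worker command are hypothetical, and the interview does not say which flags Ray actually passes to Podman, so treat the `--rm` flag and the overall shape as assumptions.

```python
import shlex


def podman_pull_cmd(image):
    """Command line that fetches the application's image."""
    return ["podman", "pull", image]


def podman_run_cmd(image, worker_cmd):
    """Command line that starts the worker process inside a container.

    --rm removes the container once the worker exits.
    """
    return ["podman", "run", "--rm", image, *worker_cmd]


def plan_worker_start(image, worker_cmd):
    """Ordered steps: pull the image first, then run the worker in it."""
    return [podman_pull_cmd(image), podman_run_cmd(image, worker_cmd)]


# Hypothetical image and worker entry point, for illustration only.
steps = plan_worker_start(
    "registry.example.com/vision-team:latest",
    ["python", "worker.py"],
)

for argv in steps:
    print(shlex.join(argv))
```

The first step is also where the one-time cost sits: an image that is not yet on the node has to be pulled before any worker can start in it.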

S: All of these benefits and enhancements sound great but are there any drawbacks or downsides of having multiple Docker images on a single Ray Cluster?

C: Firstly, just a word of caution that the feature is new and still experimental. The big one is startup delays the first time an image needs to be pulled. We also haven't tested it at a huge scale.

Another thing is, at least presently, other runtime env fields are not supported with container runtime env, such as Python environments or working dirs. Everything has to be declared in the container.
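
A quick way to picture this restriction is a pre-flight check over a runtime env dict. The function below is a hypothetical helper, not Ray's own validation, and the example field names (`pip`, `working_dir`) are only there to stand in for "other runtime env fields".

```python
def check_runtime_env(runtime_env):
    """Raise ValueError if `container` is combined with any other field.

    Hypothetical helper mirroring the restriction described above.
    """
    if "container" not in runtime_env:
        return
    extra = sorted(key for key in runtime_env if key != "container")
    if extra:
        raise ValueError(
            "'container' cannot be combined with: " + ", ".join(extra)
        )


# A container-only runtime env passes the check.
check_runtime_env({"container": {"image": "registry.example.com/nlp-team:latest"}})

# Mixing in other fields is rejected; bake those into the image instead.
message = None
try:
    check_runtime_env({
        "container": {"image": "registry.example.com/nlp-team:latest"},
        "pip": ["torch"],
        "working_dir": ".",
    })
except ValueError as err:
    message = str(err)

print(message)
```

In practice this means Python packages and code that would otherwise go in `pip` or `working_dir` need to be installed or copied into the image itself.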

Looking Ahead ⏩

S: What's the plan for evolving this going forward?

C: Great question. Short-term, we want to clean up the UX around combining containers with other runtime env fields, for example specific environment variables you want interpreted for each application.

We’re still gathering user feedback on which fields make sense to include and which don't. We’re also planning to add more scalability testing and to consolidate user feedback in general as people start kicking the tires.

Getting Started ✅

Get started today with multiple Ray Serve applications and runtime envs as containers with Anyscale!