Introducing RLlib Multi-GPU Stack for Cost-Efficient, Scalable, Multi-GPU RL Agent Training

In this blog post, we'll discuss how you can achieve up to 1.7x infrastructure cost savings with the newly introduced multi-GPU training stack in RLlib.

Introduction

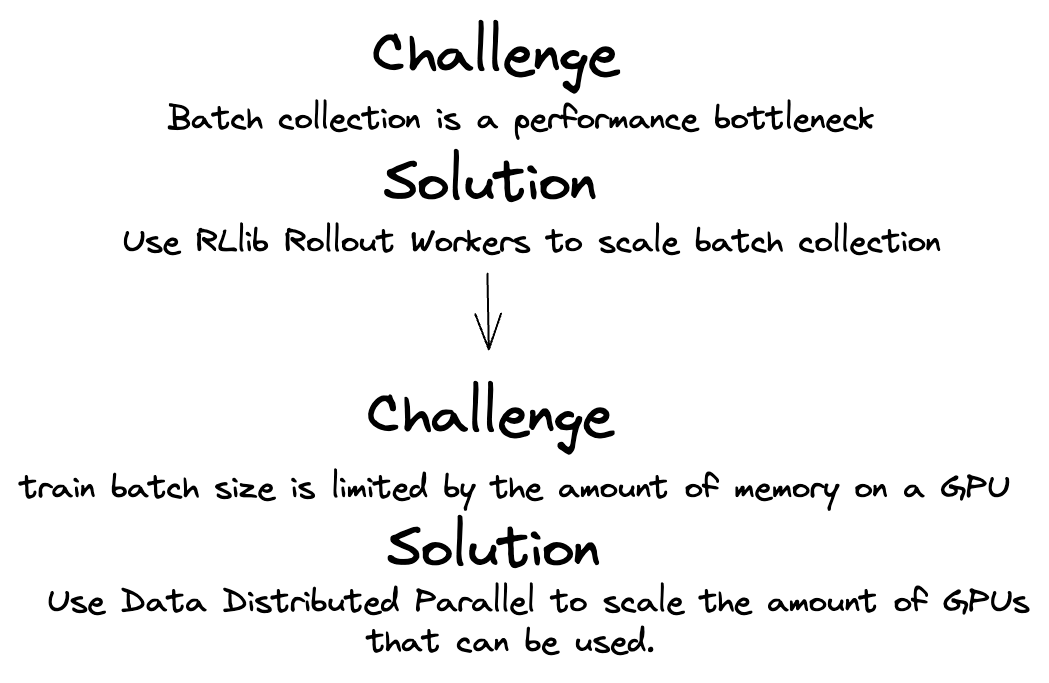

Sampling is often the bottleneck when training reinforcement learning (RL) agents: training can only proceed as fast as you can collect data for a new batch from an environment or simulator. RLlib already parallelizes sampling with rollout workers, allowing developers to scale efficiently to thousands of simulators across multiple compute nodes. Training, however, could only run on a single compute node, which limited the number of GPUs available for it. This in turn capped the size of the batches that could be trained on, because each batch had to fit in the memory of a single GPU.

In Ray 2.5, we're thrilled to introduce multi-node, multi-GPU training in RLlib. For a typical RL compute budget, we observed that the new stack let us combine different types of compute to reduce costs by 1.7x.

Why does distributed training matter for RL?

Training a reinforcement learning agent follows these steps:

1. Sampling: RL agents collect batches of experience by following their policy to pick actions in an environment. Along the way, they balance exploration (for example, trying random actions) with exploitation of what they have already learned.

2. Policy improvement: The RL algorithm updates the agent's policy using the batches of experience the agents have collected.

3. Repeat from step 1.
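The sample, improve, repeat cycle can be sketched with a deliberately tiny, hypothetical example. Here a two-armed bandit stands in for the environment, an epsilon-greedy rule plays the role of the policy, and a running average of rewards per action is the "policy improvement" step. None of these names come from RLlib; the sketch only illustrates the shape of the loop, not how RLlib or PPO implement it.

```python
import random

# Hypothetical toy environment: a two-armed bandit where arm 1 pays off more often.
ARM_PAYOFF_PROBS = [0.3, 0.7]


def pull(arm: int, rng: random.Random) -> float:
    """Return a reward of 1.0 with the arm's payoff probability, else 0.0."""
    return 1.0 if rng.random() < ARM_PAYOFF_PROBS[arm] else 0.0


def sample_batch(values, batch_size, epsilon, rng):
    """Step 1 (sampling): collect (action, reward) pairs with an epsilon-greedy policy."""
    batch = []
    for _ in range(batch_size):
        if rng.random() < epsilon:
            arm = rng.randrange(len(values))  # explore: pick a random action
        else:
            arm = max(range(len(values)), key=lambda a: values[a])  # exploit
        batch.append((arm, pull(arm, rng)))
    return batch


def improve_policy(values, counts, batch):
    """Step 2 (policy improvement): update the running mean reward of each arm."""
    for arm, reward in batch:
        counts[arm] += 1
        values[arm] += (reward - values[arm]) / counts[arm]


def train(num_iterations=50, batch_size=64, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    values = [0.0, 0.0]
    counts = [0, 0]
    for _ in range(num_iterations):  # Step 3: repeat
        batch = sample_batch(values, batch_size, epsilon, rng)
        improve_policy(values, counts, batch)
    return values


if __name__ == "__main__":
    print("estimated arm values:", train())
```

Larger values of `batch_size` give each improvement step more experience to learn from, which is the lever the rest of this post is about.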

Because RL agents learn from the experience batches they collect, the quality of that collection largely determines the quality of the agent. Collecting smaller batches limits the exploration of potentially optimal behaviors. By exploring more, RL agents can accelerate their improvement and uncover new avenues for better performance. It is therefore crucial to collect the largest possible batch of experience between policy updates.

RLlib lets you scale up batch collection by increasing the number of sampling RolloutWorkers. As the batch size grows, however, the primary bottleneck shifts to the compute used for policy improvement. GPUs can accelerate training, but problems arise once a batch exceeds a single GPU's memory, and switching to larger GPUs quickly becomes costly. A distributed data-parallel strategy overcomes these limits: model replicas are trained on multiple GPUs, each processing a portion of the batch, and the gradients from all replicas are aggregated (typically averaged) so that every replica applies the same update. With RLlib's distributed training stack, you can scale the compute budget for learning independently of sampling, significantly reducing training runtime.
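To make the data-parallel idea concrete, here is a minimal pure-Python sketch, assuming a one-parameter linear model trained with mean squared error. Each "replica" computes a gradient on its own equal-sized shard, the gradients are averaged (the job an all-reduce does across real GPUs), and every replica applies the same update. The function names are hypothetical and not part of RLlib.

```python
from typing import List, Sequence, Tuple

Sample = Tuple[float, float]  # (x, y)


def mse_grad(w: float, batch: Sequence[Sample]) -> float:
    """Gradient of mean((w * x - y) ** 2) with respect to w over one batch."""
    return sum(2.0 * x * (w * x - y) for x, y in batch) / len(batch)


def shard(batch: Sequence[Sample], num_replicas: int) -> List[Sequence[Sample]]:
    """Split the batch into equal contiguous shards, one per replica (GPU)."""
    assert len(batch) % num_replicas == 0, "sketch assumes equal-sized shards"
    size = len(batch) // num_replicas
    return [batch[i * size:(i + 1) * size] for i in range(num_replicas)]


def data_parallel_step(w: float, batch: Sequence[Sample], num_replicas: int, lr: float) -> float:
    """One synchronous data-parallel SGD step.

    Each replica computes a gradient on its own shard; the gradients are then
    averaged (what an all-reduce does across GPUs) and every replica applies
    the same update, so all model copies stay identical.
    """
    local_grads = [mse_grad(w, s) for s in shard(batch, num_replicas)]
    avg_grad = sum(local_grads) / num_replicas
    return w - lr * avg_grad


if __name__ == "__main__":
    data = [(i / 8, 3.0 * i / 8) for i in range(1, 9)]  # 8 samples of y = 3x
    w = 0.0
    for _ in range(100):
        w = data_parallel_step(w, data, num_replicas=4, lr=0.5)
    print(f"learned w = {w:.4f}")
```

With equal-sized shards, the averaged gradient equals the full-batch gradient, so splitting the batch across replicas changes where the work happens, not the result. That is why the batch size can grow with the number of GPUs while each GPU only holds its own shard.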

Beyond faster runtimes, this approach unlocks cost savings by decoupling training compute requirements from sampling compute requirements. Instead of renting large machines with excess CPU capacity, you can use Ray to connect multiple smaller GPU machines and pay only for the resources you need. The experiments section presents a concrete case study of this value proposition.

Experiments with Proximal Policy Optimization (PPO)

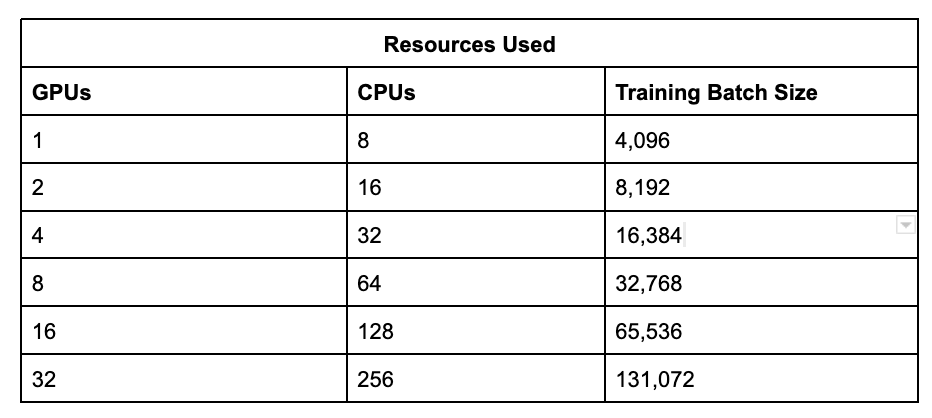

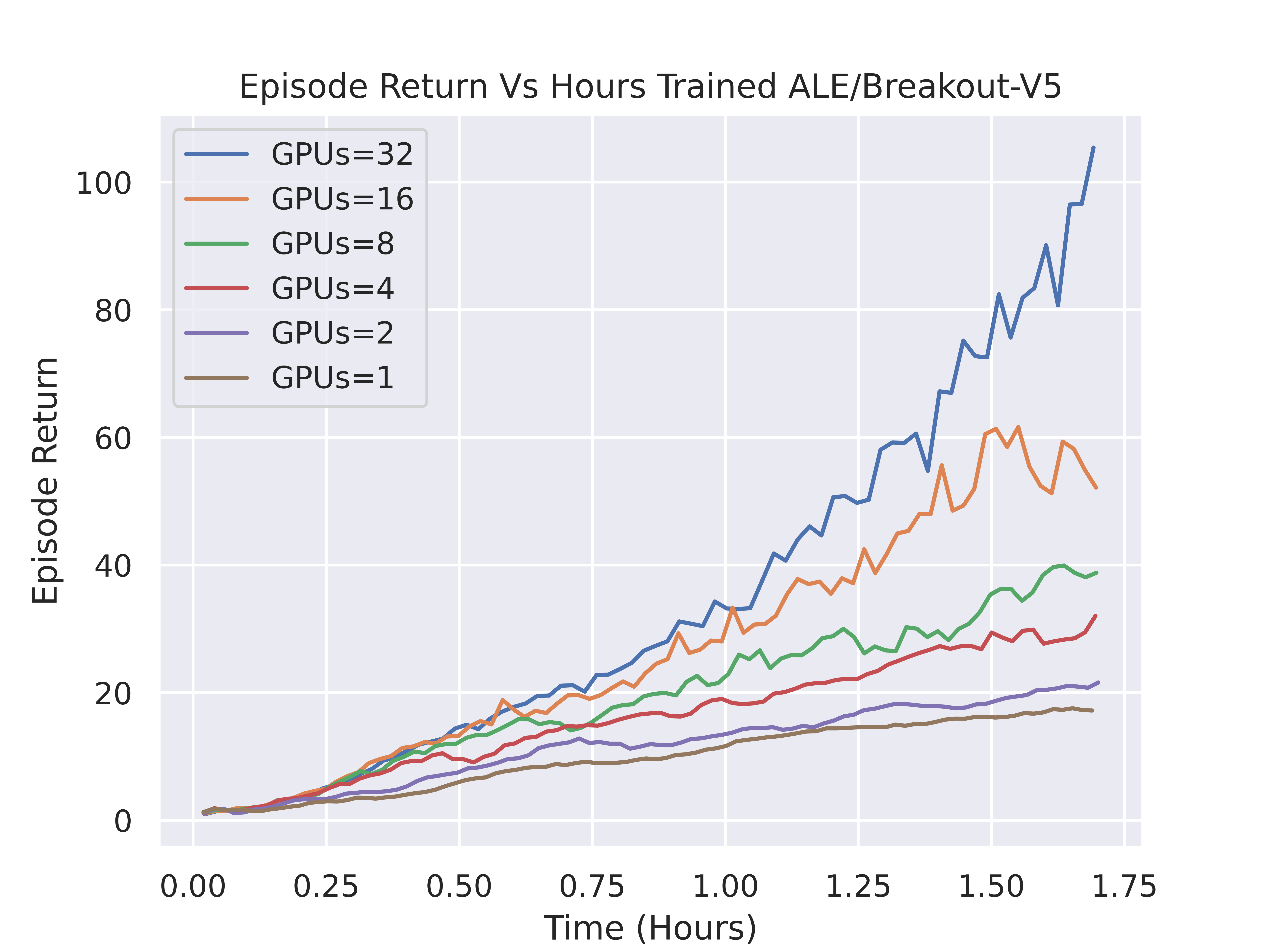

We ran experiments with RLlib's Proximal Policy Optimization (PPO) implementation on the ALE/Breakout-v5 environment using the new multi-GPU training stack. The experiments ranged from 1 to 32 GPUs, with 8 CPUs dedicated to sampling for each GPU. In each experiment, the training batch size was scaled in proportion to the number of GPUs.
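Because both the sampling CPUs and the batch size grow in proportion to the GPU count, the share of each batch that a single GPU trains on stays constant. The sketch below works through that arithmetic. `PER_GPU_BATCH_SIZE` is a hypothetical placeholder (the real batch sizes are in Table 1), and the list of GPU counts assumes powers of two between 1 and 32.

```python
CPUS_PER_GPU = 8  # sampling CPUs per GPU, as in the experiments described above
PER_GPU_BATCH_SIZE = 4_000  # hypothetical placeholder, not the value used in Table 1


def experiment_resources(num_gpus: int) -> dict:
    """Resources for one run when sampling CPUs and batch size scale with GPU count."""
    train_batch_size = num_gpus * PER_GPU_BATCH_SIZE
    return {
        "num_gpus": num_gpus,
        "num_sampling_cpus": num_gpus * CPUS_PER_GPU,
        "train_batch_size": train_batch_size,
        # Each GPU trains on an equal shard, so its share of the batch stays flat.
        "samples_per_gpu": train_batch_size // num_gpus,
    }


if __name__ == "__main__":
    for n in (1, 2, 4, 8, 16, 32):  # assumed GPU counts between 1 and 32
        print(experiment_resources(n))
```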

Table 1. GPUs vs. CPUs and the corresponding batch size

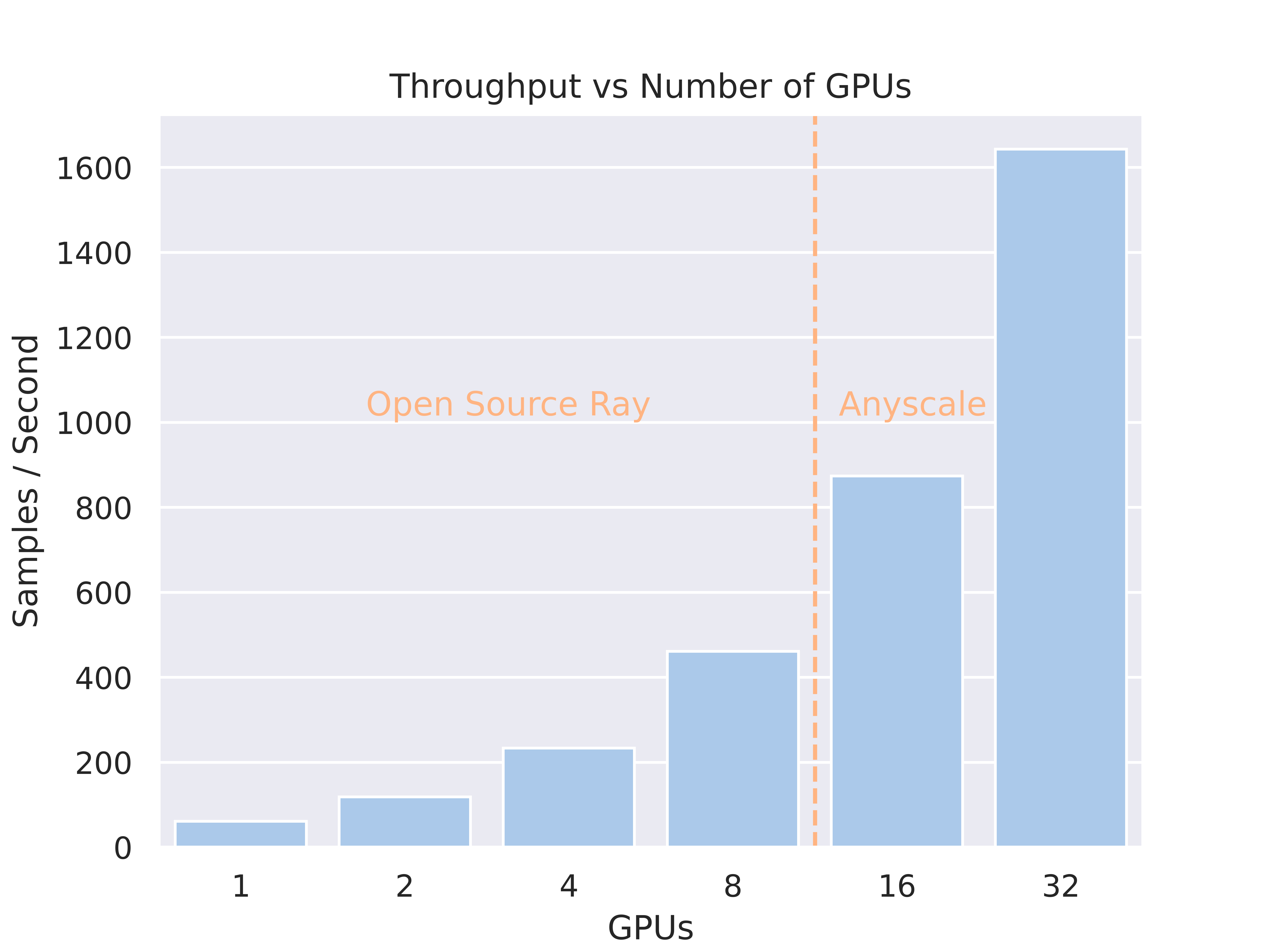

Figure 1. Throughput of PPO with different numbers of GPUs. A typical cloud compute node supports up to 8 GPUs, so using 16 or more GPUs requires multi-node training, which you can enable on the Anyscale platform. In our experiments we combined multiple single-GPU compute nodes for availability and to reduce costs, although we were not required to do so.

Figure 2. Episodic return of PPO agents trained with different numbers of NVIDIA T4 GPUs. Using more GPUs led to significantly higher performance in the same timeframe.

This case study shows that training throughput scales linearly with the number of GPUs. With open-source Ray, you can run RLlib on a single node and use all of the GPUs on that machine; Anyscale additionally enables multi-node training, unlocking further value. We also found that adding GPUs for training improved the overall model return, following a logarithmic growth pattern. This approach keeps the cost of training agents in check: because throughput scales linearly, doubling the number of GPUs roughly halves the time needed to process the same number of samples, so the total GPU-hours stay about the same. Faster training therefore does not necessarily translate into higher costs.
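The cost argument can be checked with a back-of-the-envelope sketch. It assumes perfectly linear throughput scaling, counts only GPU cost (ignoring sampling CPUs), and uses hypothetical throughput and price numbers chosen purely to keep the arithmetic readable; real scaling is rarely perfect, so treat this as an idealized bound.

```python
from typing import Tuple


def training_time_and_cost(
    num_gpus: int,
    samples_to_train: float,
    samples_per_hour_per_gpu: float,
    price_per_gpu_hour: float,
) -> Tuple[float, float]:
    """Wall-clock hours and GPU cost, assuming perfectly linear throughput scaling."""
    throughput = num_gpus * samples_per_hour_per_gpu  # samples per hour
    hours = samples_to_train / throughput
    cost = hours * num_gpus * price_per_gpu_hour
    return hours, cost


if __name__ == "__main__":
    # Hypothetical numbers, not measurements from the experiments above.
    for n in (1, 2, 4, 8):
        hours, cost = training_time_and_cost(n, 10_000_000, 1_000_000, 0.50)
        print(f"{n} GPU(s): {hours:.2f} h, ${cost:.2f}")
```

Under these assumptions the wall-clock time shrinks with every GPU added while the total cost stays flat, which is the sense in which faster training need not cost more.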

Cost savings with the new RLlib Multi-GPU training stack

The new RLlib multi-GPU training stack in Ray 2.5 is a game-changer for developers seeking to scale their compute resources efficiently. Developers can now combine smaller instances from cloud providers to match compute to their training needs, instead of paying for capacity they don't use.

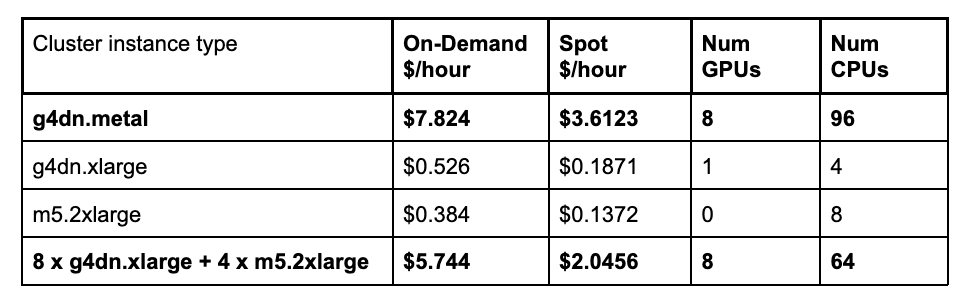

Table 2. The old RLlib training stack only supported single-node training. The new stack lets you combine multiple small spot instances so that you pay only for the CPUs and GPUs you need.

In our experiments, we used AWS g4dn instances, which come with varying numbers of NVIDIA T4 GPUs and Intel CPUs. In our 8-GPU experiment, each GPU needs 8 CPUs to be used effectively, or 64 CPUs in total. On a single compute node, we are constrained to the g4dn.metal spot (or on-demand) instance, which provides 96 CPUs. However, we found that increasing the number of CPU workers beyond 64 brought no further improvement in training speed for this experiment. With this instance type, 32 CPUs would therefore sit idle, while we would still pay an hourly cost of $3.6123.

To avoid this, we combined 8 g4dn.xlarge and 4 m5.2xlarge spot instances, totaling 8 GPUs and 64 CPUs, at an hourly cost of $2.0456. Using multiple smaller compute nodes instead of a single large one reduced our cost by roughly 1.76x ($3.6123 / $2.0456). This let us allocate exactly the resources we needed and significantly reduce expenses while still achieving the desired performance for our experiments.
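The arithmetic behind this comparison can be reproduced with a short sketch. The vCPU and GPU counts are AWS's published specs for these instance types, and the two hourly totals are the spot prices reported above; spot prices change over time, so treat them as a snapshot. The helper names (`InstanceType`, `fleet_totals`) are hypothetical.

```python
from typing import List, NamedTuple, Tuple


class InstanceType(NamedTuple):
    name: str
    vcpus: int
    gpus: int  # NVIDIA T4 GPUs


G4DN_METAL = InstanceType("g4dn.metal", vcpus=96, gpus=8)
G4DN_XLARGE = InstanceType("g4dn.xlarge", vcpus=4, gpus=1)
M5_2XLARGE = InstanceType("m5.2xlarge", vcpus=8, gpus=0)

Fleet = List[Tuple[InstanceType, int]]  # (instance type, number of instances)


def fleet_totals(fleet: Fleet) -> Tuple[int, int]:
    """Total (vCPUs, GPUs) provided by a fleet of instances."""
    vcpus = sum(t.vcpus * count for t, count in fleet)
    gpus = sum(t.gpus * count for t, count in fleet)
    return vcpus, gpus


SINGLE_NODE: Fleet = [(G4DN_METAL, 1)]
MULTI_NODE: Fleet = [(G4DN_XLARGE, 8), (M5_2XLARGE, 4)]

# Hourly spot prices for each whole fleet, as reported in the text.
SINGLE_NODE_HOURLY = 3.6123
MULTI_NODE_HOURLY = 2.0456
NEEDED_VCPUS = 64  # more sampling CPUs gave no speedup in this experiment

if __name__ == "__main__":
    for label, fleet, price in (
        ("single node", SINGLE_NODE, SINGLE_NODE_HOURLY),
        ("multi node", MULTI_NODE, MULTI_NODE_HOURLY),
    ):
        vcpus, gpus = fleet_totals(fleet)
        idle = vcpus - NEEDED_VCPUS
        print(f"{label}: {gpus} GPUs, {vcpus} vCPUs ({idle} idle), ${price}/h")
    print(f"cost ratio: {SINGLE_NODE_HOURLY / MULTI_NODE_HOURLY:.3f}x")
```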

Multi-GPU training in RLlib is available today in Ray 2.5. To take advantage of multi-node training, sign up for a product demo on Anyscale.

The new multi-GPU training stack is in alpha and can be enabled in PPO, APPO, and IMPALA by setting the _enable_learner_api and _enable_rl_module_api flags in the AlgorithmConfig:

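```python
from ray.rllib.algorithms.ppo import PPOConfig

NUM_GPUS_TO_USE = 8  # total number of GPUs (one learner worker per GPU)

config = (
    PPOConfig()
    .resources(
        num_gpus_per_learner_worker=1,  # each learner worker gets one GPU
        num_learner_workers=NUM_GPUS_TO_USE,
    )
    # Opt in to the alpha Learner API (the multi-GPU training stack) ...
    .training(_enable_learner_api=True)
    # ... which is used together with the RLModule API.
    .rl_module(_enable_rl_module_api=True)
)
```

Conclusion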

In this post, we introduced the new multi-GPU training stack in RLlib, a cost-efficient and scalable solution for training reinforcement learning agents. We showed that by distributing training across multiple compute nodes and GPUs, developers can achieve substantial infrastructure cost savings of up to 1.7x.

Through experiments on the ALE/Breakout-v5 environment, we observed that increasing the number of GPUs used for training improved model performance. Training throughput scaled linearly with the number of GPUs, and the overall return of the model followed a logarithmic growth pattern.

To enable the multi-GPU training stack in RLlib, developers simply set the _enable_learner_api and _enable_rl_module_api flags in the AlgorithmConfig for algorithms such as PPO, APPO, and IMPALA.

If you have any questions, check out our docs and discussion forums, or consider attending our in-person training or Ray Summit 2023 to learn more about Ray and RLlib.