Best Reinforcement Learning Talks from Ray Summit 2021

An overview of some of the best reinforcement learning talks presented at the second Ray Summit

Ray Summit 2021 featured many impressive technical sessions on using Ray for scalable Python, machine learning (including deep learning), reinforcement learning, and data processing. All 57 talks, including 12 keynotes, 45 sessions, and 2 tutorials, are available for free on demand on the Ray Summit platform and will be published on the Anyscale YouTube channel on August 2nd. This post highlights a few of the most-watched reinforcement learning talks from Ray Summit 2021, each of which showcases an impressive use of RLlib.

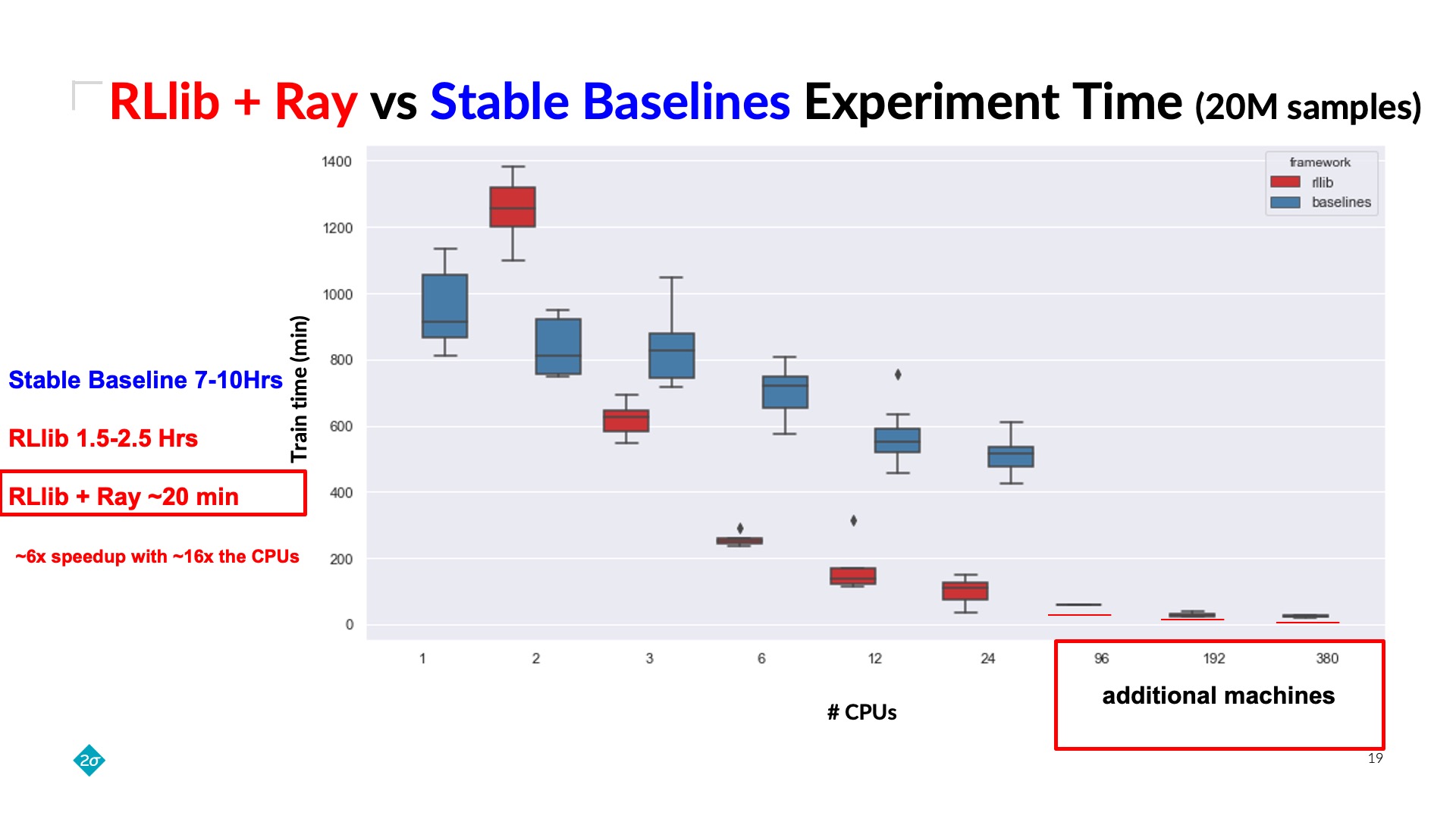

24x Speedup for Reinforcement Learning with RLlib + Ray

RLlib + Ray Clusters cut experiment time down to ~20 minutes from ~7-10 hours with Stable Baselines.

Training a reinforcement learning agent is compute-intensive. Under classical deep learning assumptions, bigger and better GPUs reduce training time. In practice, reinforcement learning can require millions of samples from a relatively slow, CPU-only environment, which creates a training bottleneck that GPUs do not solve. Raoul Khouri’s talk showed that training agents with RLlib removes this bottleneck because its Ray integration allows scaling to many CPUs across a cluster of commodity machines. This cuts training wall-clock time by more than an order of magnitude. Check out the talk “24x Speedup for Reinforcement Learning with RLlib + Ray” to learn more.
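As a quick sanity check on the headline number, the arithmetic behind the reported wall-clock times can be worked out directly. This is only a sketch based on the approximate figures quoted above (~7-10 hours versus ~20 minutes); the helper name `speedup` is made up for illustration and is not taken from the talk.

```python
def speedup(baseline_minutes: float, new_minutes: float) -> float:
    """Return how many times faster the new run is than the baseline."""
    return baseline_minutes / new_minutes


# Approximate figures quoted in this post: ~7-10 hours before, ~20 minutes after.
NEW_RUN_MINUTES = 20
low = speedup(7 * 60, NEW_RUN_MINUTES)    # lower end of the baseline range
high = speedup(10 * 60, NEW_RUN_MINUTES)  # upper end of the baseline range

print(f"Speedup range: {low:.0f}x to {high:.0f}x")
```

The resulting range of roughly 21x to 30x brackets the 24x in the talk title, which corresponds to a baseline of about 8 hours.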

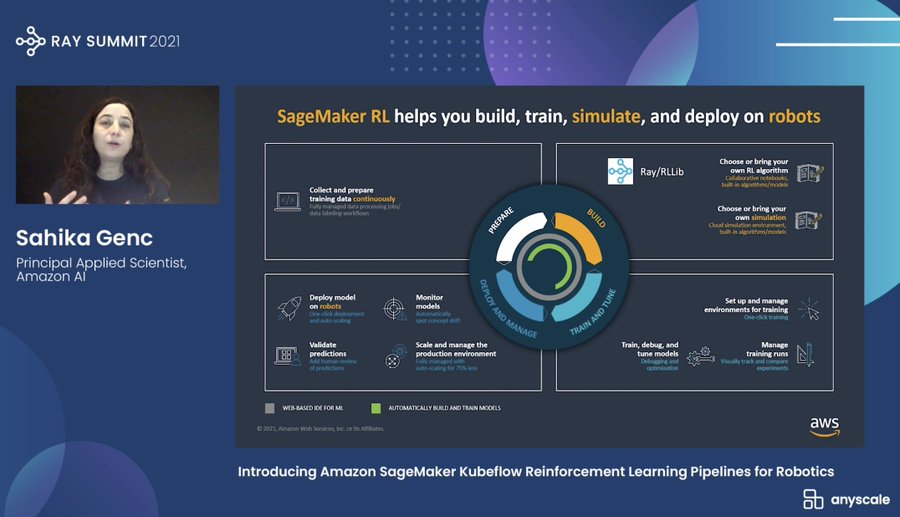

Orchestrating robotics operations with SageMaker + RLlib

RLlib and SageMaker RL make it faster to experiment and manage robotics RL workflows and create end-to-end solutions without having to rebuild each time.

Robots require the integration of technologies such as image recognition, sensing, artificial intelligence, machine learning (ML), and reinforcement learning (RL) in ways that are new to the field of robotics. Orchestrating robotics operations to train, simulate, and deploy reinforcement learning applications is difficult and time-consuming. Sahika Genc's talk, “Introducing Amazon SageMaker Kubeflow Reinforcement Learning Pipelines for Robotics”, examines use cases from Woodside Energy and General Electric Aviation that demonstrate how RLlib and SageMaker RL make it faster to experiment, manage robotics RL workflows, and create end-to-end solutions without having to rebuild each time. This talk is recommended for anyone interested in robotics.

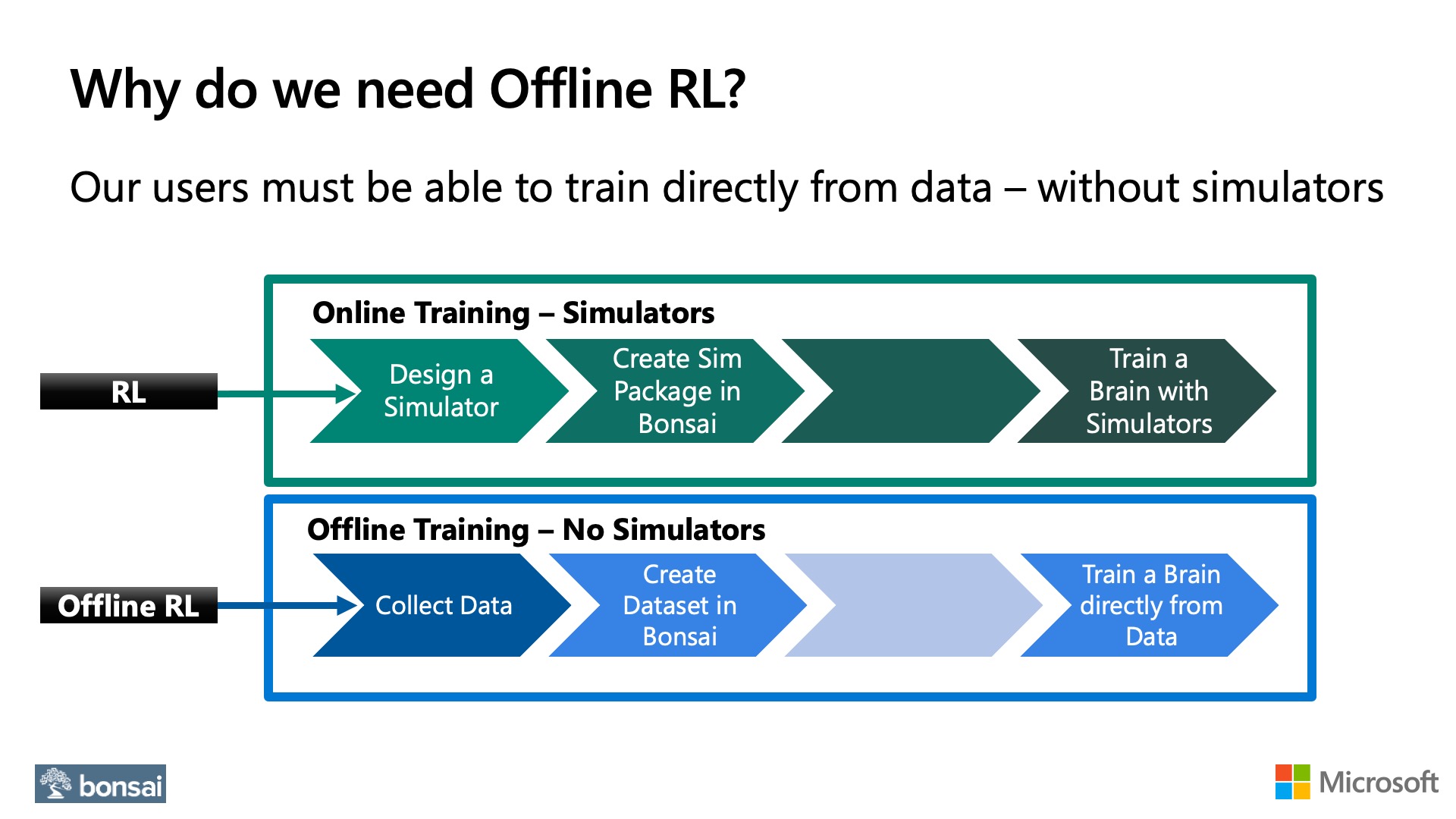

Offline Reinforcement Learning with RLlib (Microsoft)

The need for an environment is a constraint that prevents the application of reinforcement learning in fields where having a simulator is difficult or infeasible, such as healthcare.

Reinforcement learning is a fast-growing field that is starting to make an impact across different engineering areas. However, reinforcement learning is typically framed as an online learning approach in which an environment (simulated or real) is required during the learning process. The need for an environment is a constraint that often prevents the application of reinforcement learning in fields where having a simulator is difficult or infeasible, such as healthcare.

Check out Edi Palencia’s highly practical talk, “Offline Reinforcement Learning with RLlib”, which covers how to apply reinforcement learning with RLlib to AI/ML problems where the only available resource is a dataset, i.e., a recording of an agent's interactions with an environment.
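To make the idea of learning from "a recording of interactions" concrete, here is a minimal toy sketch in plain Python. It is not RLlib's API and not the method presented in the talk: it assumes a tiny tabular problem and runs batch Q-learning over a fixed list of logged transitions, never calling an environment. The `Transition` type, the function names, and the three-state chain are all hypothetical and exist only for illustration.

```python
from collections import defaultdict
from typing import NamedTuple


class Transition(NamedTuple):
    """One logged interaction: what the agent saw, did, and received."""
    state: int
    action: int
    reward: float
    next_state: int
    done: bool


def offline_q_learning(dataset, num_actions, gamma=0.9, lr=0.5, epochs=200):
    """Fit a tabular Q-function by sweeping repeatedly over logged data only."""
    q = defaultdict(lambda: [0.0] * num_actions)
    for _ in range(epochs):
        for t in dataset:
            # Bootstrap from the next state unless the episode ended there.
            target = t.reward if t.done else t.reward + gamma * max(q[t.next_state])
            q[t.state][t.action] += lr * (target - q[t.state][t.action])
    return q


def greedy_action(q, state):
    """Pick the action with the highest estimated value in this state."""
    values = q[state]
    return max(range(len(values)), key=values.__getitem__)


# Hypothetical log from a 3-state chain: action 1 moves right, action 0 stays.
# Reaching state 2 ends the episode with reward 1.
logged = [
    Transition(0, 0, 0.0, 0, False),
    Transition(0, 1, 0.0, 1, False),
    Transition(1, 0, 0.0, 1, False),
    Transition(1, 1, 1.0, 2, True),
]

q_table = offline_q_learning(logged, num_actions=2)
policy = {s: greedy_action(q_table, s) for s in (0, 1)}
print(policy)
```

The learned policy moves right in both states even though no simulator was ever queried. Real offline RL has to deal with much larger state spaces and with actions the dataset never covers, which this sketch ignores.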

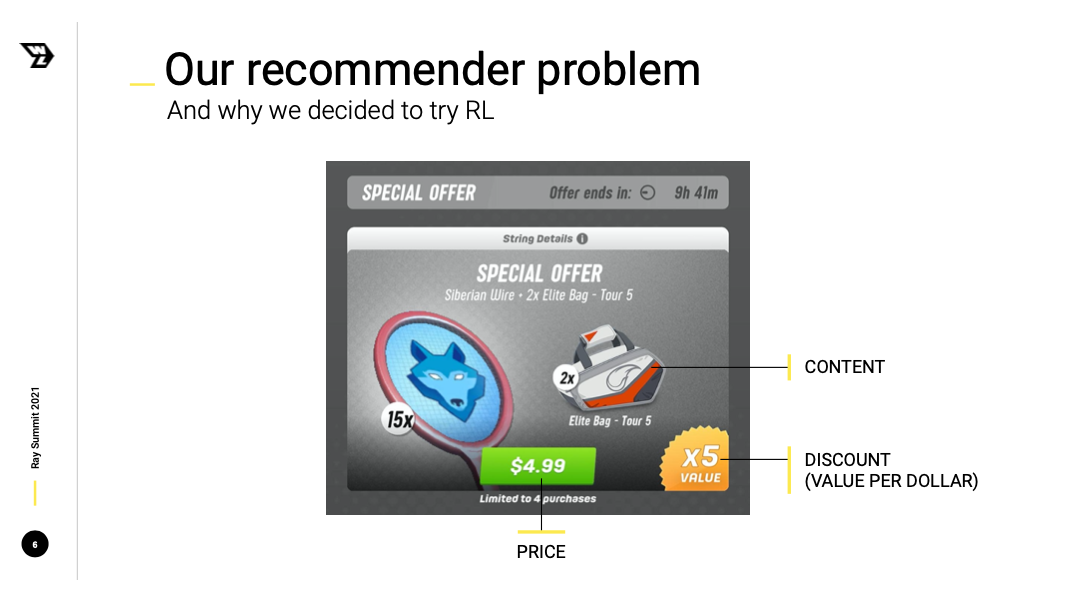

Reinforcement Learning to Optimize IAP Offer Recommendations in Mobile Games

Learn how Wildlife Studios provides personalized offer recommendations to each player.

A large part of mobile game revenue comes from In-App Purchases (IAP), and offers play an important role in it. Offers are sales opportunities that present a set of virtual items (gems, for example) at a discount compared to regular purchases in the game store. A key goal for Wildlife Studios is to determine, for any given player at any given time, the best offer to show in order to maximize long-term profits. Check out Wildlife Studios' talk "Using Reinforcement Learning to Optimize IAP Offer Recommendations in Mobile Games" to learn how they use reinforcement learning (RL) algorithms and Ray to tackle this problem, from formulating the problem and setting up their clusters to deploying the RL agents in production. This talk was very popular with both reinforcement learning and game enthusiasts!
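The emphasis on long-term rather than immediate profit is what makes this a reinforcement learning problem. The sketch below illustrates that distinction with a standard discounted return. It is not Wildlife Studios' formulation: the revenue sequences, the discount factor, and the names are all made up for illustration.

```python
def discounted_return(revenues, gamma=0.95):
    """Sum per-step revenues, weighting later steps by powers of gamma."""
    total = 0.0
    for revenue in reversed(revenues):
        total = revenue + gamma * total
    return total


# Hypothetical per-session revenue from one player under two offer strategies.
steep_discount_now = [5.0, 0.0, 0.0, 0.0]  # one large sale, then nothing
steady_offers = [2.0, 2.0, 2.0, 2.0]       # smaller sales that keep coming

print(discounted_return(steep_discount_now))
print(discounted_return(steady_offers))
```

In this made-up example, a policy that only maximized the first step's revenue would prefer the first strategy, while one that maximizes discounted return prefers the second.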

Neural MMO: Building a Massively Multiagent Research Platform with Ray and RLlib

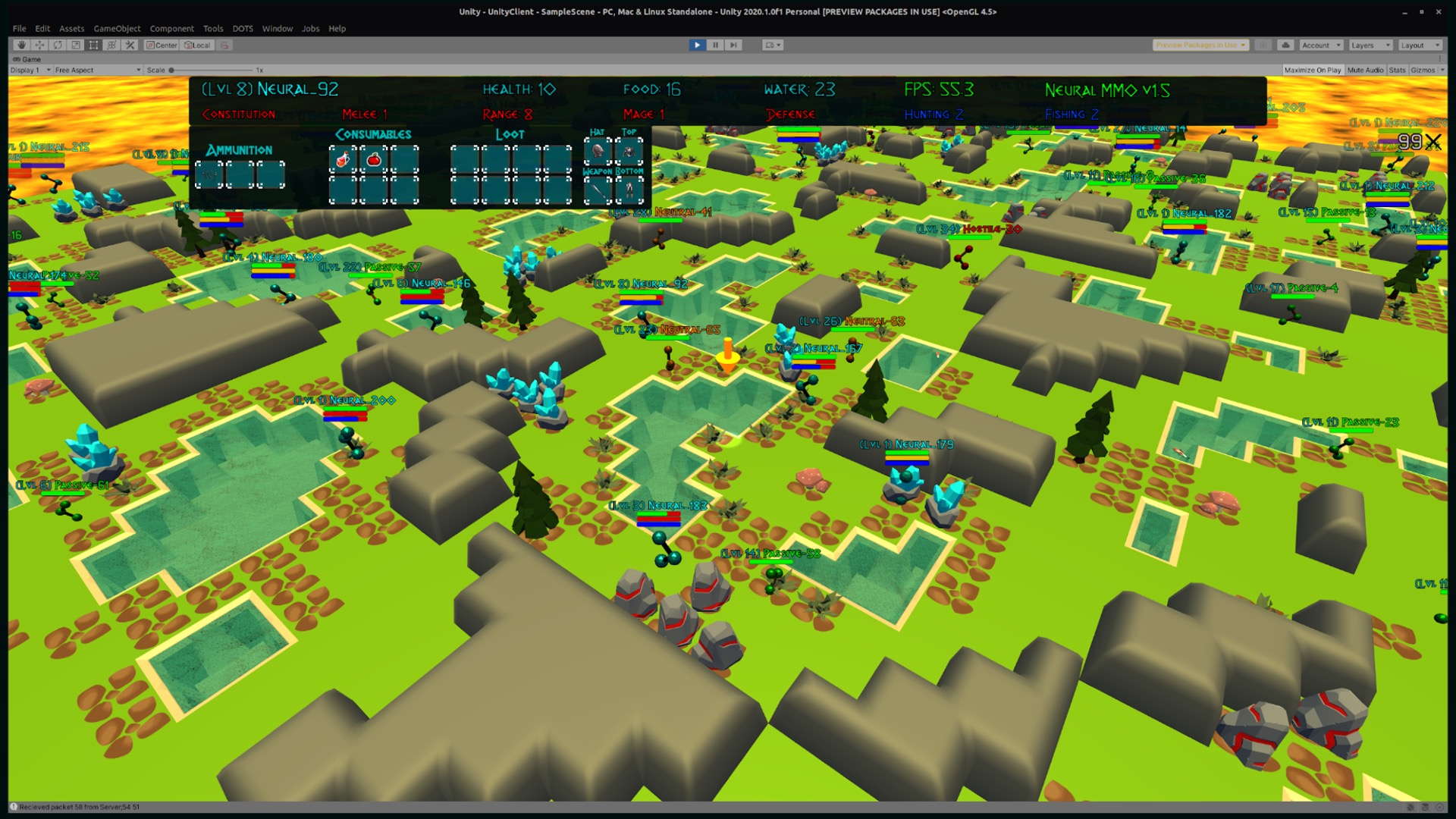

Neural MMO is an environment modeled on Massively Multiplayer Online games, a genre supporting hundreds to thousands of concurrent players.

Reinforcement learning has solved Go, Dota, and StarCraft, some of the hardest, most strategy-intensive games for humans. Nonetheless, these games are missing fundamental aspects of real-world intelligence: large agent populations, ad hoc collaboration vs. competition, and extremely long time horizons, among others. Joseph Suarez’s talk started by introducing Neural MMO, an environment modeled on Massively Multiplayer Online games. The talk then discussed how Ray + RLlib enables some of the project's key features.

Conclusion

This article highlighted only a few of the most-watched reinforcement learning talks from Ray Summit 2021. Ray Summit also had many impressive machine learning talks, which a previous post covered. If you would like to learn how to get started with RLlib and its best practices, check out the RLlib tutorial from Sven Mika, lead maintainer of RLlib. If you would like to keep up to date with all things Ray, consider following @raydistributed on Twitter, signing up for the newsletter, and attending the next Ray Summit.