What’s new in the Ray Distributed Library Ecosystem

The Ray community of users, contributors, libraries, and production use cases has grown substantially since we first described the Ray ecosystem over a year ago. The majority of Ray users continue to come from libraries and third-party integrations: developers who use Ray-native libraries or tools that integrate with Ray. Besides those library users, Ray has three main groups of users: those who need to scale a Python program or application, those who need to create scalable, high-performance libraries, and those who are gluing Ray libraries together to build an application.

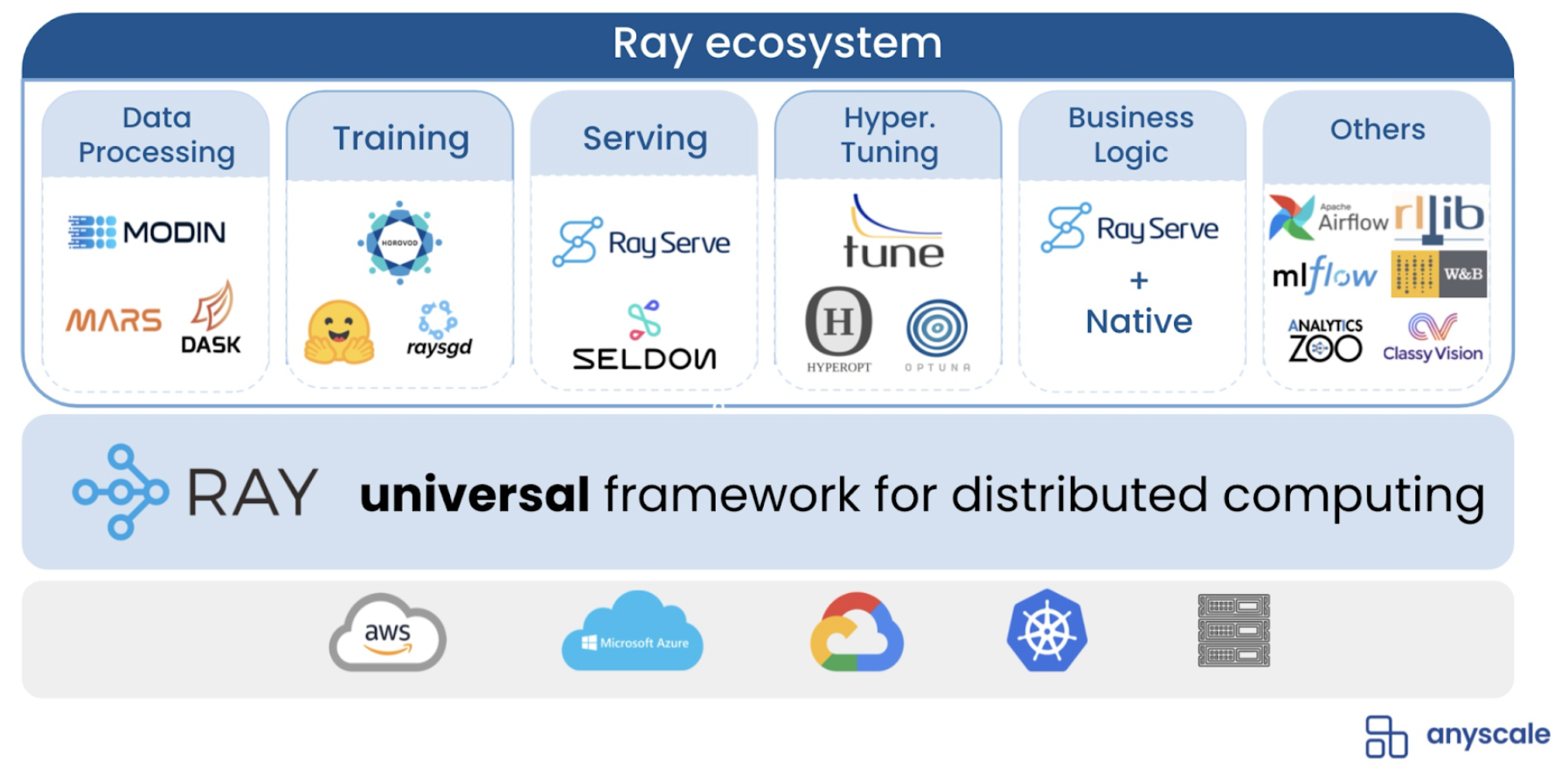

Building AI and machine learning applications with Ray becomes easier and more efficient as the Ray ecosystem grows and matures. Ray's greatest advantage is that integrated libraries do not exist in isolation - they can be used together seamlessly in a single application. This allows developers to use a single distributed computing system - Ray - to implement any machine learning and AI application.

External libraries can take advantage of Ray's elasticity and autoscaling, and also hook into other libraries, such as Ray Tune for hyperparameter tuning and Ray Serve for model serving.

Applications benefit from significantly reduced overhead incurred from sharing distributed objects, or exchanging data between systems that store data in different formats.

Ray enables easy 'gluing' together of distributed libraries in code that appears like standard Python, but is highly performant.
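As a rough illustration of code that "appears like standard Python", the sketch below turns an ordinary function into a Ray task and runs it in parallel. The names `square` and `parallel_squares` are hypothetical and chosen only for this example. It assumes Ray is installed (`pip install ray`) and falls back to a plain serial loop when it is not, so the snippet still runs on a machine without Ray.

```python
try:
    import ray  # assumption: Ray is installed locally
except ImportError:
    ray = None  # fallback: run serially without Ray


def square(x: int) -> int:
    """Ordinary Python function; nothing Ray-specific here."""
    return x * x


def parallel_squares(values):
    """Square each value, using Ray tasks when Ray is available."""
    if ray is None:
        return [square(v) for v in values]

    ray.init(ignore_reinit_error=True)
    # ray.remote wraps the plain function so it can run as a distributed task.
    remote_square = ray.remote(square)
    # .remote() schedules a task and immediately returns an object reference.
    refs = [remote_square.remote(v) for v in values]
    # ray.get blocks until all results are ready, in submission order.
    results = ray.get(refs)
    ray.shutdown()
    return results


results = parallel_squares([1, 2, 3, 4])
print(results)
```

The only Ray-specific lines are the `ray.remote` wrapper, the `.remote()` call, and `ray.get`; the function body itself is unchanged.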

This post provides updates to existing libraries and highlights recent additions to Ray's ecosystem. We make the case that the evolution and expansion of the ecosystem will make Ray's collection of libraries the dominant foundation for end-to-end machine learning applications and platforms.

Ray Ecosystem, from Ion Stoica’s keynote at Ray Summit 2021.

Update on Existing Ray Libraries and Integrations with External Libraries

In the machine learning model building process, hyperparameter optimization (HPO) is a computationally intensive and crucial step. HPO involves finding optimal settings for configuring or describing a model. It is rare for developers to write their own HPO code, since they typically have access to a number of open source or proprietary tools available on-premise or in the cloud. About a year ago Max Pumperla, the maintainer of HyperOpt (a popular open source HPO library), remarked that Ray Tune was poised to be the leading hyperparameter tuning library in the future. Judging by the number of Ray Tune related presentations at the 2021 Ray Summit, it appears to be gaining a foothold in the machine learning community.

Another Ray library has also been gaining high-profile, real-world use cases. The reinforcement learning library RLlib was instrumental in helping design the winning boat at the most recent America’s Cup. McKinsey / QuantumBlack used RLlib to design an AI agent that could sail a boat like a world-class sailor in order to test various hydrofoil designs. This AI-driven "sailor bot" sped up design testing by 10x and enabled 24x7 testing, which is crucial when you are literally racing against time to design the best boat. Another recent example is a recommendation system developed by Wildlife Studios. The company used RLlib to optimize offers for in-app purchases in mobile games.

Ray Serve is a relatively new model serving library built on top of Ray that is already in use at companies like Wildlife Studios, Widas, Dendra Systems, and Ikigai Labs. Ray Serve was used by Wildlife Studios to parallelize model inference and enable model disaggregation and model composition (splitting the models into separate components and running them in parallel). Ikigai recently wrote about how they use Ray to build interactive data processing pipelines, and in the process they highlighted one of Ray Serve’s core advantages: Ray Serve can be used not only to serve models, but also arbitrary Python code and microservices applications. Its combination of flexibility and scalability will make Ray Serve one of the most popular open source model serving systems.

The last twelve months also saw the strengthening of integrations with external libraries that are widely used for machine learning and data science. These include the introduction of Dask on Ray, the release of a Ray provider for Apache Airflow, an integration with MLflow (Tracking and Models), and a Ray + Optuna integration. At the 2021 Ray Summit, Patrick Ames of Amazon described how they managed to speed up an important Dask data processing workload by up to 13X by deploying Dask on Ray. Amazon was also able to scale the cluster they were using to one that was seven times larger.

Other recent integrations brought the power of Ray’s distributed computing engine to popular open source machine learning libraries. XGBoost on Ray and LightGBM-Ray provide users of two popular ML libraries with multi-node and multi-GPU training, distributed data loading and distributed dataframes, advanced fault tolerance, elastic training, and seamless integration with Ray Tune. Uber is using both XGBoost on Ray and Horovod on Ray in production. A new plugin for PyTorch Lightning, a popular deep learning library, allows easy parallel training while retaining all of PyTorch Lightning's benefits.

New Ray Libraries

Ray Datasets

Dask, PySpark, and Modin are just some of the libraries Ray developers turn to for their data processing needs. Ray allows data processing code written using these libraries to run faster and scale to larger clusters. According to Amazon, they managed to speed up an important large-scale data processing workload by up to 13X by deploying Dask on Ray.

But the use of external libraries also means that libraries built on Ray don't have a standard way to interoperate. Ray Datasets (RD) is a high-level library that addresses this limitation. Users can create Ray Datasets from data processing frameworks like Pandas, Modin, Spark on Ray, or Dask on Ray. RD is a new abstraction that allows Ray applications to seamlessly process and exchange terabyte-scale datasets. It provides developers with a Ray-native I/O system so they can efficiently ingest and move data between libraries. RD also provides the basic data transformations (map, filter, flat_map) needed for routine data processing and machine learning applications.
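To make the three transformations concrete, here is a minimal sketch that chains `map`, `filter`, and `flat_map` on a tiny in-memory dataset. The row functions (`double`, `is_multiple_of_four`, `with_negative`) and the `run_pipeline` helper are hypothetical names for this example. The sketch assumes a Ray installation with Ray Datasets available (`pip install "ray[data]"`) and rows represented as dictionaries; the exact row format has varied between Ray versions, so treat this as an approximation rather than the definitive API. Without Ray it falls back to equivalent list operations.

```python
try:
    import ray  # assumption: Ray with the Datasets extras is installed
except ImportError:
    ray = None  # fallback: emulate the pipeline with plain lists


def double(row):
    """map: one row in, one row out."""
    return {"x": row["x"] * 2}


def is_multiple_of_four(row):
    """filter: keep only rows for which this returns True."""
    return row["x"] % 4 == 0


def with_negative(row):
    """flat_map: one row in, zero or more rows out."""
    return [{"x": row["x"]}, {"x": -row["x"]}]


def run_pipeline(rows):
    if ray is None:
        doubled = [double(r) for r in rows]
        kept = [r for r in doubled if is_multiple_of_four(r)]
        out = [o for r in kept for o in with_negative(r)]
    else:
        ray.init(ignore_reinit_error=True)
        ds = ray.data.from_items(rows)
        ds = ds.map(double).filter(is_multiple_of_four).flat_map(with_negative)
        out = ds.take(100)  # small dataset, so fetch every row
        ray.shutdown()
    # Sort because row order is not something this sketch relies on.
    return sorted(int(row["x"]) for row in out)


values = run_pipeline([{"x": i} for i in range(1, 5)])
print(values)
```

Because the row functions are ordinary Python callables, the same logic can be unit-tested locally and then applied to a much larger dataset on a cluster.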

Ray Workflows

A typical machine learning project involves data processing, model training, model testing, and model inference. Developers focus on building operators for each stage, then combine these operators into a pipeline using a workflow management framework. For example, Kubeflow was created to make it easy for developers who know little about containers or Kubernetes to build and deploy machine learning pipelines and projects on Kubernetes. Developers who use the Julia programming language can use Dagger.jl to execute pipelines composed of operators built from Julia packages for data science and machine learning.

As Ray is widely used to develop large-scale machine learning products and services, it makes sense to make similar capabilities available to Ray users. At the recent Ray Summit, there were numerous presentations by users who built ML pipelines or even machine learning platforms using Ray’s ecosystem of libraries. Ray Workflows is a newly released library that lets developers easily build end-to-end, Ray-native pipelines. With Ray Workflows, developers can focus on optimizing and tuning individual pipeline operators rather than having to install and learn an external workflow management system. Ray Workflows lets developers use Ray distributed libraries seamlessly within workflow steps.

Note that Ray Workflows is still in alpha. To learn more, check out the documentation or join the Ray Slack channel.

Closing Thoughts

A diverse collection of organizations is choosing Ray as the foundation for their machine learning applications and platforms. One of the reasons behind this groundswell is that the Ray ecosystem of libraries and integrations now covers all aspects of scaling AI and machine learning: there are Ray libraries for data processing, training and tuning, and model inference and deployment. In addition, Ray’s simple API means that Python developers can scale their existing applications with minor modifications. Ray also has formal integrations with major libraries in the data and machine learning ecosystem, with more planned over the next few months.

If you would like to keep up to date with all things Ray, you can follow the project on Twitter or sign up for the Ray Newsletter.