The Ideal Foundation for a General Purpose Serverless Platform

Why Ray is poised to play a central role in future serverless offerings

The history of computing infrastructure is one of steady improvements over extended periods. Cloud computing brought several capabilities that we now take for granted, including the elimination of upfront investments in hardware, the ability to pay for compute resources on an as-needed basis, and elasticity. The move to the cloud has accelerated in recent years, and many companies now use a mix of on-premises infrastructure and multiple cloud providers.

In 2014, AWS introduced Lambda, a service that offered cloud functions and introduced the concept of serverless computing. Serverless and cloud functions differ from traditional cloud computing in two critical ways: (1) serverless abstracts away infrastructure (scaling, provisioning servers), freeing developers to focus on writing programs, and (2) serverless bills in finer-grained increments (sub-second, compared to traditional cloud computing, which uses minutes).

Since the introduction of AWS Lambda, interest in serverless and cloud functions has grown and all major cloud providers now have similar offerings. Experts predict that serverless will continue to grow in importance and more applications will use serverless computing platforms in the years ahead. But we are still in the early stages of serverless computing. A widely read 2019 paper (“A Berkeley View on Serverless Computing”) described “challenges and research opportunities that need to be addressed for serverless computing to fulfill its promise”.

In this post we examine some limitations of current cloud functions (also referred to as FaaS or serverless). We note that the distributed computing framework Ray addresses many of these challenges and argue that Ray is the right foundation for a general purpose serverless framework.

Serverless: Special Purpose vs General Purpose

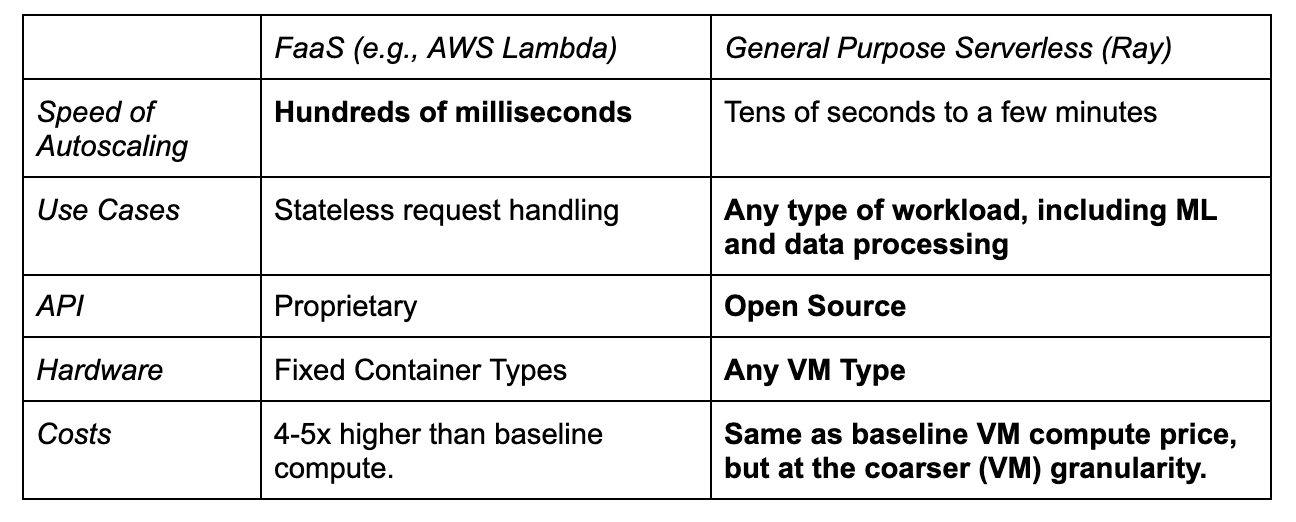

There have been multiple efforts to expand the scope of serverless. Projects like ExCamera, PyWren, TF-on-serverless, and Spark-on-Lambda try to run more complex workloads on FaaS platforms like Lambda. Several industry platforms provide serverless-like capabilities and user experiences, including Amazon Aurora, Databricks, BigQuery, and others. However, these offerings generally target specific workloads and do not aim to run arbitrary applications. A general purpose serverless framework, by contrast, would run arbitrary applications. The accompanying table defines the characteristics of such a framework (Ray), along with key tradeoffs.

Why Ray for General Purpose Serverless?

Ray hides servers

Like existing FaaS platforms, Ray abstracts away servers from the applications. With version 1.0, users do not need to specify the cluster size or the type of instances when launching an application. Instead, they only need to provide Ray with the set of available resources (e.g., a list of instance types in AWS or a list of nodes and their capabilities in an on-prem cluster) and Ray will automatically pick the instance types and dynamically scale up and down to match the application demands.

Ray supports stateful applications

Serverless platforms scale and provision storage and compute separately, so computations on serverless platforms are stateless. Cloud functions do not retain state from previous invocations. The typical flow is to run a computation (a function call) and write the results to a storage service; if needed, another function can then read that output and use it.

The actor model is a powerful programming paradigm built around message passing, and it works the same way whether actors are local or remote. Ray supports actors, which are stateful workers (or services). Having a serverless platform that can support stateful computations dramatically increases the number of possible applications. Stateful operators allow Ray to efficiently support streaming computations, machine learning model serving, and web applications. In fact, there are already companies that use Ray in applications that combine both streaming and machine learning. For example, Ant Group “built a multi-paradigm fusion engine on top of Ray that combines streaming, graph processing, and machine learning in a single system to perform real-time fraud detection and online promotion.”

Ray supports direct communication between tasks

Almost every distributed application, such as streaming or data processing, exchanges data between tasks. Unfortunately, existing serverless platforms do not allow direct communication between functions. The only way two functions can communicate with each other is via a cloud storage system like S3, which is slow and expensive.

In contrast, Ray enables arbitrary tasks and actors to communicate with each other. This enables applications to efficiently exchange data and implement arbitrary communication patterns.

Ray lets developers access hardware accelerators

Developers know what type of hardware resources best serve their applications, so giving them the ability to control resources is an important feature of any compute platform. FaaS services currently let developers specify execution time limits and memory sizes. But in some applications, notably machine learning model training, developers need to access specific accelerators (GPUs, TPUs, etc.). At this time FaaS providers do not offer this level of control over resources.

A serverless platform built on top of Ray would have no limitations in terms of specifying resources. Developers who use Ray can already describe the hardware resources they need (number of CPUs, GPUs, TPUs, and other hardware accelerators). Future versions of Ray will even allow developers to specify the precise type of chip they prefer (e.g., “two V100 GPUs”).

Ray provides an open source API

There is no “standard API” for writing serverless applications. We believe that Ray is a strong candidate for such an open, serverless API. Ray allows developers to write general programs, not just functions. It combines support for both stateless and stateful applications and access to widely used programming languages (Python and Java, with more to follow). In fact, Ray is usually described as a distributed computing platform that can be used to scale applications with minimal effort.

Ray supports fine-grained coordination and control, which can lead to better performance

There are many applications where control over data locality and scheduling is critical. This includes the broad range of applications that need to distribute and share data across compute nodes. Current serverless offerings are a poor fit for this class of applications. For example, a developer who uses serverless to perform a gradient computation in machine learning will not have control over where data and cloud functions are located. Another example comes from reinforcement learning, where developers will want to use the same compute node for training policies and performing rollouts.

Ray overcomes these limitations by providing data locality and scheduling control. A developer who uses Ray can specify which actors should run on the same machine.

Ray has no runtime limits

Current offerings (Lambda, Cloud Functions, Azure Functions) have execution times that are capped at around 15 minutes or less. This limits the types of applications that a FaaS platform can support. In contrast, since Ray runs on top of existing clouds or clusters, there are no time limits.

But what about costs?

One of the most popular aspects of serverless is that users are billed based on usage. Serverless platforms use accounting units that are measured in milliseconds, compared to traditional cloud computing which uses minutes.
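To see why the accounting unit matters, consider a short job billed under two different increments. The numbers below are hypothetical (a 1.2-second job, a 100 ms increment, and a 1-minute increment) and are not taken from any provider's price list.

```python
def billed_ms(duration_ms: int, increment_ms: int) -> int:
    """Round a duration up to the next whole billing increment."""
    increments = -(-duration_ms // increment_ms)  # integer ceiling division
    return increments * increment_ms

job_ms = 1_200  # hypothetical job that runs for 1.2 seconds

fine_grained = billed_ms(job_ms, 100)        # billed in 100 ms units
coarse_grained = billed_ms(job_ms, 60_000)   # billed in 1-minute units

# How much more time is billed under the coarse increment.
overhead = coarse_grained / fine_grained
print(fine_grained, coarse_grained, overhead)
```

For short, bursty work, the coarse increment bills for far more time than the job actually used; for jobs that run for many minutes, the difference becomes negligible.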

Cloud providers charge at the level of an instance. As a software layer, (open source) Ray by itself cannot instantiate and release compute resources quickly enough to deliver fine-grained accounting units. For example, if you have a single actor running on a server with 32 cores, your cloud provider will likely charge you for all 32 cores. Ray does not address this discrepancy between utilization and cost. Getting to fine-grained accounting units would require building a serverless platform on top of Ray.

With that said, Ray can automatically allocate new instances and shut down existing instances in minutes. Thus, Ray can already provide serverless functionality for coarse-grained jobs that run for, say, 30 minutes. Examples of coarse-grained jobs include streaming, model training, and model serving.

Finally, Ray also has the potential to provide substantial cost savings for coarse-grained workloads. The cost of using cloud functions can be 4x-5x higher than a cloud computing instance operating at 100% utilization. Indeed, at the time of writing, an AWS Lambda function with 3GB RAM costs $0.0000048958 per 100ms, or $0.1762488/hour. In comparison, a t3.medium instance with 4GB RAM costs just $0.0416/hour, which is 4.2x cheaper; on a per-GB-of-RAM basis, it is 5.65x cheaper. This understates the gap because it does not include Lambda's per-request costs.
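The arithmetic behind these ratios can be reproduced in a few lines. The prices are the ones quoted above at the time of writing; the variable names are only for illustration.

```python
# Prices quoted in the post (at the time of writing).
lambda_per_100ms = 0.0000048958   # USD, 3 GB RAM Lambda function
lambda_ram_gb = 3
t3_medium_per_hour = 0.0416       # USD, 4 GB RAM instance
t3_medium_ram_gb = 4

# There are 36,000 increments of 100 ms in one hour.
lambda_per_hour = lambda_per_100ms * 10 * 3600

# Ratio of hourly prices, ignoring the difference in RAM.
hourly_ratio = lambda_per_hour / t3_medium_per_hour

# Ratio of hourly prices per GB of RAM.
per_gb_ratio = (lambda_per_hour / lambda_ram_gb) / (t3_medium_per_hour / t3_medium_ram_gb)

print(f"Lambda: ${lambda_per_hour:.7f}/hour")
print(f"Hourly ratio: {hourly_ratio:.1f}x, per-GB ratio: {per_gb_ratio:.2f}x")
```

The comparison assumes the instance runs at 100% utilization and leaves out Lambda's per-request charges.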

Summary

As far back as 2016, experts were already noting how serverless tools enable teams to quickly build highly functional and scalable applications. Since then, a growing number of developers have turned to serverless technologies to build applications. But for serverless to live up to its promise, current offerings (cloud functions) need to address the limitations listed in this post.

Companies are still in the early stages of exploring serverless technologies. We believe that Ray is the ideal foundation for a general purpose serverless framework. With the rise of AI and other data intensive applications, Ray is poised to play a central role in future serverless offerings.