Introducing the Ray Kubectl Plugin: A Simpler Way to Manage Ray Clusters on Kubernetes

Special thanks to these KubeRay contributors: Anyscale (Chi-Sheng Liu), Google (Aaron Liang), and Spotify (David Xia).

Preface

Ray has quickly become the go-to distributed computing engine for AI and machine learning, offering a powerful runtime and a suite of AI libraries to accelerate ML workloads. When deployed on Kubernetes, Ray users can take advantage of both its seamless development experience and Kubernetes' robust, production-grade orchestration. To make the most of this powerful combination, KubeRay was created to streamline the experience of running Ray on Kubernetes.

KubeRay, an open-source project, simplifies the process of deploying and managing Ray clusters on Kubernetes by handling the underlying complexities. However, many data scientists and AI researchers, who may not be familiar with Kubernetes, find it challenging to get started with Ray in such an environment. While Kubernetes offers significant advantages for running Ray at scale, its steep learning curve can be a barrier. To bridge this gap, the Ray kubectl plugin was introduced.

With KubeRay v1.3, we are excited to promote the Ray kubectl plugin to Beta. This release brings improved stability and new commands, enhancing the overall user experience. We have refined existing commands like kubectl ray log, kubectl ray session, and kubectl ray job submit, enabling users to connect to Ray clusters, submit jobs, and retrieve logs more seamlessly. Additionally, we introduced new commands such as kubectl ray create cluster and kubectl ray create workergroup, allowing users to create Ray clusters and add worker groups without manually editing YAML files.

In this post, we’ll explore how the Ray kubectl plugin simplifies cluster management and enhances the Ray experience for Kubernetes users of all levels.

Ray Kubectl-plugin Deep Dive

First, let’s install the kubectl plugin. You can download it directly from the GitHub release, extract the kubectl-ray binary and put it in your PATH. However, we recommend installing it using the Krew plugin manager.

    $ kubectl krew update
    $ kubectl krew install ray

Verify the installation by running:

    $ kubectl ray --help

Let's dive into the create cluster command. To create a Ray cluster with kubectl ray create cluster, run the command with flags that define the shape of the Ray cluster. For example:

    $ kubectl ray create cluster sample-cluster --head-cpu 1 \
        --head-memory 5Gi \
        --worker-replicas 1 \
        --worker-cpu 1 \
        --worker-memory 5Gi
    Created Ray Cluster: sample-cluster

We have default values in place in case certain flags are not set:

| Parameter | Default Value |
| --- | --- |
| Ray version | 2.41.0 |
| Ray image | rayproject/ray:<ray version> |
| Head CPU | 2 |
| Head memory | 4Gi |
| Head GPU | 0 |
| Worker replicas | 1 |
| Worker CPU | 2 |
| Worker memory | 4Gi |
| Worker GPU | 0 |
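To make the interaction between flags and defaults concrete, here is a minimal Python sketch. It uses only the flags shown in this post and the default values from the table above. The names `DEFAULTS`, `effective_shape`, and `build_create_cluster_args` are hypothetical helpers for illustration, not part of the plugin, which applies its defaults internally.

```python
import shlex

# Defaults copied from the table above; only flags shown in this post are covered.
DEFAULTS = {
    "head-cpu": "2",
    "head-memory": "4Gi",
    "worker-replicas": "1",
    "worker-cpu": "2",
    "worker-memory": "4Gi",
}


def effective_shape(overrides):
    """Return the cluster shape after layering overrides on top of the defaults."""
    unknown = set(overrides) - set(DEFAULTS)
    if unknown:
        raise ValueError(f"flags not covered by this sketch: {sorted(unknown)}")
    return {**DEFAULTS, **{k: str(v) for k, v in overrides.items()}}


def build_create_cluster_args(name, overrides):
    """Build the argv for `kubectl ray create cluster`, passing only explicit overrides."""
    effective_shape(overrides)  # validates the flag names
    args = ["kubectl", "ray", "create", "cluster", name]
    for flag in DEFAULTS:  # iterate DEFAULTS to keep a stable flag order
        if flag in overrides:
            args += [f"--{flag}", str(overrides[flag])]
    return args


if __name__ == "__main__":
    overrides = {"head-cpu": 1, "head-memory": "5Gi"}
    # Print the command instead of running it, so the sketch needs no cluster.
    print(shlex.join(build_create_cluster_args("sample-cluster", overrides)))
    print(effective_shape(overrides))
```

Flags left out of `overrides` are simply not passed, so the plugin's own defaults would apply to them.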

With the create cluster command, you can also pass the --dry-run flag to print the YAML instead of creating the cluster. This lets you make any necessary changes and additions before running kubectl apply.

By default, create cluster creates one worker group. The new create workergroup command adds further worker groups to an existing Ray cluster.

    $ kubectl ray create workergroup example-group --ray-cluster sample-cluster --worker-cpu 2 --worker-memory 5Gi
    Updated Ray cluster default/sample-cluster with new worker group

We can use kubectl ray get cluster and kubectl ray get workergroup to query information about Ray clusters and worker groups.

    $ kubectl ray get cluster
    NAME             NAMESPACE   DESIRED WORKERS   AVAILABLE WORKERS   CPUS   GPUS   TPUS   MEMORY   AGE
    sample-cluster   default     2                 2                   4      0      0      15Gi     32s

    $ kubectl ray get workergroup
    NAME            REPLICAS   CPUS   GPUS   TPUS   MEMORY   CLUSTER
    default-group   1/1        1      0      0      5Gi      sample-cluster
    example-group   1/1        2      0      0      5Gi      sample-cluster

    $ kubectl get pods
    NAME                                        READY   STATUS    RESTARTS   AGE
    sample-cluster-default-group-worker-rqklx   1/1     Running   0          55s
    sample-cluster-example-group-worker-nx4mg   1/1     Running   0          47s
    sample-cluster-head-dsbnm                   1/1     Running   0          55s
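The cluster-level totals in the get cluster output (4 CPUs, 15Gi of memory) are consistent with summing the head pod and both worker groups. The short Python check below works through that arithmetic using the values from the commands above. That the plugin computes its totals this way is an assumption based on the sample output, not a statement about its implementation.

```python
# Per-pod resources taken from the create commands and output in this post.
pods = [
    {"name": "head", "replicas": 1, "cpu": 1, "memory_gi": 5},
    {"name": "default-group", "replicas": 1, "cpu": 1, "memory_gi": 5},
    {"name": "example-group", "replicas": 1, "cpu": 2, "memory_gi": 5},
]


def cluster_totals(pods):
    """Sum CPU and memory (in Gi) across all pods, weighting by replica count."""
    total_cpu = sum(p["cpu"] * p["replicas"] for p in pods)
    total_memory_gi = sum(p["memory_gi"] * p["replicas"] for p in pods)
    return total_cpu, total_memory_gi


if __name__ == "__main__":
    cpu, memory_gi = cluster_totals(pods)
    print(f"CPUS={cpu} MEMORY={memory_gi}Gi")  # CPUS=4 MEMORY=15Gi
```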

The kubectl ray session command can forward local ports to Ray resources, allowing users to avoid remembering which ports are exposed by RayCluster, RayJob, or RayService. It now supports automatic reconnection in the event of pod disruptions or port-forward failures, ensuring uninterrupted access to the Ray cluster.

    $ kubectl ray session sample-cluster
    Forwarding ports to service sample-cluster-head-svc
    Ray Dashboard: http://localhost:8265
    Ray Interactive Client: http://localhost:10001

You can then open http://localhost:8265 in your browser to access the dashboard.
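If a script depends on the forwarded ports, it can be handy to confirm they are reachable first. The following is a minimal standard-library sketch. The helper `port_is_open` is a hypothetical name, and the ports are the ones printed in the session output above.

```python
import socket


def port_is_open(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers connection refused, timeouts, and unreachable hosts.
        return False


if __name__ == "__main__":
    # Ports as printed by `kubectl ray session` in the example above.
    for label, port in [("Ray Dashboard", 8265), ("Ray Interactive Client", 10001)]:
        state = "reachable" if port_is_open("localhost", port) else "not reachable"
        print(f"{label} on localhost:{port} is {state}")
```

This only checks that something accepts TCP connections on the port; it does not verify that the listener is actually Ray.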

The kubectl ray log command now covers all Ray resource types, including Ray services and Ray jobs. With logs available for each type, developers can more easily debug issues and monitor the progress of their Ray applications.

    $ kubectl ray log sample-cluster
    No output directory specified, creating dir under current directory using resource name.
    Command set to retrieve both head and worker node logs.
    Downloading log for Ray Node sample-cluster-default-group-worker-rqklx
    Downloading log for Ray Node sample-cluster-example-group-worker-nx4mg
    Downloading log for Ray Node sample-cluster-head-dsbnm

A folder named sample-cluster will be created in the current directory containing the cluster’s logs.
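Once the logs are downloaded, a quick summary can help locate the node worth inspecting. The sketch below walks the output folder with pathlib. It assumes, without confirmation from this post, that the folder holds one entry per Ray node; `summarize_logs` is a hypothetical helper name.

```python
from pathlib import Path


def summarize_logs(root):
    """Map each top-level entry under root to (file count, total size in bytes)."""
    summary = {}
    for entry in sorted(Path(root).iterdir()):
        if entry.is_dir():
            files = [p for p in entry.rglob("*") if p.is_file()]
        else:
            files = [entry]
        summary[entry.name] = (len(files), sum(p.stat().st_size for p in files))
    return summary


if __name__ == "__main__":
    log_dir = Path("sample-cluster")  # folder created by `kubectl ray log sample-cluster`
    if log_dir.is_dir():
        for name, (count, size) in summarize_logs(log_dir).items():
            print(f"{name}: {count} files, {size} bytes")
    else:
        print(f"{log_dir} not found; run kubectl ray log first")
```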

We’ve also made improvements to kubectl ray job submit. Originally, users had to provide a RayJob YAML in order to submit a Ray job, but in the latest release the plugin can create one on the fly. Similar to kubectl ray create cluster, users can also set flags to determine the shape of the Ray cluster for the Ray job.

1$ mkdir working_dir

2$ cd working_dir

3$ cat > example.py <<EOF

4import ray

5ray.init()

6

7@ray.remote

8def f(x):

9 return x * x

10

11futures = [f.remote(i) for i in range(4)]

12print(ray.get(futures)) # [0, 1, 4, 9]

13EOF

14

15$ kubectl ray job submit --name rayjob-sample --working-dir . -- python example.py

16Submitted RayJob rayjob-sample.

17Waiting for RayCluster

18...

192025-01-06 11:53:34,806 INFO worker.py:1634 -- Connecting to existing Ray cluster at address: 10.12.0.9:6379...

202025-01-06 11:53:34,814 INFO worker.py:1810 -- Connected to Ray cluster. View the dashboard at 10.12.0.9:8265

21[0, 1, 4, 9]

222025-01-06 11:53:38,368 SUCC cli.py:63 -- ------------------------------------------

232025-01-06 11:53:38,368 SUCC cli.py:64 -- Job 'raysubmit_9NfCvwcmcyMNFCvX' succeeded

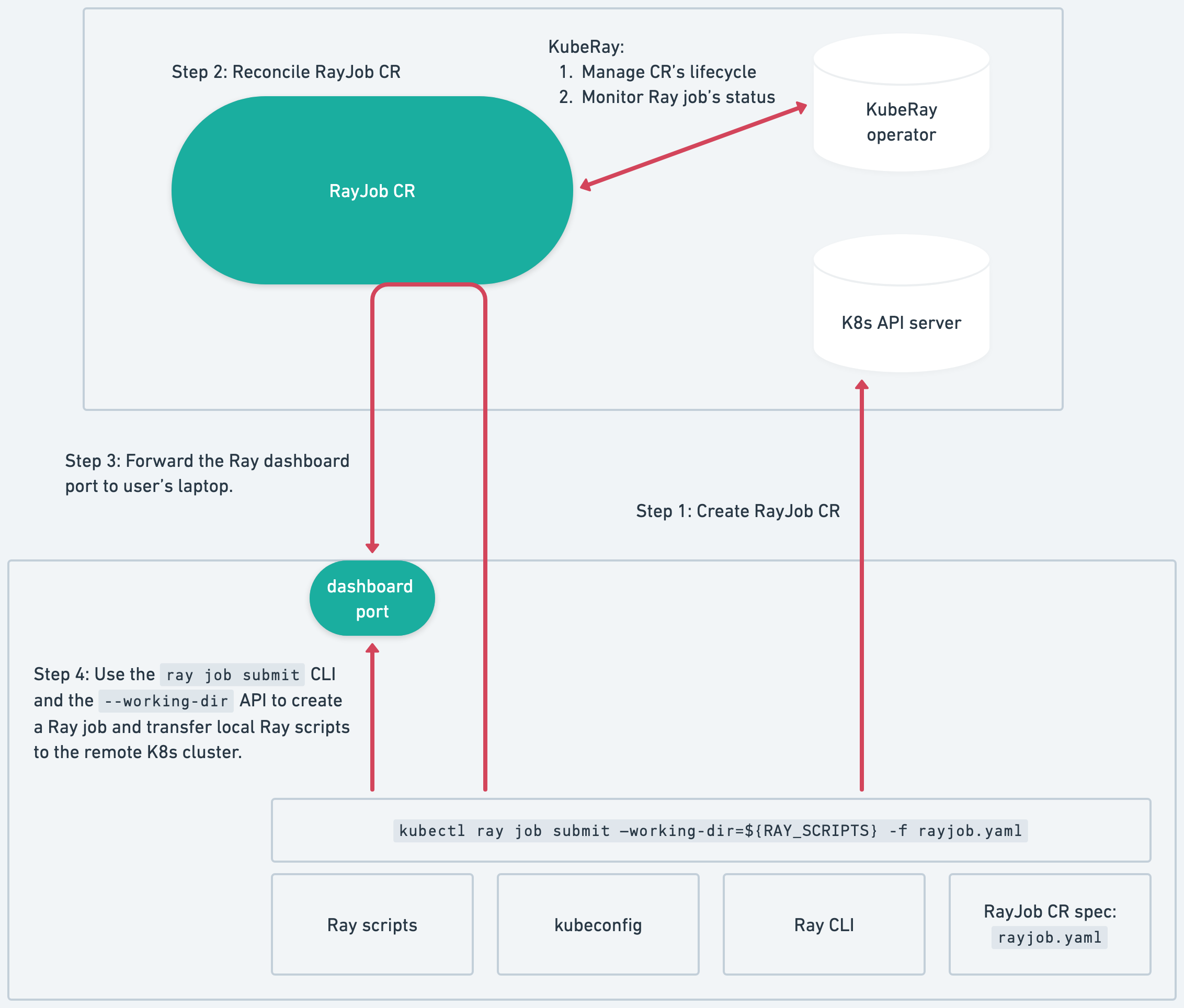

242025-01-06 11:53:38,368 SUCC cli.py:65 -- ------------------------------------------ Figure 1: What the kubectl ray job submit command does behind the scene
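When kubectl ray job submit runs inside a script or CI step, the final status line can be extracted from the captured output. The following sketch is modeled on the sample output above. The `succeeded` wording comes from that output, while the `failed` alternative is an assumed counterpart, and `parse_job_result` is a hypothetical helper name.

```python
import re

# Pattern modeled on the sample output: Job '<submission id>' succeeded
# The "failed" alternative is an assumption, not shown in this post.
JOB_RESULT = re.compile(r"Job '(?P<job_id>[^']+)' (?P<status>succeeded|failed)")


def parse_job_result(log_text):
    """Return (job_id, status) from the last matching line, or None if none matches."""
    matches = list(JOB_RESULT.finditer(log_text))
    if not matches:
        return None
    last = matches[-1]
    return last.group("job_id"), last.group("status")


if __name__ == "__main__":
    sample = (
        "[0, 1, 4, 9]\n"
        "2025-01-06 11:53:38,368 SUCC cli.py:64 -- "
        "Job 'raysubmit_9NfCvwcmcyMNFCvX' succeeded\n"
    )
    print(parse_job_result(sample))
```

Because the log format is not a stable interface, treat a `None` result as "unknown" rather than as a failure.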

Finally, use the kubectl ray delete command to clean up resources.

    $ kubectl ray delete sample-cluster
    $ kubectl ray delete rayjob/rayjob-sample

Conclusion

The Ray kubectl plugin makes it easier to manage Ray clusters on Kubernetes, especially for users who may not be familiar with Kubernetes. Combined with the integration of Ray and Kubernetes through KubeRay, it unlocks new possibilities for your AI workloads.

Ready to try the kubectl plugin and KubeRay? Visit the official KubeRay documentation to get started. For more details on the Ray kubectl plugin, check out the plugin's official documentation.

Have any questions or need assistance? Feel free to open an issue in the GitHub repository. You can also join the Ray community on Slack via this link and ask questions in the #kuberay-questions channel.