Anyscale Endpoints: JSON Mode and Function Calling Features

Update June 2024: Anyscale Endpoints (Anyscale's LLM API Offering) and Private Endpoints (self-hosted LLMs) are now available as part of the Anyscale Platform. Click here to get started on the Anyscale platform.

We're thrilled to announce the introduction of JSON mode and function calling capabilities on Anyscale Endpoints, significantly enhancing the usability of open models. Currently available in preview for the Mistral-7B model, we aim to extend these features to additional models soon.

JSON mode ensures the outputs from our Large Language Models (LLMs) are not only valid JSON, but also tailored to your specific schema requirements.

Function calling is another feature that has been missing from open models like Llama-2 and Mistral. It empowers models to use APIs by choosing the right function to call and the appropriate arguments for that function. In this blog post, we also delve into the evaluation of function calling and share insights on assessing quality.

JSON Mode API: A Missing Piece From Open Models

OpenAI recently introduced JSON Mode, enabling GPT models to return JSON formatted objects. This significantly enhances their utility beyond traditional chatbot applications. However, this feature is missing from the open model ecosystem.

Although the OpenAI API does not allow a JSON schema to be specified, we have extended the response_format argument to accept a schema, so that callers can control the exact shape of the output.
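To make the idea of a caller-supplied schema concrete, here is a minimal sketch that uses only the standard library. The schema dict is hand-written for illustration (the one Pydantic generates carries extra metadata such as titles and may differ in detail), conforms is a hypothetical helper that checks only required keys and primitive types rather than the full JSON Schema specification, and the sample string is hard-coded rather than fetched from the API.

```python
import json

# Hand-written JSON Schema for illustration; not the exact schema Pydantic emits.
RESULT_SCHEMA = {
    "type": "object",
    "properties": {
        "winner_team_name": {"type": "string"},
        "loser_team_name": {"type": "string"},
        "winner_score": {"type": "integer"},
        "loser_score": {"type": "integer"},
    },
    "required": ["winner_team_name", "loser_team_name", "winner_score", "loser_score"],
}

# Maps JSON Schema primitive type names to Python types (subset only).
_PY_TYPES = {"string": str, "integer": int}


def conforms(raw: str, schema: dict) -> bool:
    """Hypothetical helper: check required keys and primitive types only."""
    try:
        payload = json.loads(raw)
    except json.JSONDecodeError:
        return False
    if not isinstance(payload, dict):
        return False
    if any(key not in payload for key in schema["required"]):
        return False
    for key, spec in schema["properties"].items():
        if key in payload:
            value = payload[key]
            expected = _PY_TYPES[spec["type"]]
            # bool is a subclass of int in Python, so reject it explicitly.
            if isinstance(value, bool) or not isinstance(value, expected):
                return False
    return True


sample = (
    '{"winner_team_name": "Los Angeles Dodgers", "winner_score": 4, '
    '"loser_team_name": "Tampa Bay Rays", "loser_score": 2}'
)
print(conforms(sample, RESULT_SCHEMA))                   # True
print(conforms('{"winner_score": "4"}', RESULT_SCHEMA))  # False
```

A check like this is only a client-side safety net; the point of passing the schema in response_format is that the service constrains the output, so the caller should rarely see a non-conforming string.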

Example:

```python
import openai
from pydantic import BaseModel, Field

client = openai.OpenAI(
    base_url="https://api.endpoints.anyscale.com/v1",
    api_key="esecret_YOUR_API_KEY",
)

# Define the schema for the output
class Result(BaseModel):
    winner_team_name: str
    loser_team_name: str
    winner_score: int
    loser_score: int

chat_completion = client.chat.completions.create(
    model="mistralai/Mistral-7B-Instruct-v0.1",
    response_format={
        "type": "json_object",
        "schema": Result.schema_json()
    },
    messages=[
        {"role": "system", "content": "You are a helpful assistant designed to output JSON."},
        {"role": "user", "content": "Who won the world series in 2020?"}
    ],
    temperature=0.7
)

print(repr(chat_completion.choices[0].message.content))
```

Output:

```
' {\n "winner_team_name": "Los Angeles Dodgers",\n "winner_score": 4,\n "loser_team_name": "Tampa Bay Rays",\n "loser_score": 2\n}'
```

Handling Arrays

Example:

```python
from typing import List

class Result(BaseModel):
    """The format of the answer."""
    sorted_numbers: List[int] = Field(description="List of the sorted numbers")

chat_completion = client.chat.completions.create(
    model="mistralai/Mistral-7B-Instruct-v0.1",
    response_format={
        "type": "json_object",
        "schema": Result.schema_json()
    },
    messages=[
        {"role": "system", "content": "You are a helpful assistant designed to output JSON."},
        {"role": "user", "content": "Sort the following numbers: 2, 8, 6, 7"}
    ],
    temperature=0.7
)

print(repr(chat_completion.choices[0].message.content))
```

Output:

```
' {\n "sorted_numbers": [\n 2,\n 6,\n 7,\n 8\n ]\n}'
```

Handling Nested Structures

```python
class Person(BaseModel):
    """The object representing a person with name and age"""
    name: str = Field(description="Name of the person")
    age: int = Field(description="The age of the person")

class Result(BaseModel):
    """The format of the answer."""
    sorted_list: List[Person] = Field(description="List of the sorted objects")

chat_completion = client.chat.completions.create(
    model="mistralai/Mistral-7B-Instruct-v0.1",
    response_format={
        "type": "json_object",
        "schema": Result.schema_json()
    },
    messages=[
        {"role": "system", "content": "You are a helpful assistant designed to output JSON."},
        {"role": "user", "content": "Alice is 10 years old, Bob is 7 and carol is 2. Sort them by age in ascending order."}
    ],
    temperature=0.7
)

print(repr(chat_completion.choices[0].message.content))
```

Output:

```
' {\n "sorted_list": [\n {\n "name": "carol",\n "age": 2\n },\n {\n "name": "Bob",\n "age": 7\n },\n {\n "name": "Alice",\n "age": 10\n }\n ]\n}'
```

Function Calling: Enabling Open LLMs to Use Tools

JSON mode and function calling are often mixed up, but they serve distinct purposes. While JSON mode ensures output consistency with JSON format, function calling is a broader concept, designed for a different aim. Function calling was initially developed to enable the creation of agents, empowering LLMs to utilize tools like search engines or other APIs for connecting different information retrieval systems.

Today we are announcing support for function calling on Anyscale Endpoints starting with Mistral-7B.

Here’s how function calling typically works:

1. You input a query, specifying functions alongside their parameters and descriptions.

2. The LLM evaluates whether to call a function. If it opts not to, it responds in natural language, either providing an answer based on its internal knowledge or seeking clarification about the query and tool usage. If it decides to use a tool, it suggests the appropriate API and the arguments to call it with, all formatted in JSON.

3. You then execute the API call in your application, return the response to the LLM, and have it analyze the results and continue with the next steps.
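The last step, executing the suggested call in your application, can be sketched as follows. This is a minimal illustration under stated assumptions: get_current_weather is a stub returning canned data, TOOL_REGISTRY and run_tool_call are hypothetical names, and the assistant message is hard-coded in the OpenAI-style tool_calls shape rather than fetched from the API.

```python
import json


def get_current_weather(location: str, format: str) -> dict:
    """Stub standing in for a real weather API (returns canned data)."""
    return {"location": location, "temperature": "70", "temperature_unit": format}


# Hypothetical registry mapping tool names to local Python callables.
TOOL_REGISTRY = {"get_current_weather": get_current_weather}


def run_tool_call(tool_call: dict) -> dict:
    """Execute one tool call and build the tool message to send back."""
    name = tool_call["function"]["name"]
    # The model returns arguments as a JSON-encoded string, so decode first.
    arguments = json.loads(tool_call["function"]["arguments"])
    result = TOOL_REGISTRY[name](**arguments)
    return {
        "role": "tool",
        "tool_call_id": tool_call["id"],
        "name": name,
        "content": json.dumps(result),
    }


# Hard-coded assistant message in the OpenAI-style tool-call format.
assistant_message = {
    "role": "assistant",
    "content": None,
    "tool_calls": [
        {
            "id": "call_123",
            "type": "function",
            "function": {
                "name": "get_current_weather",
                "arguments": '{"location": "Hawaii", "format": "fahrenheit"}',
            },
        }
    ],
}

tool_messages = [run_tool_call(call) for call in assistant_message["tool_calls"]]
print(tool_messages[0]["content"])
```

Keeping the dispatch behind a registry means the model can only trigger functions you have explicitly exposed; an unknown name raises a KeyError instead of running arbitrary code.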

Example:

For instance, consider creating a chatbot with access to an API for the latest weather data. The API definitions would be as follows:

```python
tools = [
    {
        "type": "function",
        "function": {
            "name": "get_current_weather",
            "description": "Get the current weather",
            "parameters": {
                "type": "object",
                "properties": {
                    "location": {
                        "type": "string",
                        "description": "The city and state, e.g. San Francisco, CA",
                    },
                    "format": {
                        "type": "string",
                        "enum": ["celsius", "fahrenheit"],
                        "description": "The temperature unit to use. Infer this from the users location.",
                    },
                },
                "required": ["location", "format"],
            },
        }
    },
    {
        "type": "function",
        "function": {
            "name": "get_n_day_weather_forecast",
            "description": "Get an N-day weather forecast",
            "parameters": {
                "type": "object",
                "properties": {
                    "location": {
                        "type": "string",
                        "description": "The city and state, e.g. San Francisco, CA",
                    },
                    "format": {
                        "type": "string",
                        "enum": ["celsius", "fahrenheit"],
                        "description": "The temperature unit to use. Infer this from the users location.",
                    },
                    "num_days": {
                        "type": "integer",
                        "description": "The number of days to forecast",
                    }
                },
                "required": ["location", "format", "num_days"]
            },
        }
    }
]
```

For each function, we define the name, the description, the JSON schema for the parameters, and the required fields.

```python
import openai

client = openai.OpenAI(
    base_url="https://api.endpoints.anyscale.com/v1",
    api_key="esecret_YOUR_API_KEY",
)

messages = [
    {"role": "system", "content": "You are a helpful assistant. Don't make assumptions about what values to plug into functions. Ask for clarification if a user request is ambiguous."},
    {"role": "user", "content": "How is the weather in Hawaii right now in American units?"}
]

chat_completion = client.chat.completions.create(
    model="mistralai/Mistral-7B-Instruct-v0.1",
    messages=messages,
    tools=tools,
    tool_choice="auto",
    temperature=0.7
)

print(repr(chat_completion.choices[0].message.model_dump()))
```

Output:

```
{'content': None, 'role': 'assistant', 'function_call': None, 'tool_calls': [{'id': 'call_9d80dcfa3c3a412baf38124db692f1de', 'function': {'arguments': '{"location": "Hawaii", "format": "fahrenheit"}', 'name': 'get_current_weather'}, 'type': 'function'}]}
```

This response prompts us to call the get_current_weather function with the given arguments. We can then send the result of the API call back to the LLM:

```python
# Append the assistant's tool call, then the tool's result
messages.append(chat_completion.choices[0].message.model_dump())
messages.append({
    "role": "tool",
    "tool_call_id": messages[-1]["tool_calls"][0]["id"],
    "name": messages[-1]["tool_calls"][0]["function"]["name"],
    "content": str({"temperature": "70", "temperature_unit": "Fahrenheit"})
})

chat_completion = client.chat.completions.create(
    model="mistralai/Mistral-7B-Instruct-v0.1",
    messages=messages,
    tools=tools,
    tool_choice="auto",
    temperature=0.7
)

print(repr(chat_completion.choices[0].message.model_dump()))
```

Output:

```
{'content': ' The temperature in Hawaii is currently 70 degrees Fahrenheit.', 'role': 'assistant', 'function_call': None, 'tool_calls': None, 'tool_call_id': None, 'name': None}
```

You can force the model to always use a function by specifying tool_choice={"type": "function", "function": {"name": "foo"}}. You can also force the LLM to respond normally by specifying tool_choice="none".
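The three tool_choice settings can be written out as plain Python values. In this sketch, force_tool is a hypothetical helper (not part of the OpenAI client) that builds the forcing dict and checks the name against an abbreviated, illustrative tools list.

```python
def force_tool(name: str, tools: list) -> dict:
    """Hypothetical helper: build a tool_choice value that forces one function."""
    known = {tool["function"]["name"] for tool in tools}
    if name not in known:
        raise ValueError(f"unknown tool: {name}")
    return {"type": "function", "function": {"name": name}}


# Abbreviated tool list for illustration (parameter schemas omitted).
example_tools = [
    {"type": "function", "function": {"name": "get_current_weather"}},
    {"type": "function", "function": {"name": "get_n_day_weather_forecast"}},
]

let_model_decide = "auto"   # the model chooses between a tool call and text
never_call_tools = "none"   # the model responds in natural language
always_call = force_tool("get_current_weather", example_tools)
print(always_call)
```

Validating the name before sending the request catches typos locally instead of relying on the service to reject an unknown function.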

Evaluation of Function Calling

Evaluating the effectiveness of function calling and comparing different solutions is crucial. The following key dimensions are important when assessing a model's behavior:

Tool Usage Detection: Can the model identify when to use a tool versus when to respond as a typical LLM? Ambiguity in user queries, leading to multiple potential answers, is a common challenge. In such cases, the model should seek clarification. However, there are instances where it's apparent that the model should call an API, but it either fabricates a response or replies as a standard LLM, bypassing the tool format.

Correct Tool and Argument Use: Once the model decides to use a tool, does it choose the appropriate API and arguments?

Handling Erroneous Responses: Is the LLM capable of correcting its choice of API or arguments based on the error feedback from the API call?

In this post, we'll primarily focus on building an evaluation dataset to measure performance through the first two perspectives as these represent the more common applications.
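A single-turn check along the first two dimensions might look like the sketch below. The function name, the category labels, and the exact-match comparison of arguments are assumptions made for illustration; this is not the evaluation script used for the results in this post.

```python
import json
from typing import Optional


def classify(expected: Optional[dict], actual: Optional[dict]) -> str:
    """Compare an expected tool call with the model's tool call.

    Each argument is either None (a normal text reply) or a dict with
    "name" and JSON-encoded "arguments", as in OpenAI-style tool calls.
    """
    if expected is None:
        return "correct" if actual is None else "unexpected_tool_call"
    if actual is None:
        return "missing_tool_call"
    if actual["name"] != expected["name"]:
        return "wrong_tool"
    # Decode before comparing so key order and whitespace do not matter.
    if json.loads(actual["arguments"]) != json.loads(expected["arguments"]):
        return "argument_mismatch"
    return "correct"


expected = {
    "name": "get_current_weather",
    "arguments": '{"location": "Hawaii", "format": "fahrenheit"}',
}
actual = {
    "name": "get_current_weather",
    "arguments": '{"format": "fahrenheit", "location": "Hawaii"}',
}

print(classify(expected, actual))  # correct
print(classify(None, actual))      # unexpected_tool_call
print(classify(expected, None))    # missing_tool_call
```

Exact matching on arguments is deliberately strict; a real evaluation may want looser matching for free-text arguments such as locations.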

Dataset

We derive our test dataset from glaive-function-calling-v2, a collection of LLM conversation examples featuring tool calls in a format similar to the one used by OpenAI. We took the first 100 entries of this dataset and kept only the first turn of each conversation, which gives a single-turn function calling dataset for evaluating how different systems behave. Both the dataset creation script and the resulting test dataset are available.

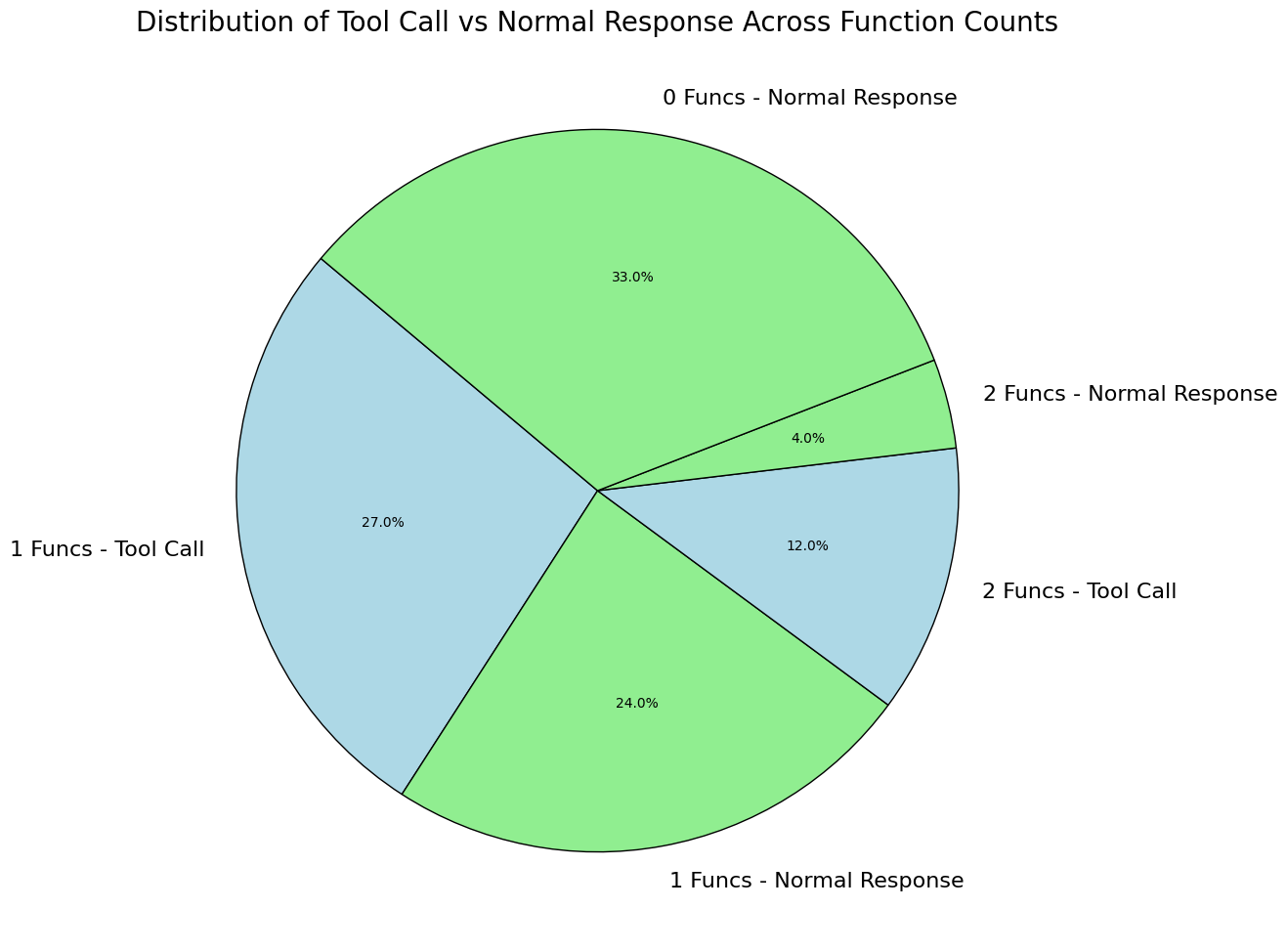

Figure 1 shows the composition of this test dataset. Compared to Gorilla’s OpenFunctions dataset, our test dataset includes examples where the LLM’s expected response is a standard reply, despite the presence of function descriptions in its prompt. It also includes cases with multiple function options, challenging the model to discern which function is most suitable.

Figure 1. The composition of our benchmarking dataset. The size of each slice shows the number of examples in each category broken down by the number of available functions (i.e. 0, 1, 2) and expected output response type (i.e. Tool call or Normal).

As can be seen, each example in this dataset offers at most two functions, and all examples are single-turn conversations.

Experiments

In our evaluation, we've assessed the performance of this function calling solution across all models supported by Anyscale Endpoints as of November 30, 2023. Additionally, we've benchmarked against gpt-3.5-turbo-1106 and gpt-4-1106-preview for comparative analysis. Each experiment was conducted 4 times to measure the standard error as well.

| Model | Avg. Accuracy (%) ± Standard Error |
|---|---|
| gpt-4 | 93.00 ± 0.00 |
| mistral-7b | 81.50 ± 0.96 |
| llama-2-70b | 81.00 ± 0.41 |
| gpt-3.5-turbo | 81.00 ± 1.47 |
| llama-2-13b | 79.75 ± 0.63 |
| zephyr-7b-beta | 70.50 ± 0.87 |
| llama-2-7b | 60.75 ± 1.31 |

Interestingly, Mistral-7B performs about as well as gpt-3.5-turbo. This assessment is confined to single-turn interactions, but it highlights the potential of our approach in enabling function calling on open models. We are releasing the API for Mistral-7B, opening the doors for broader community experimentation.
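For reference, the ± figures in the table above are standard errors over the repeated runs. Assuming the usual definition (sample standard deviation divided by the square root of the number of runs), the arithmetic can be sketched as below. The per-trial accuracies are hypothetical values chosen only to illustrate the computation; they are not the per-trial results behind the table.

```python
import math
import statistics


def mean_and_ste(values):
    """Return the mean and the standard error of the mean."""
    mean = statistics.mean(values)
    # statistics.stdev uses the sample (n - 1) denominator.
    ste = statistics.stdev(values) / math.sqrt(len(values))
    return mean, ste


# Hypothetical accuracies from four runs (illustrative, not real results).
trial_accuracies = [78.0, 80.0, 81.0, 83.0]
mean, ste = mean_and_ste(trial_accuracies)
print(f"{mean:.2f} ± {ste:.2f}")
```

With only four runs the standard error is itself a rough estimate, so small differences between rows (for example, models within about one point of each other) should be read with caution.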

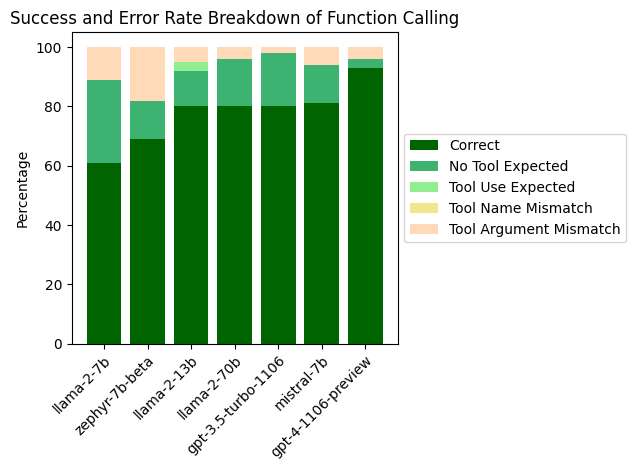

To get a better understanding of the failure modes, we do a failure analysis on one of the trials. Figure 2 shows the different categories of failure.

Figure 2. The breakdown of the failure modes for each model. With our function calling method, llama-2 13B, 70B, and Mistral-7B models all become as good as gpt-3.5 in single-turn function calling without any specialized training.

One interesting insight is that, compared to gpt-3.5-turbo, Mistral-7B exhibits fewer errors in the “No Tool Expected” category but makes more errors related to tool argument mismatches. Essentially, Mistral-7B is more accurate at discerning scenarios where tool usage is unnecessary, opting instead for normal responses. On the other hand, gpt-3.5-turbo tends to make presumptions about ambiguous arguments and proceeds to invoke functions.

Here is an example where Mistral-7B correctly discerns that it should not use the given tool, because the tool cannot answer the query, while gpt-3.5-turbo issues a tool call anyway.

```python
# Example 91:
def find_nearby_restaurants(location: str, cuisine: str):
    """Find nearby restaurants based on location.

    Args:
        location: The location to search for restaurants
        cuisine: The type of cuisine to filter the restaurants
    """
    pass

message = {
    "system": "You are a helpful assistant.",
    "user": {"role": "user", "content": "Can you please book a flight for me from New York to London?"}
}

# Desired output
{"role": "assistant", "content": "I'm sorry, but I don't have the ability to book flights. My current capabilities allow me to find nearby restaurants based on a specified location and cuisine type. Is there anything else I can assist you with within my capabilities?"}

# gpt-3.5-turbo response
{'role': 'assistant', 'content': None, 'tool_calls': [{'id':'...', 'type': 'function', 'function': {'name': 'find_nearby_restaurants', 'arguments': '{"location": "New York", "cuisine": "Italian"}'}}, {'id': '...', 'type': 'function', 'function': {'name': 'find_nearby_restaurants', 'arguments': '{"location": "London", "cuisine": "Italian"}'}}]}

# Mistral-7b response
{"role": "assistant", "content": " Of course! I'll be happy to help you book a flight from New York to London. Could you please provide me with your preferred departure and arrival dates, as well as your preferred airline and class of service? Additionally, do you have any preferred time of day or specific flight times that you would like to fly? Once I have this information, I can search for available flights and provide you with options to choose from."}
```

Conclusion

We are excited to release both JSON mode and our OpenAI-compatible function calling solution for Mistral-7B. JSON mode ensures that outputs from Large Language Models (LLMs) are valid JSON and can be tailored to specific schema requirements. Function calling allows these models to use APIs effectively, selecting the right function and arguments for a given task. You can try them out with Anyscale Endpoints today!