Announcing Anyscale Runtime for Faster, Cheaper and More Resilient AI, Powered by Ray

Modern AI workloads, from multimodal data processing to distributed training and high-throughput inference, are pushing infrastructure to its limits. These workloads process increasingly complex datasets such as text, video, and images, and they use larger, more advanced models that rely on GPUs while still depending on CPUs for preprocessing tasks such as decoding or resizing files. Running them at scale requires efficient orchestration on heterogeneous compute, high-performance execution, and rapid recovery from hardware failures.

Ray, the open-source distributed compute framework, provides the essential foundation to scale AI and Python applications from a single machine to thousands, with task-parallel and actor-based computations across heterogeneous (CPU + GPU) clusters. As AI workloads continue to grow in size and diversity, teams often need stronger reliability guarantees and higher performance tuned to each workload's needs around throughput, latency, and cost. These challenges are difficult to solve without deep expertise in distributed systems or cloud infrastructure.

Anyscale Runtime is built to address these challenges. It is fully compatible with Ray APIs, so you can bring over your existing applications – no code rewrites, no lock-in – while getting:

Higher resilience to failure, reducing wasted compute and improving the stability of large-scale pipelines. This is enabled by features such as job checkpointing (pause/resume for batch inference processing), mid-epoch resume (pause/resume for distributed data loading in training jobs), and dynamic memory management, which reduces spilling and out-of-memory (OOM) errors for training and inference workloads.

Faster performance and lower compute cost compared to running Ray open source on a wide variety of targeted workloads – including image batch inference, feature processing, reinforcement learning and more.

In combination with the other components of the Anyscale platform, teams can build and deploy AI workloads faster and more cost-efficiently. Geotab, an AI-driven telematics platform for analyzing driver behavior and improving road safety, has seen 43x higher throughput and 4x better GPU utilization for online (low-latency) video processing. Similarly, TripAdvisor, a leader in AI-powered trip planning, has achieved 70% cost savings for its batch embedding pipelines with Anyscale.

To try Anyscale Runtime yourself, check out our GitHub repo, which is continuously updated with new datasets and recipes, to explore and reproduce the benchmarked workloads.

Anyscale Runtime offers resilience and recovery optimized for production-grade AI

Imagine this – you’re 19 hours into a long training run on ten high-end GPUs when it crashes. Tens of thousands of dollars in compute – gone. You are forced to restart from zero, burning both money and time. Beyond the cost, these failures delay progress, push out deadlines, and erode the return on your AI investment, an inefficiency that becomes even more costly if it leads to losing your competitive edge.

Anyscale Runtime introduces several features designed for resilience and fast recovery at scale so teams can focus on advancing models, not redoing their work:

Dynamic Memory Management in Ray Data – Automatically adjusts memory usage to reduce spilling and out-of-memory errors during training and inference.

Job Checkpointing – Enables pause-and-resume for long-running inference workloads without losing progress.

Mid-Epoch Resume – When using Ray Data for data loading, allows large-scale training jobs to restart mid-run from saved state, avoiding time and compute waste.
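To make the pause-and-resume idea concrete, here is a minimal, library-free sketch of checkpointed batch processing. It is not how Anyscale Runtime implements job checkpointing; the `run_job` function, the `fail_at` knob, and the JSON progress file are all hypothetical names invented for this illustration. The point is only that recording how far a job got lets a rerun skip finished work.

```python
import json
import os
import tempfile


def run_job(items, checkpoint_path, fail_at=None):
    """Process items in order, recording progress so a rerun skips finished work.

    `fail_at` is a hypothetical knob that simulates a crash at that index.
    Returns only the results produced during this call.
    """
    done = 0
    if os.path.exists(checkpoint_path):
        with open(checkpoint_path) as f:
            done = json.load(f)["done"]

    processed = []
    for i in range(done, len(items)):
        if fail_at is not None and i == fail_at:
            raise RuntimeError(f"simulated crash at item {i}")
        processed.append(items[i] * 2)  # stand-in for real inference work
        # Write to a temp file and rename, so the checkpoint is never half-written.
        tmp_path = checkpoint_path + ".tmp"
        with open(tmp_path, "w") as f:
            json.dump({"done": i + 1}, f)
        os.replace(tmp_path, checkpoint_path)
    return processed


workdir = tempfile.mkdtemp()
ckpt = os.path.join(workdir, "progress.json")
items = list(range(10))

try:
    run_job(items, ckpt, fail_at=6)  # first attempt dies after six items
except RuntimeError:
    pass

resumed = run_job(items, ckpt)  # second attempt starts at item 6, not item 0
print(resumed)
```

A real system would also need to persist the outputs themselves (this sketch assumes they are written durably elsewhere) and to checkpoint less often than once per item.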

Anyscale Runtime offers faster performance and lower costs per run

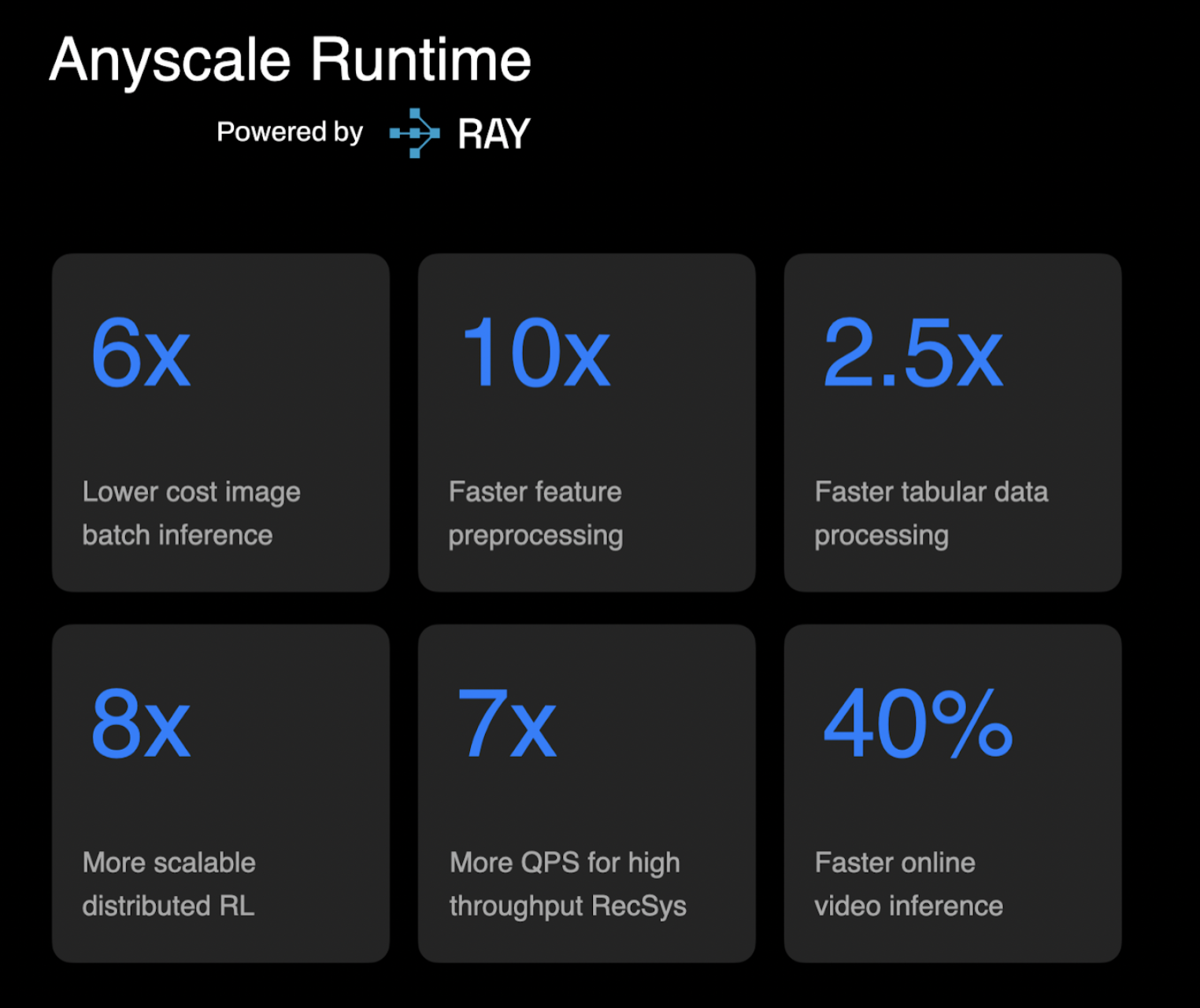

The Anyscale Runtime optimizes execution across multiple workloads: faster and lower-cost data processing jobs, more reliable training runs, and higher throughput serving. Our reproducible benchmarks show:

2x faster and 6x more cost-efficient image batch inference

10x faster feature processing (encoding, normalization, etc.)

2.5x faster structured data processing

8x more scalable reinforcement learning

7x more queries per second (QPS) for high-throughput serving

40% faster video processing in online inference applications

Below are quick summaries of each workload. To go straight to the code and data recipes behind the benchmarks, see our GitHub repo.

Image batch inference

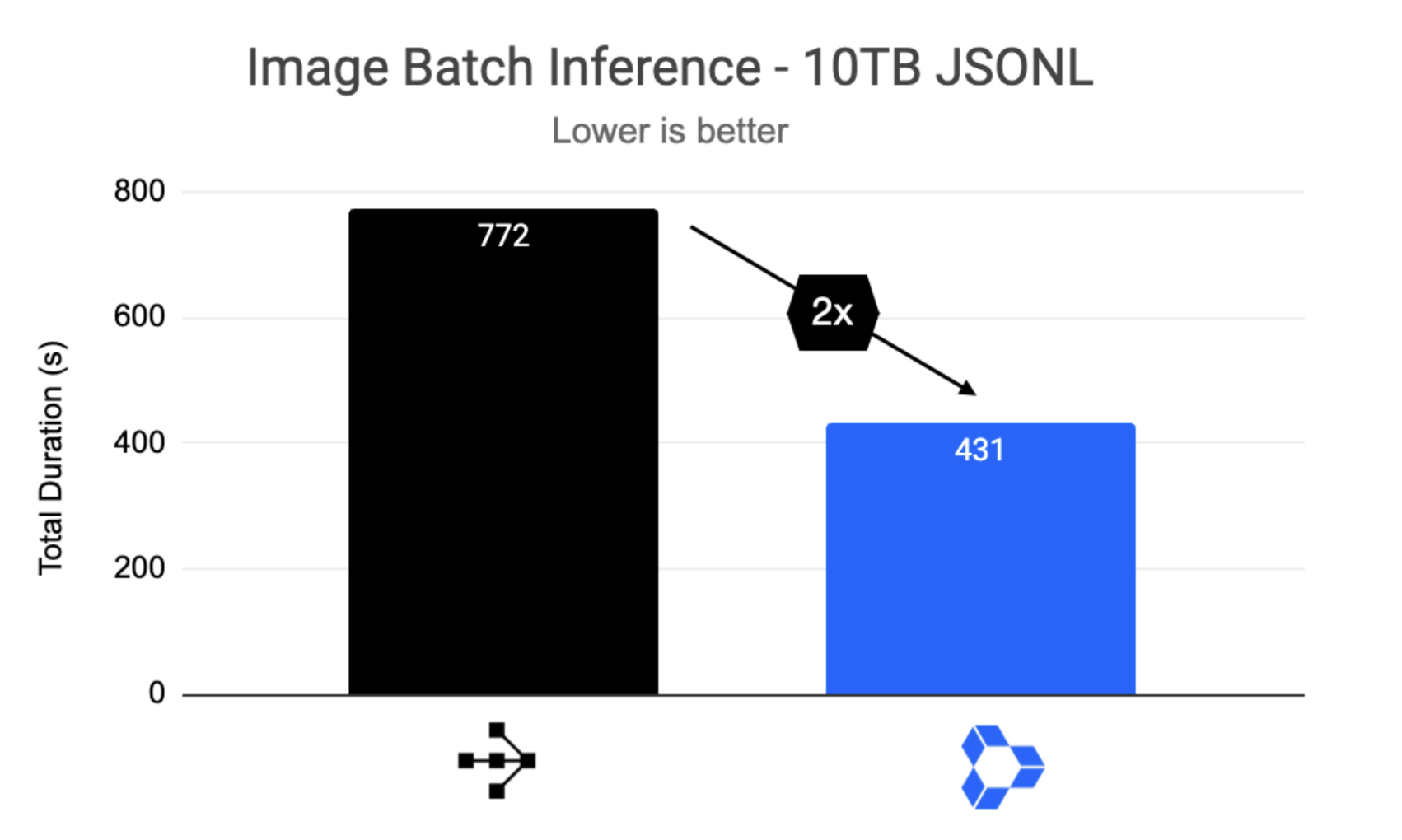

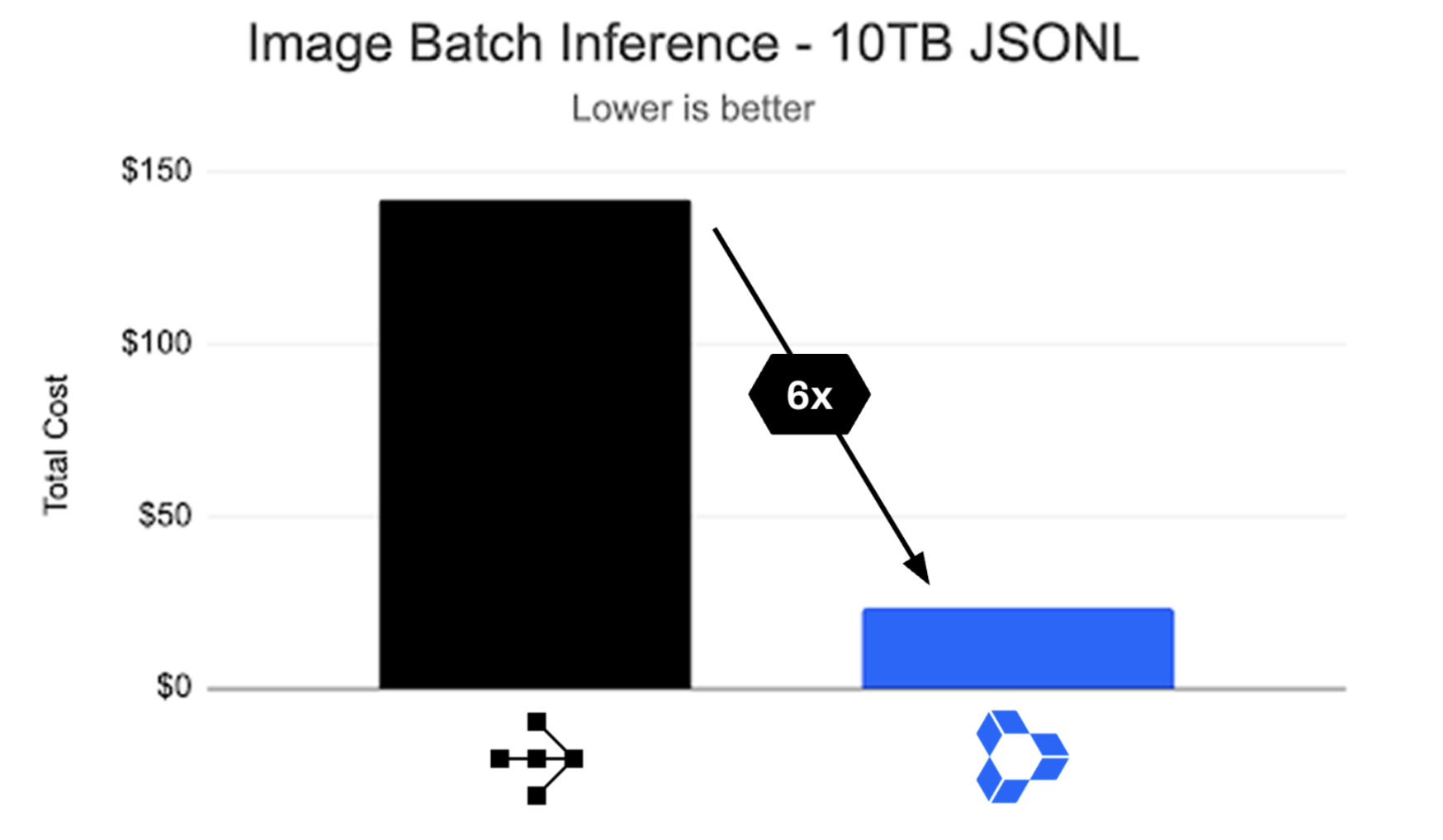

The pipeline read JSONL, decoded and transformed image batches on CPUs, ran inference on GPUs using a vision transformer model, and then wrote results back as JSONL, again on CPUs. The dataset is roughly 10 TB.
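The shape of such a pipeline (read JSONL, preprocess, run batched inference, write JSONL) can be sketched in plain Python. This is only an illustration of the stages, not the benchmark code: the record fields (`id`, `image_hex`), the brightness "model", and the in-memory streams are all made up for the example, and nothing here runs on a GPU.

```python
import io
import json


def read_jsonl(stream):
    """Yield one parsed record per non-empty line."""
    for line in stream:
        line = line.strip()
        if line:
            yield json.loads(line)


def decode(record):
    # CPU stage stand-in: turn a hex string into a list of pixel values.
    return {"id": record["id"], "pixels": list(bytes.fromhex(record["image_hex"]))}


def batched(iterable, size):
    """Group items into lists of at most `size` elements."""
    batch = []
    for item in iterable:
        batch.append(item)
        if len(batch) == size:
            yield batch
            batch = []
    if batch:
        yield batch


def infer(batch):
    # GPU stage stand-in: "classify" each image by its mean pixel value.
    results = []
    for record in batch:
        mean = sum(record["pixels"]) / len(record["pixels"])
        results.append({"id": record["id"], "label": "bright" if mean > 127 else "dark"})
    return results


source = io.StringIO(
    '{"id": 1, "image_hex": "ffeedd"}\n'
    '{"id": 2, "image_hex": "010203"}\n'
    '{"id": 3, "image_hex": "80ff80"}\n'
)
sink = io.StringIO()

decoded = (decode(record) for record in read_jsonl(source))
for batch in batched(decoded, size=2):
    for result in infer(batch):
        sink.write(json.dumps(result) + "\n")

output_lines = sink.getvalue().splitlines()
print(output_lines)
```

Because every stage is a generator or a per-batch function, the CPU and GPU steps stay decoupled, which is the property a distributed engine exploits when it schedules them on different hardware.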

Reproducible benchmark recipe: GitHub

Performance: On a fixed-size cluster, the job took 772 seconds on open-source Ray vs. 431 seconds on Anyscale Runtime (~2x faster)

Cost: $63 on Anyscale Runtime vs. $170 on open-source Ray on fixed clusters (2.7x cheaper); $23 vs. $142 on autoscaling runs (6x cheaper)
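For readers who want to check the arithmetic, the ratios behind these figures can be recomputed from the reported numbers. The only assumption in this short sketch is that the larger cost in each pair belongs to open-source Ray, which is what the "cheaper" wording implies.

```python
# Reported runtimes on a fixed cluster (seconds).
ray_seconds, anyscale_seconds = 772, 431
speedup = ray_seconds / anyscale_seconds

# Reported costs in dollars; the larger value is assumed to be open-source Ray.
fixed_cost_ratio = 170 / 63
autoscaling_cost_ratio = 142 / 23

print(f"speedup: {speedup:.2f}x")
print(f"fixed-cluster cost ratio: {fixed_cost_ratio:.2f}x")
print(f"autoscaling cost ratio: {autoscaling_cost_ratio:.2f}x")
```

The runtime ratio works out to about 1.8x, which the summary rounds to roughly 2x; the cost ratios come out at about 2.7x and 6.2x.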

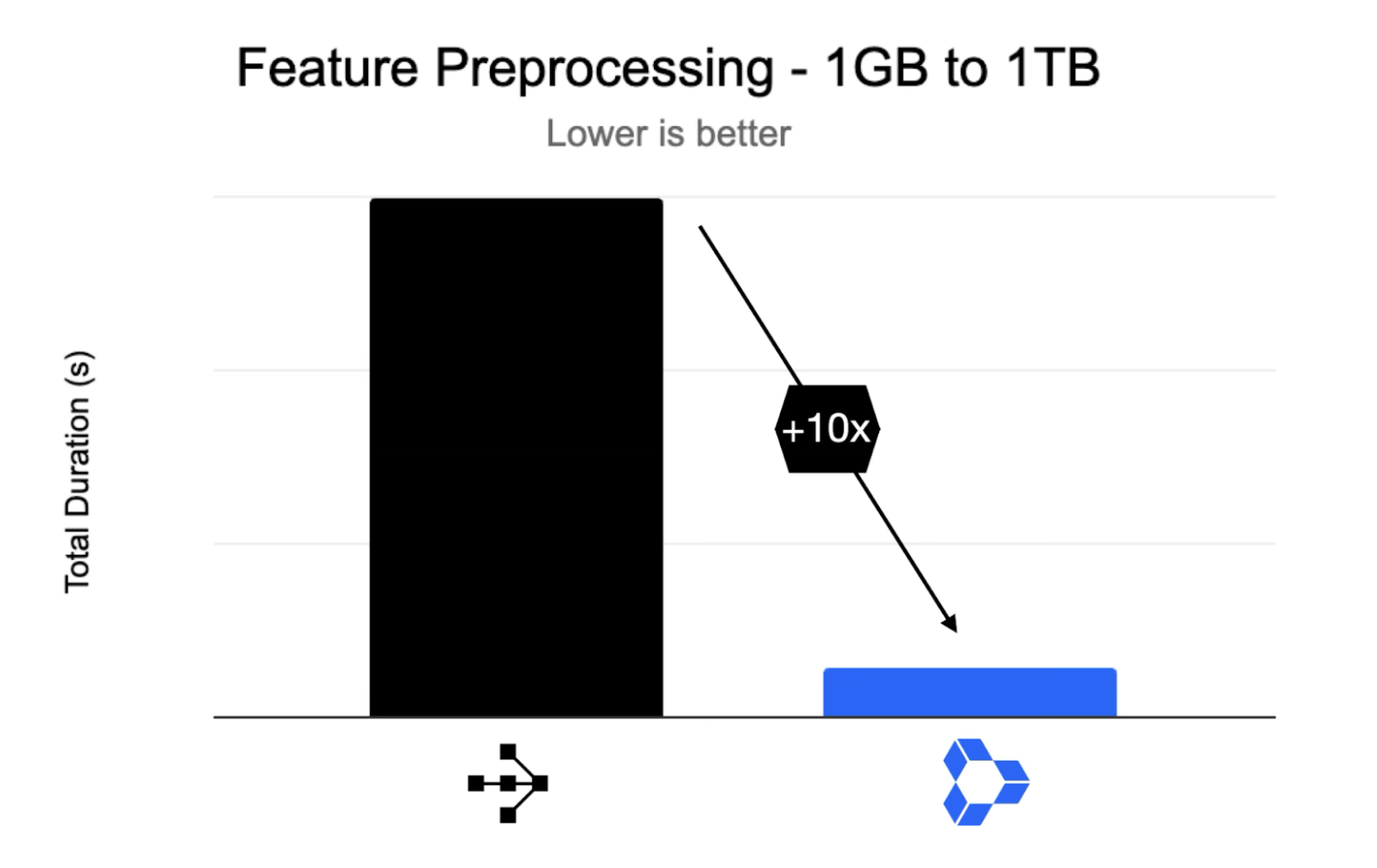

Feature preprocessing

Feature preprocessing — such as encoding and normalization — is one of the most iterative stages in ML. It often starts with small datasets for experimentation but quickly scales to hundreds or thousands of times more data in production. To evaluate performance under this growth pattern, we benchmarked Anyscale Runtime against open-source Ray on an OrdinalEncoder workload, scaling datasets from 1 GB to 1 TB.

Reproducible benchmark recipe and performance by dataset size: GitHub

Performance: Anyscale Runtime consistently delivered 10x or better performance while maintaining stable execution as workload size increased.
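For context on what the benchmarked operation does, ordinal encoding replaces each distinct category with an integer. The sketch below is a plain-Python illustration of that idea, not the Ray Data preprocessor used in the benchmark; the function names and the `unknown=-1` convention are assumptions made for this example.

```python
def fit_ordinal_encoder(values):
    """Map each distinct category to an integer, assigned in sorted order."""
    return {category: index for index, category in enumerate(sorted(set(values)))}


def transform(values, mapping, unknown=-1):
    """Encode values with a fitted mapping; unseen categories get `unknown`."""
    return [mapping.get(value, unknown) for value in values]


colors = ["red", "green", "blue", "green", "red"]
mapping = fit_ordinal_encoder(colors)
encoded = transform(colors, mapping)

print(mapping)
print(encoded)
```

The fit step needs a pass over the whole column to collect distinct values before any row can be encoded, which is part of why this kind of preprocessing gets expensive as datasets grow.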

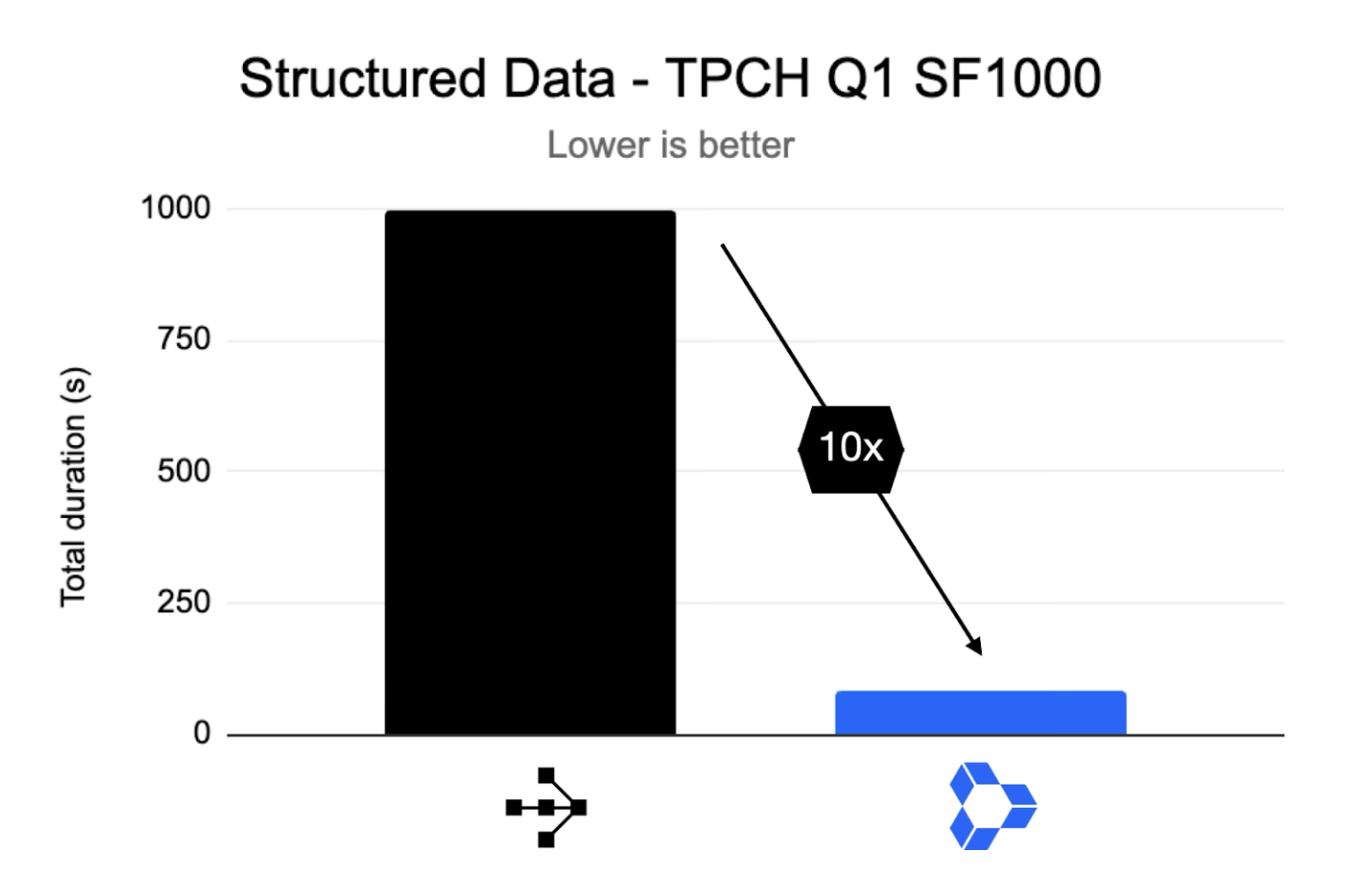

Structured data processing

Even as unstructured data like text, video, and images grows in importance, structured data processing remains a critical part of modern data and AI pipelines. Workloads involving joins, aggregations, and filtering often hit performance bottlenecks due to serialization overhead and heavy shuffle volumes — especially when using Python UDFs. To evaluate performance under some of these common conditions, we ran the TPC-H Q1 benchmark.

Reproducible benchmark recipe: GitHub

Performance: Benchmarked at a scale factor (SF) of 1000 on 128 CPUs, Anyscale Runtime completed the job in 99.1 seconds vs. ~913 seconds on open-source Ray (~10x speedup)
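TPC-H Q1 is a pricing summary query: it filters line items by ship date, groups them by return flag and line status, and computes sums and averages per group. As a rough, library-free illustration of that filter-then-aggregate shape (not the benchmark code), the sketch below runs a subset of the Q1 aggregates over four made-up rows.

```python
from collections import defaultdict
from datetime import date, timedelta

# Made-up rows: (returnflag, linestatus, quantity, extendedprice, discount, tax, shipdate)
lineitem = [
    ("A", "F", 10, 100.0, 0.10, 0.05, date(1995, 1, 1)),
    ("A", "F", 20, 200.0, 0.00, 0.10, date(1996, 6, 1)),
    ("N", "O", 5, 50.0, 0.20, 0.00, date(1998, 8, 1)),
    ("N", "O", 7, 70.0, 0.00, 0.00, date(1998, 11, 30)),  # after the cutoff
]

# Q1 keeps rows shipped on or before 1998-12-01 minus 90 days.
cutoff = date(1998, 12, 1) - timedelta(days=90)

groups = defaultdict(
    lambda: {"sum_qty": 0, "sum_base_price": 0.0, "sum_disc_price": 0.0,
             "sum_charge": 0.0, "count_order": 0}
)
for flag, status, qty, price, discount, tax, shipdate in lineitem:
    if shipdate > cutoff:
        continue
    disc_price = price * (1 - discount)
    group = groups[(flag, status)]
    group["sum_qty"] += qty
    group["sum_base_price"] += price
    group["sum_disc_price"] += disc_price
    group["sum_charge"] += disc_price * (1 + tax)
    group["count_order"] += 1

# Emit one row per group, ordered by (returnflag, linestatus) as in Q1.
report = []
for flag, status in sorted(groups):
    group = groups[(flag, status)]
    report.append({
        "returnflag": flag,
        "linestatus": status,
        **group,
        "avg_qty": group["sum_qty"] / group["count_order"],
    })

for row in report:
    print(row)
```

At benchmark scale the same logic runs over billions of rows, so the cost is dominated by scanning and by moving partial aggregates between workers rather than by the arithmetic itself.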

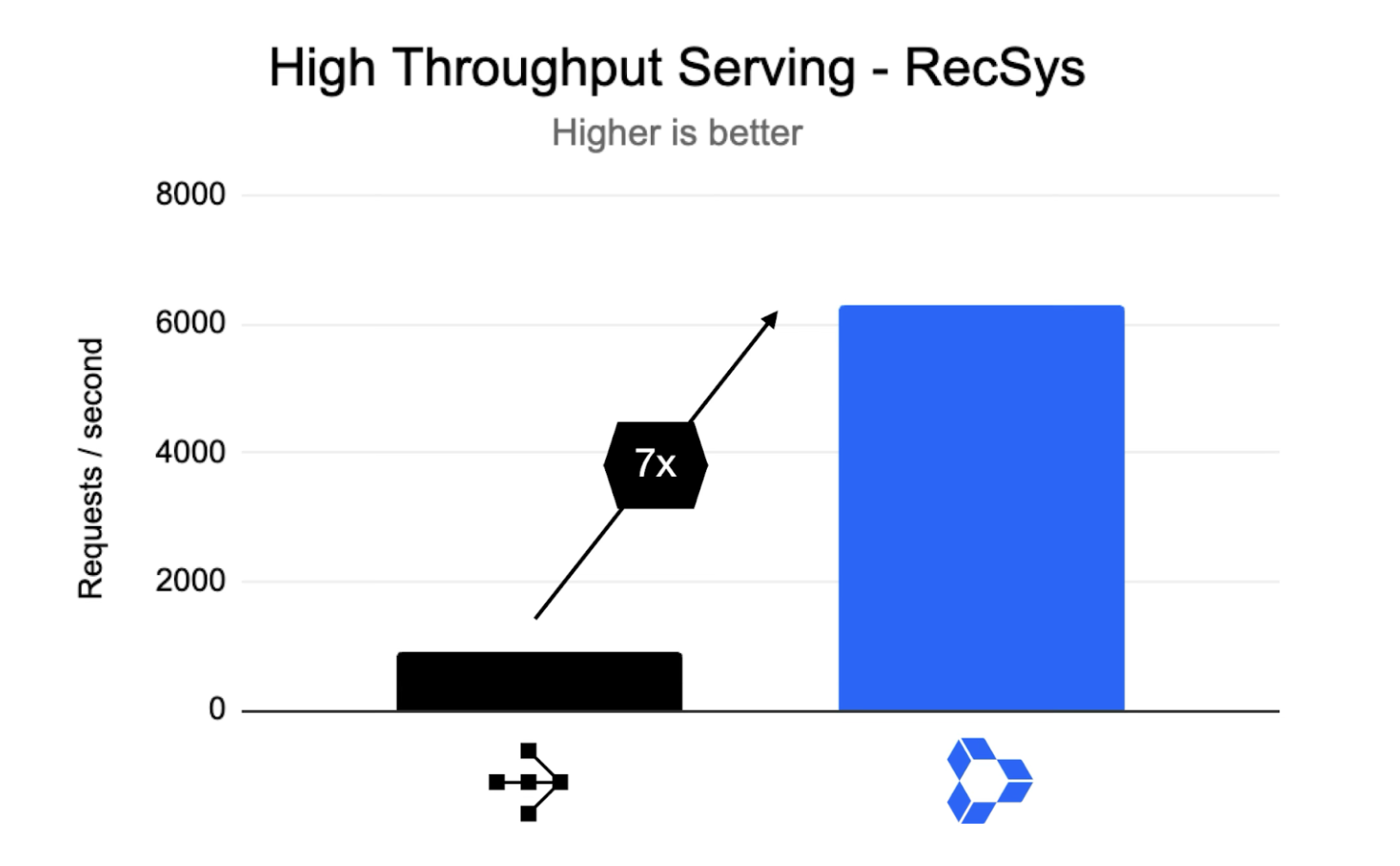

High Throughput Serving

High-throughput serving is essential for real-time systems such as recommendation engines and fraud and anomaly detection. These workloads must handle sudden traffic spikes of thousands of queries per second (QPS) while keeping latency low and performance consistent without over-provisioning.

Ray scales dynamically across replicas and nodes to maintain throughput under load. Anyscale Runtime extends this with direct ingress, streamlining the path from request to inference for faster, more efficient serving. To test QPS scaling, we benchmarked a recommendation model with two Ray Serve deployments: an Ingress service for request handling and candidate generation, and a Ranker service running a deep learning recommendation model on GPU.
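The two-stage layout described above can be sketched without Ray Serve, using plain `asyncio`. The class names `Ingress` and `Ranker` mirror the deployments named in this post, but everything else (the candidate generator, the scoring formula, the round-robin routing) is invented for illustration. This is not the benchmark code and not Ray Serve's API.

```python
import asyncio


class Ranker:
    """Stand-in for the GPU ranking model: orders candidates for a user."""

    async def rank(self, user_id, candidates):
        await asyncio.sleep(0)  # placeholder for model latency
        scored = [(item, (user_id * 31 + item * 17) % 100) for item in candidates]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return [item for item, _ in scored]


class Ingress:
    """Handles requests, generates candidates, and forwards them to a ranker."""

    def __init__(self, rankers):
        self.rankers = rankers
        self._next = 0

    def _candidates(self, user_id):
        # Toy candidate generation: five item ids derived from the user id.
        return [user_id + offset for offset in range(1, 6)]

    async def handle(self, user_id, top_k=3):
        # Round-robin across ranker replicas.
        ranker = self.rankers[self._next % len(self.rankers)]
        self._next += 1
        ranked = await ranker.rank(user_id, self._candidates(user_id))
        return ranked[:top_k]


async def main():
    ingress = Ingress([Ranker(), Ranker()])
    return await asyncio.gather(*(ingress.handle(user_id) for user_id in range(4)))


responses = asyncio.run(main())
print(responses)
```

Splitting the service this way lets the cheap request-handling stage and the expensive ranking stage scale independently, which is the reason the benchmark fixes a replica ratio between the two.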

Reproducible benchmark recipe: GitHub

Performance: We used Locust to simulate user requests. In the largest run, with 4 GPU replicas and a 1:2 ratio between replicas of the Ranker and Ingress deployments, Anyscale Runtime supported 6300 req/s vs. 900 req/s with open-source Ray (7x improvement)

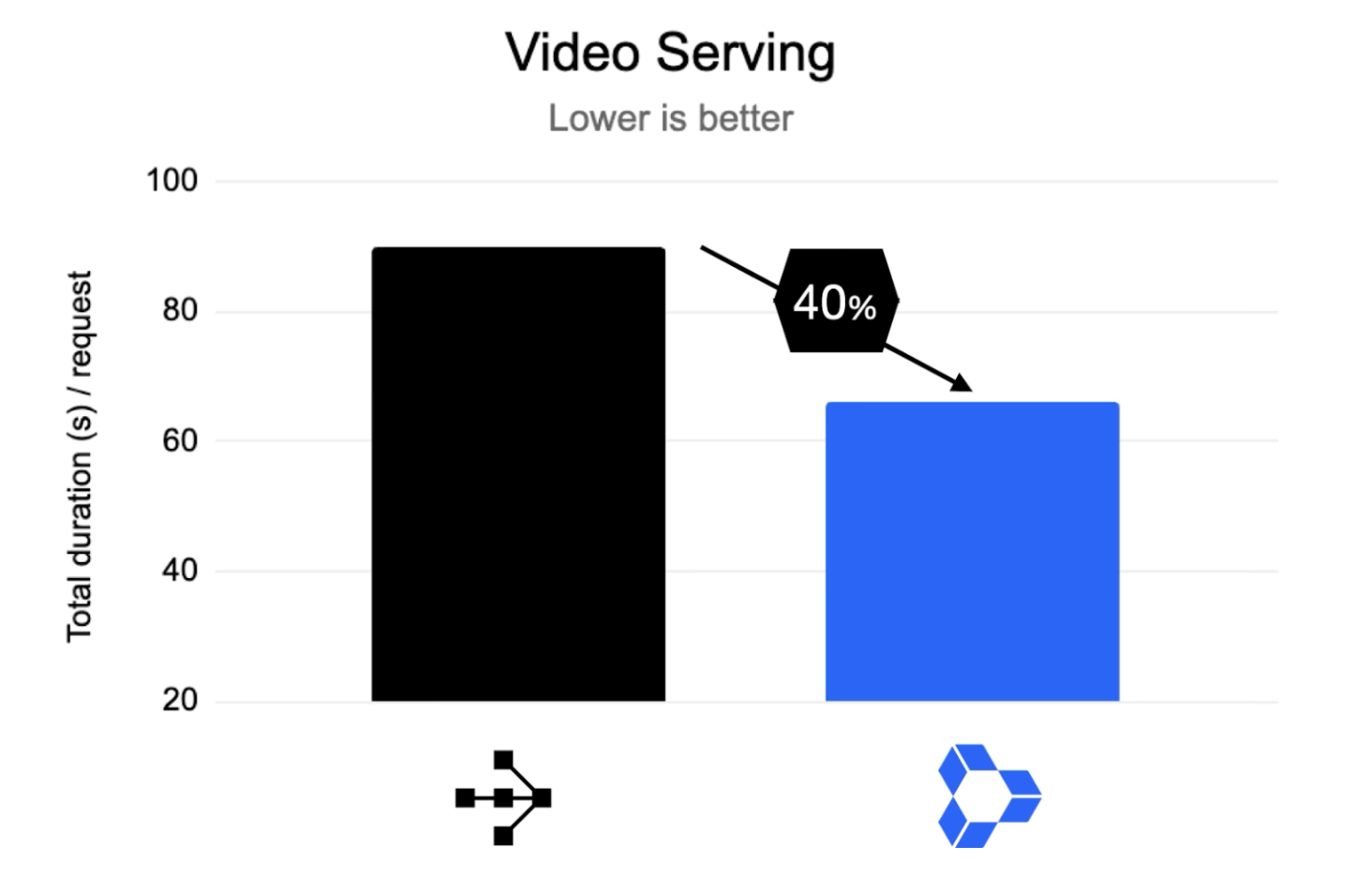

Online (latency-sensitive) video processing

Real-time video processing is challenging because it combines large datasets that require heavy compute with tight latency constraints, yet it’s increasingly essential for multimodal applications that “see,” from object detection to autonomous systems. These workloads run complex, heterogeneous pipelines spanning CPU and GPU stages, for example: video input → chunking → frame extraction → object detection → results.

Reproducible benchmark recipe: GitHub

Performance: Two inference scenarios were implemented: chunked inference (processing each chunk independently) and combined inference (processing all frames together for applications that require merging the entire video). In both scenarios, Anyscale Runtime delivered a ~40% improvement over open-source Ray.
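The difference between the two scenarios is easiest to see in a toy example. In the sketch below, frames are just integers and the "detector" flags multiples of three; both are stand-ins invented for illustration, not the benchmark's video decoding or object detection model.

```python
def chunk(frames, size):
    """Split a frame sequence into consecutive chunks of at most `size` frames."""
    return [frames[i:i + size] for i in range(0, len(frames), size)]


def detect(frames):
    # Stand-in detector: "finds an object" in frames whose id is divisible by 3.
    return [frame for frame in frames if frame % 3 == 0]


video = list(range(10))  # ten fake frames
chunks = chunk(video, size=4)

# Chunked inference: each chunk is processed independently, one result per chunk.
chunked_results = [detect(c) for c in chunks]

# Combined inference: all frames are merged first, one result for the whole video.
combined_result = detect([frame for c in chunks for frame in c])

print(chunked_results)
print(combined_result)
```

Chunked inference can return results as soon as each chunk finishes, while combined inference has to wait for every frame before it can produce anything.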

What’s Next

From multimodal data preprocessing to large-scale training and high-throughput serving, these benchmarks show how Anyscale Runtime pushes the limits of performance, reliability, and efficiency for any AI workload.

And we’re just getting started. Our roadmap includes many more critical workloads, because our goal is for Anyscale Runtime to be the best compute engine for any AI workload.

Resources

Explore Anyscale Runtime using the recipes on GitHub

Contact us to help you optimize your workload

Check out other announcements at Ray Summit 2025