Announcing Anyscale First-Party Offering on Azure: Build, Run and Scale AI-Native Workloads Securely on Azure Infrastructure

Enterprises are entering a new wave of digital transformation defined by the shift to becoming AI-first organizations: organizations that not only embed AI into every decision, process, and experience but also build all of them with AI at the core.

Yet delivering on this vision isn’t simple. Building AI platforms that can handle the processing requirements of the entire spectrum of AI, from classical machine learning to multimodal and agentic systems, exposes the limits of legacy infrastructure. Compute platforms designed for structured datasets, and architectures siloed into separate batch-processing and online-inference systems, often struggle with today’s challenges: massive model sizes, diverse data modalities, and GPU-intensive training and inference.

Ray’s AI-native compute framework is built to solve these challenges. Ray’s scale and performance have been proven to meet the processing demands of some of the largest AI workloads. Yet this powerful distributed compute framework needs a platform around it so that teams can quickly build, manage, and productionize their AI workloads.

To help Azure customers accelerate their ambitious AI initiatives, we’re excited to announce the private preview of Anyscale as a first-party offering on Azure: an AI-native compute service, co-engineered with Microsoft and delivered as a fully managed service on Microsoft Azure. It makes it effortless to build, deploy, and scale AI-native workloads, powered by Ray, all within the perimeter of the secure and trusted Azure infrastructure.

As part of this launch, Azure customers get:

First-party service experience: Provision and manage Anyscale directly from the Azure Portal, and pay for Anyscale with existing commitments.

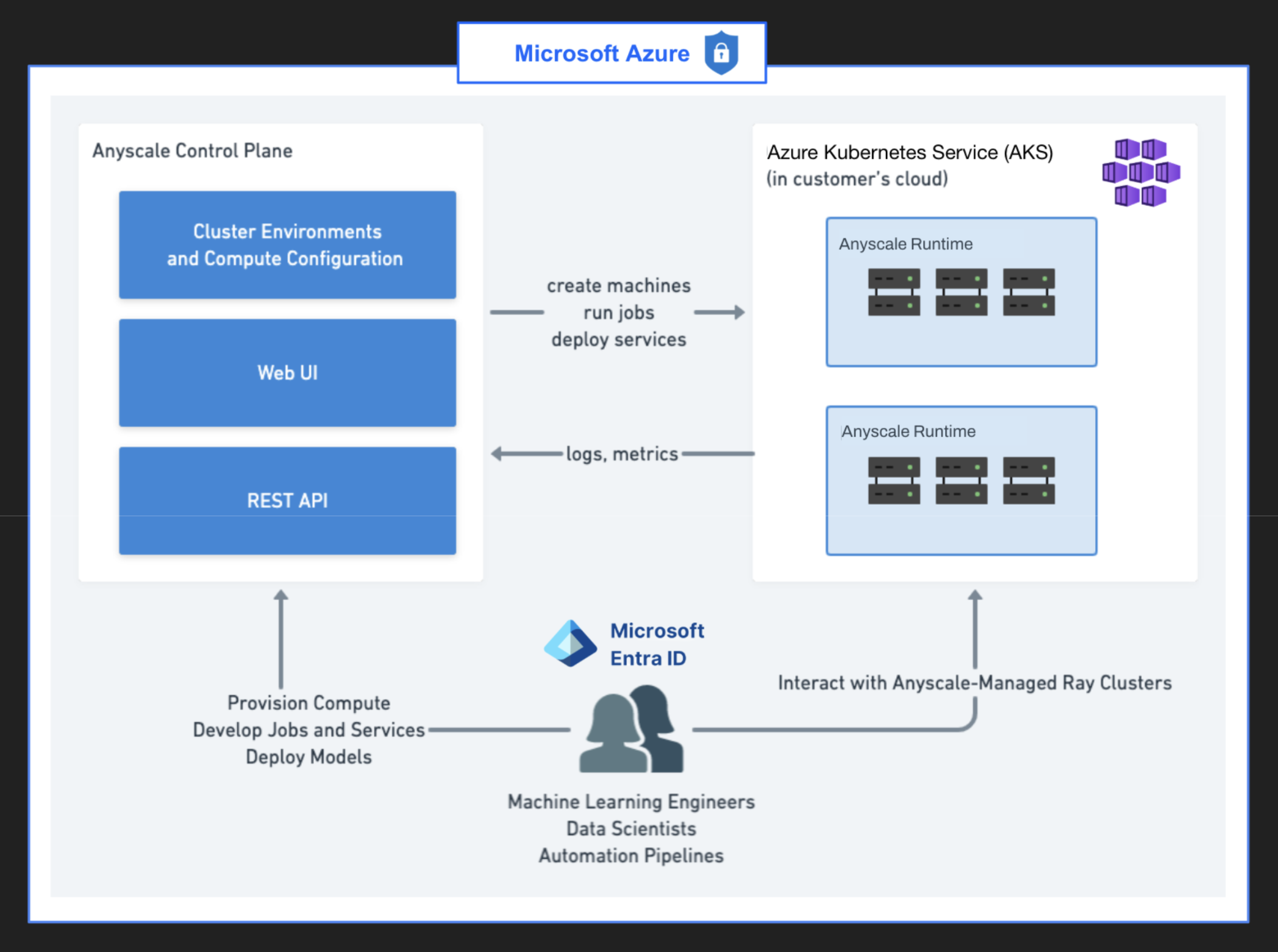

Secure AI from dev to prod: Anyscale integrates natively with Azure’s security frameworks, including Microsoft Entra ID for authentication. Anyscale-managed Ray workloads run on Azure Kubernetes Service (AKS) with your organization’s existing IAM policies, secure data access, and unified governance.

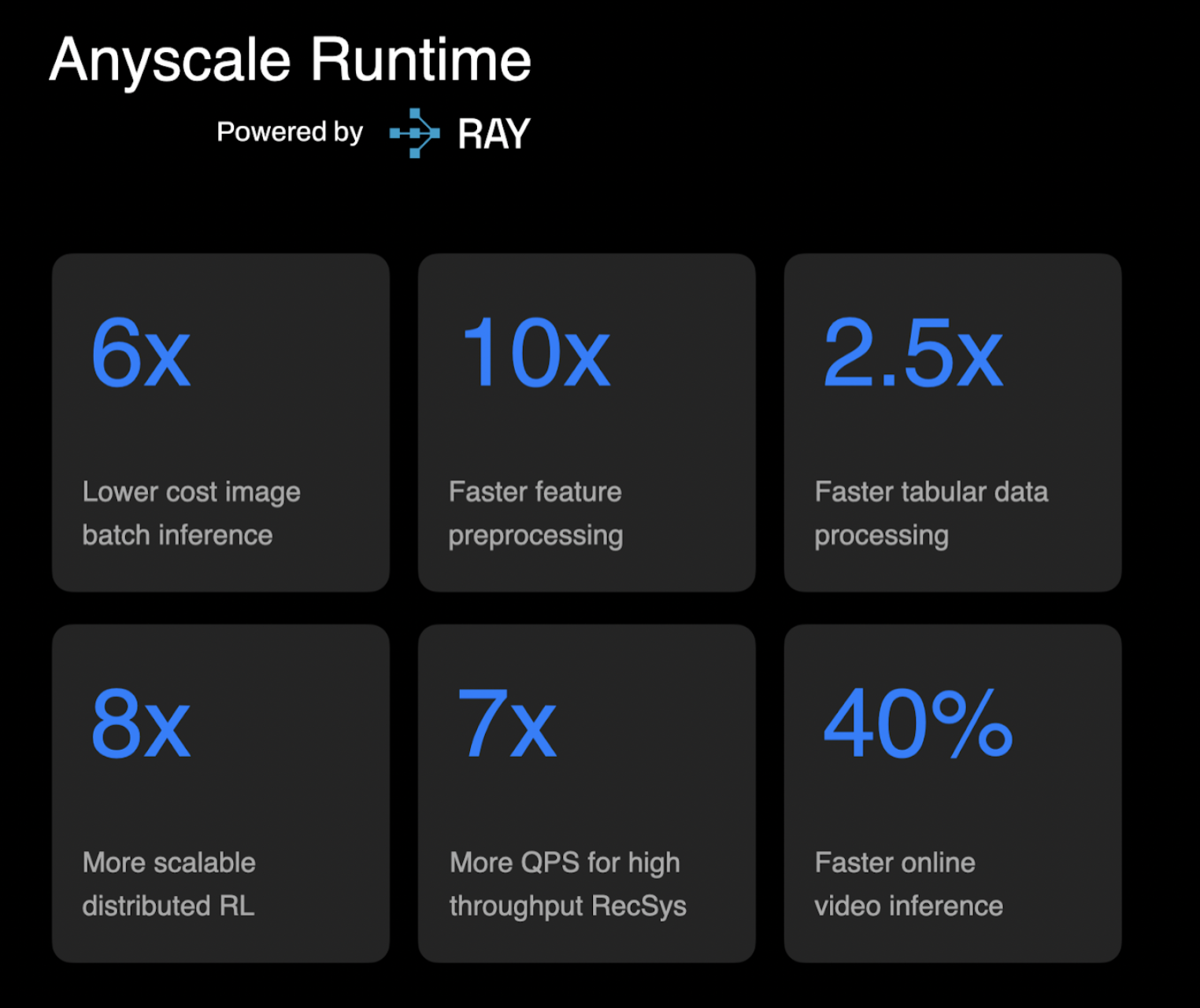

Cost-efficient and performant processing: Execute workloads with the Anyscale Runtime, a Ray-compatible runtime optimized for faster performance and higher reliability at scale than open-source Ray. This runtime is available on every Anyscale-managed compute cluster, whether it backs a workspace, a job, or a service. To see how it achieves 10x faster feature preprocessing and batch image inference, check out the Anyscale Runtime announcement blog.

To get access to the preview, submit interest via this form.

First-Party Service Experience: Effortlessly Provision and Manage Anyscale Alongside Other Azure Services

With the natively integrated Anyscale service, developers can now provision and manage Ray-based workloads directly within the Azure Portal, just like any other Azure service, so that organizations can:

Deploy Anyscale via a guided click-through process and manage those deployments from within the Azure Portal.

Pay for Anyscale through unified Azure billing, allowing enterprises to draw down existing Microsoft Azure Consumption Commitments (MACC).

Anyscale clouds run in Azure alongside your existing Azure-native storage, networking, and other services. With this tightly integrated experience, Azure developers can spin up powerful distributed AI environments in minutes without the operational overhead of manually setting up networking and other infrastructure. Setting up the Anyscale operator, which manages the relationship between the Anyscale control plane and your Kubernetes cluster, takes just a few clicks because it is installed as an AKS extension; there is no need to install it manually via a Helm chart.

Securely Build and Productionize Your AI Alongside Your Data

Anyscale integrates seamlessly with Azure’s security frameworks for secure authentication and authorization. Managed Ray workloads run directly within the customer’s Azure Kubernetes Service (AKS) environment, ensuring enterprise-grade security and compliance by design. Azure customers can extend their existing access controls with Microsoft Entra ID across Anyscale resources, including clouds, workspaces, jobs, services, and more, to help ensure security and compliance are built into the compute foundation of enterprise AI.

With a bring-your-own-cloud (BYOC) model, teams can develop and deploy AI-native workloads alongside their data and applications. This architecture delivers:

Unified security and compliance across AI and other enterprise applications

Seamless development-to-production flow, reducing friction in deploying AI pipelines, models, and agents

Achieve Faster, More Cost-Efficient Performance Than Open-Source Ray

Through its deep integration with Azure, customers gain access to the Anyscale Runtime, a fully Ray-compatible runtime that enhances performance and reliability without requiring code changes. This helps teams extract more value from every core and get more ROI from every AI initiative.

This runtime delivers:

Faster performance (e.g., 10x faster feature processing) for multimodal data (video, image, text, and document processing), large-scale training, and latency-sensitive serving systems such as agentic apps.

Greater stability and scalability for long-running and other large-scale processing, with job checkpointing (pause/resume batch processing), mid-epoch resume (pause/resume training jobs), and dynamic memory management that reduces spilling and out-of-memory (OOM) errors for training and inference workloads.

For full details on Anyscale Runtime, check out the announcement and benchmarks blog.

How to Get Started with Anyscale from the Azure Portal

First, request access to the private preview.

A click-through process then guides you through the following steps:

Head to the Azure Portal and click Anyscale under the AI + machine learning service category.

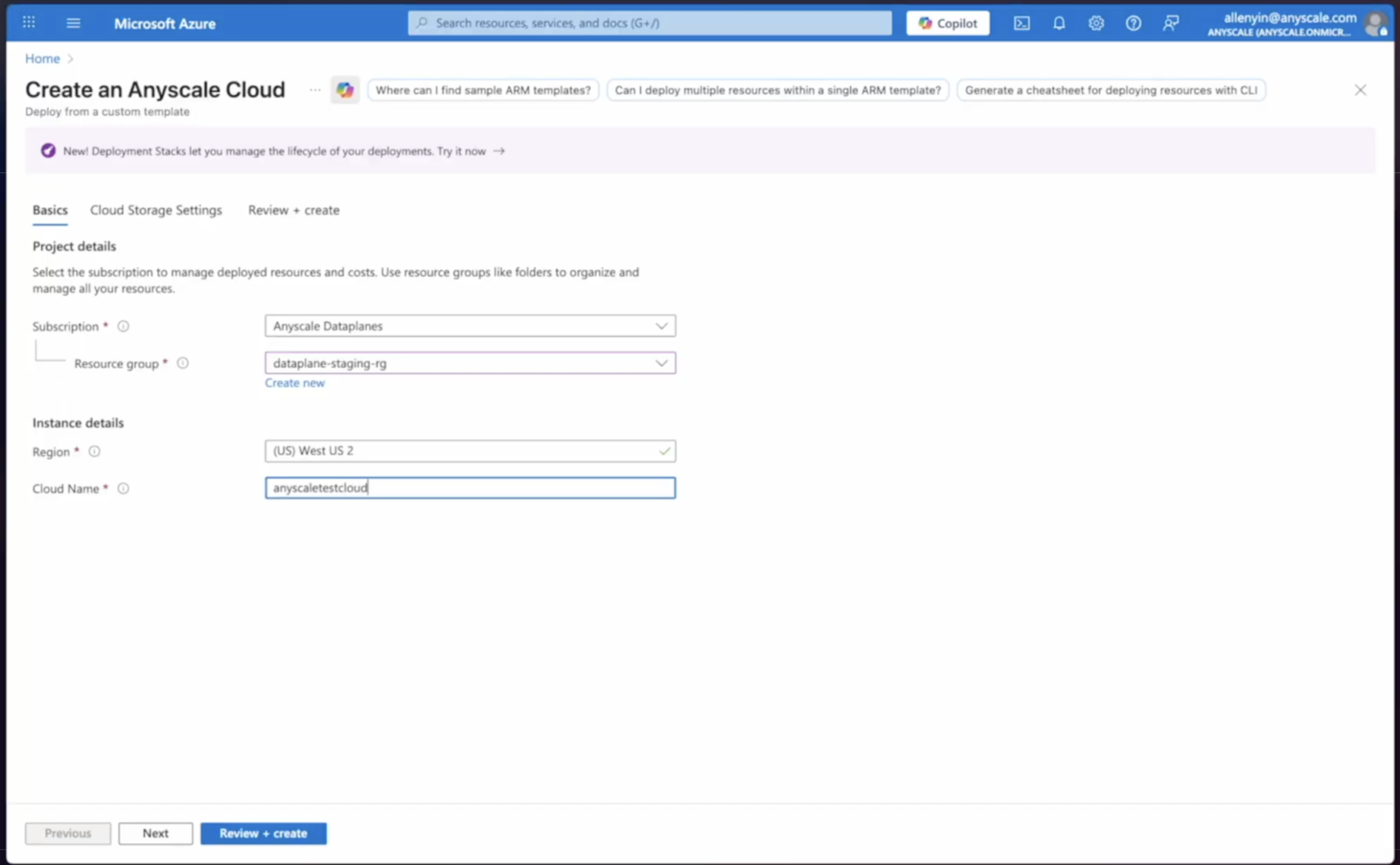

Select the subscription you want to use for billing, then complete the remaining steps, such as choosing your resource group and the region where you want to deploy.

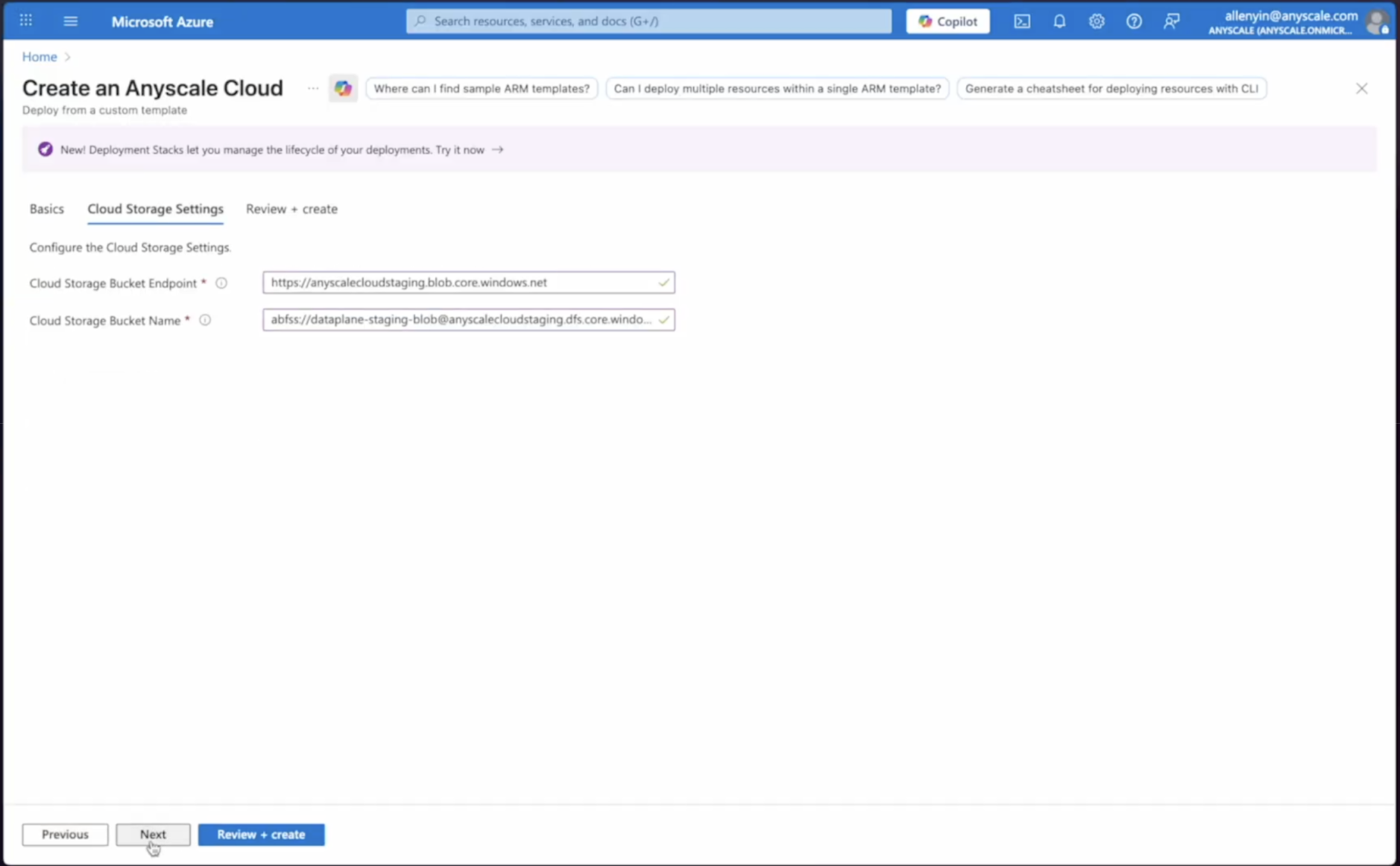

Next, specify the cloud storage your workloads will read data from.

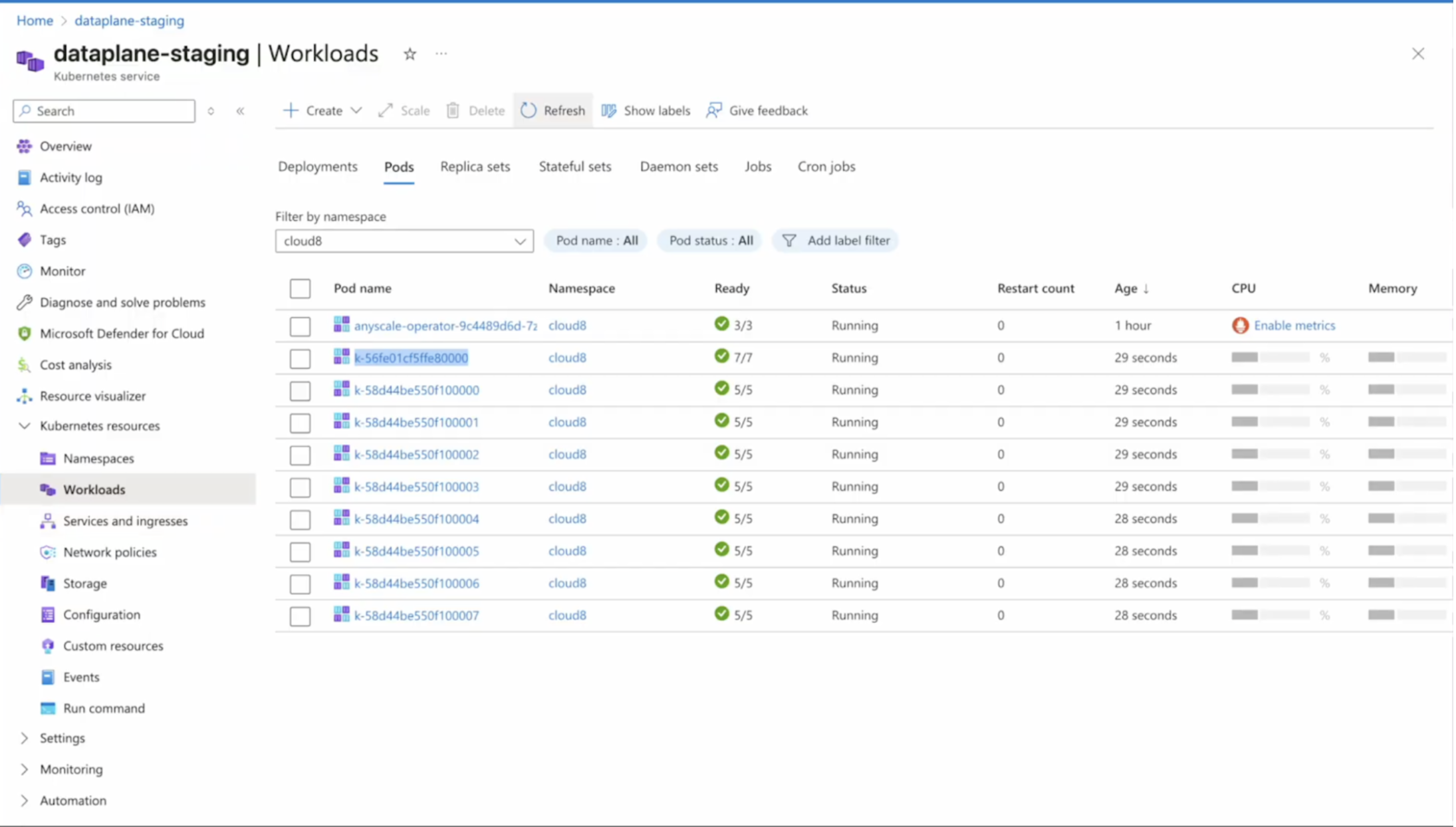

Under the hood, Anyscale deploys the Anyscale operator as an AKS extension, so you don't have to install it manually via a Helm chart.

This operator manages the relationship between the Anyscale control plane and your Kubernetes cluster.

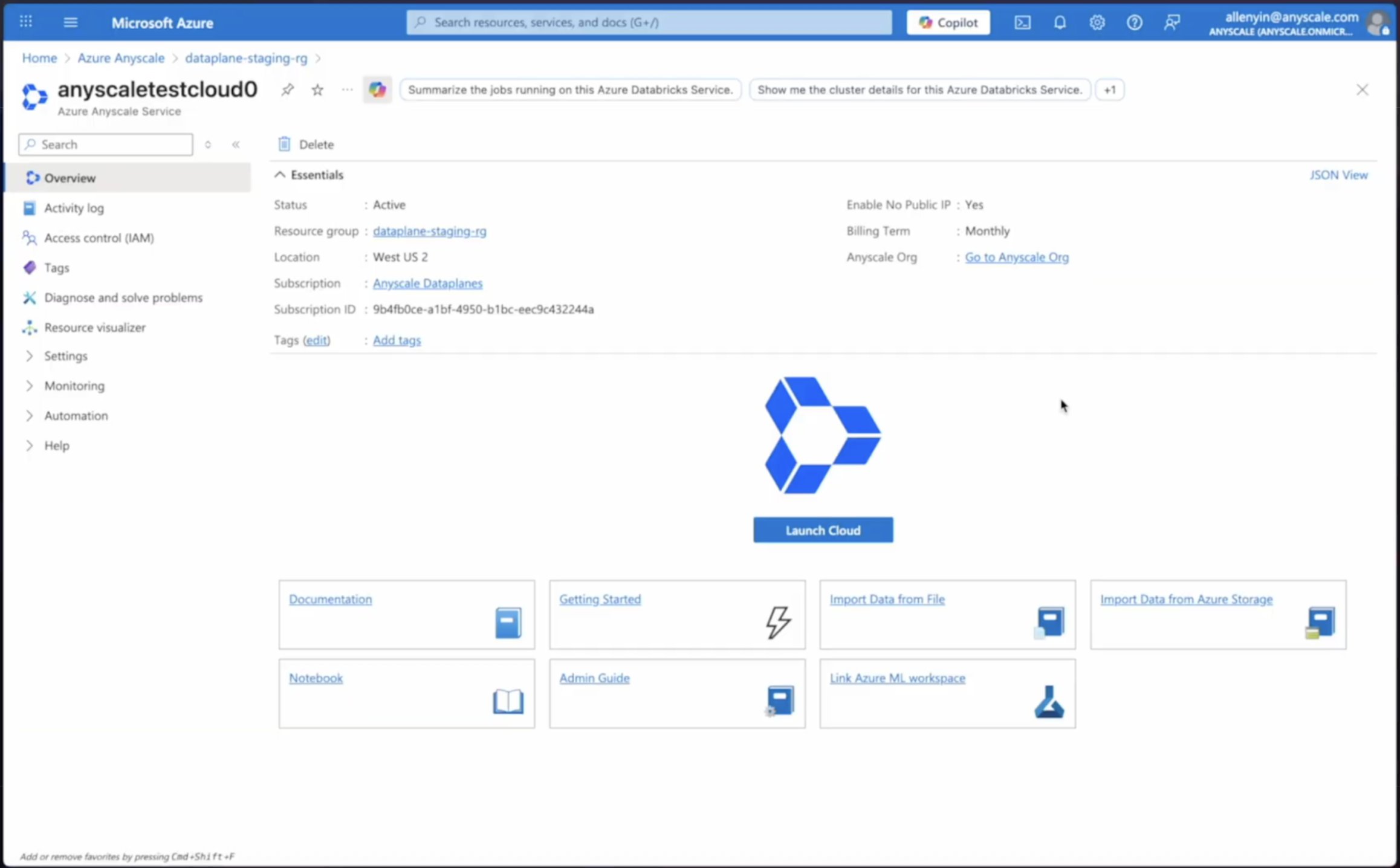


Your cloud is now ready to use. If needed, you can adjust access controls, tags, and other settings directly in the Azure Portal.

Once you complete the guided step-by-step workflow, you are ready to start developing!

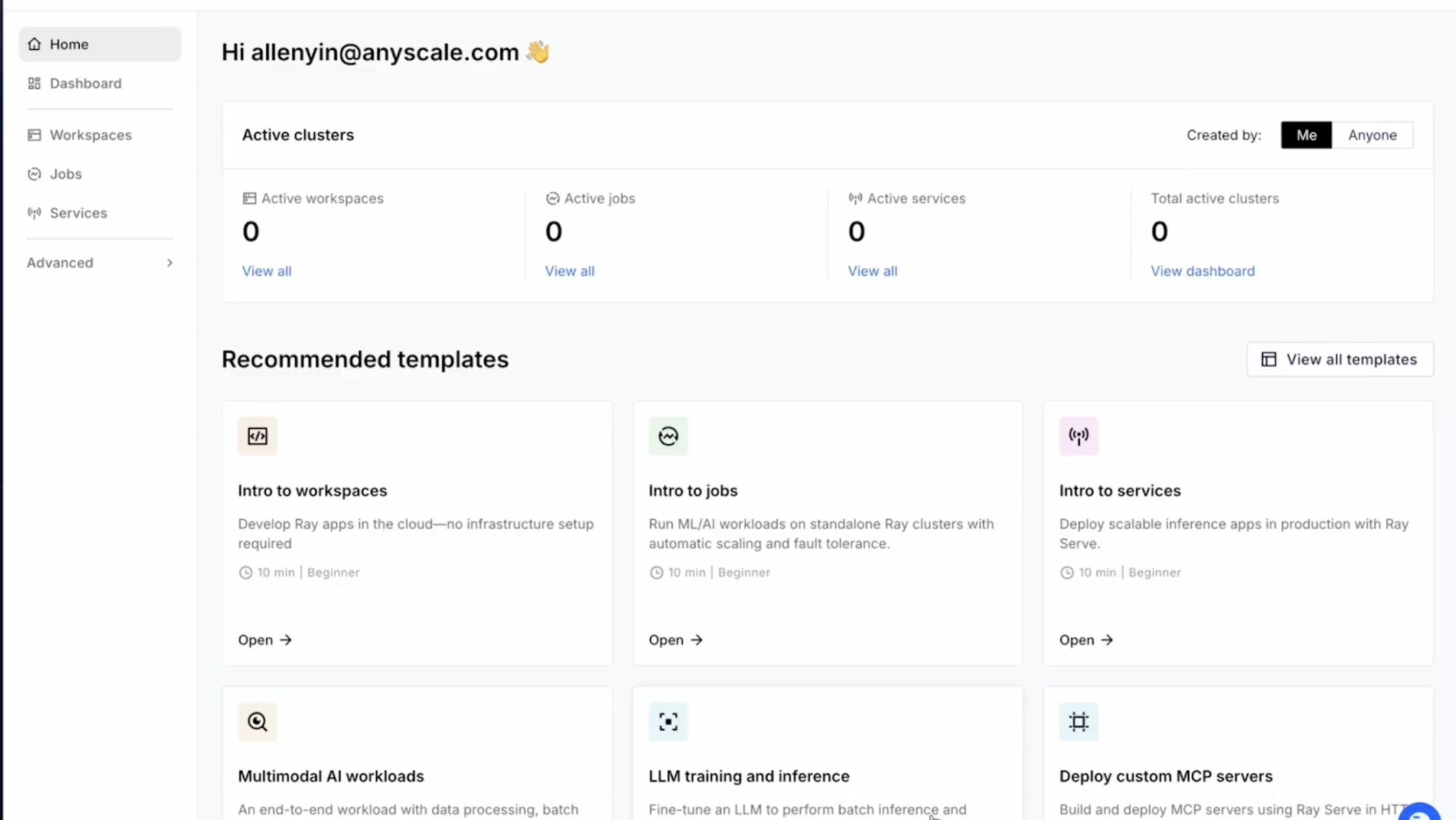


After completing this process, you can launch your Anyscale cloud. Your organization can have multiple Anyscale clouds, each with independent tracking of resources for billing and consumption. Within each cloud, you can use Anyscale projects to organize and manage different compute resources.

With Anyscale deployed from the Azure Portal, you can start using the service. Get started with one of the existing templates, or build your own workload using the Anyscale documentation.

You can monitor status and other metrics from the Azure Portal, just as you would for any other AKS pod.


Resources:

Request access to the private preview

Subscribe to Anyscale in the Azure Marketplace

Check out Anyscale templates to see code examples for common workloads

Learn more about the Anyscale platform