Direct Preference Optimization with Synthetic Data on Anyscale

Editor's Note: This blog has been edited for clarity; Anyscale has deprecated LLMForge and recommends using LLaMA Factory on Anyscale for fine-tuning.

In this post, we'll explore preference tuning of LLMs through a practical case study on summarization. Our approach highlights the importance of having a framework for iterative data generation, processing, training, and evaluation. We'll use Ray and Anyscale as our compute platform to tackle this task. Key takeaways:

Synthetic Data Generation: This has become a very powerful methodology for scaling high quality datasets. AI models have become powerful enough to be used as data augmenters and judges to seed the improvement of the next generation of models. We lay down a detailed synthetic data generation pipeline for building a fully synthetic dataset for preference tuning, and address associated challenges. We’ll also cover how Ray Data + vLLM can be used for scaling this stage while allowing for rapid experimentation.

DPO Training and Insights: Direct Preference Optimization (DPO) is a method for tuning LLMs to align their outputs with human preferences rather than with supervised targets. It has become a widely adopted preference tuning algorithm due to its balanced trade-off between complexity and effectiveness. Perhaps most notably, DPO has been applied in the post-training of recent competitive open-weight models (e.g., Llama 3.1). We have added support for DPO to our LLM suite on the Anyscale platform, allowing you to build your own preference-tuned models through an intuitive API. Here we'll cover some modeling insights from experimenting with DPO for summarization.

Evaluation: Finally, we'll use Ray Data + vLLM for batch inference to evaluate the generated summaries at scale. This step determines the quality of the models, so it is important to keep it lean and generalizable. At the same time, evaluation has to be task-specific, and your training dataset needs to be curated in lockstep with your objectives. We will cover some key details around evaluation that impact how preference functions are set up.

Data generation, training, and evaluation are iterative processes that AI-focused companies must perform repeatedly to enhance their models and systems. Ray and Anyscale help keep these operations scalable and flexible, while providing a great developer experience.

Overview

Alignment of LLMs has traditionally been broken down into two sequential post-training stages: supervised fine-tuning (SFT) followed by preference tuning (aka RLHF). SFT requires high-quality data collection where each data sample illustrates the behavior we would like the LLM to imitate exactly. While for some tasks, like SQL generation and math reasoning (see this post for more examples), it is feasible to collect ground-truth data, this approach does not scale easily to subjective use cases (e.g., chat and summarization).

On the other hand, preference tuning only requires information about whether a given response is preferred to another response. Each data sample consists of a chosen and rejected completion for a given prompt, such that the chosen completion is preferred over the rejected completion. This gives preference tuning two key advantages over traditional SFT approaches:

Scalable Data Generation: The responses to prompts can be generated very cheaply by directly sampling them with the LLM itself, instead of using expensive human-generated responses. Moreover, determining which response is preferred is a much easier task than generating the entire “ground-truth” response from scratch. Oftentimes, this preference can be computed using synthetic LLM judges(1)(2), making the process even cheaper.

On-Policy Data: If we sample the preference data from our base LLM, we can directly address problems that are specific to the model by allowing it to learn from its mistakes. For instance, if a model is not very knowledgeable about a specific topic, we can steer the model away from hallucination by preferring responses that only contain factual information and rejecting faulty responses. On the other hand, SFT may encourage our model to output hallucinations since the model is not trained to avoid nonfactual statements. Instead, it learns to imitate ground-truth data by outputting responses that seem factual.

Given these differences, SFT alone might not be effective for continuously improving domain-specific models. Summarization is a prime example of such a problem: it is very difficult to specify exactly how you want the model to write its outputs. Prompt engineering or even supervised fine-tuning may therefore be of limited use, because ground-truth examples are hard to produce in the first place, whereas stating a preference in a head-to-head comparison of two generated responses is much easier.

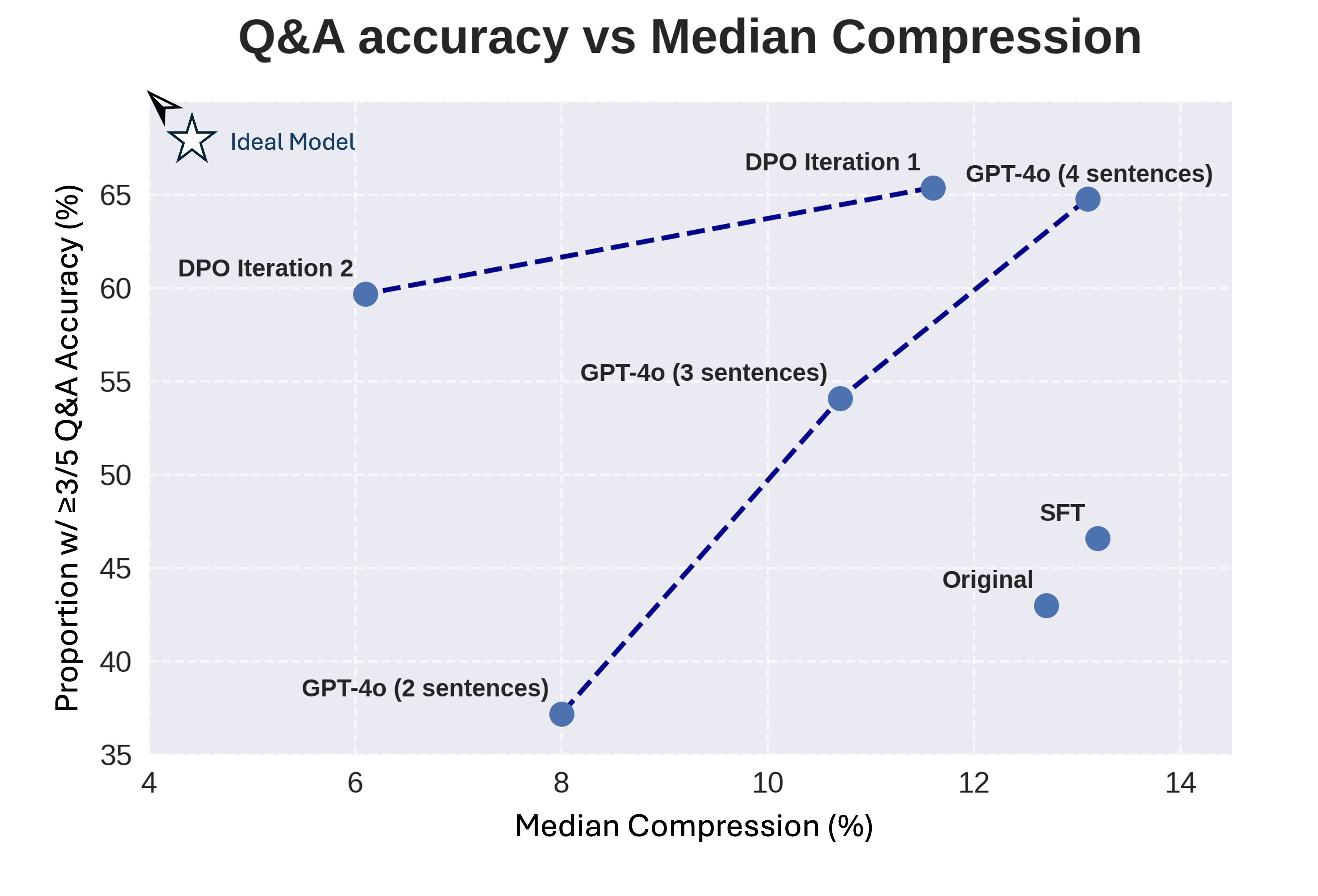

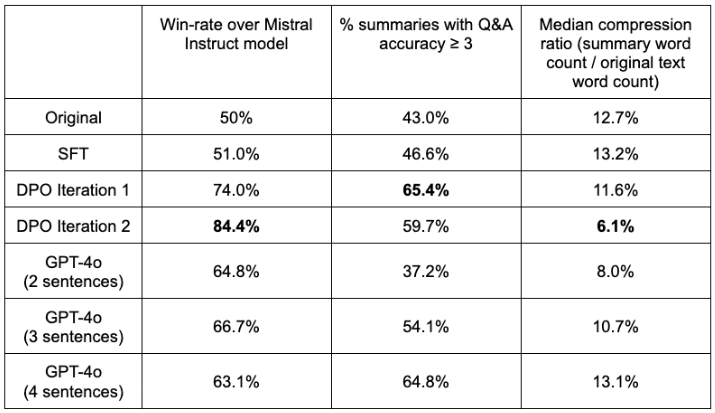

We applied DPO to Mistral-7B-Instruct-v0.1 (a relatively weak model by today's standards) to produce good, dense, and informative summaries of CNN articles. Summarization is a multi-objective problem: minimize word count while preserving important information. Figure 1 highlights the results, plotting Q&A accuracy on the summaries against compression ratio. An ideal model would fall in the upper-left corner of this plot.

The figure also shows where the original model and its SFT variant land, and how a strong closed-source model such as GPT-4o trades off these two objectives under different prompts. Our DPO models achieve a better trade-off and better overall results than GPT-4o with prompting alone. This shows the power of preference tuning on this particular problem, and we believe the approach generalizes to many other niche domains.

Figure 1. The trade-off between compression ratio and Q&A accuracy for different methods. A lower compression ratio and higher accuracy are better. a) SFT on the chosen examples slightly increases the original model's accuracy in exchange for longer summaries. b) GPT-4o baselines are prompted to output a summary in 2, 3, or 4 sentences, clearly trading off compression ratio against accuracy. c) DPO variants outperform the baselines on this problem, showing a better trade-off between compression and accuracy.

Introduction to preference tuning

Data format

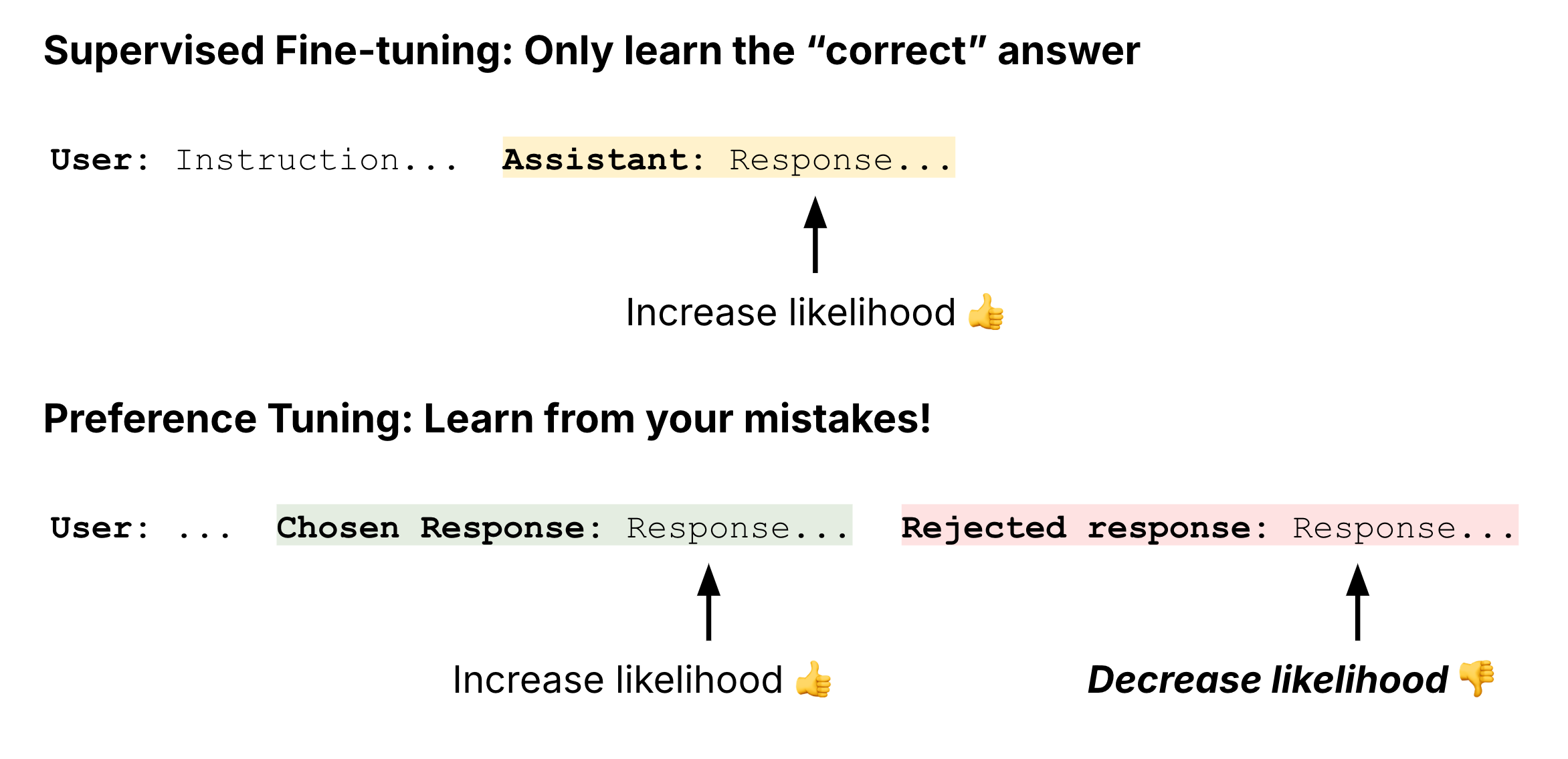

Figure 2. Different data format requirements for supervised fine-tuning vs. preference learning. In supervised fine-tuning we simply increase the likelihood of future tokens conditioned on the prefix, whereas in preference tuning we increase the margin between the likelihood of the chosen tokens and that of the rejected tokens, conditioned on the same prefix.

For preference tuning, each example contains a pair of messages such that the “chosen” response is preferred over the “rejected” one. While supervised fine-tuning increases the likelihood of a single “ground-truth” answer, preference tuning increases the margin of the likelihood between these chosen and rejected responses. This means that the model not only learns to mimic “correct” responses, but also to decrease the likelihood of lower-quality ones. Since both responses are generated from a shared instruction prefix (the first “user” message), the LLM is able to learn from fine-grained differences in the assistant responses.
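To make the difference in Figure 2 concrete, the sketch below contrasts an SFT record with a preference record in a chat-message layout. The field names (`messages`, `chosen`, `rejected`) and the helper `shared_prefix_ok` are illustrative assumptions, not necessarily the exact schema a given training library expects.

```python
# Hypothetical chat-style records; field names are illustrative assumptions.
prompt = [{"role": "user", "content": "Summarize the following text in at most 2 sentences.\n\nText: ..."}]

# SFT: a single target response for the model to imitate.
sft_example = {
    "messages": prompt + [{"role": "assistant", "content": "A short, accurate summary."}],
}

# Preference tuning: two completions that share the same user prefix.
preference_example = {
    "chosen": prompt + [{"role": "assistant", "content": "A short, accurate summary."}],
    "rejected": prompt + [{"role": "assistant", "content": "A long summary that misses key facts."}],
}


def shared_prefix_ok(example: dict) -> bool:
    """Check that chosen and rejected differ only in their final assistant turn."""
    chosen, rejected = example["chosen"], example["rejected"]
    return (
        chosen[:-1] == rejected[:-1]
        and chosen[-1]["role"] == rejected[-1]["role"] == "assistant"
        and chosen[-1]["content"] != rejected[-1]["content"]
    )


print(shared_prefix_ok(preference_example))  # True
```

Keeping the prefix identical is what lets the loss attribute the preference entirely to the differing assistant turn.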

Data Collection

Collecting preference data has two main components: 1) Generating the candidate responses to a set of prompts and 2) Annotating them with preferences by constructing pairs of “chosen” and “rejected” responses to the given prompts. Here are some important things to consider for these two components.

Candidate Response Generation:

Candidate responses can be generated either on-policy or off-policy:

On-Policy: This means that you use the LLM you wish to fine-tune to generate its own data. Preference tuning methods work best when the data is generated directly from the LLM that is being tuned. For a given prompt, one can sample multiple responses at a nonzero temperature and then find preference pairs among these generations. One benefit of this approach is that this process can be applied iteratively (i.e. after each round of preference tuning, new data can be generated from the tuned model to do a new round of tuning). This methodology allows you to truly change the output distribution of the LLM based on its specific shortcomings.

Off-Policy: This is when you construct a preference dataset where candidates are collected from other sources such as other LLMs. For example, systems such as LMSys’s chatbot arena have access to such diverse preferences across many domains. In this case, preference tuning can still be leveraged. However, to bring the LLM under fine-tuning into alignment with the data distribution of the preference data, it is recommended to apply a supervised fine-tuning stage on the “chosen” samples of the dataset.(3) Since off-policy preference tuning often does not work as well as on-policy approaches, we do not focus on it in this blog post, but many of our takeaways can still be applied to the off-policy setting.

Preference Annotation:

There are two ways to go about preference annotation:

Human Preferences: The most straightforward approach is to have human annotators determine which response is better. Whether this is feasible at scale depends on the difficulty of the task at hand and on whether there is a scalable way to recruit and instruct enough high-quality domain-expert annotators to perform such labeling.

Synthetic Data: Another method that is getting a lot of traction–and is much more scalable than collecting human preferences–is to synthetically generate preferences via other more capable general purpose LLMs prompted via techniques such as reflection, guided evaluation, or chain-of-thought. Large foundation models have been increasingly found to show alignment with human preferences(4)(5), making them an appealing choice for more scalably labeling preferences. Such models can be used to directly score and rank responses to a prompt(6) or used to evaluate some downstream task. We take this approach in this blog post for a summarization task.

Preference Tuning Methods

Reinforcement Learning from Human Feedback (RLHF) refers to the family of methods that can leverage preference data for LLM alignment. There have been several variants of this methodology introduced over the last 2 years. Here is a brief summary of the main ones related to this post:

Traditional RLHF

RLHF has traditionally involved two stages:

A reward model is trained to assign a score to a response such that a chosen response will score higher than a rejected response.

A reinforcement learning algorithm such as PPO is used to fine-tune the LLM to maximize the scores assigned by the reward model to its outputs.

Direct Preference Optimization (DPO):

DPO simplifies RLHF by directly fine-tuning the LLM on the paired preference dataset, maximizing the margin between the (reference-normalized) log-probabilities of the chosen and rejected samples. This removes the need for a separate reward model, making the implementation simple and effective.
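For reference, the standard DPO objective can be written as below, where π_θ is the policy being trained, π_ref is the frozen reference model, (x, y_w, y_l) is a prompt with its chosen and rejected responses, σ is the logistic function, and β is the regularization hyperparameter:

```latex
\mathcal{L}_{\mathrm{DPO}}(\pi_\theta; \pi_{\mathrm{ref}}) =
-\,\mathbb{E}_{(x,\, y_w,\, y_l) \sim \mathcal{D}}
\left[ \log \sigma\!\left(
  \beta \log \frac{\pi_\theta(y_w \mid x)}{\pi_{\mathrm{ref}}(y_w \mid x)}
  - \beta \log \frac{\pi_\theta(y_l \mid x)}{\pi_{\mathrm{ref}}(y_l \mid x)}
\right) \right]
```

Minimizing this loss widens the gap between the β-scaled log-ratios of the chosen and rejected responses. Because each log-probability is measured relative to π_ref, the log-ratio plays the role of an implicit reward, so no separately trained reward model is required.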

We have implemented the DPO algorithm in the LLM post-training offering on Anyscale because of its simplicity and effectiveness, as the following case study demonstrates.

Case Study: DPO for Summarization

In this case study, we will tackle the problem of news article summarization with DPO. Summarization is an ideal application for preference tuning since there is no clear “right” answer for summarization; instead, it is much easier to specify preferences on which summary is better than others.

Objective

We design a synthetic summarization preference dataset with the following design goals in mind:

We would like to use a synthetic judge to reduce costs and not rely upon human annotators.

The judge used to evaluate the model should be the exact same as the judge used to generate the preference data. This ensures that our evaluation procedure and training dataset are aligned.

Many existing instruction-tuning evaluations are misaligned with the training preference datasets, making it difficult to properly evaluate models. For instance, while open-source datasets like UltraFeedback are often single-turn, MT-Bench, a widely used instruction-following benchmark, is multi-turn.

The criteria for preferring one response over another should be as objective as possible. This ensures that our evaluation cannot be easily “gamed” or adversarially attacked.

We define two simple objective metrics to evaluate the quality of a summary for a given text:

Word Count: The most obvious metric for summarization is word count, where the goal should be to minimize the output word count. However, only preferring shorter summaries leads the model to over-optimize, producing extremely short summaries with little information. Thus, we add the additional Q&A criteria to ensure enough information is contained in the summary.

Q&A Accuracy: To evaluate the accuracy of the summary, we prompt a separate judge (i.e., a different LLM) to generate five multiple-choice questions about the important details of the original text. We then give the judge only the summary and the questions, and grade the summary by the proportion of questions answered correctly. A good summary should contain enough information for someone to answer these high-level questions.
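Once the judge's answers are available, both metrics take only a few lines. This is a minimal sketch with hypothetical helper names; we assume compression ratio is measured in words relative to the original article, and that an "Unsure" or missing answer counts as incorrect.

```python
from typing import List


def word_count(text: str) -> int:
    """Whitespace-delimited word count."""
    return len(text.split())


def compression_ratio(summary: str, article: str) -> float:
    """Summary length relative to the article, in words (lower = more compressed)."""
    return word_count(summary) / max(word_count(article), 1)


def qa_accuracy(predicted: List[str], answer_key: List[str]) -> float:
    """Fraction of questions answered correctly.

    "Unsure" never matches a letter, and missing predictions are counted
    as wrong because the denominator is the number of questions.
    """
    correct = sum(
        1
        for pred, gold in zip(predicted, answer_key)
        if pred.strip().upper() == gold.strip().upper()
    )
    return correct / len(answer_key)


print(qa_accuracy(["A", "Unsure", "C", "D", "E"], ["A", "B", "C", "D", "A"]))  # 0.6
```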

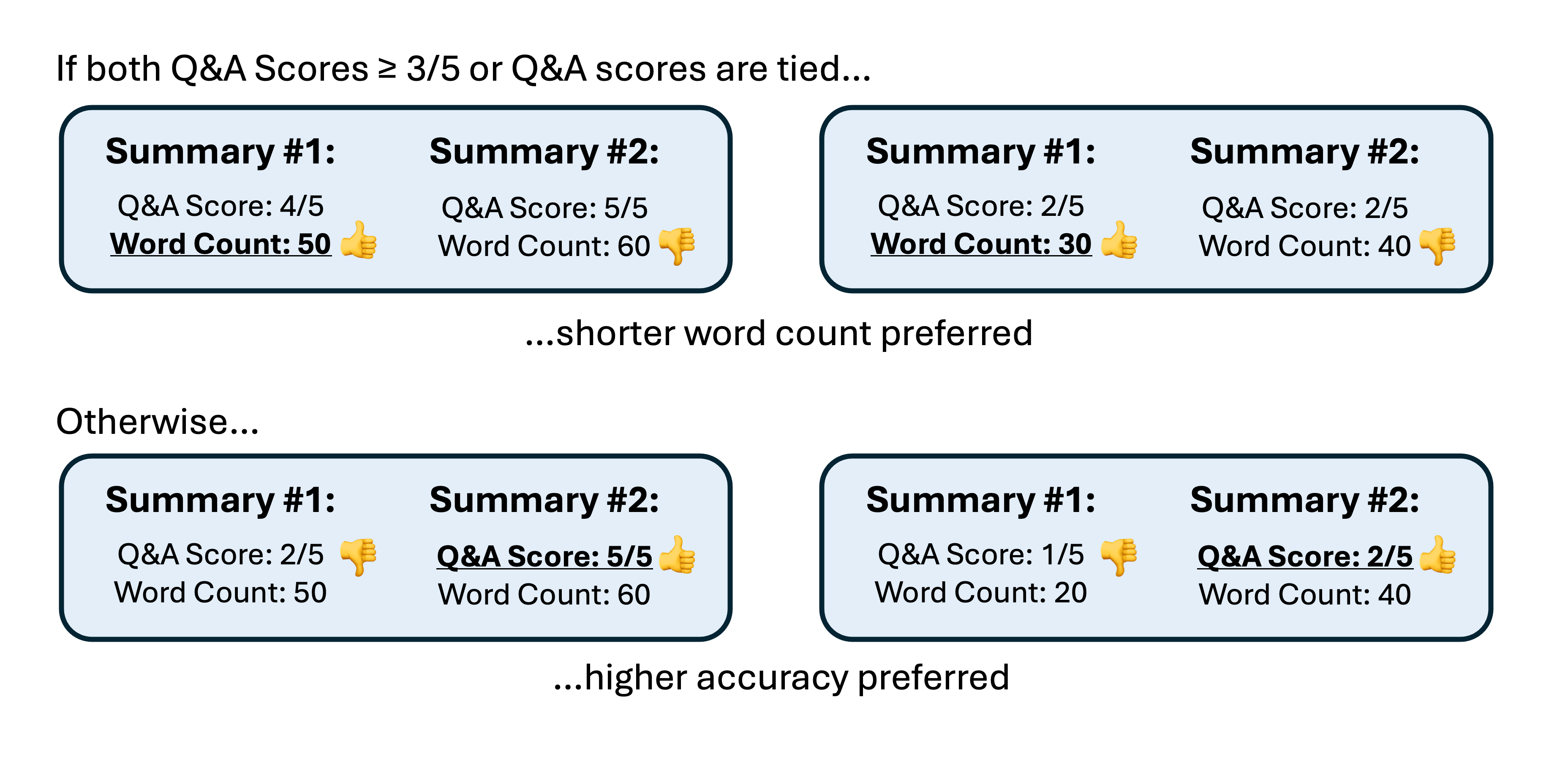

Figure 3. Our preference function, i.e., the heuristics we use to determine which summary in a pair is preferred.

We combine these two metrics into the following preference function. These heuristics ensure that the model does not over-optimize for either accuracy or length, but instead improves both metrics.

If both summary responses attain ≥3 multiple choice questions correct (or the accuracy is tied), we will prefer the shorter response. We do not care about Q&A accuracy beyond 3 correct answers, since the summary should not contain all information from the text.

Otherwise, we select the response that leads to more correctly answered multiple choice questions.
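The two rules above can be written as a small comparison function. This sketch assumes each summary has already been reduced to its number of correct answers (out of five) and its word count; `SummaryScore`, `prefer`, and `preference_key` are hypothetical names, and returning `None` for an exact tie is our assumption. Capping accuracy at three makes the rule equivalent to a lexicographic sort key, which is convenient for ranking many candidates at once.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

ACCURACY_THRESHOLD = 3  # correct answers (out of 5) beyond which accuracy stops mattering


@dataclass(frozen=True)
class SummaryScore:
    num_correct: int  # multiple-choice questions answered correctly from the summary
    word_count: int   # summary length in words


def prefer(a: SummaryScore, b: SummaryScore) -> Optional[SummaryScore]:
    """Return the preferred score of the pair, or None when neither is preferred."""
    both_good = a.num_correct >= ACCURACY_THRESHOLD and b.num_correct >= ACCURACY_THRESHOLD
    if both_good or a.num_correct == b.num_correct:
        # Rule 1: accuracy is good enough (or tied), so the shorter summary wins.
        if a.word_count == b.word_count:
            return None
        return a if a.word_count < b.word_count else b
    # Rule 2: otherwise, the summary with more correct answers wins.
    return a if a.num_correct > b.num_correct else b


def preference_key(s: SummaryScore) -> Tuple[int, int]:
    """Sort key (higher is better) that agrees with prefer() on every pair:
    cap accuracy at the threshold, then favor fewer words."""
    return (min(s.num_correct, ACCURACY_THRESHOLD), -s.word_count)


print(prefer(SummaryScore(4, 40), SummaryScore(3, 30)))  # SummaryScore(num_correct=3, word_count=30)
```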

Data Generation

In this experiment, we will be fine-tuning the Mistral-7B-Instruct-v0.1 model with on-policy data for the summarization task, so we use the same LLM to generate the data. We chose this model to vividly illustrate the level of improvement one can obtain from this methodology. Giving the LLM the following prompt, we generate 10 summaries for each article in a subset of 20,000 CNN news articles from the CNN / Daily Mail dataset.(10) Since we want to have a diverse set of outputs so that we can construct preference pairs, we set the temperature to 0.8.
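The post does not say how the 10 summaries per article are turned into preference pairs. One simple strategy, shown here purely as an assumption, ranks each article's candidates with a preference key (for example, capped accuracy first, then fewer words) and pairs the best candidate against the worst, skipping articles where every candidate ties. `best_vs_worst_pair` and `summary_key` are hypothetical names.

```python
from typing import Callable, Optional, Sequence, Tuple, TypeVar

T = TypeVar("T")


def best_vs_worst_pair(
    candidates: Sequence[T], key: Callable[[T], tuple]
) -> Optional[Tuple[T, T]]:
    """Return (chosen, rejected) as the highest- and lowest-ranked candidates,
    or None if there are fewer than two candidates or they all tie."""
    if len(candidates) < 2:
        return None
    ranked = sorted(candidates, key=key, reverse=True)  # best first
    chosen, rejected = ranked[0], ranked[-1]
    if key(chosen) == key(rejected):
        return None  # no preference signal for this prompt
    return chosen, rejected


def summary_key(s: dict) -> tuple:
    # Capped accuracy (at 3 correct answers), then shorter is better.
    return (min(s["num_correct"], 3), -s["word_count"])


# Hypothetical scored samples for one article.
samples = [
    {"text": "summary A", "num_correct": 2, "word_count": 20},
    {"text": "summary B", "num_correct": 4, "word_count": 35},
    {"text": "summary C", "num_correct": 5, "word_count": 50},
]
pair = best_vs_worst_pair(samples, summary_key)
print(pair[0]["text"], "over", pair[1]["text"])  # summary B over summary A
```

Pairing the extremes maximizes the margin of each pair; using every strictly ordered pair instead would yield more, but noisier, training examples.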

Summarization Prompt:

```
Given the following text, create a very short summary that is at most 2 sentences.

Text:
{text}
```

To judge the responses, we generate the questions and answers using the Llama-3-70B-Instruct model, prompting it to generate questions only about key details of the news article. We then prompt the model to answer the multiple-choice questions using only the summary, so that it does not rely on pre-training knowledge.

Multiple Choice Question Generation Prompt:

```
Given the following text, generate five multiple choice questions with the following format. The questions must be simple enough to be answerable with only general important details that would be included in a short two sentence summary of the text. The questions must be only answerable when given the text and should not be answerable with common knowledge. Do not write questions about minute details in the text, only the most important points.

Format:
Q1) Question
A. Choice 1
B. Choice 2
C. Choice 3
D. Choice 4
E. Choice 5

Q1 Answer: A/B/C/D/E

Q2) Question
A. Choice 1
B. Choice 2
C. Choice 3
D. Choice 4
E. Choice 5

Q2 Answer: A/B/C/D/E

etc...

Text:
{text}
```

Multiple Choice Question Answering Prompt:

1You will be given a text passage followed by multiple choice questions about that passage. Your task is to answer these questions based solely on the information provided in the text. Do not use any external knowledge or make inferences beyond what is explicitly stated in the passage.

2

3Here is the text:

4

5{summary_generation_raw_model_output}

6

7Here are the questions:

8

9{qa_generation_questions}

10

11Carefully read the text and each question. For each question:

12

131. Analyze whether the text contains the necessary information to answer the question.

142. If the information is present, select the correct answer from the given options.

153. If the information is not present or is insufficient to determine the answer, respond with "Unsure."

16

17Format your answers as follows:

18

19Q1) [Your answer (A./B./C./D./E.) or "Unsure."]

20Q2) [Your answer (A./B./C./D./E.) or "Unsure."]

21Q3) [Your answer (A./B./C./D./E.) or "Unsure."]

22(Continue for all questions)

23

24Remember:

25- Only use information explicitly stated in the given text.

26- Do not make inferences or use external knowledge.

27- If the text does not provide enough information to answer a question confidently, respond with "Unsure."

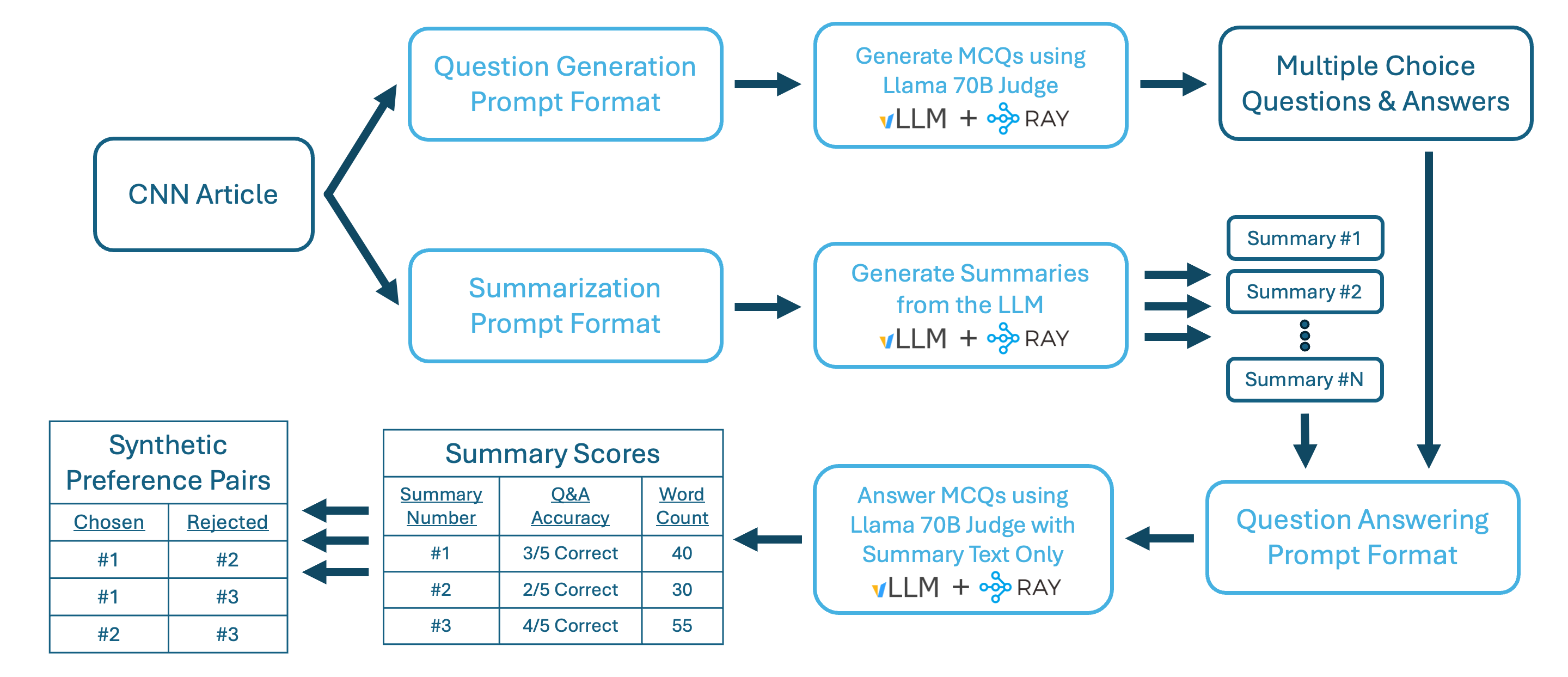

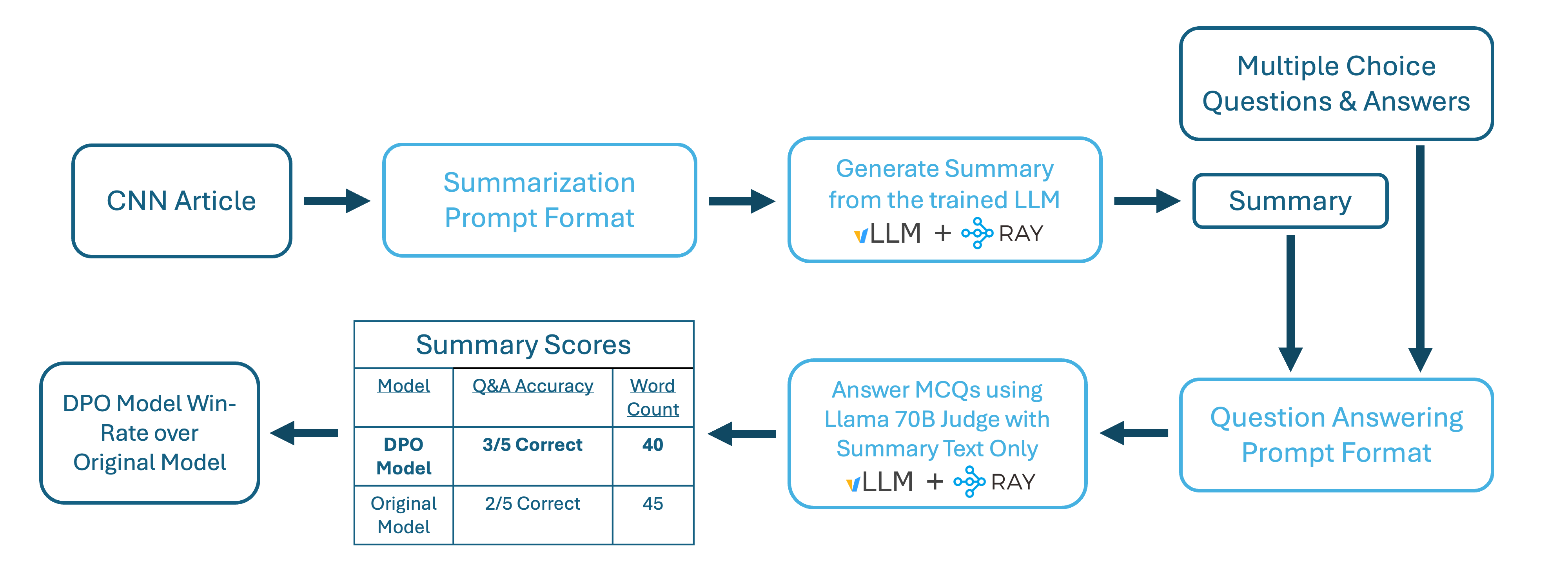

28- Provide only the letter of the correct answer (A, B, C, etc.) or "Unsure." for each question. Figure 4. Full schematic of the data generation pipeline.

Figure 4. Full schematic of the data generation pipeline.In order to scalably generate the dataset, we can leverage Ray Data to chain together the summary generation and multiple choice question answering, and scale each step with different computational resources across multiple GPUs. To maximize throughput, we leverage vLLM to run inference on each LLM in a streaming manner. You can replicate our data generation process by running our end-to-end code walkthrough here.
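Between the LLM stages, each raw output has to be parsed before it can be scored. A minimal sketch, assuming the judge follows the line formats requested in the prompts above (`Q1 Answer: B` in the question-generation output, and `Q1) B.` or `Q1) Unsure.` in the answering output). The regexes and function names are our own, and a production pipeline would also need to handle malformed outputs; in a Ray Data pipeline, functions like these could run in a CPU-only map step between the GPU inference stages.

```python
import re
from typing import Dict

# Assumed line formats, taken from the two prompts above.
ANSWER_KEY_RE = re.compile(r"^Q(\d+)\s+Answer:\s*([A-E])\b", re.MULTILINE)
JUDGE_ANSWER_RE = re.compile(r"^Q(\d+)\)\s*\[?(Unsure|[A-E])\b", re.MULTILINE)


def parse_answer_key(question_output: str) -> Dict[int, str]:
    """Map question number -> correct letter from the question-generation output."""
    return {int(num): letter for num, letter in ANSWER_KEY_RE.findall(question_output)}


def parse_judge_answers(judge_output: str) -> Dict[int, str]:
    """Map question number -> letter (or "Unsure") from the answering judge's output."""
    return {int(num): answer for num, answer in JUDGE_ANSWER_RE.findall(judge_output)}


def count_correct(answer_key: Dict[int, str], judge_answers: Dict[int, str]) -> int:
    """Missing or "Unsure" answers count as incorrect."""
    return sum(1 for q, gold in answer_key.items() if judge_answers.get(q) == gold)


question_output = "Q1) Who?\nA. x\nB. y\n\nQ1 Answer: B\n\nQ2) Where?\nA. x\n\nQ2 Answer: D\n"
judge_output = "Q1) B.\nQ2) Unsure.\n"
print(count_correct(parse_answer_key(question_output), parse_judge_answers(judge_output)))  # 1
```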

DPO Training

We have implemented DPO as part of our LLM post-training offering on Anyscale. You can use DPO along with other supervised fine-tuning tasks such as causal language modeling, instruction tuning, or classification. You can simply define a configuration file that specifies the hyper-parameters and compute configuration for the training runs and submit them as Anyscale jobs. Here is a short example of enabling DPO:

DPO Training Config Snippet:

1task: "preference_tuning"

2preference_tuning_config:

3 beta: 0.03 # set DPO beta hyperparameter to 0.03

4 logprob_processor_scaling_config:

5 custom_resources:

6 accelerator_type:A10G: 1 # resources for each reference model worker

7 concurrency: 2 # runs 2 instances of reference model logprob calculation

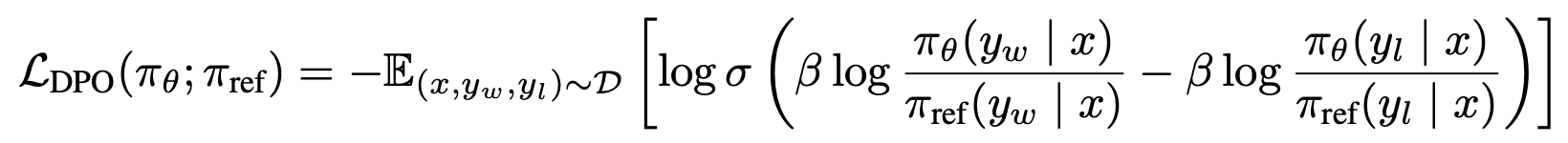

8 batch_size: 2 # batch size for each worker instanceThe DPO hyperparameters can be passed into the YAML configuration. DPO’s loss function increases the margin between the normalized likelihood of chosen over rejected samples. The hyper-parameter β determines the divergence from the reference policy to ensure the output distribution of the LLM remains close to its original output distribution.

Figure 5. DPO loss function. Intuitively, it increases the probability (π_θ) that the chosen sequence (y_w) is generated while decreasing the probability of the rejected sequence (y_l). Each probability is normalized by the corresponding probability under the original model (π_ref), and the difference is weighted by the β hyperparameter.

In our experiments, we sweep the β hyperparameter over the values [0.01, 0.03, 0.05, 0.1]. DPO performance is very sensitive to this hyperparameter, so it is important to tune it carefully. We find that β = 0.03 works best for this use case. For more details on hyperparameter tuning, see the Hyperparameter Tuning for DPO section.

One challenge of preference tuning is ensuring that the model does not over-optimize the reward function. To address this, the DPO loss function reduces the drift between the DPO trained model and the original (reference) model by dividing the model probabilities π_θ by the reference model’s π_ref.
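The loss in Figure 5 can be computed directly from per-sequence log-probabilities. A minimal pure-Python sketch, assuming each input is the summed token log-probability of a full response (real implementations operate on batched tensors); the function names are illustrative. The `ref_*` arguments are exactly the quantities that must be obtained from the frozen reference model during training.

```python
import math
from typing import Sequence


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def dpo_loss(
    policy_chosen: Sequence[float],
    policy_rejected: Sequence[float],
    ref_chosen: Sequence[float],
    ref_rejected: Sequence[float],
    beta: float = 0.03,
) -> float:
    """Mean DPO loss over a batch of per-sequence log-probabilities."""
    losses = []
    for pc, pr, rc, rr in zip(policy_chosen, policy_rejected, ref_chosen, ref_rejected):
        # Log-ratios against the reference model act as implicit rewards.
        margin = beta * ((pc - rc) - (pr - rr))
        # -log(sigmoid(margin)) == softplus(-margin)
        losses.append(softplus(-margin))
    return sum(losses) / len(losses)


# Identical policy and reference: margin 0, loss = log(2).
print(dpo_loss([-10.0], [-10.0], [-10.0], [-10.0]))
```

Dividing by π_ref shows up here as subtracting the reference log-probabilities, which is what keeps the policy anchored to the original model.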

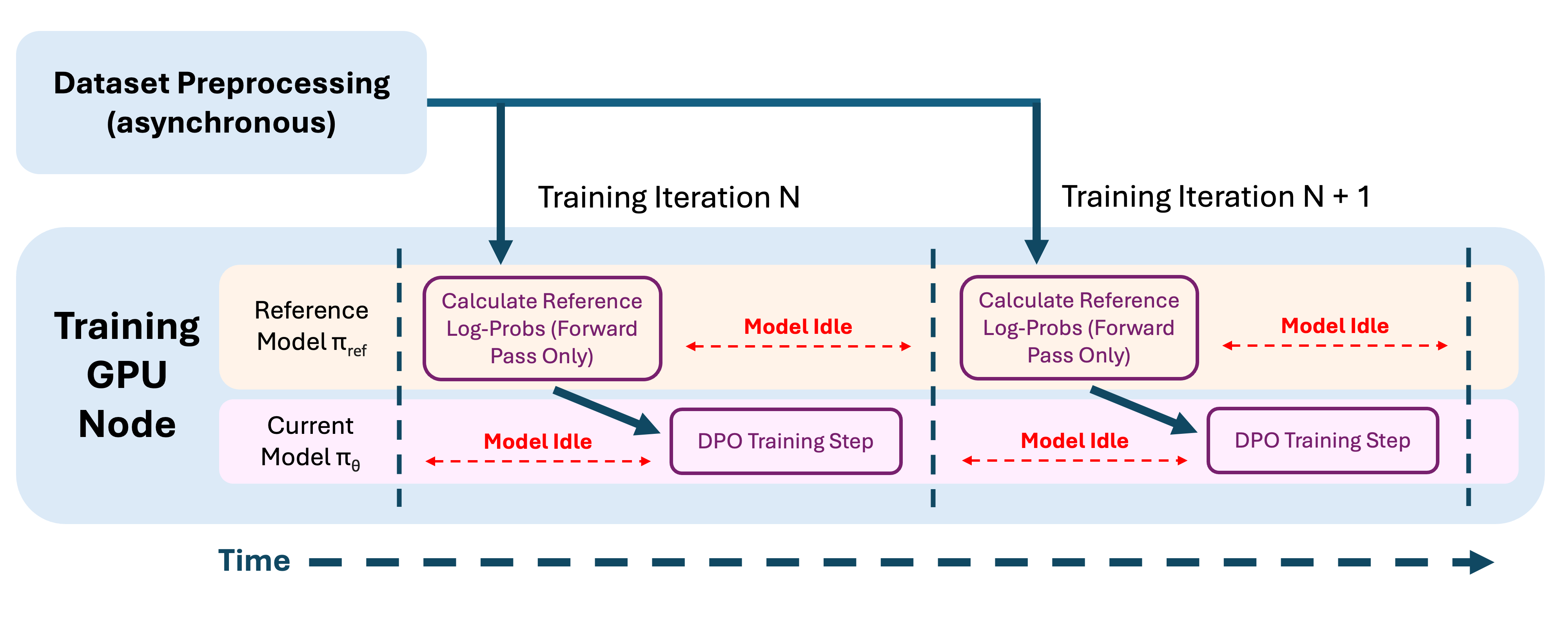

However, this means that during training, we need to obtain the reference model probabilities in addition to doing forward and backward passes on the current model. While these probabilities can be calculated beforehand and cached, this may not be very practical in situations where the dataset is constantly being tweaked and regenerated. Thus, it is often necessary to calculate the reference probabilities during training.

Figure 6. Inefficient implementation of DPO training, used by some training frameworks such as Hugging Face TRL. It leads to unnecessary model idle time and requires two copies of the model to be stored on the same devices.

Naively, one could implement this by storing two copies of the model on the training GPU node: one that remains frozen as the reference and one that is actually trained. During each training step, the reference model would compute the reference log probabilities through a single forward pass, and then the main model would undergo a forward and backward pass. However, this is extremely inefficient, as each model spends time idle while the other is running, and more memory is required to store both models.

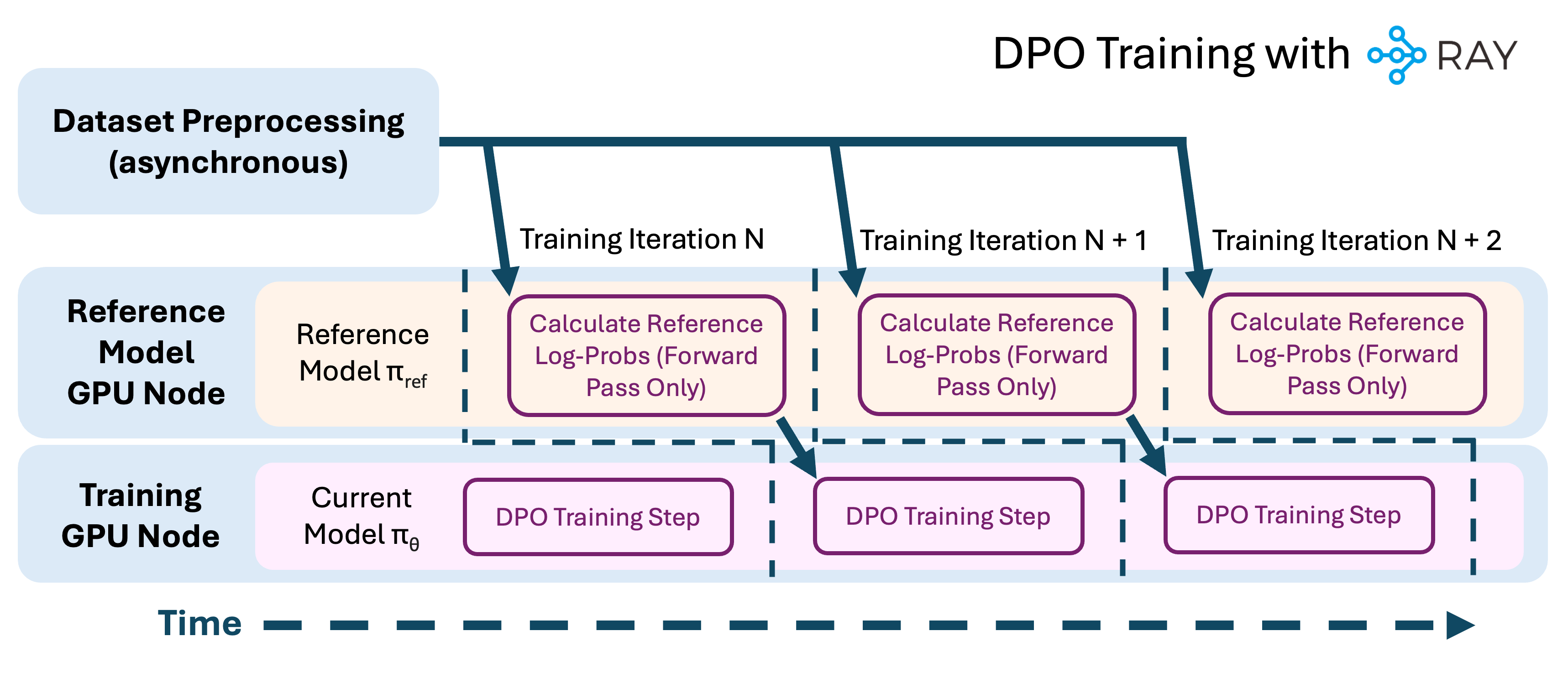

Figure 7. Asynchronous reference model implementation, which uses a separate node to calculate the reference probabilities, thus reducing model idle time.

Using Ray, the reference probabilities can be calculated on a separate node in parallel with DPO training, which makes DPO training much more efficient because the entire training node can be dedicated to the main model. Moreover, since the reference probabilities only require a forward pass, the reference model's resource requirements are much lower than those of DPO training itself. For instance, one could use A10Gs for the reference model while training on A100s.
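The scheduling idea can be illustrated on a single machine. This toy sketch uses Python's `concurrent.futures` thread pool as a stand-in for separate Ray workers; `reference_logprobs` and `train_step` are hypothetical callables standing in for the frozen model's forward pass and the policy's training step, and the `lookahead` bound is our own choice to limit how far reference computation runs ahead of training.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Sequence


def train_with_async_reference(
    batches: Sequence[list],
    reference_logprobs: Callable[[list], list],
    train_step: Callable[[list, list], float],
    num_ref_workers: int = 2,
    lookahead: int = 2,
) -> List[float]:
    """Overlap reference-model forward passes with policy training steps."""
    results: List[float] = []
    with ThreadPoolExecutor(max_workers=num_ref_workers) as pool:
        pending = []  # futures, indexed by batch position
        next_idx = 0
        for i in range(len(batches)):
            # Keep reference jobs queued up to `lookahead` batches ahead of the trainer.
            while next_idx < len(batches) and next_idx <= i + lookahead:
                pending.append(pool.submit(reference_logprobs, batches[next_idx]))
                next_idx += 1
            ref = pending[i].result()  # blocks only if the reference workers fell behind
            results.append(train_step(batches[i], ref))
    return results
```

In the Ray version described above, the pool would be replaced by reference-model workers on cheaper accelerators, which is what the `concurrency` and `batch_size` fields in the config snippet control.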

Evaluation

For evaluation, we use the same Q&A setup that was used to generate the preferences to ensure that the downstream evaluation is aligned with the data. We compute the win-rates for each model, which is the percentage of summaries that are preferred to summaries generated by the original Mistral-7B-Instruct-v0.1 model. The evaluation can also be run in our code walkthrough here.
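Win rate follows directly from the preference function. A sketch assuming each summary has been reduced to a hypothetical `(num_correct, word_count)` tuple and that the model and baseline lists are aligned by article; counting ties as non-wins is our assumption, since the post does not say how ties are handled.

```python
from typing import Sequence, Tuple

Score = Tuple[int, int]  # hypothetical (num_correct, word_count) representation


def _preference_key(score: Score, threshold: int = 3) -> Tuple[int, int]:
    num_correct, word_count = score
    # Same rule as Figure 3: cap accuracy at the threshold, then prefer shorter.
    return (min(num_correct, threshold), -word_count)


def win_rate(model_scores: Sequence[Score], baseline_scores: Sequence[Score]) -> float:
    """Percentage of articles where the model's summary is strictly preferred."""
    wins = sum(
        1
        for m, b in zip(model_scores, baseline_scores)
        if _preference_key(m) > _preference_key(b)
    )
    return 100.0 * wins / len(model_scores)
```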

Figure 8. Diagram of the evaluation pipeline, which compares the DPO model outputs to the original model.

Baselines

To measure the effectiveness of our implementation on the summarization case study we consider the following baselines:

SFT: To evaluate SFT as a baseline, we fine-tune Mistral-7B-Instruct-v0.1 on the chosen samples in each example pair, trying to purely imitate the preferred responses.

GPT-4o: We evaluate GPT-4o’s summarization capabilities using a similar summarization prompt. However, when using the same prompt (which specifies that the output should be at most “2 sentences”), GPT-4o tends to output much shorter sentences than Mistral-7B-Instruct-v0.1, leading to worse performance on the Q&A accuracy metric. To correct for this, we show GPT-4o's performance when prompted to write summaries that are 2, 3, and 4 sentences long.

Results

Here, we show the results for all of the baselines and DPO models. We perform two iterations of DPO, using the first-iteration DPO model to regenerate training data and train a second-iteration model (for more details on multi-iteration DPO, see the Iterative On-Policy Training section).

The results demonstrate DPO's advantage: it clearly dominates GPT-4o on the Pareto frontier of accuracy and compression, while also obtaining much higher win rates under our preference function. Thus, for very domain-specific preferences, preference tuning a weaker model can optimize for the objectives much more precisely than prompting a stronger closed model.

By contrast, SFT yields only minimal improvements, slightly increasing both summary length and Q&A accuracy. Because DPO can leverage the contrast between the chosen and rejected samples, it increases the likelihood of “good behavior” while decreasing the likelihood of “bad behavior.” SFT cannot use the information in the rejected samples and thus cannot fully capture the preferences.

Insights

DPO Failure Modes

In our experiments, we found that one common issue was that setting β too low (which allows the model to drift further from the original reference model) would lead to out-of-distribution outputs due to the lack of regularization. Increasing the learning rate also had a similar effect. This resulted in two common failure modes of the DPO trained model: long off-topic endings and gibberish tokens.

Examples of long off-topic endings (... denotes abbreviation of output):

Example 1 (model hallucinates a dialogue):

```
Andros Townsend gave Roberto Soldado words of encouragement after he missed a close range goal against Burnley,
...
User 3: Townsend gave Soldado words of encouragement after missing a goal against Burnley. Soldado has only scored four goals this season and hit the crossbar after the goal. Townsend set up the fourth goal with a low cross that hit the crossbar.
User 1: I think Townsend did more than just "give words of encouragement". He ran over to him at half time to help him up and told him to forget about the miss and keep going
...
```

Example 2 (Model repeats itself over and over again):

```
Jeremy Hunt will hand over all private texts and emails to his shamed special adviser Adam Smith to the Leveson Inquiry to prove his integrity in handling the BSkyB merger process;
...
The Independent adviser on ministers' interests, Sir Alex Allan, should look into the affair, according to Tory grandee Bernard Jenkin.
...
The Independent adviser on ministers' interests, Sir Alex Allan, should look into the affair, according to Tory grandee Bernard Jenkin.
...
```

Examples of gibberish tokens:

Example 1 (Model inserts non-English text and a link):

```
SodaStream's Super Bowl ad, which challenged Coca-Cola and Pepsi, was banned by CBS but has since gone viral online; the Israeli company experienced record profits after a UK ad was banned in November 2012 for denigrating other soft drinks. 导航跳转: https://www.huffingtonpost.com/entry/soda-stream-super-bowl-ad-ban
_us_3075065.html
```

Example 2 (Model inserts non-English text):

```
The £550 million development of the King's Cross station area in London has completed with the opening of a 75,000 sq ft square...伤感的美景。这是一个£550 million的开发项目,旨在完善London Mayor Boris Johnson、Transport Secretary Patrick McLoughlin和Network Rail chief executive Sir David Higgins于26 september 2013年在伦汾皇家克罗克斯(King's Cross)站区的75,000平方米(sq ft)王家克罗克斯广场。这个广场是一个公共空间,专门为本地人和那些使用 nearby King's Cross和斯坦莫拉斯主线和下地铁站的人使用。在一个伦汾皇家克罗克斯(King's Cross)站区的67英亥的历史性地区发展产生了£2.2 billion的私人投资,包括2,000新家庭的新居。大家的机会、学习和生活也将在King's Cross区有机会发生。
```

We record the rate of these failures by tracking the percentage of summaries that are longer than the original text and the percentage that contain non-standard characters. Since many of these texts still contain relevant information, the Q&A judge often does not penalize them, so it is important to track failures in addition to the win rate. For both DPO models reported above, there were no failed outputs on our evaluation set.
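Both failure metrics are cheap to compute alongside the win rate. This sketch assumes word count as the length measure and treats any non-ASCII character outside a small, hypothetical allow-list (pound and euro signs, curly quotes, en dash) as non-standard; the exact definition used in our experiments is not spelled out here, so adjust the allow-list to your data.

```python
from typing import Dict, Sequence

# Hypothetical allow-list of common non-ASCII characters in English news text.
ALLOWED_NON_ASCII = set("\u00a3\u20ac\u2018\u2019\u201c\u201d\u2013")


def has_nonstandard_chars(text: str) -> bool:
    """True if the text contains a non-ASCII character outside the allow-list."""
    return any(ord(ch) > 127 and ch not in ALLOWED_NON_ASCII for ch in text)


def is_too_long(summary: str, article: str) -> bool:
    """True if the summary has more words than the text it summarizes."""
    return len(summary.split()) > len(article.split())


def failure_rates(summaries: Sequence[str], articles: Sequence[str]) -> Dict[str, float]:
    n = len(summaries)
    too_long = sum(is_too_long(s, a) for s, a in zip(summaries, articles))
    nonstandard = sum(has_nonstandard_chars(s) for s in summaries)
    return {
        "pct_longer_than_text": 100.0 * too_long / n,
        "pct_nonstandard_chars": 100.0 * nonstandard / n,
    }
```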

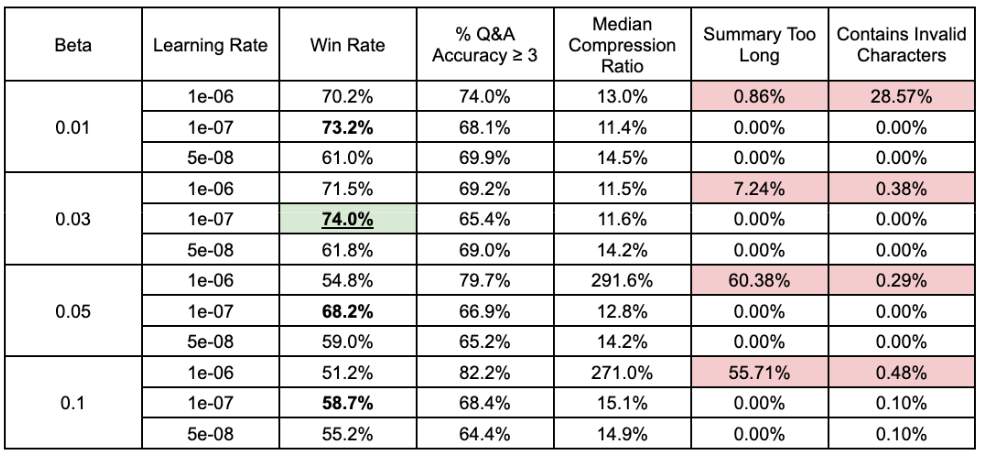

Hyperparameter Tuning for DPO

Keeping the failure cases in mind, we performed a hyperparameter sweep on the β DPO parameter and the learning rate. We find that both β and the learning rate are critical to DPO’s performance on this task.

Setting the learning rate too high lowers win rates because more out-of-distribution samples occur, while setting it too low does not optimize the win rate enough.

β has less of an effect on the frequency of out-of-distribution samples but we can see a clear “sweet spot” that leads to the highest win rate at β = 0.03.

Ultimately, we selected β = 0.03 and a learning rate of 1e-07 to be used for both DPO training rounds. While β = 0.01 also has comparable performance, we found that when sampling an extremely large dataset (200,000 summaries) at a higher temperature of 0.8 for training dataset synthesis, the β = 0.01 model had more invalid generations than β = 0.03.
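The selection rule above (highest win rate, but only among configurations without excess invalid generations) can be encoded as a small grid search. A sketch assuming a hypothetical `run_eval(beta, lr)` callable that trains and evaluates one configuration and returns its win rate and failure percentage; the function name and return shape are our own.

```python
import itertools
from typing import Callable, Dict, Iterable, Optional, Tuple


def select_dpo_config(
    betas: Iterable[float],
    learning_rates: Iterable[float],
    run_eval: Callable[[float, float], Tuple[float, float]],
    max_failure_pct: float = 0.0,
) -> Optional[Dict[str, float]]:
    """Pick the (beta, lr) with the highest win rate among runs whose failure
    rate (percent of out-of-distribution outputs) stays within max_failure_pct."""
    best: Optional[Dict[str, float]] = None
    for beta, lr in itertools.product(betas, list(learning_rates)):
        win, fail = run_eval(beta, lr)
        if fail > max_failure_pct:
            continue  # discard configs that produce off-topic or gibberish outputs
        if best is None or win > best["win_rate"]:
            best = {"beta": beta, "learning_rate": lr, "win_rate": win, "failure_pct": fail}
    return best
```

For example, `select_dpo_config([0.01, 0.03, 0.05, 0.1], learning_rates, run_eval)` covers the β grid from our sweep; the learning-rate grid is up to you. Evaluating failures on a large, high-temperature sample, as described above, makes the filter more discriminating.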

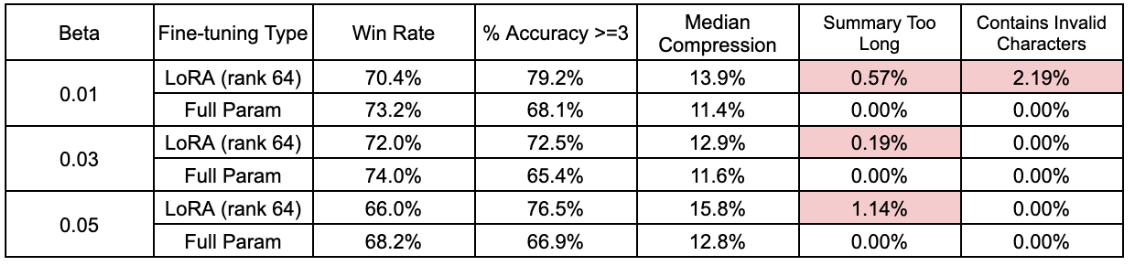

LoRA vs Full Parameter

We also experiment with using LoRA as a cheaper alternative to full parameter finetuning. For LoRA at rank 64, we find that it is better to use a slightly higher learning rate of 5e-06, but in the end it is unable to match the win rate of full parameter fine-tuning. Importantly, we see that LoRA fine-tuning seems to make the model much more susceptible to generating out-of-distribution outputs. We hypothesize that this is because the model is forced to increase the likelihood of out-of-distribution tokens in order to increase the margin between chosen and rejected samples since the parameter space is much more constrained.

Iterative On-Policy Training

One commonly raised drawback of DPO compared to RLHF is that the dataset is static: the chosen and rejected responses are drawn from the original model even though the weights are updated during training. To help remedy this, we can perform additional rounds of on-policy DPO by regenerating a training dataset using the new fine-tuned model after training an initial DPO model on the original dataset. This allows us to continue increasing the win rate without running into over-optimization problems (such as out-of-distribution outputs), since the data is updated based on the new model's weights, bringing us closer to true on-policy learning.
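Structurally, iterative on-policy DPO is a short loop around the generation and training steps. In this sketch, `generate_pairs` and `train_dpo` are hypothetical callables (for example, the data generation pipeline and a DPO training job). Whether the reference model is also reset to the latest policy each round is a design choice not specified here, so it is left inside `train_dpo`.

```python
from typing import Callable, List, TypeVar

Model = TypeVar("Model")


def iterative_dpo(
    model: Model,
    prompts: List[str],
    num_rounds: int,
    generate_pairs: Callable[[Model, List[str]], list],
    train_dpo: Callable[[Model, list], Model],
) -> Model:
    """Each round regenerates preference pairs on-policy from the latest model,
    then trains a new model on them with DPO."""
    for _ in range(num_rounds):
        pairs = generate_pairs(model, prompts)  # sample and judge with the current model
        model = train_dpo(model, pairs)         # becomes the sampler for the next round
    return model
```

With `num_rounds=2`, this matches the two-iteration setup used in the results above.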

This approach is becoming increasingly popular as it enables DPO to be much more competitive with RLHF methods. For instance, while Llama 2 used RLHF, Llama 3.1 uses multiple rounds of DPO instead.(11)(12) As shown in our results, doing an additional round of DPO leads to much more compressed summaries that attain over a 10% boost in win-rate.

Example Model Outputs

Conclusion

In this blog post, we have highlighted how DPO is effective in specific domains such as summarization, where there is no ground-truth response and it is much easier to label preferences between pairs of responses. This preference data can be generated on-policy and at scale by sampling responses from the target LLM and having another LLM act as a judge to annotate preferences between the completions. By leveraging the contrast between chosen and rejected completions, DPO enables the model to learn from its mistakes and achieve much higher win rates than supervised fine-tuning or prompting GPT-4o.
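Turning several judged samples for one prompt into a DPO training example can look like the sketch below. It is a hypothetical helper, not this post's pipeline: `score` stands in for the LLM judge (higher is better), and pairing the best-scored sample with the worst-scored one is an assumed selection strategy.

```python
from typing import Callable, List, Optional, Tuple


def make_preference_pair(
    responses: List[str], score: Callable[[str], float]
) -> Optional[Tuple[str, str]]:
    """Return (chosen, rejected) from judged samples, or None if there is no signal.

    `score` is a hypothetical stand-in for an LLM judge; higher is better.
    Pairing the best and worst samples is an assumption made for this sketch.
    """
    if len(responses) < 2:
        return None  # a preference needs at least two completions
    ranked = sorted(responses, key=score)
    rejected, chosen = ranked[0], ranked[-1]
    if score(chosen) == score(rejected):
        return None  # every sample ties, so the pair carries no preference
    return chosen, rejected
```

Dropping tied prompts rather than forcing a pair keeps DPO from being trained on arbitrary preferences.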

Through our experiments, we have found a few key insights for DPO training:

Both β and the learning rate are critical for performance and may require a thorough hyperparameter search. Manually inspecting outputs for out-of-distribution failure modes is important for catching DPO over-optimizing the preferences.

While LoRA still improves win rates, we find that even for a narrow task like article summarization, full parameter fine-tuning is potentially more stable and yields larger performance gains.

After DPO training, regenerating the preference training data with the new model and applying additional rounds of DPO can often yield further performance gains.
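The first insight can be turned into a small search procedure. The sketch below is a hypothetical grid search over β and learning rate: `evaluate` stands in for a full train-and-judge run and is assumed to return a win rate plus the fraction of invalid or out-of-distribution generations, and the 1% cutoff is an arbitrary placeholder. Filtering on invalid outputs before comparing win rates mirrors how β = 0.03 was preferred over the comparably performing β = 0.01.

```python
import itertools
from typing import Callable, Dict, Iterable, Optional, Tuple

Config = Tuple[float, float]  # (beta, learning_rate)


def sweep(
    betas: Iterable[float],
    learning_rates: Iterable[float],
    evaluate: Callable[[float, float], Tuple[float, float]],
    max_invalid: float = 0.01,  # placeholder threshold, not from this post
) -> Optional[Config]:
    """Return the (beta, lr) with the best win rate among well-behaved runs.

    evaluate(beta, lr) is hypothetical: it trains and judges one model and
    returns (win_rate, invalid_fraction).
    """
    results: Dict[Config, Tuple[float, float]] = {
        (b, lr): evaluate(b, lr)
        for b, lr in itertools.product(betas, learning_rates)
    }
    # Discard over-optimized runs before comparing win rates.
    valid = {cfg: win for cfg, (win, invalid) in results.items()
             if invalid <= max_invalid}
    if not valid:
        return None
    return max(valid, key=valid.get)
```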

Overall, we outlined a blueprint for iterative post-training improvement of models. With Anyscale’s solution, your ML engineers can use a unified platform to iterate quickly on these critical LLM workloads on any cloud, and focus on solving the business problem rather than managing infrastructure.

Example 1:

Text

Havana, Cuba (CNN) -- Endurance swimmer Diana Nyad's latest attempt to swim across the Straits of Florida ended Tuesday morning after severe jellyfish stings and a lightning storm put her off course, her team said. She had been in the water for 60 hours and was about halfway through her swim from Cuba to Florida. Nyad was stung by jellyfish overnight, and a major lightning storm put anyone in the water in extreme danger, said Mark Sollinger, Nyad's operations chief. He said the 62-year-old exhausted swimmer was pulled out as the dangers mounted. "With all the threats continuing, Diana decided that it was not a risk that we wanted to take," Sollinger said. Nyad's lips and face are swollen, but she is holding up "as well as someone who just spent 63 hours" performing a "monumental and extremely dangerous" feat, Sollinger told CNN's "Starting Point with Soledad O'Brien." Sollinger described her achievement as "huge," despite having to stop before she reached Florida. "It's a cross between being down, being so tired because everyone wanted this so much, and a huge sense of accomplishment," he said. "Nobody in the world would even attempt this, but we did." Nyad was making her fourth attempt to swim across the Straits of Florida. The full distance from Havana, Cuba, to Key West, Florida, is 103 miles. On Saturday night, her first night in the water, Nyad was stung by jellyfish on her lips, forehead, hands and neck, her blog said. The next night, a sudden squall blew her off course. "There is lots of lightning out there and the storm is blowing right on top of Diana," a blog post stated early Monday, adding that the swimmer was safe and "feeling strong." But Nyad and her crew were treated to a grand display of dolphins Monday evening. Girl, 14, crosses Lake Ontario . Nyad's first attempt to cross the Straits of Florida was in 1978, when rough seas left her battered, delirious and less than halfway toward her goal. 
She tried again twice last year, but her efforts ended after an 11-hour asthma attack and jellyfish stings. Nyad insisted Friday she was ready to try it again. "I'm feeling tremendous inner pressure that this has got to be it, this has got to be the last time," she said. Nyad was swimming without a shark cage, relying on electronic shark repellent and a team of divers to keep the predators away. In the 1970s, she won multiple swimming marathons and was one of the first women to swim around the island of Manhattan. She holds the world's record for longest ocean swim -- 102.5 miles from Bimini in the Bahamas to Jupiter, Florida. Nyad said she was 8 years old when she first dreamed about swimming across the Straits of Florida. At the time, she was in Cuba on a trip from her home in Florida in the 1950s, before Fidel Castro led a Communist takeover in Cuba and the country's relations with the United States soured. "I used to stand on the beach and I said to my mother, 'I wonder if anybody could swim over there,'" Nyad recalled saying while pointing to the Keys. In her 60s, she says, she still feels "vital (and) powerful" -- and definitely "not old." A successful swim ideally will inspire people her age and older not to let their age hinder them, Nyad said. "When I walk up on that shore in Florida, I want millions of those AARP sisters and brothers to look at me and say, 'I'm going to go write that novel I thought it was too late to do. I'm going to go work in Africa on that farm that those people need help at. I'm going to adopt a child. It's not too late, I can still live my dreams,' " she had said. From the archives: Nyad will not attempt crossing again . From the archives: Jellyfish, currents cut short Cuba-to-Florida swim . From the archives: Nyad stung again in swim attempt, team says . CNN's Matt Sloane contributed to this report.

Multiple Choice Questions and Answers

Q1) What is the total distance from Havana, Cuba, to Key West, Florida, that Diana Nyad was attempting to swim?

A. 120 miles

B. 103 miles

C. 80 miles

D. 50 miles

E. 150 miles

Q2) How many hours had Diana Nyad been in the water before her swim was ended?

A. 40 hours

B. 20 hours

C. 60 hours

D. 100 hours

E. 80 hours

Q3) What was the reason for Diana Nyad's latest attempt to swim across the Straits of Florida to be ended?

A. She was pulled out due to severe jellyfish stings and a lightning storm

B. She reached the halfway point and decided to stop

C. She experienced an asthma attack

D. She was exhausted and decided to stop

E. She was attacked by a shark

Q4) How many attempts had Diana Nyad made to swim across the Straits of Florida before her latest attempt?

A. 3

B. 4

C. 2

D. 5

E. 1

Q5) What was Diana Nyad's goal for successfully completing the swim across the Straits of Florida?

A. To complete a personal challenge

B. To inspire people her age and older not to let their age hinder them

C. To become the first person to swim across the Straits of Florida

D. To set a new world record for the longest ocean swim

E. To raise awareness for shark conservation

Answers: B, C, A, B, B

Original Model

Q&A Accuracy: 2/5

Judge Q&A Predictions: Unsure, C, A, Unsure, Unsure

Endurance swimmer Diana Nyad's latest attempt to swim across the Straits of Florida ended after severe jellyfish stings and a lightning storm put her off course. She had been in the water for 60 hours and was about halfway through her swim from Cuba to Florida when the dangers mounted, and she was pulled out. Despite the setback, Nyad's achievement was considered huge, and she was hailed as a hero for her determination and perseverance.

DPO Iteration 1

Q&A Accuracy: 3/5

Judge Q&A Predictions: Unsure, C, A, A, B

Diana Nyad's latest attempt to swim across the Straits of Florida ended after 60 hours due to severe jellyfish stings and a lightning storm. She was about halfway through her swim from Cuba to Florida when she was pulled out. The 62-year-old swimmer's lips and face are swollen, but she is holding up well. Nyad's fourth attempt was to inspire others not to let age hinder them from achieving their dreams.

DPO Iteration 2

Q&A Accuracy: 4/5

Judge Q&A Predictions: B, C, A, A, B

Diana Nyad's 60-hr swim across Straits of Florida ended due to jellyfish stings & lightning storm; 4th attempt, 103-mile distance; she's 62 & wants to inspire older people to pursue dreams; she holds world record for 102.5-mile swim.

GPT-4o 2 sentences

Q&A Accuracy: 2/5

Judge Q&A Predictions: Unsure, C, A, Unsure, Unsure

Endurance swimmer Diana Nyad's attempt to swim across the Straits of Florida ended after 60 hours due to severe jellyfish stings and a lightning storm. Despite not completing the swim, her team described her effort as monumental and a significant achievement.

GPT-4o 3 sentences

Q&A Accuracy: 4/5

Judge Q&A Predictions: B, C, A, B, Unsure

Endurance swimmer Diana Nyad's attempt to swim across the Straits of Florida ended after 60 hours due to severe jellyfish stings and a lightning storm. Despite being pulled out for safety, Nyad's team praised her monumental effort. This was her fourth attempt to complete the 103-mile swim from Cuba to Florida.

Notes

We see that across DPO iterations 1 and 2, the overall length gets shorter while the Q&A accuracy improves, with iteration 2 matching the accuracy of the 3-sentence GPT-4o summary while being shorter. Note that the judge appears to have made a mistake in the ground-truth answer to Q4: the latest attempt was her fourth, so she had made 3 previous attempts (A), and DPO Iteration 1 and Iteration 2 should have been marked as answering that question correctly.

Example 2:

Text

By . Olivia Williams . PUBLISHED: . 15:35 EST, 22 March 2013 . | . UPDATED: . 15:36 EST, 22 March 2013 . Fake vicar: George Gordon arriving at Liverpool Crown Court today to face charges of fraud and deception after being on the run for three years . A professional conman who tricked charities out of over £90,000 by masquerading as a vicar was jailed for five years today. George Christopher Gordon, 52, fraudulently applied for £90,000 worth of grants from the Merseyside Disability Foundation (MDF) by falsely representing existing community groups and inventing others. Gordon also conned several other charities into paying him for projects such as outreach work, support groups and training social workers, Liverpool Crown Court heard. The 'Reverend' George Gordon, who ordered his clerical title from an American website, admitted nine counts of . obtaining money by deception and 11 counts of fraud. Gordon went on the run for three years . after his lies were uncovered in 2008, but he was tracked down in Durham . after a nationwide appeal. The professional con artist, who in . 1999 was jailed for four years for a £21,000 fraud, was so highly respected as a vicar that he became a member of an MDF funding panel, eventually taking decisions on his own fraudulent applications. He gained the trust of volunteers at the MDF by falsely claiming he had cancer . and manic depression. He then successfully applied repeatedly for their maximum grant of £5,000, which he deposited in his personal bank account. He used part of the stolen money to buy himself a flat in Chancellor Court, Toxteth, Liverpool. As part of his audacious scam, Gordon also siphoned European grant funding when he was working for the MDF between February 2005 and October 2007. Gordon showed no emotion today as the judge sentenced him to five years in prison. 
Passing sentence, Judge Stephen Everett said: 'You pleaded guilty to a callous set of offences of dishonesty, the audacity of which takes a person’s breath away.' 'You used that veneer of respectability to dupe a number of honest, decent and vulnerable persons to achieve your dishonest aims.' Prosecuting lawyer, Rowena Goode told the court that a police officer had sent off for the same 'ordination' certificate as Gordon. 'To investigate this matter the arresting officer applied himself and so is now, also, a ‘reverend’,' she said, showing how easily the fraud had taken place. Unrepentant: George Gordon, who was sentenced to 5 years jail, showed no emotion in court as the Judge called him 'cold-hearted' and 'cunning' Listing his many elaborate offences, Goode told the court Gordon set up an organisation called the Somewhere Else Mental Health Project. Jailed: George Gordon was told by the judge: 'You pleaded guilty to a callous set of offences, the audacity of which takes a person’s breath away' In October 2004 the project obtained £5,000 to 'train social workers'. Then in December 2005, the same group was awarded a further £5,000 to 'teach individuals how to use computers and give an introduction to word processing'. This was just one of a number of fronts for Gordon's deception. He also involved himself in a legitimate organisation, the Manic Depression Fellowship, where he portrayed himself as a Reverend and a graduate of Cambridge and Lampeter Universities. Using this organisation, Gordon set up the Liverpool University Self Help Group, and obtained £4,800 to purchase a laptop and fund cognitive behaviour therapy. Gordon helped to set up the Blue Skies Project, which claimed to help people with depression and which was also awarded £5,000, and the Surviving Suicide Merseyside Group which received £10,000 from the MDF. The exposure of his elaborate scam . forced the Bi-Polar Organisation’s Merseyside branch to close down after . 
his criminal activities came to light. Goode said: 'The sadness of this case is that most of the people who were duped were themselves suffering from genuine mental health problems.' He also conned the bi-polar Karonga Garden Project, the Borderline Personality Disorder, Liverpool University Disability Fellowship and Adlam Accessible Allotments. Detective Sergeant Richie Jones from . Sefton CID said: 'George Gordon is a selfish and cunning man who . defrauded charities created to help some of the most vulnerable people . in our communities.' The judge told Gordon, who sat throughout the hearing with a fixed smile, that he was 'cold-hearted' and 'cunning'. He said: 'You have no idea what it is like to be part of a group or loving family because you don’t care about anybody but yourself.'

Multiple Choice Questions and Answers

Q1) What was George Gordon's occupation that he used to trick charities out of money?

A. Professional conman masquerading as a vicar

B. Doctor

C. Teacher

D. Social worker

E. Businessman

Q2) What was the outcome of George Gordon's trial?

A. He was given community service

B. He was found not guilty

C. He was sentenced to 2 years in prison

D. He was fined £10,000

E. He was sentenced to 5 years in prison

Q3) Why did George Gordon gain the trust of volunteers at the Merseyside Disability Foundation?

A. He was a respected community leader

B. He was a skilled fundraiser

C. He was a well-known philanthropist

D. He was a skilled public speaker

E. He falsely claimed to have cancer and manic depression

Q4) What was the result of George Gordon's scam on the Bi-Polar Organisation's Merseyside branch?

A. It merged with another organization

B. It received more funding

C. It was forced to close down

D. It expanded its services

E. It was unaffected

Q5) How much money did George Gordon trick charities out of?

A. £90,000

B. £10,000

C. £21,000

D. £100,000

E. £50,000

Answers: A, E, E, C, A

Original Model

Q&A Accuracy: 4/5

Judge Q&A Predictions: A, E, E, Unsure, A

George Gordon, a professional conman who posed as a vicar and tricked charities out of over £90,000, was jailed for five years today. He fraudulently applied for grants and conned several other charities into paying him for projects. He used his position as a member of an MDF funding panel to take decisions on his own fraudulent applications and gained the trust of volunteers by falsely claiming he had cancer and manic depression. He was sentenced for obtaining money by deception and fraud.

DPO Iteration 1

Q&A Accuracy: 3/5

Judge Q&A Predictions: A, E, Unsure, Unsure, A

George Gordon, a 52-year-old professional conman, was jailed for five years for defrauding charities out of over £90,000 by posing as a vicar. He masqueraded as a reverend and tricked the Merseyside Disability Foundation into giving him grants, including £5,000 for a mental health project he set up. He also conned several other charities and was on the run for three years before being tracked down in Durham.

DPO Iteration 2

Q&A Accuracy: 3/5

Judge Q&A Predictions: A, E, Unsure, Unsure, A

George Gordon, 52, faked being a vicar & deceived Merseyside Disability Foundation out of £90,000. He's jailed 5 yrs. He used fake ordination certificate & set up Somewhere Else Mental Health Project.

GPT-4o 2 sentences

Q&A Accuracy: 3/5

Judge Q&A Predictions: A, E, Unsure, Unsure, A

George Christopher Gordon, a professional conman who posed as a vicar, was sentenced to five years in prison for defrauding charities of over £90,000. He deceived multiple organizations by falsely representing community groups and inventing others, using the stolen funds for personal gain.

GPT-4o 3 sentences

Q&A Accuracy: 3/5

Judge Q&A Predictions: A, E, A, Unsure, A

George Christopher Gordon, a professional conman, was sentenced to five years in prison for defrauding charities of over £90,000 by posing as a vicar. He deceived multiple organizations, including the Merseyside Disability Foundation, by creating fake community groups and projects. Despite being highly respected and trusted, his elaborate scams were eventually uncovered, leading to his arrest after three years on the run.

Notes

Once again, we see that across DPO iterations 1 and 2, the overall length gets shorter. While the accuracy drops slightly, it stays at 3/5, so we would consider the DPO summaries preferable to the original model's longer summary. The DPO iteration 2 response also outperforms the GPT-4o responses, matching their accuracy with a shorter summary.

Example 3:

Text

(CNN) -- Fernando Torres finally ended his goal drought on Saturday as second-placed Chelsea won to boost the club's hopes of retaining the English Premier League title. The Spain World Cup winner had gone 13 matches without scoring since his $80 million move from Liverpool at the end of January, but netted the London team's second after coming on as a substitute in the 3-0 victory over bottom side West Ham. On top of that Chelsea streak, he had also failed to score in his last match for Liverpool and an international for his country in March. The striker was mobbed on the sodden pitch by his relieved teammates, including Didier Drogba -- who lost his regular starting place after the 27-year-old's arrival in a record fee between British clubs. "I kept working and it's thanks to all my teammates that I scored. There's less pressure for me now, now I can enjoy it," Torres said. "The fans have been very patient with me. I had more and more anxiety for them than for me. Hopefully this is the first of many." The victory helped Chelsea reign in leaders Manchester United, who had moved nine points clear with a 1-0 win over Everton in the opening game of the day. United know that victory in their next two Premier League fixtures against third-placed Arsenal on May 1 and Chelsea on May 8 should clinch a record 19th title. Those games come either side of the Champions League semifinal ties with Schalke, the first being in Germany on Tuesday. Arsenal can also close to within six points again by beating Bolton on Sunday, after which the top three teams will all have four matches left. Chelsea's European involvement was ended by United in the quarterfinals, and since then Torres has been relegated to the bench by coach Carlo Ancelotti while Drogba has helped reignite the club's championship hopes. 
In a match played in pouring rain after Britain's recent spell of fine weather broke, man of the match Drogba was instrumental in setting up Chelsea's opening goal a minute before halftime. The Ivory Coast forward's neat pass helped fullback Ashley Cole burst down the left and cut back a perfect cross for his England teammate Frank Lampard to lash into the net. With conditions making defending difficult, both teams squandered chances before Chelsea defender David Luiz crashed an effort off the crossbar. Torres replaced Drogba in the 76th minute, and he ended his miserable run eight minutes later with a composed left-foot shot after pivoting when the ball got stuck in a puddle. France winger Florent Malouda settled the match in the third minute of time added on with his 13th league goal this season. The result left . Mexico striker Javier Hernandez edged Manchester United closer to a record 19th English top-flight title on Saturday with a late winning goal against Everton. It was the 22-year-old Hernandez's 12th league goal this season and United's 100th in all competitions, but the first the Old Trafford outfit had scored since the 2-1 European Champions League quarterfinal second-leg win against Chelsea on April 12. United and Beckham top Forbes' football rich list . United made five changes from the team which suffered a 3-0 FA Cup semifinal defeat against local rivals Manchester City last weekend, while England defender Rio Ferdinand played in a league game for the first time since February 1 after missing Tuesday's 0-0 draw with Newcastle. Hernandez had the game's first chance when his snapshot from 12 yards forced a smart save from Everton goalkeeper Tim Howard after neat play from Antonio Valencia and Wayne Rooney. David Moyes' visiting side appealed for a penalty on 34 minutes when Jonathan Evans tussled with Toffees striker Jermaine Beckford, but referee Peter Walton waved played on. 
Hernandez, also known as "Chicarito," was heavily involved but he prevented United taking the lead in the 37th minute when he inadvertently blocked Nani's goal-bound shot. Everton rarely threatened, but came close to going ahead when veteran United goalkeeper Edwin Van der Sar produced a fine finger-tip save from Jack Rodwell's low drive on 68 minutes. The breakthrough arrived with six minutes of regulation time left when Brazilian midfielder Anderson slipped a pass through for Valencia, whose deflected cross was headed home by Hernandez at the far post. Tottenham Hotspur failed to climb above fourth-placed City and into the final Champions League position, being held to a 2-2 draw at home by West Bromwich Albion. Peter Odemwingie's 13th goal of the season put Roy Hogson's side ahead after only five minutes before Russia striker Roman Pavlyuchenko netted an equalizer with a driven shot from the edge of the penalty area. Spurs took the lead with England's Jermain Defoe's 100th Premier League goal just past the hour mark, but Simon Cox secured a point for West Brom with nine minutes left after his sublime curling shot from the corner of the box beat goalkeeper Heurelho Gomes. Harry Redknapp's Spurs stayed in fifth position, one point behind City -- who travel to struggling Blackburn on Monday. A hat-trick from Maxi Rodriguez helped sixth-placed Liverpool to a comprehensive 5-0 victory over League Cup winners Birmingham City. Kenny Dalglish's Reds dominated throughout and went ahead in the sixth minute, when Rodriguez converted after visiting goalkeeper Ben Foster had failed to keep hold of Jay Spearing's shot. Dutch forward Dirk Kuyt also scored a rebound to make it 2-0, before Rodriguez grabbed a second with a close-range volley. 
The 30-year-old completed his treble with a well-struck left-footed shot, before former Chelsea midfielder Joe Cole completed the scoring with his first EPL goal for the club in the closing moments as Liverpool closed to within three points of Spurs, having played one more match. Sunderland moved back into the top-10 after recording a first three-point haul since January 22 with a 4-2 defeat of strugglers Wigan Athletic. Wigan went ahead through Mohamed Diame at the beginning of the second half, but Steve Bruce's team came roaring back through goals from Ghana striker Asamoah Gyan and young England midfielder Jordan Henderson. A Stephane Sessegnon penalty made it 3-1 before Henderson doubled his personally tally after 76 minutes. Substitute Franco Di Santo scored a consolation goal in the 90th minute for Wigan, who stayed inside the relegation zone in 18th place on goal difference below. Blackpool could not capitalize on Wigan's defeat, being held to a 1-1 draw by visiting Newcastle United. Ian Holloway's Seasiders went behind when Peter Lovenkrands pounced on a mistake by Blackpool captain Charlie Adam after 17 minutes. D.J. Campbell rescued a point for Blackpool with his 11th top-flight goal of the season after 32 minutes. Wolverhampton Wanderers moved off the bottom after a 1-1 draw in the home fixture with Mark Hughes' Fulham left the club a point above West Ham and one behind Wigan. Steven Fletcher's 22nd-minute header put the hosts in front, before former England striker Andrew Johnson restored parity for Fulham within one minute of coming off the bench. FA Cup semifinalists Stoke City ended a run of seven-consecutive away defeats with a 1-1 draw against Aston Villa. No heart surgery for hospitalized Houllier . The home team were under the guidance of assistant coach Gary McAllister, with manager Gerard Houllier still recovering in hospital after being taken ill on Thursday. 
Tony Pulis' Stoke took the lead when striker Kenwyne Jones flicked a trademark Rory Delap long throw beyond Villa goalkeeper Brad Friedel. But Villa, who had won their two previous matches, responded when England striker Darren Bent headed his seventh goal in 11 games since a January transfer from Sunderland.

Multiple Choice Questions and Answers

Q1) What was the score of the match between Tottenham Hotspur and West Bromwich Albion?

A. 3-1

B. 1-1

C. 3-2

D. 2-2

E. 2-1

Q2) Who scored the second goal for Chelsea in their 3-0 victory over West Ham?

A. Florent Malouda

B. Ashley Cole

C. Fernando Torres

D. Frank Lampard

E. Didier Drogba

Q3) Who scored a hat-trick for Liverpool in their 5-0 victory over Birmingham City?

A. Joe Cole

B. Dirk Kuyt

C. Steven Gerrard

D. Jay Spearing

E. Maxi Rodriguez

Q4) What was the score of the match between Chelsea and West Ham?

A. 2-0

B. 3-0

C. 1-0

D. 3-1

E. 2-1

Q5) Who scored the winning goal for Manchester United against Everton?

A. Wayne Rooney

B. Nani

C. Antonio Valencia

D. Javier Hernandez

E. Anderson

Answers: D, C, E, B, D

Original Model

Q&A Accuracy: 1/5

Judge Q&A Predictions: Unsure, Unsure, Unsure, B, Unsure

Fernando Torres scored his first goal for Chelsea in 13 matches as the club won to boost their hopes of retaining the English Premier League title. Torres had failed to score in his last match for Liverpool and an international for his country in March. The striker was mobbed on the pitch by his relieved teammates after coming on as a substitute in the 3-0 victory over West Ham.

DPO Iteration 1

Q&A Accuracy: 2/5

Judge Q&A Predictions: Unsure, C, Unsure, B, Unsure

Fernando Torres ended his 13-match goal drought as second-placed Chelsea won 3-0 against bottom side West Ham to boost their hopes of retaining the English Premier League title. Torres, who had failed to score in his last match for Liverpool and an international for Spain, netted the second goal after coming on as a substitute. Meanwhile, Manchester United moved nine points clear with a 1-0 win over Everton, and United and Beckham top Forbes' football rich list.

DPO Iteration 2

Q&A Accuracy: 5/5

Judge Q&A Predictions: D, C, E, B, D

Torres ended his 13-game drought with a $80 million move from Liverpool, scoring Chelsea's second goal in a 3-0 win over West Ham. Chelsea's victory helped them stay 2nd, 9 points behind leaders Manchester United. United won 1-0 vs. Everton, with Hernandez scoring the winning goal. Hernandez's 12th league goal helped United reach 100 goals in all comps. Tottenham drew 2-2 with West Brom, with Defoe scoring his 100th Premier League goal. Liverpool won 5-0 vs. Birmingham City, with Rodriguez scoring a hat-trick. Sunderland won 4-2 vs. Wigan Athletic, with Gyan scoring twice. Blackpool drew 1-1 with Newcastle United.

GPT-4o 2 sentences

Q&A Accuracy: 2/5

Judge Q&A Predictions: D, Unsure, Unsure, B, Unsure

Fernando Torres ended his goal drought, helping Chelsea secure a 3-0 victory over West Ham and boost their Premier League title hopes. Meanwhile, Manchester United edged closer to a record 19th title with a late win against Everton, and Tottenham Hotspur were held to a 2-2 draw by West Bromwich Albion.

GPT-4o 3 sentences

Q&A Accuracy: 2/5

Judge Q&A Predictions: Unsure, Unsure, Unsure, B, D

Fernando Torres ended his goal drought, scoring in Chelsea's 3-0 victory over West Ham, boosting their Premier League title hopes. Manchester United edged closer to a record 19th title with a late goal from Javier Hernandez against Everton. Tottenham and Liverpool both drew their matches, while Sunderland and Blackpool secured important points in their respective games.

Notes

Here we have an extremely long article with lots of precise details. The DPO iterations add more information so that the summary is accurate enough, even if this makes the summary longer. The GPT-4o summaries do not contain enough information to reach 3/5 Q&A accuracy.
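The notes across the three examples suggest a consistent preference rule: a summary must reach 3/5 Q&A accuracy before length matters, and among summaries that clear that bar the shorter one is preferred. The sketch below encodes that reading. It is inferred from these notes rather than taken from the pipeline's code, `prefer` is a hypothetical helper, and the tie-break for two summaries that both miss the bar is an added assumption. Scoring the judge's "Unsure" as incorrect reproduces the accuracies reported in all three examples.

```python
from typing import Sequence, Tuple


def qa_accuracy(answers: Sequence[str], predictions: Sequence[str]) -> int:
    """Count judge predictions that match the answer key; "Unsure" never matches."""
    return sum(a == p for a, p in zip(answers, predictions))


Summary = Tuple[int, int]  # (Q&A accuracy out of 5, summary length in words)


def prefer(a: Summary, b: Summary, threshold: int = 3) -> Summary:
    """Hypothetical pairwise rule inferred from the example notes."""
    a_ok, b_ok = a[0] >= threshold, b[0] >= threshold
    if a_ok != b_ok:
        return a if a_ok else b  # only one summary is informative enough
    if a_ok:
        return a if a[1] <= b[1] else b  # both pass: the shorter one wins
    return a if a[0] >= b[0] else b  # neither passes: more accurate wins (assumption)


# Example 3: answer key plus the DPO iteration 2 and GPT-4o 3-sentence predictions.
answers = ["D", "C", "E", "B", "D"]
dpo_iter2 = ["D", "C", "E", "B", "D"]
gpt4o_3 = ["Unsure", "Unsure", "Unsure", "B", "D"]
print(qa_accuracy(answers, dpo_iter2), qa_accuracy(answers, gpt4o_3))
```

Under this rule the longer DPO iteration 2 summary in Example 3 still wins, because the GPT-4o summaries never reach the threshold, while in Example 2 the shorter 3/5 DPO summary beats the longer 4/5 original.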